Boost your Alexa by making it respond as ChatGPT.

This repository contains a tutorial on how to create a simple Alexa skill that uses the OpenAI API to generate responses from the ChatGPT model.

Log in to your Amazon Developer account and navigate to the Alexa Developer Console.

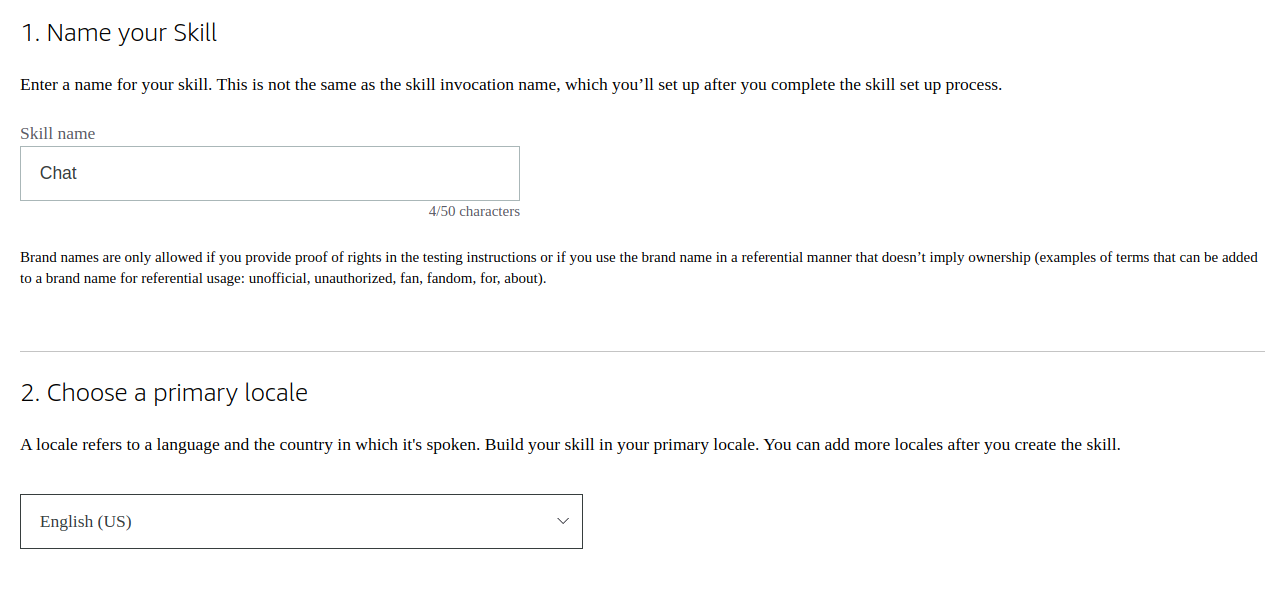

Click on "Create Skill" and name the skill "Chat". Choose the primary locale according to your language.

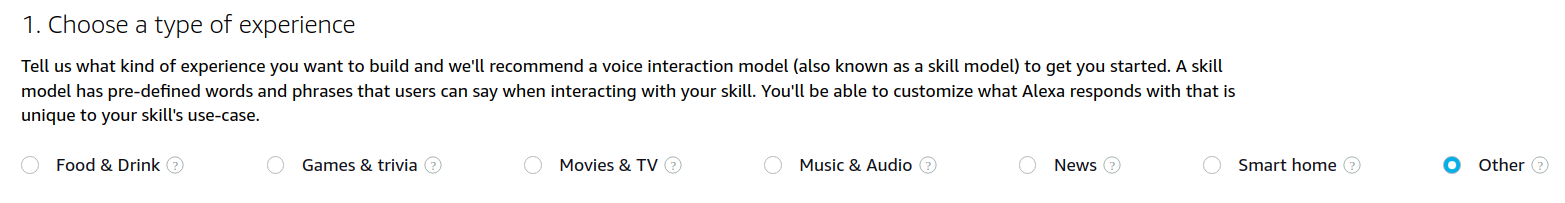

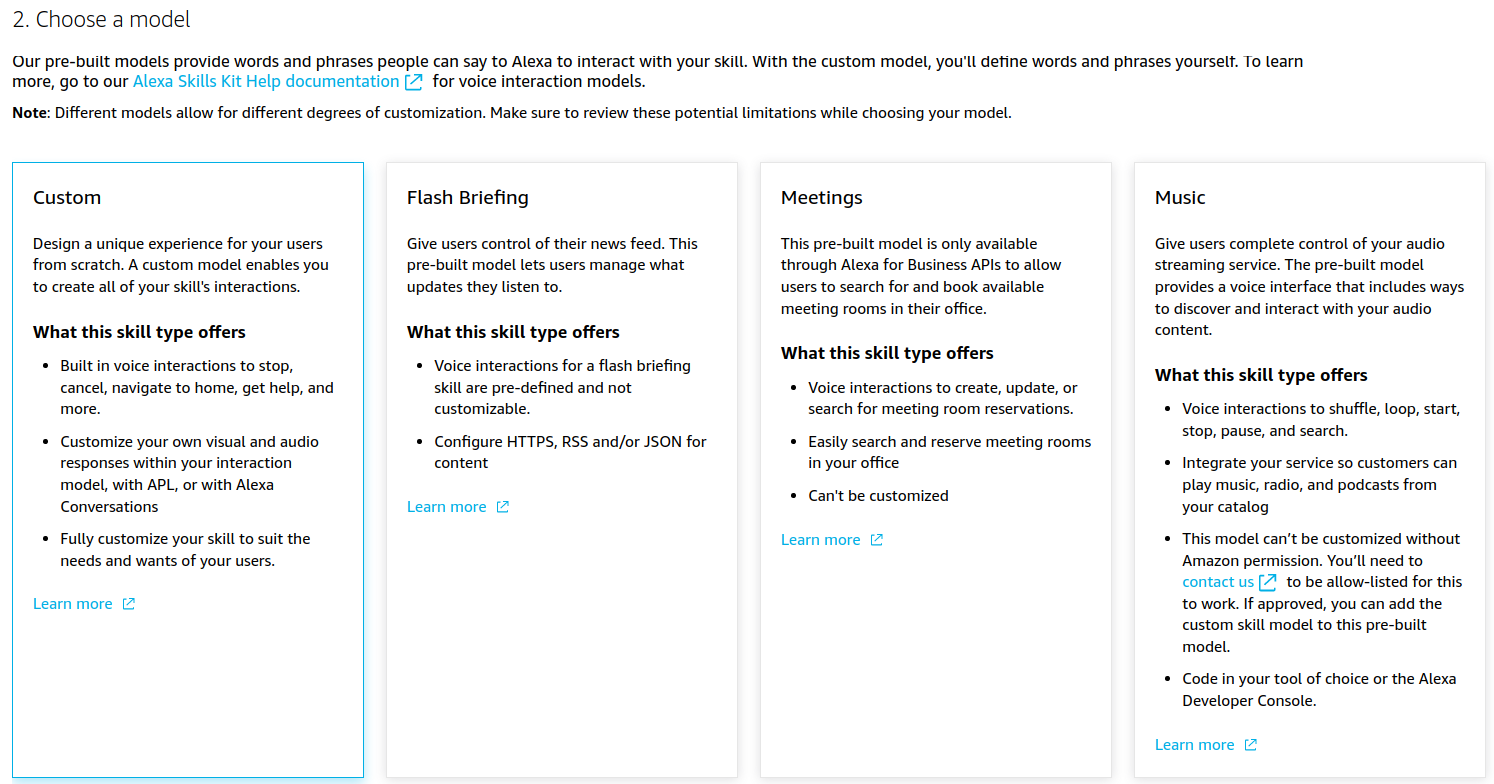

Choose "Other" and "Custom" for the model.

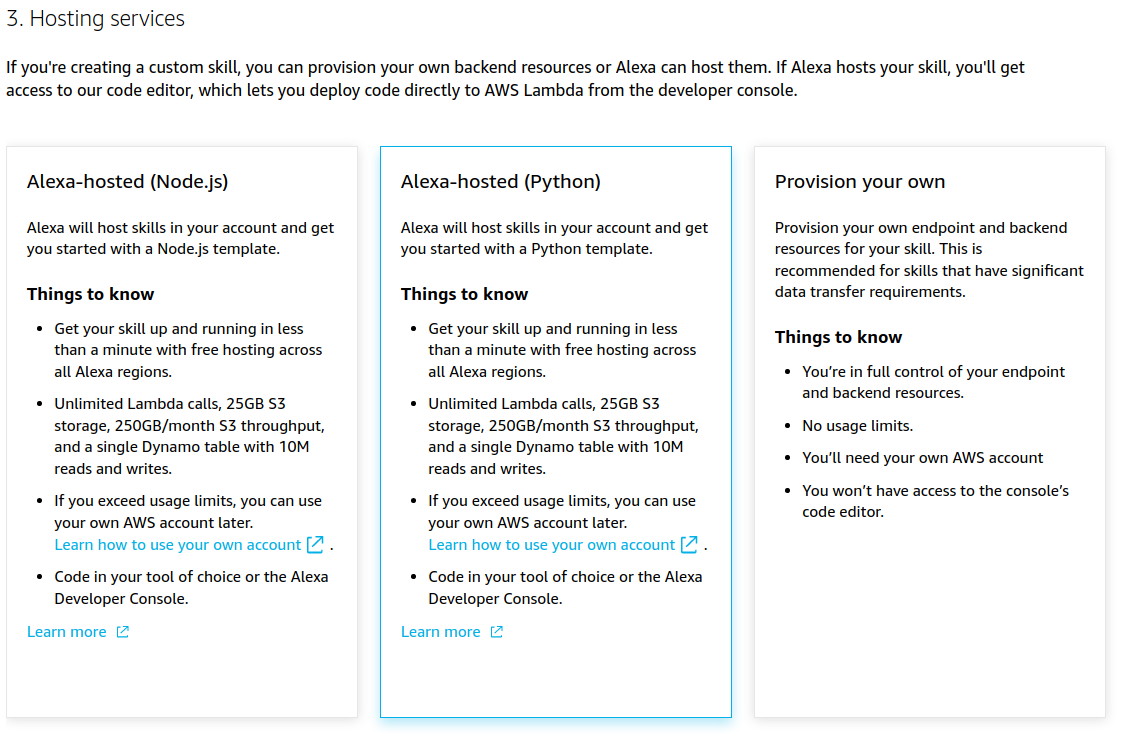

Choose "Alexa-hosted (Python)" for the backend resources.

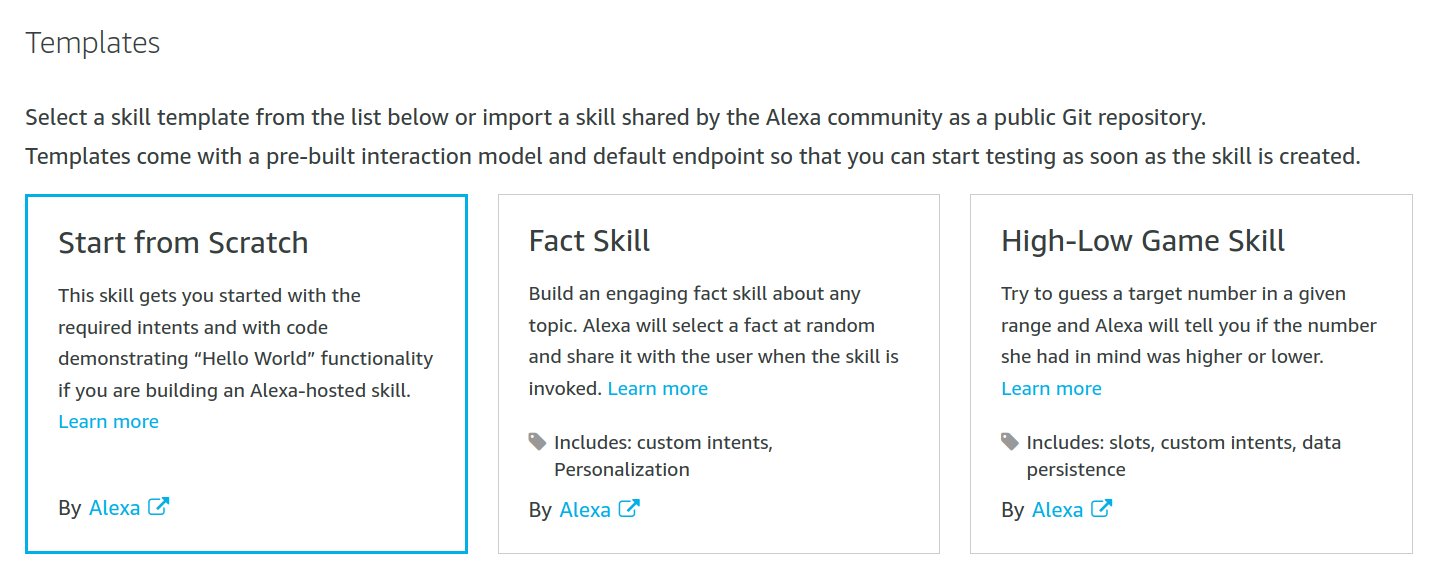

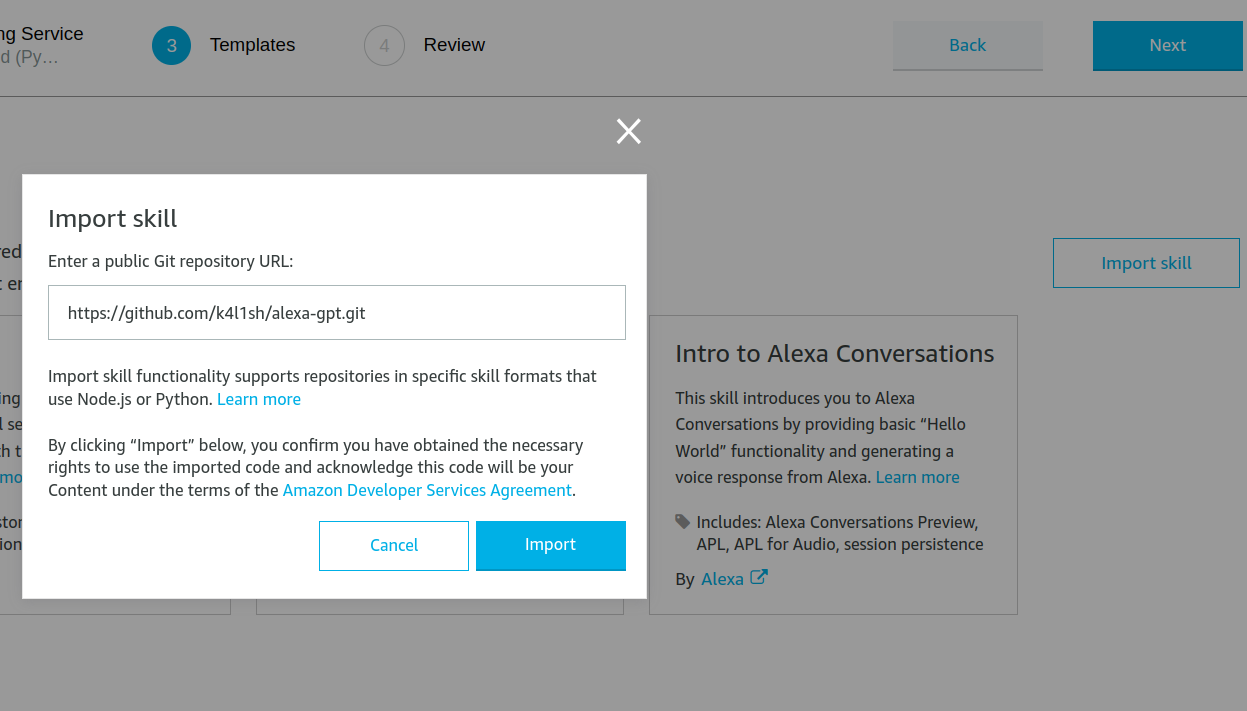

You now have two options:

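- Click "Import Skill", paste the link of this repository (https://github.com/k4l1sh/alexa-gpt.git) and click "Import". The imported skill already contains the interaction model and the code, so you can skip the manual setup steps below: you only need to create an OpenAI API key, put it into `lambda_function.py`, and then save, deploy and test.
- Or, to set up the skill manually, select "Start from Scratch", click "Create Skill" and continue with the next step.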

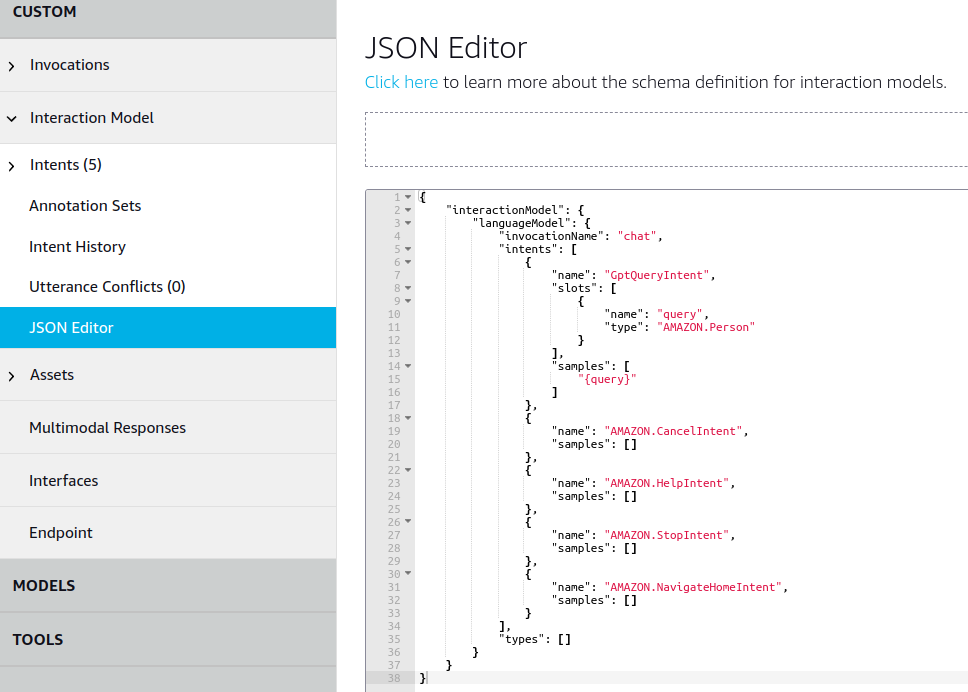

In the "Build" section, navigate to the "JSON Editor" tab.

Replace the existing JSON content with the provided JSON content:

```json
{
    "interactionModel": {
        "languageModel": {
            "invocationName": "chat",
            "intents": [
                {
                    "name": "GptQueryIntent",
                    "slots": [
                        {
                            "name": "query",
                            "type": "AMAZON.Person"
                        }
                    ],
                    "samples": [
                        "{query}"
                    ]
                },
                {
                    "name": "AMAZON.CancelIntent",
                    "samples": []
                },
                {
                    "name": "AMAZON.HelpIntent",
                    "samples": []
                },
                {
                    "name": "AMAZON.StopIntent",
                    "samples": []
                },
                {
                    "name": "AMAZON.NavigateHomeIntent",
                    "samples": []
                }
            ],
            "types": []
        }
    }
}
```

Save the model and click on "Build Model".

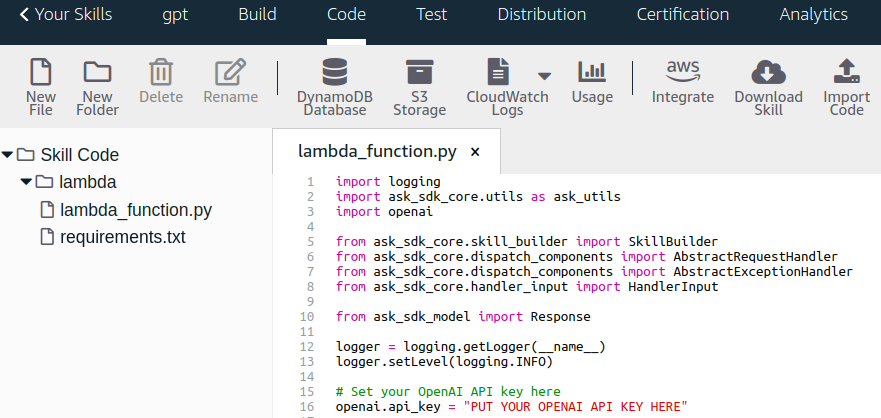
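With this interaction model, every utterance is routed into `GptQueryIntent` through the single `{query}` sample, and the spoken text arrives as the value of the `query` slot. The sketch below walks a trimmed, hypothetical `IntentRequest` body as plain JSON (no ASK SDK needed) to show where that value lives; the skill code reaches the same field through `handler_input.request_envelope.request.intent.slots["query"].value`. The sample payload and the `extract_query` helper are illustrative assumptions, not captured Alexa traffic.

```python
import json
from typing import Optional

# Trimmed, hypothetical IntentRequest body. Real requests also carry
# "version", "session", "context", requestId, timestamp, locale, etc.
SAMPLE_REQUEST = """
{
  "request": {
    "type": "IntentRequest",
    "intent": {
      "name": "GptQueryIntent",
      "slots": {
        "query": {"name": "query", "value": "what is the speed of light"}
      }
    }
  }
}
"""


def extract_query(envelope: dict) -> Optional[str]:
    """Return the text captured by the "query" slot, or None if absent."""
    request = envelope.get("request", {})
    if request.get("type") != "IntentRequest":
        return None
    intent = request.get("intent", {})
    if intent.get("name") != "GptQueryIntent":
        return None
    # A slot Alexa could not fill can be present without a "value" key
    return intent.get("slots", {}).get("query", {}).get("value")


if __name__ == "__main__":
    print(extract_query(json.loads(SAMPLE_REQUEST)))
```

Because an unfilled slot may have no value, the real handler can also receive `None` as `query`; checking for that before calling the API is a cheap safeguard.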

Go to the "Code" section and add `openai` to `requirements.txt`. Your `requirements.txt` should look like this:

```
ask-sdk-core==1.11.0
boto3==1.9.216
openai==1.3.3
```

Create an OpenAI API key by signing up and clicking "+ Create new secret key" on the API keys page.
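Pasting the key straight into the source works, but it is easy to commit by accident or to forget to replace. As an alternative, a small helper like the hypothetical `resolve_api_key` below prefers an `OPENAI_API_KEY` environment variable (the same variable the `openai` client reads when no `api_key` is passed) and rejects the `YOUR_API_KEY` placeholder used in the Lambda code that follows. Whether you can set environment variables for an Alexa-hosted function is an assumption to verify; the hardcoded fallback keeps the sketch working either way.

```python
import os
from typing import Mapping, Optional

PLACEHOLDER = "YOUR_API_KEY"


def resolve_api_key(hardcoded: Optional[str] = None,
                    env: Mapping[str, str] = os.environ) -> str:
    """Return OPENAI_API_KEY from the environment, else the hardcoded value.

    Raises ValueError if neither is set or the placeholder was left in place,
    so a missing key fails when the module loads instead of at request time.
    """
    key = env.get("OPENAI_API_KEY") or hardcoded
    if not key or key == PLACEHOLDER:
        raise ValueError("Set OPENAI_API_KEY or replace the YOUR_API_KEY placeholder")
    return key
```

In `lambda_function.py` you would then write `client = OpenAI(api_key=resolve_api_key("<your key>"))`. If the key is missing, the `ValueError` appears in the skill's logs when the function loads, rather than an "Error generating response" message being read aloud by Alexa.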

Replace the contents of your `lambda_function.py` file with the following code:

```python
import logging

import ask_sdk_core.utils as ask_utils
from openai import OpenAI

from ask_sdk_core.skill_builder import SkillBuilder
from ask_sdk_core.dispatch_components import AbstractRequestHandler
from ask_sdk_core.dispatch_components import AbstractExceptionHandler
from ask_sdk_core.handler_input import HandlerInput

from ask_sdk_model import Response

# Set your OpenAI API key (replace the placeholder below)
client = OpenAI(
    api_key="YOUR_API_KEY"
)

logger = logging.getLogger(__name__)
logger.setLevel(logging.INFO)


class LaunchRequestHandler(AbstractRequestHandler):
    """Handler for Skill Launch."""

    def can_handle(self, handler_input):
        # type: (HandlerInput) -> bool
        return ask_utils.is_request_type("LaunchRequest")(handler_input)

    def handle(self, handler_input):
        # type: (HandlerInput) -> Response
        speak_output = "Chat G.P.T. mode activated"

        # Start each session with an empty conversation history
        session_attr = handler_input.attributes_manager.session_attributes
        session_attr["chat_history"] = []

        return (
            handler_input.response_builder
                .speak(speak_output)
                .ask(speak_output)
                .response
        )


class GptQueryIntentHandler(AbstractRequestHandler):
    """Handler for Gpt Query Intent."""

    def can_handle(self, handler_input):
        # type: (HandlerInput) -> bool
        return ask_utils.is_intent_name("GptQueryIntent")(handler_input)

    def handle(self, handler_input):
        # type: (HandlerInput) -> Response
        query = handler_input.request_envelope.request.intent.slots["query"].value

        # setdefault avoids a KeyError when the skill is opened with a one-shot
        # request ("Alexa, ask chat ...") and LaunchRequestHandler never ran
        session_attr = handler_input.attributes_manager.session_attributes
        chat_history = session_attr.setdefault("chat_history", [])

        response = generate_gpt_response(chat_history, query)
        chat_history.append((query, response))

        return (
            handler_input.response_builder
                .speak(response)
                .ask("Any other questions?")
                .response
        )


class CatchAllExceptionHandler(AbstractExceptionHandler):
    """Generic error handling to capture any syntax or routing errors."""

    def can_handle(self, handler_input, exception):
        # type: (HandlerInput, Exception) -> bool
        return True

    def handle(self, handler_input, exception):
        # type: (HandlerInput, Exception) -> Response
        logger.error(exception, exc_info=True)
        speak_output = "Sorry, I had trouble doing what you asked. Please try again."

        return (
            handler_input.response_builder
                .speak(speak_output)
                .ask(speak_output)
                .response
        )


class CancelOrStopIntentHandler(AbstractRequestHandler):
    """Single handler for Cancel and Stop Intent."""

    def can_handle(self, handler_input):
        # type: (HandlerInput) -> bool
        return (ask_utils.is_intent_name("AMAZON.CancelIntent")(handler_input) or
                ask_utils.is_intent_name("AMAZON.StopIntent")(handler_input))

    def handle(self, handler_input):
        # type: (HandlerInput) -> Response
        speak_output = "Leaving Chat G.P.T. mode"

        return (
            handler_input.response_builder
                .speak(speak_output)
                .response
        )


def generate_gpt_response(chat_history, new_question):
    """Send the recent conversation plus the new question to the OpenAI API."""
    try:
        messages = [{"role": "system", "content": "You are a helpful assistant."}]
        # Replay only the last 10 question/answer pairs to bound the prompt size
        for question, answer in chat_history[-10:]:
            messages.append({"role": "user", "content": question})
            messages.append({"role": "assistant", "content": answer})
        messages.append({"role": "user", "content": new_question})

        response = client.chat.completions.create(
            model="gpt-3.5-turbo-1106",
            messages=messages,
            max_tokens=300,
            n=1,
            temperature=0.5
        )
        return response.choices[0].message.content
    except Exception as e:
        # Log the failure; the error text is also spoken back to the user
        logger.error("OpenAI request failed", exc_info=True)
        return f"Error generating response: {str(e)}"


sb = SkillBuilder()

sb.add_request_handler(LaunchRequestHandler())
sb.add_request_handler(GptQueryIntentHandler())
sb.add_request_handler(CancelOrStopIntentHandler())
sb.add_exception_handler(CatchAllExceptionHandler())

lambda_handler = sb.lambda_handler()
```

In `lambda_function.py`, replace `YOUR_API_KEY` with the OpenAI API key you created in your OpenAI account.
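`generate_gpt_response` resends up to the last 10 question/answer pairs on every turn so the model can follow the conversation. The sketch below isolates that message-building step, without any API call, so you can see exactly what is sent. It also reflects where the history lives: session attributes travel to Alexa and back as JSON, so the `(query, response)` tuples appended by `GptQueryIntentHandler` return as two-element lists on the next turn, and the `for question, answer in ...` unpacking handles both forms. `build_messages` and `MAX_TURNS` are hypothetical names used only for this illustration.

```python
import json
from typing import Dict, List, Sequence

MAX_TURNS = 10  # mirrors chat_history[-10:] in generate_gpt_response
SYSTEM_PROMPT = "You are a helpful assistant."


def build_messages(chat_history: Sequence[Sequence[str]],
                   new_question: str) -> List[Dict[str, str]]:
    """Assemble the Chat Completions message list the skill sends."""
    messages = [{"role": "system", "content": SYSTEM_PROMPT}]
    # Only the most recent MAX_TURNS pairs are replayed to bound prompt size
    for question, answer in chat_history[-MAX_TURNS:]:
        messages.append({"role": "user", "content": question})
        messages.append({"role": "assistant", "content": answer})
    messages.append({"role": "user", "content": new_question})
    return messages


if __name__ == "__main__":
    # Simulate a session-attribute round trip: tuples come back as lists
    stored = json.loads(json.dumps({"chat_history": [("hi", "Hello!")]}))
    history = stored["chat_history"]
    print(history)  # [['hi', 'Hello!']]
    for message in build_messages(history, "tell me a joke"):
        print(message["role"], "->", message["content"])
```

Note that the handler keeps appending to `chat_history` even though only the last 10 pairs are sent. If long sessions become a concern, trimming the stored list (for example `session_attr["chat_history"] = chat_history[-MAX_TURNS:]` after appending) would keep the session attributes, which are returned with every response, from growing without bound.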

Save and deploy. Go to the "Test" section and enable "Skill testing" in "Development".

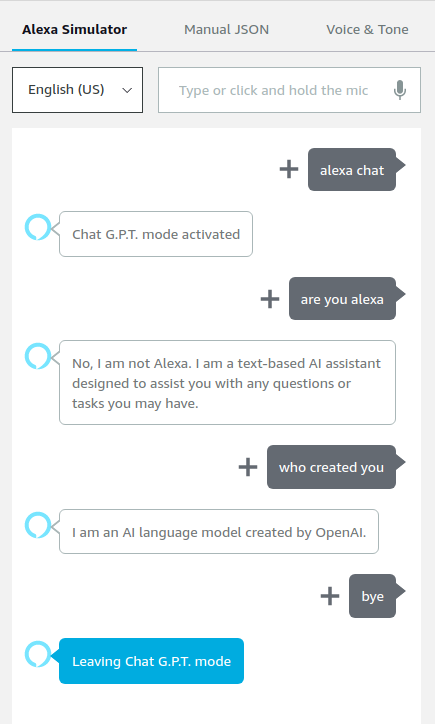

You are now ready to use your Alexa in ChatGPT mode. You should see results like this:

Please note that running this skill will incur costs for using both AWS Lambda and the OpenAI API. Make sure you understand the pricing structure and monitor your usage to avoid unexpected charges.
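One way to keep an eye on OpenAI spend is to log the token counts the API reports with each completion; in the `openai` 1.x client these are available on `response.usage` as `prompt_tokens` and `completion_tokens`. The sketch below turns those counts into a rough cost figure. The per-1,000-token prices are placeholder parameters, not quoted rates, so substitute the current prices for the model you use. `estimate_cost` is a hypothetical helper, and the usage object is stubbed with `SimpleNamespace` so the snippet runs without an API call.

```python
from types import SimpleNamespace


def estimate_cost(usage, usd_per_1k_input: float, usd_per_1k_output: float) -> float:
    """Rough USD cost of one completion from its reported token usage."""
    input_cost = (usage.prompt_tokens / 1000) * usd_per_1k_input
    output_cost = (usage.completion_tokens / 1000) * usd_per_1k_output
    return input_cost + output_cost


if __name__ == "__main__":
    # Stand-in for response.usage returned by client.chat.completions.create(...)
    usage = SimpleNamespace(prompt_tokens=1200, completion_tokens=300)
    # Placeholder prices for illustration only
    cost = estimate_cost(usage, usd_per_1k_input=0.001, usd_per_1k_output=0.002)
    print(f"{usage.prompt_tokens} in / {usage.completion_tokens} out -> ${cost:.4f}")
```

With `max_tokens=300`, `completion_tokens` stays at or below 300 per reply, while `prompt_tokens` grows as up to 10 earlier exchanges are replayed. Logging these values with `logger.info(...)` right after the `client.chat.completions.create(...)` call in `generate_gpt_response` would put them in the skill's logs.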