Below is an example of optimizing the hyperparameters of a simple neural network with Bayesian optimization.

The acquisition function is Expected Improvement:

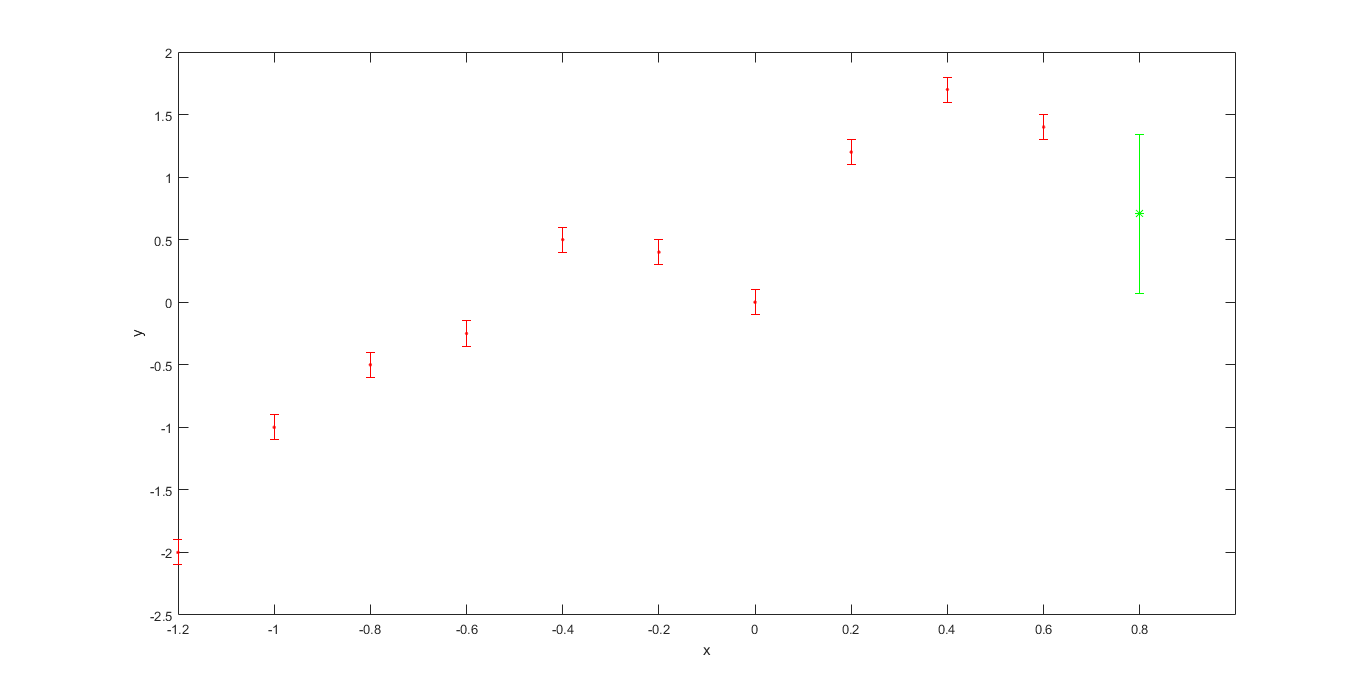
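For readers unfamiliar with Expected Improvement, here is a minimal sketch of the closed-form expression. It assumes the surrogate model returns a Gaussian predictive mean `mu` and standard deviation `sigma` at each candidate, and that the objective (for example a validation loss) is being minimized. The function name, the `xi` exploration margin, and the minimization convention are illustrative assumptions, not taken from the code in this folder.

```python
import math
import numpy as np

def expected_improvement(mu, sigma, best, xi=0.0):
    """Closed-form EI for minimization: E[max(best - f - xi, 0)], f ~ N(mu, sigma^2).

    mu, sigma : predictive mean and standard deviation at the candidates
    best      : lowest objective value observed so far
    xi        : optional exploration margin (hypothetical parameter)
    """
    mu = np.asarray(mu, dtype=float)
    sigma = np.asarray(sigma, dtype=float)
    improvement = best - mu - xi
    # Avoid division by zero; the sigma == 0 case is handled separately below.
    safe_sigma = np.where(sigma > 0, sigma, 1.0)
    z = improvement / safe_sigma
    cdf = 0.5 * (1.0 + np.vectorize(math.erf)(z / math.sqrt(2.0)))
    pdf = np.exp(-0.5 * z**2) / math.sqrt(2.0 * math.pi)
    ei = improvement * cdf + safe_sigma * pdf
    # With no predictive uncertainty, EI reduces to the plain improvement.
    return np.where(sigma > 0, ei, np.maximum(improvement, 0.0))

# Score three hypothetical candidates and pick the most promising one.
ei = expected_improvement(mu=[0.5, 0.2, 0.35], sigma=[0.1, 0.0, 0.2], best=0.3)
next_index = int(np.argmax(ei))
```

The next hyperparameter setting to evaluate is the candidate with the largest EI, which trades off a low predicted loss against high predictive uncertainty.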

We have noisy sensor readings (indicated by error bars). First, we make a prediction at a single point:

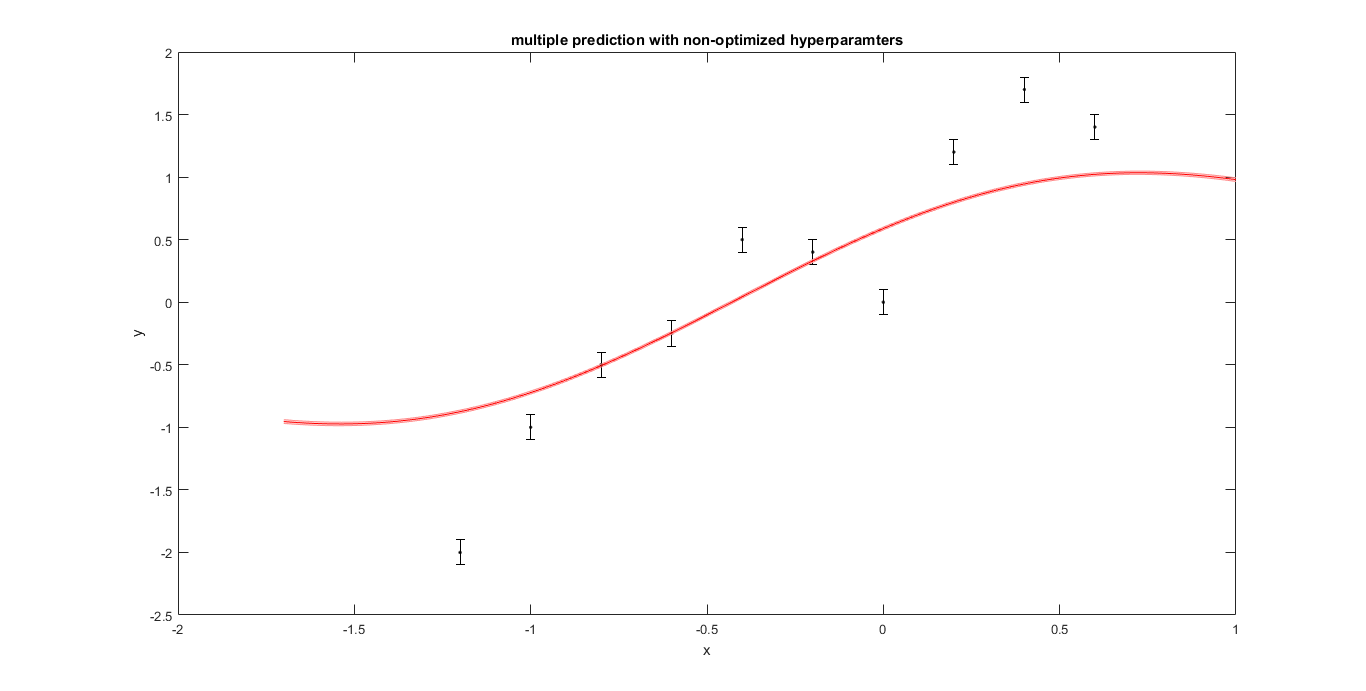

Next, we predict at 100 points:

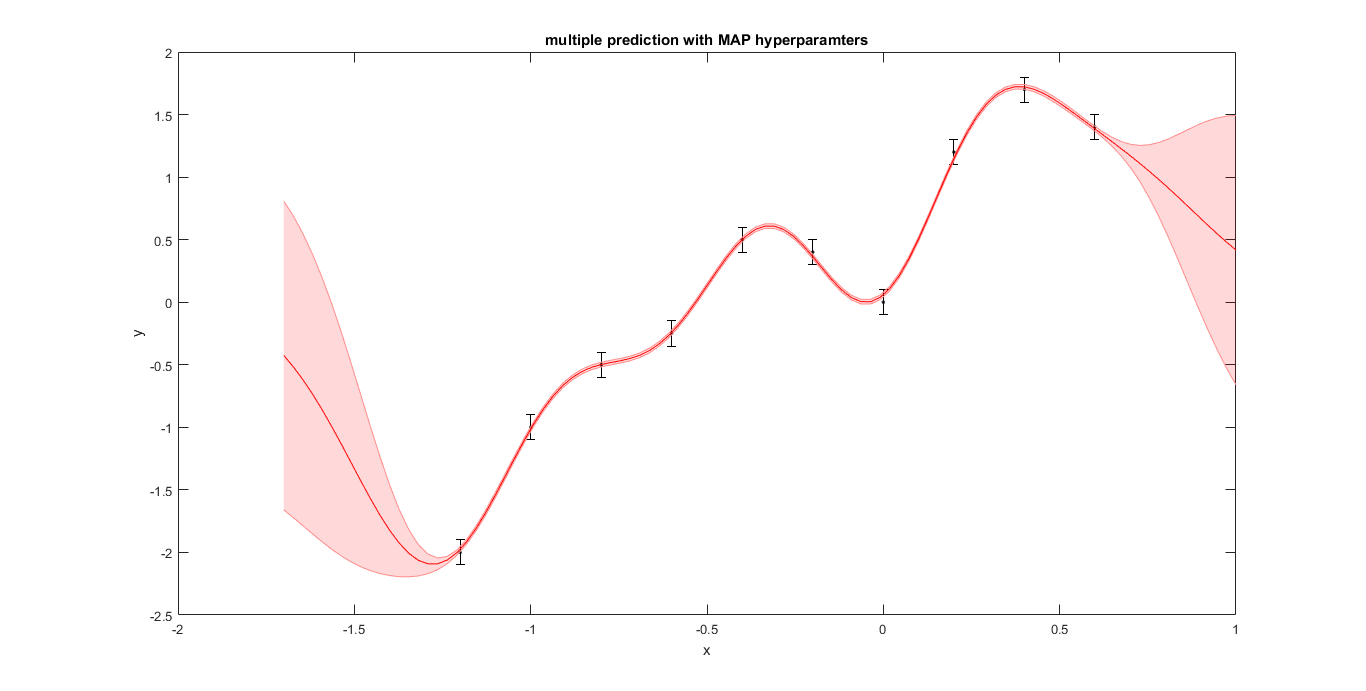
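The text does not name the model behind these predictions. Assuming it is Gaussian process regression with a squared-exponential (RBF) kernel, the single-point and 100-point predictions could be sketched as follows. The kernel choice, the function names, the toy data, and the fixed hyperparameter values are all assumptions made for illustration.

```python
import numpy as np

def rbf_kernel(a, b, length_scale=1.0, signal_var=1.0):
    """Squared-exponential kernel between two 1-D input arrays."""
    d2 = (a.reshape(-1, 1) - b.reshape(1, -1)) ** 2
    return signal_var * np.exp(-0.5 * d2 / length_scale**2)

def gp_predict(x_train, y_train, noise_var, x_test, **kernel_args):
    """Posterior mean and variance of the latent function at x_test.

    noise_var holds one noise variance per reading (the error bars).
    """
    K = rbf_kernel(x_train, x_train, **kernel_args) + np.diag(noise_var)
    K_s = rbf_kernel(x_train, x_test, **kernel_args)
    K_ss = rbf_kernel(x_test, x_test, **kernel_args)
    L = np.linalg.cholesky(K)
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y_train))
    v = np.linalg.solve(L, K_s)
    mean = K_s.T @ alpha
    var = np.diag(K_ss) - np.sum(v**2, axis=0)
    return mean, var

# Toy stand-in for the sensor readings (not the data behind the figures).
x_train = np.array([-2.0, -1.0, 0.0, 1.5, 3.0])
y_train = np.sin(x_train)
noise_var = np.full(x_train.shape, 0.05**2)

# Prediction at a single point, then on a grid of 100 points.
mean_one, var_one = gp_predict(x_train, y_train, noise_var, np.array([0.5]))
x_grid = np.linspace(-3.0, 4.0, 100)
mean_grid, var_grid = gp_predict(x_train, y_train, noise_var, x_grid)
```

Here the kernel hyperparameters are simply fixed at their defaults; choosing them by maximizing a posterior over hyperparameters would give a MAP estimate.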

Finally, we use the MAP estimate of the hyperparameters:

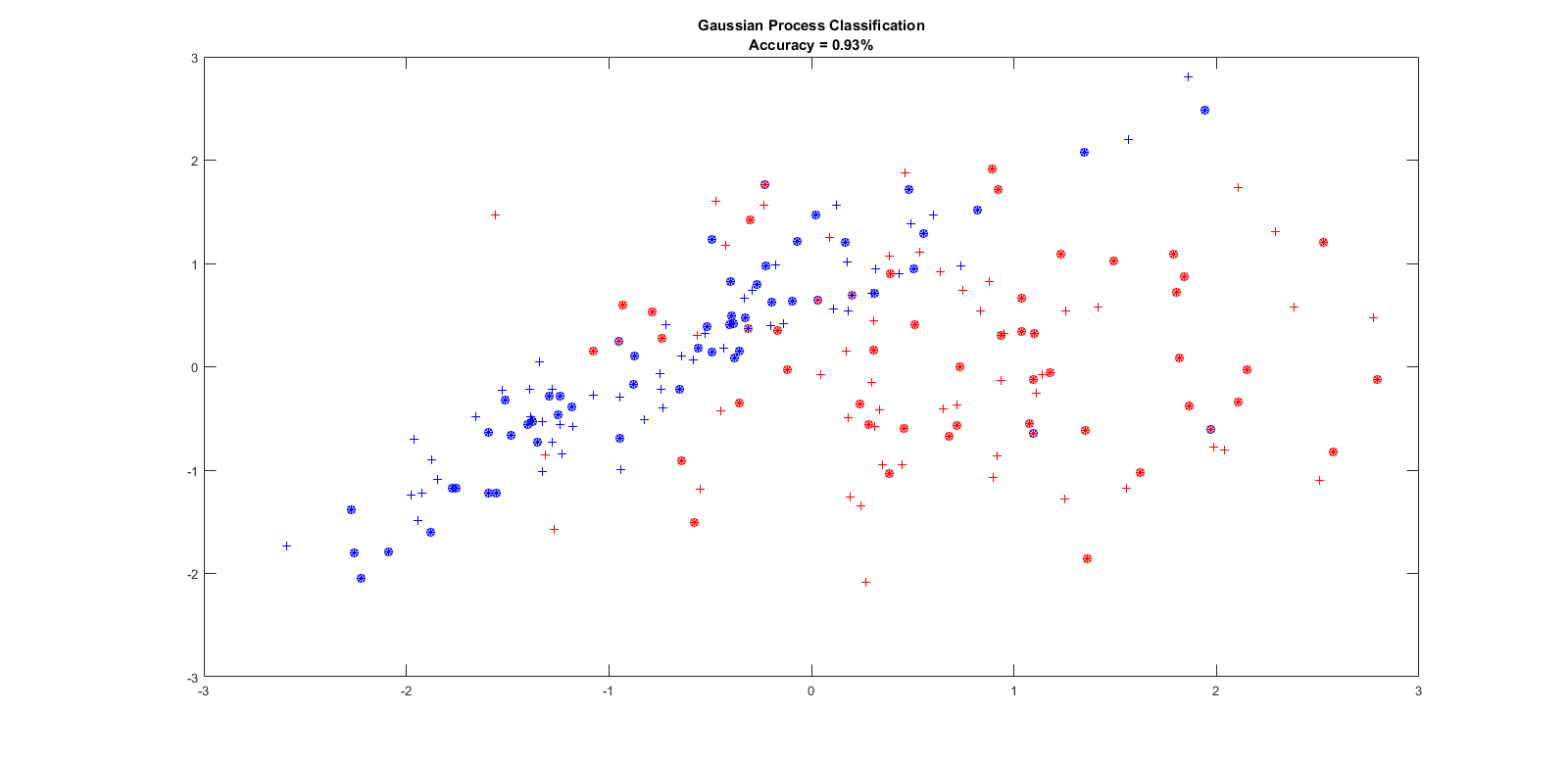

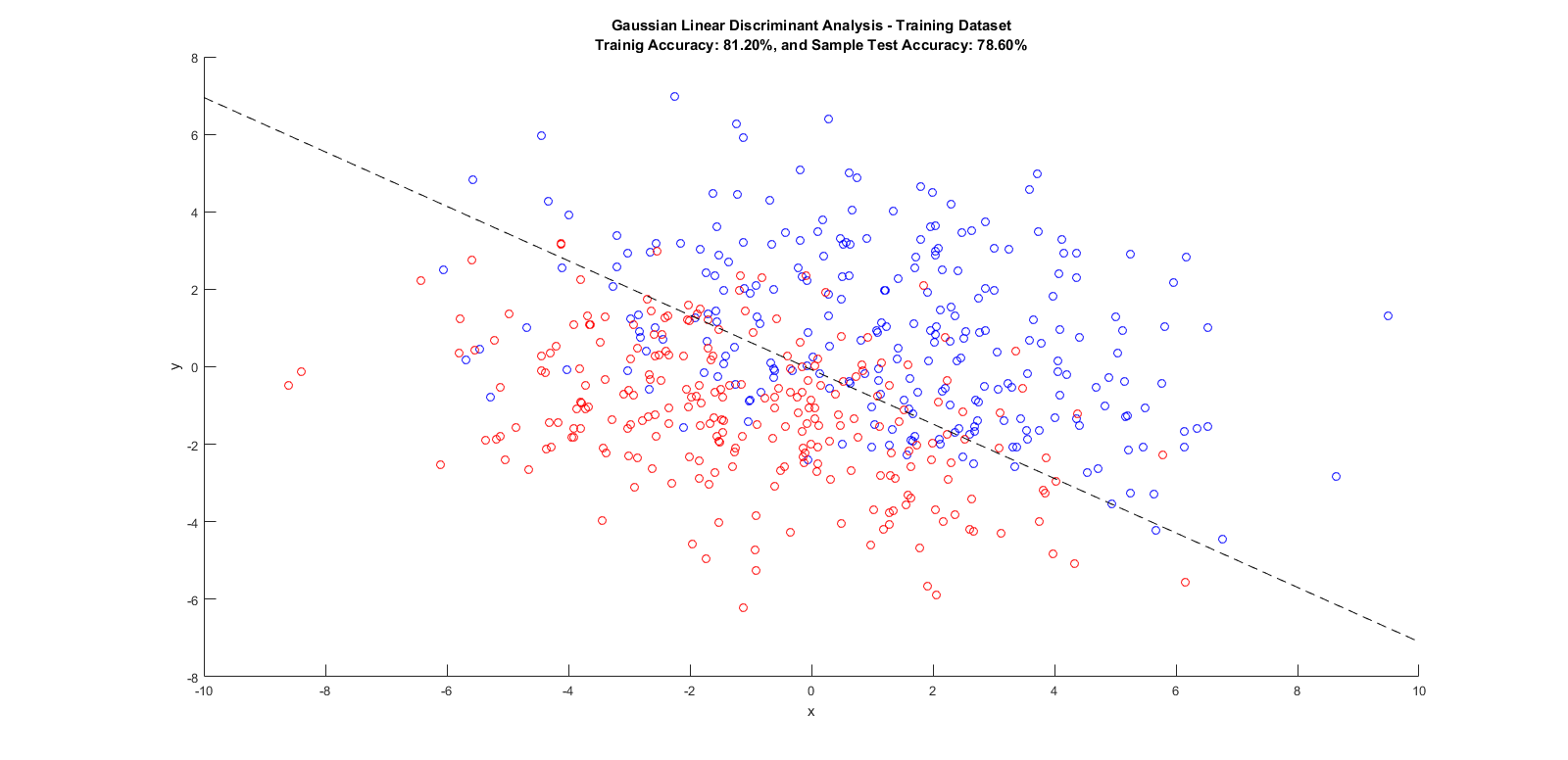

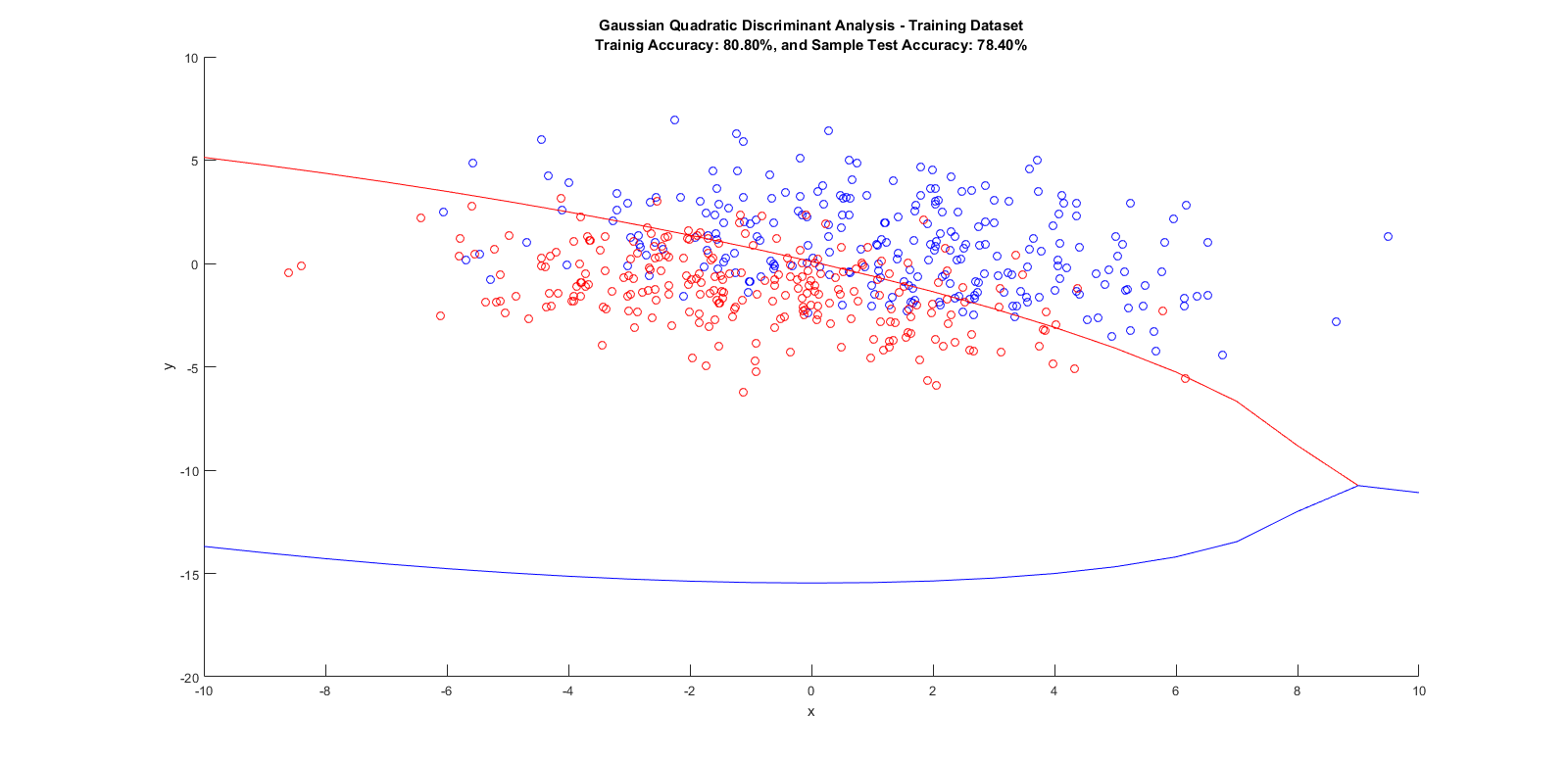

We generated training points with labels and then computed labels for the test points. Each '+' is a training point and each '*' is a test point.

A test point is correctly classified if the colors of its '*' and the surrounding 'o' coincide. Points without a circle around them are training points ('+'):

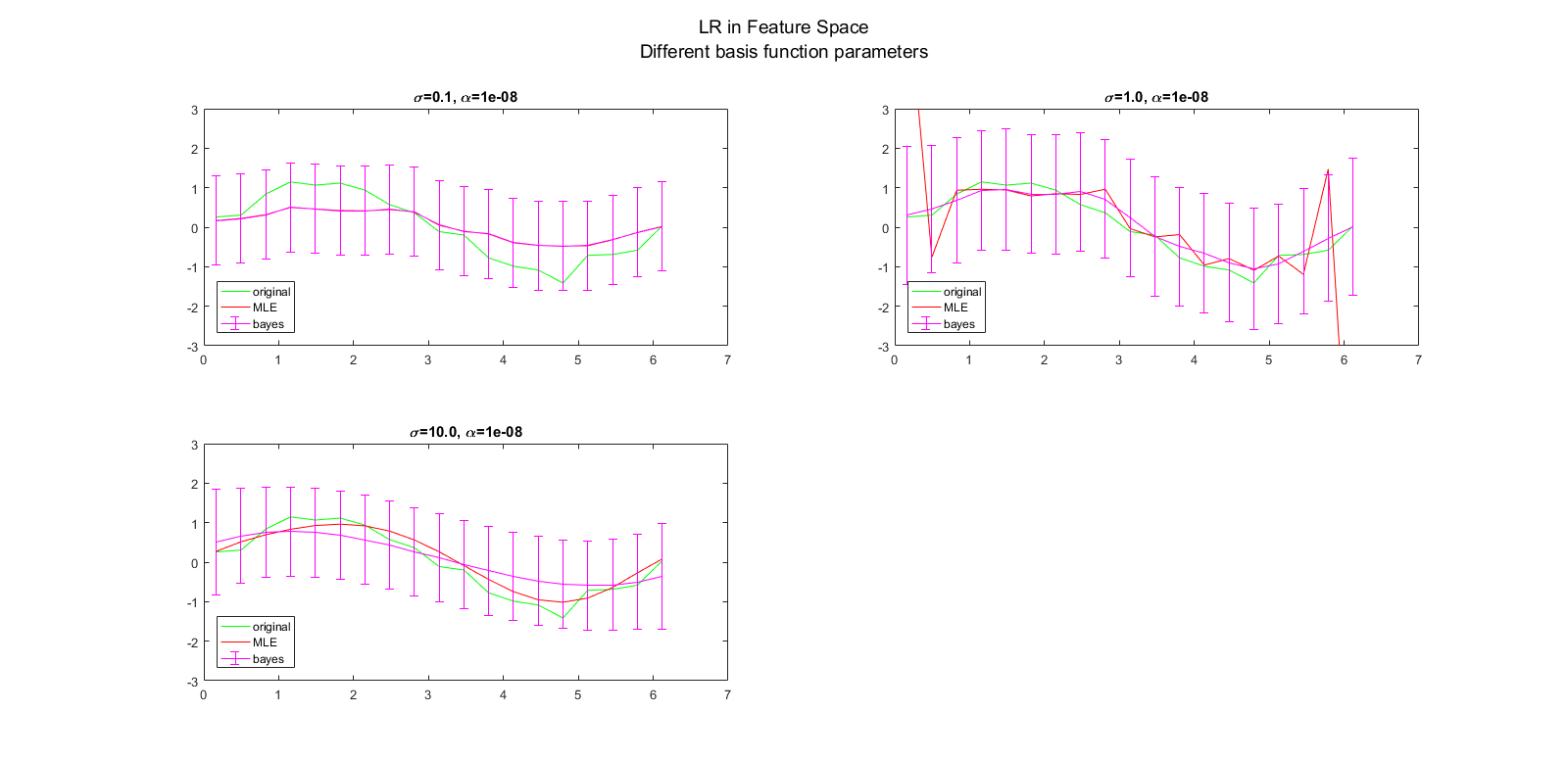

Here we have projected the input into a high-dimensional feature space using basis functions and then followed the standard linear model for regression. The figure shows the effect of different choices of the basis function hyperparameters.

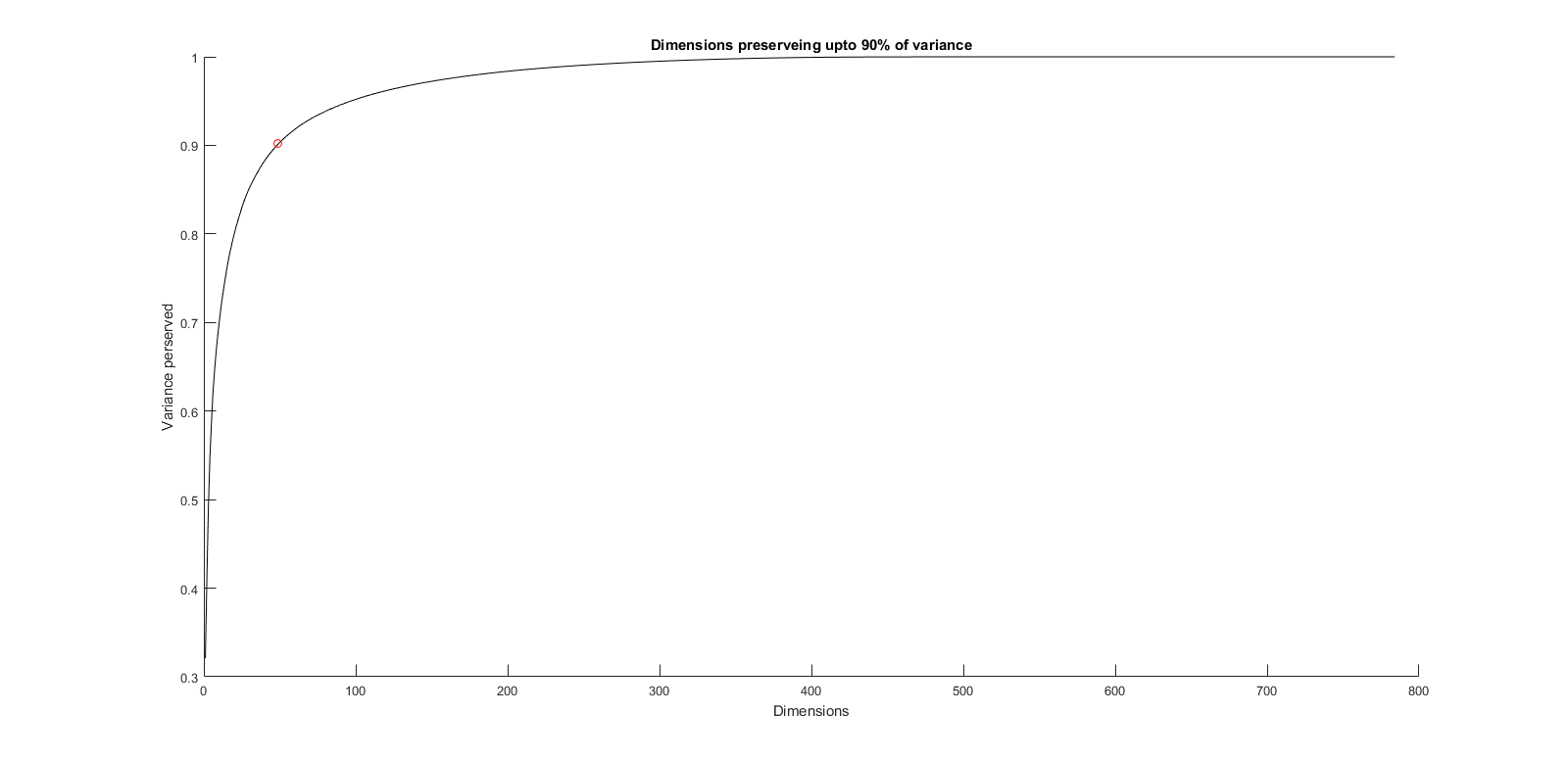
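As a rough illustration of the idea, the sketch below fits a linear model on Gaussian basis functions and varies their width. The choice of Gaussian basis functions, the number of centers, the toy sine data, and the small ridge term `reg` are assumptions for this example and may differ from the code that produced the figure.

```python
import numpy as np

def gaussian_design_matrix(x, centers, width):
    """Map 1-D inputs to features [1, phi_1(x), ..., phi_M(x)] with Gaussian bumps."""
    x = np.asarray(x, dtype=float).reshape(-1, 1)
    phi = np.exp(-0.5 * ((x - centers.reshape(1, -1)) / width) ** 2)
    return np.hstack([np.ones((x.shape[0], 1)), phi])

def fit_weights(Phi, t, reg=1e-6):
    """Regularized least squares in feature space; reg keeps the solve stable."""
    A = Phi.T @ Phi + reg * np.eye(Phi.shape[1])
    return np.linalg.solve(A, Phi.T @ t)

# Toy data: a noisy sine curve.
rng = np.random.default_rng(0)
x_train = np.linspace(0.0, 1.0, 30)
t_train = np.sin(2 * np.pi * x_train) + 0.1 * rng.standard_normal(30)
centers = np.linspace(0.0, 1.0, 9)

# The basis width is the hyperparameter being varied.
for width in (0.02, 0.1, 1.0):
    Phi = gaussian_design_matrix(x_train, centers, width)
    w = fit_weights(Phi, t_train)
    rmse = np.sqrt(np.mean((Phi @ w - t_train) ** 2))
    print(f"width={width}: training RMSE={rmse:.3f}")
```

The model stays linear in the weights `w`, so the fit is a single linear solve; only the feature map changes with the width.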

In the folder you can also find code for PCA with isotropic noise.
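PCA with isotropic noise is commonly formulated as probabilistic PCA, where the data covariance is modelled as `W W^T + sigma2 * I`. Assuming that formulation, the maximum-likelihood parameters have a closed form in terms of the eigendecomposition of the sample covariance, sketched below. The function name and toy data are hypothetical, and the code in the folder may use a different estimator (for example EM).

```python
import numpy as np

def ppca_ml(X, q):
    """Closed-form maximum-likelihood probabilistic PCA with q latent dimensions.

    Requires q < number of features. Returns the data mean, the loading
    matrix W (up to rotation), and the isotropic noise variance sigma2.
    """
    X = np.asarray(X, dtype=float)
    mean = X.mean(axis=0)
    Xc = X - mean
    S = Xc.T @ Xc / X.shape[0]              # sample covariance (1/N convention)
    eigvals, eigvecs = np.linalg.eigh(S)    # eigenvalues in ascending order
    order = np.argsort(eigvals)[::-1]
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    sigma2 = eigvals[q:].mean()             # average of the discarded eigenvalues
    W = eigvecs[:, :q] * np.sqrt(np.maximum(eigvals[:q] - sigma2, 0.0))
    return mean, W, sigma2

# Toy data: 2 latent dimensions embedded in 5, plus isotropic noise.
rng = np.random.default_rng(0)
Z = rng.standard_normal((2000, 2))
A = rng.standard_normal((2, 5))
X = Z @ A + 0.1 * rng.standard_normal((2000, 5))

mean, W, sigma2 = ppca_ml(X, q=2)
C = W @ W.T + sigma2 * np.eye(5)            # model covariance
```

The estimated `sigma2` should land near the true noise variance used to generate the toy data, and the model covariance `C` reproduces the leading eigenvalues of the sample covariance.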