A fine-tuned Large Language Model (LLM) for the Vietnamese language based on the Llama 2 model.

Language is the heart and soul of a culture. The Vietnamese language, filled with its nuances, idioms, and unique characteristics, has always been a beautiful puzzle waiting to be embraced by large language models. Taking baby steps, this project is an effort to bring the power of large language models to the Vietnamese language.

We've just rolled out an experimental version of a large language model for Vietnamese, fine-tuned from Llama2-7b (https://huggingface.co/meta-llama/Llama-2-7b-hf) on a sample of 20k instruction examples. It is intended for lightweight tasks.

The model has been published on 🤗 Hugging Face and can be accessed here.

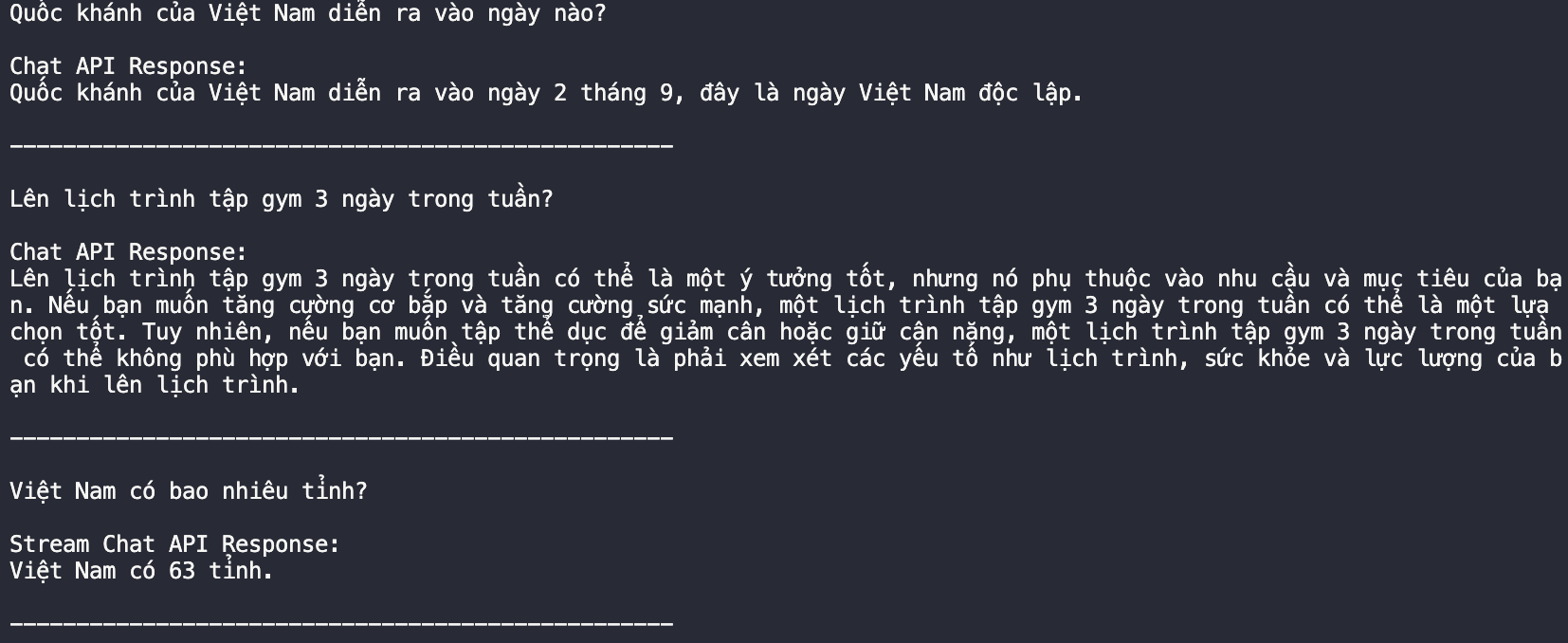

Here's a glance at what you can expect from the model:

- Clone the repository:

      git clone https://github.com/ngoanpv/llama2_vietnamese
      cd llama2_vietnamese

- Install dependencies:

      pip install -r requirements.txt

- Start the FastAPI server:

      python serving/fastapi/main.py

- To test the server, use the provided script:

      python scripts/request_fastapi.py
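If you would rather call the server from your own code than run the provided script, the request could look roughly like the sketch below. It is not taken from the repository: the host, port, route (`/generate`), and JSON field names (`prompt`, `max_new_tokens`) are all assumptions made for illustration, so check `serving/fastapi/main.py` and `scripts/request_fastapi.py` for the real values before using it.

```python
import json
import urllib.request

# Assumptions (NOT taken from the repository): host, port, route, and field names.
BASE_URL = "http://127.0.0.1:8000"
ROUTE = "/generate"  # hypothetical route; see serving/fastapi/main.py for the real one


def build_request(prompt: str, max_new_tokens: int = 128) -> urllib.request.Request:
    """Build a JSON POST request for the (hypothetical) generation route."""
    payload = {"prompt": prompt, "max_new_tokens": max_new_tokens}
    # ensure_ascii=False keeps Vietnamese diacritics readable in the request body.
    body = json.dumps(payload, ensure_ascii=False).encode("utf-8")
    return urllib.request.Request(
        BASE_URL + ROUTE,
        data=body,
        headers={"Content-Type": "application/json; charset=utf-8"},
        method="POST",
    )


def generate(prompt: str, timeout: float = 60.0) -> dict:
    """Send the request to a running server and decode its JSON response."""
    with urllib.request.urlopen(build_request(prompt), timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


# Example call (requires the FastAPI server to be running):
# print(generate("Xin chào, bạn có thể giúp tôi không?"))
```

Encoding the body as UTF-8 explicitly matters here because Vietnamese prompts contain many non-ASCII characters. The response is returned as a plain dictionary since its actual schema is not documented in this README.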

- Predominant Focus on English: The original version of Llama 2 was chiefly focused on English-language data. While we've fine-tuned this model specifically for Vietnamese, its underlying base is primarily trained on English.

- Limited Fine-tuning: The current model has been fine-tuned on a small dataset. We are working on expanding the dataset and will release new versions as we make progress.

- Usage Caution: Owing to these limitations, users are advised to exercise caution when deploying the model for critical tasks or where high linguistic accuracy is paramount.

- LLaMA

- llama-recipes

- LLaMA-Efficient-Tuning

- Logo is generated by @thucth-qt

- Fine-tune on a larger dataset

- Evaluation on downstream tasks

- Experiment with different model sizes

- Experiment with different serving frameworks: vLLM, TGI, etc.

- Experiment with expanding the tokenizer in preparation for pre-training

Stay tuned for future releases as we are continuously working on improving the model, expanding the dataset, and adding new features.

Thank you for your interest in our project. We hope you find it useful. If you have any questions, please feel free to reach out to us at ngoanpham1196@gmail.com.