- Straightening with Low-Rank Adaptation (LoRA) is now available. See the section Finetuning using LoRA.

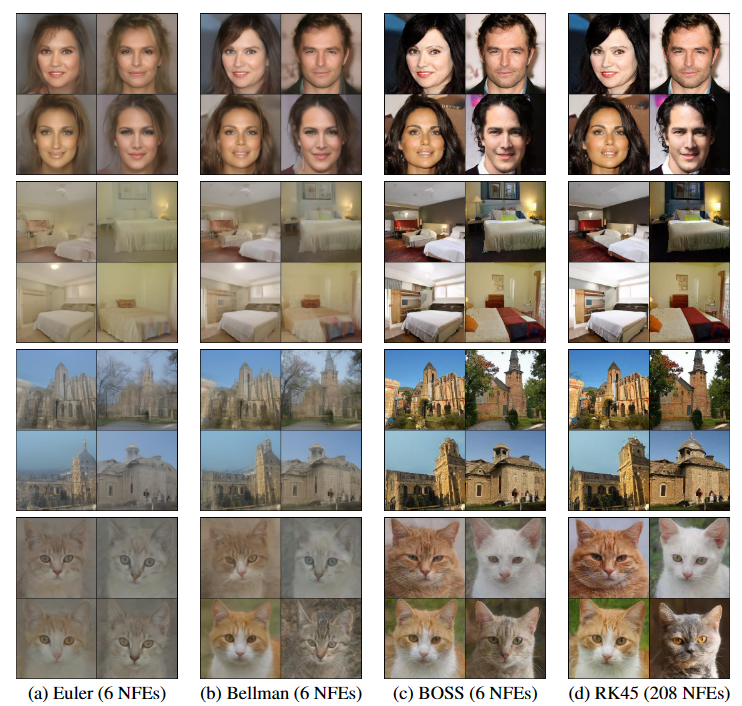

This paper introduces the Bellman Optimal Step-size Straightening (BOSS) technique for distilling flow-matching generative models. It targets efficient few-step image sampling under a computational budget constraint. First, a dynamic programming algorithm optimizes the step sizes of the pretrained network. Then, the velocity network is refined to match the optimal step sizes, with the aim of straightening the generation paths.
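As a rough illustration of the dynamic programming step, the sketch below picks a fixed number of jumps over a fine time grid so that the summed per-jump cost is minimal. It is a minimal sketch under stated assumptions, not the repository's implementation: the function name `optimal_steps` and the `cost` matrix are hypothetical, and in practice the cost of each jump would have to be estimated from the pretrained velocity network.

```python
import math


def optimal_steps(cost, num_steps):
    """Choose `num_steps` jumps from grid point 0 to grid point n with minimal total cost.

    cost[i][j] is an ASSUMED, user-supplied penalty for jumping directly from
    grid point i to grid point j (i < j), e.g. a local discretization error.
    """
    n = len(cost) - 1  # grid points are 0, 1, ..., n
    if not 1 <= num_steps <= n:
        raise ValueError("num_steps must be between 1 and the number of grid intervals")

    # best[k][j]: minimal cost of reaching grid point j using exactly k jumps.
    best = [[math.inf] * (n + 1) for _ in range(num_steps + 1)]
    prev = [[-1] * (n + 1) for _ in range(num_steps + 1)]
    best[0][0] = 0.0

    for k in range(1, num_steps + 1):
        for j in range(k, n + 1):
            for i in range(k - 1, j):
                candidate = best[k - 1][i] + cost[i][j]
                if candidate < best[k][j]:
                    best[k][j] = candidate
                    prev[k][j] = i

    # Backtrack from the final grid point to recover the chosen grid indices.
    path = [n]
    j = n
    for k in range(num_steps, 0, -1):
        j = prev[k][j]
        path.append(j)
    path.reverse()
    return best[num_steps][n], path


# Toy cost: the penalty grows quadratically with the jump length.
toy_cost = [[(j - i) ** 2 for j in range(5)] for i in range(5)]
total, path = optimal_steps(toy_cost, num_steps=2)
print(total, path)  # 8.0 [0, 2, 4]
```

The differences between consecutive indices in `path` give the step sizes on the fine grid. With the toy quadratic cost the result is uniform, whereas a cost measured on a real model would generally give non-uniform steps.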

Details of our methodology and the corresponding experimental results are available in our paper, Bellman Optimal Step-size Straightening of Flow-Matching Models (arXiv:2312.16414).

Please cite our paper whenever you use this repository to generate published results or incorporate it into other software.

@article{nguyen2023bellman,
  title={Bellman Optimal Step-size Straightening of Flow-Matching Models},
  author={Nguyen, Bao and Nguyen, Binh and Nguyen, Viet Anh},
  journal={arXiv preprint arXiv:2312.16414},
  year={2023}
}

Python 3.9 and PyTorch 1.11.0+cu113 are used in this implementation.

We recommend creating a conda environment from the provided config files ./environment.yml and ./requirements.txt:

conda env create -f environment.yml

conda activate boss

pip install -r requirements.txt

If you encounter problems related to cuDNN, try the following commands:

mkdir -p $CONDA_PREFIX/etc/conda/activate.d

echo 'CUDNN_PATH=$(dirname $(python -c "import nvidia.cudnn;print(nvidia.cudnn.__file__)"))' >> $CONDA_PREFIX/etc/conda/activate.d/env_vars.sh

echo 'export LD_LIBRARY_PATH=$CUDNN_PATH/lib:$CONDA_PREFIX/lib/:$LD_LIBRARY_PATH' >> $CONDA_PREFIX/etc/conda/activate.d/env_vars.sh

source $CONDA_PREFIX/etc/conda/activate.d/env_vars.sh

We use pretrained models on five datasets: CIFAR-10 (32), AFHQ-Cat (256), LSUN Church (256), LSUN Bedroom (256), and CelebA-HQ (256). Our method is data-free, so downloading the datasets is unnecessary. The pretrained models are available from Rectified Flow. Download the five models from their website and store them in the ./logs folder as follows:

- logs
  - 1_rectified_flow
    - church
      - checkpoints
        - checkpoints0.pth
    - cifar10
      - checkpoints
        - checkpoints0.pth
    - bedroom
      - checkpoints
        - checkpoints0.pth
    - celeba_hq
      - checkpoints
        - checkpoints0.pth
    - afhq_cat
      - checkpoints
        - checkpoints0.pth
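Before running any script, it can help to confirm that the checkpoints are where the layout above expects them. The following is a minimal sketch, not part of the repository: the helper `missing_checkpoints` is a hypothetical name, and the folder and file names are copied from the layout above.

```python
from pathlib import Path

# Dataset folder names, taken from the directory layout above.
DATASETS = ("church", "cifar10", "bedroom", "celeba_hq", "afhq_cat")


def missing_checkpoints(logs_root="./logs"):
    """Return the expected checkpoint paths that do not exist yet (hypothetical helper)."""
    base = Path(logs_root) / "1_rectified_flow"
    expected = [base / name / "checkpoints" / "checkpoints0.pth" for name in DATASETS]
    return [path for path in expected if not path.is_file()]


if __name__ == "__main__":
    # Report every checkpoint that still needs to be downloaded.
    for path in missing_checkpoints():
        print(f"missing: {path}")
```

An empty result means all five checkpoints are in place.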

GPU allocation: Our experiments were run on NVIDIA A5000 GPUs. For finetuning, we use one GPU for CIFAR-10 and three GPUs for the 256x256 resolution datasets.

See clean-fid, from the paper On Aliased Resizing and Surprising Subtleties in GAN Evaluation, for generating the stats files and calculating the FID score.

For CIFAR-10, the stats files are downloaded automatically on first execution.

For CelebA HQ (256) and LSUN, please check out here for dataset preparation.

For AFHQ-CAT, please check out here.

We also provide stats files for these datasets here. Store them in the stats folder inside the installed cleanfid package (for example, ~/anaconda3/envs/boss/lib/python3.9/site-packages/cleanfid/stats).
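The exact site-packages path depends on where conda is installed, so the stats folder can also be located programmatically. The sketch below is an illustration under stated assumptions rather than a script shipped with this repository: `cleanfid_stats_dir` and `copy_stats` are hypothetical helper names, and the source folder holding the downloaded stats files is whatever location you chose.

```python
import importlib.util
import shutil
from pathlib import Path


def cleanfid_stats_dir():
    """Return <cleanfid package>/stats, or None if cleanfid is not installed."""
    spec = importlib.util.find_spec("cleanfid")
    if spec is None or spec.origin is None:
        return None
    return Path(spec.origin).parent / "stats"


def copy_stats(src_dir, dst_dir):
    """Copy every regular file from src_dir into dst_dir and return the copied names."""
    dst = Path(dst_dir)
    dst.mkdir(parents=True, exist_ok=True)
    copied = []
    for item in sorted(Path(src_dir).iterdir()):
        if item.is_file():
            shutil.copy2(item, dst / item.name)
            copied.append(item.name)
    return copied
```

With the boss environment active, calling `copy_stats(<your download folder>, cleanfid_stats_dir())` would place the files; check first that `cleanfid_stats_dir()` does not return `None`.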

To evaluate the pretrained models, see the script ./ImageGeneration/scripts/evaluate.sh. Use the method bellman_uniform for DISC_METHODS.

To generate data, see the script ./ImageGeneration/scripts/gen_data.sh.

For finetuning, see the scripts ./ImageGeneration/scripts/boss.sh and ./ImageGeneration/scripts/reflow.sh.

To evaluate after finetuning, see the script ./ImageGeneration/scripts/evaluate_after_finetuning.sh.

For finetuning with LoRA, see the script ./ImageGeneration/scripts/test_lora/boss_lora.sh. To evaluate finetuned models that incorporate LoRA, see the script ./ImageGeneration/scripts/test_lora/evaluate_after_finetuning_lora.sh.

This repository also supports finetuning EDM models from Elucidating the Design Space of Diffusion-Based Generative Models (EDM). See the other folders in scripts for more details.

Thanks to Xingchao Liu et al., Yang Song et al., and Tero Karras et al. for releasing the official implementations of the papers Flow Straight and Fast: Learning to Generate and Transfer Data with Rectified Flow, Score-Based Generative Modeling through Stochastic Differential Equations, and Elucidating the Design Space of Diffusion-Based Generative Models (EDM). Thanks also to the MinLora repository.

If you have any problems, please open an issue in this repository or send an email to nguyenngocbaocmt@gmail.com.