- Introduction

- Project Description

- Getting Started

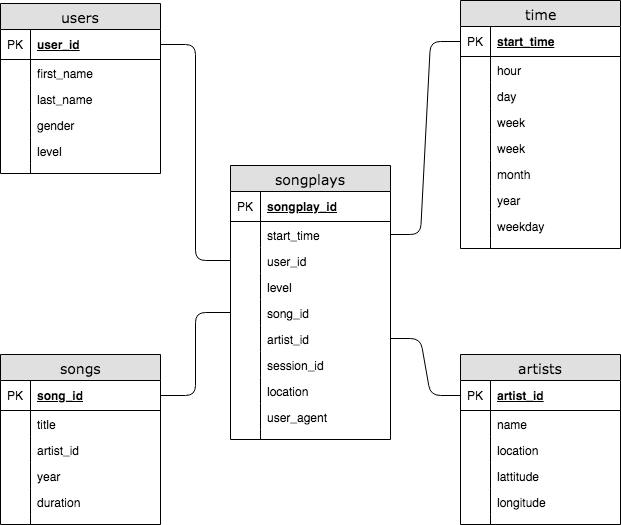

- Database Schema

- Prerequisites

- Installing

- Running the tests

- Built With

- Contributing

- Authors

- License

- Acknowledgments

Sparkify is a music streaming startup that offers free and paid cloud-based streaming plans, and it is trying to acquire more users on paid plans. To that end, it wants to move from a local PostgreSQL analytics data warehouse to a cloud-based analytics process using Amazon Redshift, so that it can be more flexible in analyzing user behavior and in working out how to convert users into paid customers. Because the amount of data has grown, it also needs more powerful data processing tooling that works at big data scale.

Sparkify currently has an on-premises PostgreSQL data warehouse and wants to move its analytics process to the cloud. To do so, it needs to move its song and log data, stored as JSON files, to Amazon S3 as a staging area, and then load that data into a Redshift database for further analysis. Because of the volume of data and the need for more powerful data processing tooling, it has to use Apache Spark to move data back and forth between S3 and Redshift in Parquet format, which is better suited to big data analysis. This creates the need for a more robust data warehouse, so we propose a data lake on AWS as the solution.

etl.py: Reads data from S3, processes that data using Spark, and writes it back to S3. To run it in a Jupyter Notebook powered by an EMR cluster, import the notebook found in this project.
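The read, process, and write flow described for etl.py can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the project's actual script: the helper names `s3_path` and `copy_json_to_parquet`, the bucket URIs, and the column names in the usage comment are hypothetical placeholders, and `spark` is assumed to be an existing `SparkSession` supplied by the caller.

```python
from typing import Iterable


def s3_path(bucket_uri: str, *parts: str) -> str:
    """Join a bucket URI and key parts into one path without doubled slashes."""
    pieces = [bucket_uri.rstrip("/")]
    pieces += [p.strip("/") for p in parts if p.strip("/")]
    return "/".join(pieces)


def copy_json_to_parquet(spark, source: str, target: str,
                         columns: Iterable[str]) -> None:
    """Read JSON from `source`, keep `columns`, and write Parquet to `target`.

    `spark` is expected to be a pyspark.sql.SparkSession.
    """
    df = spark.read.json(source)                   # read JSON files from S3
    table = df.select(*columns).dropDuplicates()   # project and de-duplicate
    table.write.mode("overwrite").parquet(target)  # write Parquet back to S3


# Hypothetical usage (bucket names and columns are placeholders):
#   from pyspark.sql import SparkSession
#   spark = SparkSession.builder.appName("sparkify-etl").getOrCreate()
#   copy_json_to_parquet(
#       spark,
#       s3_path("s3a://example-input-bucket", "song_data"),
#       s3_path("s3a://example-output-bucket", "songs"),
#       ["song_id", "title"],
#   )
```

Passing the session in as an argument keeps the function usable both from a script and from a notebook cell on EMR, where a session usually already exists.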

An AWS account provisioned with an S3 bucket and an IAM role with admin-level access to connect to S3 and perform the operations listed above, Apache Spark as the data processing tool, and Python 3.x (local or cloud-based) to run the scripts.
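Before running the scripts locally, it can help to confirm that AWS credentials are available. The sketch below assumes the credentials are supplied through the standard `AWS_ACCESS_KEY_ID` and `AWS_SECRET_ACCESS_KEY` environment variables; this project may provide them differently (for example through the IAM role attached to an EMR cluster), and the function name `missing_credentials` is hypothetical.

```python
import os
from typing import List, Mapping

# Standard AWS credential variable names; using them here is an assumption
# about how this project is configured.
REQUIRED_ENV_VARS = ("AWS_ACCESS_KEY_ID", "AWS_SECRET_ACCESS_KEY")


def missing_credentials(env: Mapping[str, str]) -> List[str]:
    """Return the required variable names that are unset or empty in `env`."""
    return [name for name in REQUIRED_ENV_VARS if not env.get(name)]


# Hypothetical usage: fail early with a readable message.
#   missing = missing_credentials(os.environ)
#   if missing:
#       raise SystemExit("Missing AWS settings: " + ", ".join(missing))
```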

Use the etl.ipynb notebook to develop the ETL process for each of the tables before running the etl.py file, which reads data from S3, processes that data using Spark, and writes it back to S3.

Test by running the scripts provided by the analytics team and checking that the results match what was expected.
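One way to make the "is the result what was expected" check repeatable is a small comparison helper like the one below. It is only a sketch: the analytics team's scripts and their expected outputs are not part of this README, so the function name `results_match` and the row values in the usage comment are hypothetical.

```python
from collections import Counter
from typing import Iterable, Sequence


def results_match(actual: Iterable[Sequence],
                  expected: Iterable[Sequence]) -> bool:
    """Return True when both results hold the same rows, ignoring row order.

    Rows are compared as tuples, so duplicate rows must also match in count.
    """
    return Counter(map(tuple, actual)) == Counter(map(tuple, expected))


# Hypothetical usage with Spark (query and expected rows are placeholders):
#   rows = spark.sql("SELECT ...").collect()
#   assert results_match(rows, [("value_1", 1), ("value_2", 2)])
```

Comparing as multisets avoids false failures from Spark returning rows in a different order between runs.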

- Amazon Redshift - Amazon's cloud-based data warehouse service

- Amazon IAM - Amazon Identity and Access Management System

- S3 buckets - Amazon storage service

- Python - Scripting Language

- Apache Spark - Lightning-fast unified analytics engine

- **Teofilo Carlos Chichume**

- Teofilo Carlos Chichume - Initial work - nhatofo

This project is licensed under the MIT License. See the LICENSE.md file for details.

- Inspiration: AWS Documentation, PurpleBooth