Michael Firman¹, Sara Vicente¹

¹Niantic ²ETH Zürich ³University College London

[Project Webpage] [Paper]

This code is for non-commercial use; please see the license file for terms.

An NVIDIA GPU is required to run this code. It has been tested on Ubuntu 20.04.4 LTS.
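Before installing, you may want to confirm that the NVIDIA driver can see a GPU. The sketch below is a minimal preflight check, assuming the NVIDIA driver utilities (`nvidia-smi`) are installed; `require_nvidia_gpu` is a hypothetical helper, not part of this repository. It relies on `nvidia-smi -L`, which prints one `GPU <index>: ...` line per visible device.

```shell
# Hypothetical preflight helper (not part of this repository).
# `nvidia-smi -L` prints one line per visible GPU, e.g. "GPU 0: <name> (UUID: ...)".
require_nvidia_gpu() {
  if ! command -v nvidia-smi >/dev/null 2>&1; then
    echo "nvidia-smi not found; is the NVIDIA driver installed?" >&2
    return 1
  fi
  local gpus
  # Count lines starting with "GPU"; `|| true` keeps a zero count from failing under `set -e`.
  gpus="$(nvidia-smi -L 2>/dev/null | grep -c '^GPU' || true)"
  if [ "${gpus}" -eq 0 ]; then
    echo "No NVIDIA GPU detected." >&2
    return 1
  fi
  echo "Found ${gpus} NVIDIA GPU(s)."
}
```

In a setup script, `require_nvidia_gpu || exit 1` stops early on machines without a usable GPU.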

First, you need to clone this repo:

git clone --recursive git@github.com:nianticlabs/nerf-object-removal.git

To install this repository, run the following commands. You might need to adjust the CUDA and cuDNN versions in install.sh. The installation has only been tested with CUDA 11.1; other versions might not work because of JAX issues.

conda create -n object-removal python=3.8

conda activate object-removal

# make sure libcudart.so can be found:

export LD_LIBRARY_PATH="${CONDA_PREFIX}/lib:${CONDA_PREFIX}/lib/python3.8/site-packages/nvidia/cuda_runtime/lib/:${LD_LIBRARY_PATH}"

bash ./install.sh

Please download the data using the following commands:

mkdir "$(pwd)/data"

wget -P "$(pwd)/data" "https://storage.googleapis.com/niantic-lon-static/research/nerf-object-removal/nerf-object-removal.zip"

unzip "$(pwd)/data/nerf-object-removal.zip" -d "$(pwd)/data"

Please download the pretrained LaMa model from Hugging Face:

cd external/lama

wget https://huggingface.co/smartywu/big-lama/resolve/main/big-lama.zip

unzip big-lama.zip

cd ../..

To test our pipeline with the default configuration on a scene of the provided real dataset, run the following command:

export SCENE_NUMBER="001"; \
ROOT_DIR="$(pwd)/data/object-removal-custom-clean" \
OUTPUT_DIR="$(pwd)/experiments/real" \
bash ./run_real.sh model/configs/custom/default.gin "${SCENE_NUMBER}"

or on the synthetic dataset with:

export SCENE_NUMBER="001"; \
ROOT_DIR="$(pwd)/data/object-removal-custom-clean" \
OUTPUT_DIR="$(pwd)/experiments/synthetic" \
bash ./run_synthetic.sh model/configs/custom_synthetic/default.gin "${SCENE_NUMBER}"

You have to adjust the $EXPERIMENT_PATH and the $DATA_PATH accordingly in the files above.
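To run several scenes in a row, the single-scene command can be wrapped in a loop. This is a minimal sketch, assuming the scene identifiers are consecutive integers zero-padded to three digits like "001"; check which scenes actually exist under your ROOT_DIR. `run_scenes` is a hypothetical helper, not part of this repository, and it passes ROOT_DIR and OUTPUT_DIR to each script run in the same way as the commands above.

```shell
# Hypothetical batch helper (not part of this repository).
# Usage: run_scenes <script> <gin-config> <first-scene> <last-scene>
# Scene numbers are given as plain integers (e.g. 1) and zero-padded to "001".
run_scenes() {
  local script="$1" config="$2" first="$3" last="$4" n scene
  for n in $(seq "${first}" "${last}"); do
    scene="$(printf '%03d' "${n}")"
    echo "=== scene ${scene} ==="
    # Forward ROOT_DIR/OUTPUT_DIR to the script; stop at the first failing scene.
    ROOT_DIR="${ROOT_DIR}" OUTPUT_DIR="${OUTPUT_DIR}" \
      bash "${script}" "${config}" "${scene}" || return 1
  done
}
```

For example, `ROOT_DIR="$(pwd)/data/object-removal-custom-clean" OUTPUT_DIR="$(pwd)/experiments/real" run_scenes ./run_real.sh model/configs/custom/default.gin 1 3` would run scenes 001 to 003. Since test_real.sh and test_synthetic.sh take the same two arguments, the helper should work with them as well.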

You can test a trained model on a real scene using the following command:

export SCENE_NUMBER="001"; \
ROOT_DIR="$(pwd)/data/object-removal-custom-clean" \
OUTPUT_DIR="$(pwd)/experiments/real" \
bash ./test_real.sh model/configs/custom/default.gin "${SCENE_NUMBER}"

or the following command for a synthetic scene:

export SCENE_NUMBER="001"; \
ROOT_DIR="$(pwd)/data/object-removal-custom-clean" \
OUTPUT_DIR="$(pwd)/experiments/synthetic" \
bash ./test_synthetic.sh model/configs/custom_synthetic/default.gin "${SCENE_NUMBER}"

Once the models have been optimized, you can visualize the results using this notebook.

Make sure that you set $EXPERIMENT_DIR and $GROUNDTRUTH_DIR correctly, and follow the notebook carefully to set all options required for your experiment.

You can evaluate the results using the evaluation script.

python eval.py --experiment "${EXPERIMENT_NAME}" --experiment_root_dir "${EXPERIMENT_DIR}" --benchmark synthetic

Set --benchmark to either synthetic or real, depending on the dataset the experiment was run on. You can visualize the evaluation results using this notebook.
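Because the evaluation script expects --benchmark to be either synthetic or real, a small wrapper can catch typos before the evaluation starts. A minimal sketch; `run_eval` is a hypothetical helper, not part of this repository, and it forwards only the three eval.py options shown in the command above.

```shell
# Hypothetical wrapper (not part of this repository) around eval.py.
# Usage: run_eval <experiment-name> <experiment-root-dir> <synthetic|real>
run_eval() {
  local name="$1" root="$2" benchmark="$3"
  # Reject anything other than the two benchmark values listed in this README.
  case "${benchmark}" in
    synthetic|real) ;;
    *)
      echo "benchmark must be 'synthetic' or 'real', got '${benchmark}'" >&2
      return 1
      ;;
  esac
  python eval.py --experiment "${name}" --experiment_root_dir "${root}" \
    --benchmark "${benchmark}"
}
```

For example, `run_eval "${EXPERIMENT_NAME}" "${EXPERIMENT_DIR}" real` runs the same command as above for the real benchmark.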

To run the code with Docker, see docker/README.md.

If you find this work helpful, please consider citing:

@InProceedings{Weder_2023_CVPR,
author = {Weder, Silvan and Garcia-Hernando, Guillermo and Monszpart, \'Aron and Pollefeys, Marc and Brostow, Gabriel J. and Firman, Michael and Vicente, Sara},
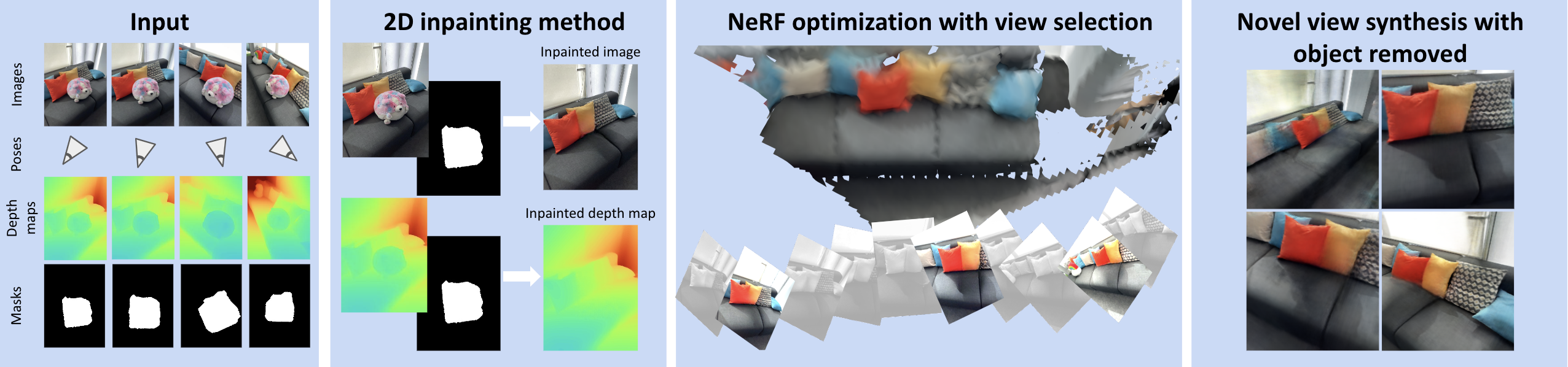

title = {Removing Objects From Neural Radiance Fields},
booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
month = {June},
year = {2023},
pages = {16528-16538}
}