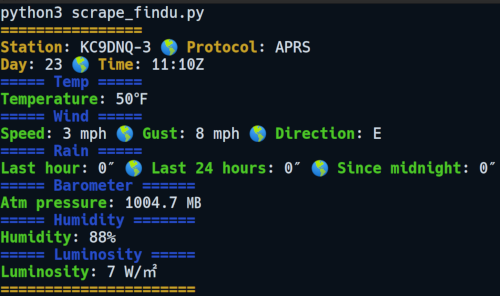

- Scrape findu.com's weather archive for the latest record.

- Python 3, `urllib.request`, `BeautifulSoup()`, `datetime.time()`, regex (`re`)

- Python 3.7.5

- Ubuntu 19.10 (Eoan)

- Bash 5.0.3 (x86_64-pc-linux-gnu)

In a Unix-like terminal emulator, enter the following command in the Bash CLI:

```
$ /bin/python3 scrape_findu.py
```

You need to be in the directory that contains `scrape_findu.py` for this command to work.

`$ ./scrape_findu.py` may also run the app, depending on whether the file has execute permission (see the shebang line section below).

Also be aware of findu.com's usage limitations.

```python
#! /bin/python3
```

Following the shebang (`#!`) is the interpreter directive (`/bin/python3`). The shebang line lets an executable Python file run directly with `./`, as shown in the previous section.

> ...in a Unix-like operating system, the program loader mechanism parses the rest of the file's initial line as an interpreter directive. The loader executes the specified interpreter program, passing to it, as an argument, the path that was initially used when attempting to run the script, so that the program may use the file as input data.

In other words, the loader runs `/bin/python3` and passes it the script's path, so `./scrape_findu.py` effectively runs `/bin/python3 ./scrape_findu.py`. This only works if the file has execute permission, which `chmod +x scrape_findu.py` grants.

```python
import urllib.request
from bs4 import BeautifulSoup
from datetime import time
import re
```

The classes and functions of the `urllib.request` module help open URLs (mostly HTTP), handling authentication, redirections, cookies, and more.
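A minimal sketch of the request step, assuming you want to query callsigns other than the hard-coded one. `findu_raw_url()` and `fetch_html()` are hypothetical helpers, not part of the app, and the 10-second timeout is an arbitrary choice. `urlencode()` rebuilds the same query string the app uses.

```python
from urllib.parse import urlencode
from urllib.request import urlopen

FINDU_RAW = 'http://www.findu.com/cgi-bin/raw.cgi'


def findu_raw_url(call, units='english'):
    """Build the raw.cgi URL for a callsign (hypothetical helper)."""
    return FINDU_RAW + '?' + urlencode({'call': call, 'units': units})


def fetch_html(url, timeout=10):
    """Download a page and return its body as bytes.

    The with-block closes the HTTP response when it exits.
    """
    with urlopen(url, timeout=timeout) as res:
        return res.read()


print(findu_raw_url('KC9DNQ-3'))
# http://www.findu.com/cgi-bin/raw.cgi?call=KC9DNQ-3&units=english
```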

Beautiful Soup is a Python library for pulling data out of HTML and XML files. It works with your favorite parser to provide idiomatic ways of navigating, searching, and modifying the parse tree.
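Beautiful Soup is a third-party package (`pip install beautifulsoup4`). For comparison, here is a stdlib-only sketch of the `_soup.find('tt').text` step using `html.parser.HTMLParser` instead of Beautiful Soup. The class and function names are hypothetical, and the sketch only handles the simple case of collecting the text inside the first `<tt>` element.

```python
from html.parser import HTMLParser


class TTTextExtractor(HTMLParser):
    """Collect the text inside the first <tt> element (hypothetical helper)."""

    def __init__(self):
        super().__init__()
        self._depth = 0      # > 0 while inside the first <tt>
        self._done = False   # True once the first <tt> has closed
        self.parts = []

    def handle_starttag(self, tag, attrs):
        if tag == 'tt' and not self._done:
            self._depth += 1

    def handle_endtag(self, tag):
        if tag == 'tt' and self._depth:
            self._depth -= 1
            if self._depth == 0:
                self._done = True

    def handle_data(self, data):
        if self._depth:
            self.parts.append(data)


def tt_text(html):
    """Return the text of the first <tt> element, or '' if there is none."""
    parser = TTTextExtractor()
    parser.feed(html)
    parser.close()
    return ''.join(parser.parts)


page = '<html><body><p>header</p><tt>\nline one\nline two\n</tt></body></html>'
print(tt_text(page).splitlines())  # ['', 'line one', 'line two']
```

Note that a leading line break inside `<tt>` produces an empty first element after `splitlines()`.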

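`datetime.time(hour=0, minute=0, second=0, microsecond=0, tzinfo=None, *, fold=0)`: constructs a time-of-day object; its `strftime()` method formats the time as a string, e.g. with `"%H:%M"`.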
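A short sketch of how the `time` class and `strftime()` combine in this app, assuming the six-digit `DDHHMM` timestamp (day, hour, minute) found in findu's weather records. `format_findu_timestamp()` is a hypothetical helper.

```python
from datetime import time


def format_findu_timestamp(timestamp):
    """Split a 'DDHHMM' string into (day, 'HH:MM') (hypothetical helper)."""
    day = int(timestamp[0:2])
    hhmm = time(hour=int(timestamp[2:4]), minute=int(timestamp[4:6])).strftime('%H:%M')
    return day, hhmm


print(format_findu_timestamp('071405'))  # (7, '14:05')
```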

re.compile(pattern, flags=0): Compile a regular expression pattern into a regular expression object, which can be used for matching, using its match(), search() and other methods...
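A small illustration of compiling a pattern once and reusing it with `search()`, `match()` and `findall()`. The `t072h45` fragments are made up to mimic the temperature and humidity fields, not real station data.

```python
import re

# Two capture groups: three digits after 't', two digits after 'h'
pattern = re.compile(r't([0-9]{3})h([0-9]{2})')

print(pattern.search('t072h45').groups())  # ('072', '45')
print(pattern.findall('t072h45 t068h50'))  # [('072', '45'), ('068', '50')]
print(pattern.match('x t072h45'))          # None: match() only tries the start
```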

`re.sub()` searches a string for a regex pattern and replaces every match with another string, which may be empty (`''`).
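A quick check of the leading-zero substitution the app applies to its three-digit fields. `strip_leading_zeros()` is a hypothetical wrapper around the app's `re.sub()` call.

```python
import re


def strip_leading_zeros(value):
    """Drop one or two leading zeros from a digit string (hypothetical helper)."""
    return re.sub(r'\b0{1,2}', '', value)


for raw in ['005', '050', '100', '000']:
    print(raw, '->', strip_leading_zeros(raw))
# 005 -> 5
# 050 -> 50
# 100 -> 100
# 000 -> 0
```

For purely numeric fields, `str(int(value))` gives the same results for these inputs; the regex version keeps the value as a string without a round trip through `int`.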

```python
# Request the station's raw packet archive from findu.com
with urllib.request.urlopen('http://www.findu.com/cgi-bin/raw.cgi?call=KC9DNQ-3&units=english') as _res:
    _html = _res.read()  # response body as bytes

# Parse the HTML and pull out the text of the <tt> element
_soup = BeautifulSoup(_html, "html.parser")
_data = _soup.find('tt').text

# One line of weather data per list element
observ_list = _data.splitlines()

rgx = re.compile(
    r'@([0-9]{6})z[0-9]{4}.[A-Z0-9]{3}\/[0-9]{5}.[A-Z0-9]{3}_([0-9]{3})\/([0-9]{3})g([0-9]{3})t([0-9]{3})r([0-9]{3})p([0-9]{3})P([0-9]{3})b([0-9]{5})h([0-9]{2})[lL]([0-9]{3})')

# findall() returns a list of tuples, one tuple of 11 groups per match
grp = rgx.findall(observ_list[1])

(timestamp, wind_dir, wind_spd, wind_gst, temperature, rain_hr, rain_24,
 rain_mid, barometer, humidity, luminosity) = grp[0]
```

- `urllib.request.urlopen()` requests the weather data archive from findu.com. For an HTTP URL it returns an `HTTPResponse` object, which wraps the server's response and gives access to its headers and body. It can be used in a `with` statement, which closes the response afterwards.
- `HTTPResponse.read()` reads and returns the response body as bytes.

- Beautiful Soup can use Python's built-in `html.parser`, which is what this app passes in, so no extra parser needs to be installed; third-party parsers such as `lxml` are optional. BS4 parses the HTML into a document tree. In this app, BS4 extracts the text in the `<tt>` element, which holds the archived weather data transmitted by a personal weather station (`_soup.find('tt').text`).
- `splitlines()` splits that text at line breaks into a Python list, one line of weather data per element. The line-break characters themselves are not kept.

- `re.compile()` compiles the regex pattern into a regex object.

- `rgx.findall()` returns a list of matches; because the pattern contains several groups, each match is a tuple of the captured weather values. For example, the group `([0-9]{3})` captures a three-digit value such as `000`.
- `(timestamp, wind_dir, wind_spd, wind_gst, temperature, rain_hr, rain_24, rain_mid, barometer, humidity, luminosity) = grp[0]` unpacks the first match into named variables, so the values are easier to identify in the `print()` calls.
- `time(hour=int(timestamp[2:4]), minute=int(timestamp[4:6])).strftime("%H:%M")` formats the six-digit timestamp (`time` is the `datetime.time` class imported above). The first two digits are the day of the current month. The last four are the hour and minute in military Zulu time, which explains the `z` after the timestamp. Zulu time is the same as UTC (Greenwich time). The slices split the six digits into three parts: day (`[0:2]`), hour (`[2:4]`) and minute (`[4:6]`).
- `re.sub(r'\b0{1,2}', '', wind_spd)` replaces up to two leading zeros with an empty string (`''`). `{1,2}` limits the match to one or two zeros, so `000` keeps its last zero and becomes `0`. `\b` anchors the match to a word boundary, here the start of the digit string, so only zeros on the left are removed (`050` becomes `50`, `100` stays `100`).
- `['N', 'NNE', 'NE', 'ENE', 'E', 'ESE', 'SE', 'SSE', 'S', 'SSW', 'SW', 'WSW', 'W', 'WNW', 'NW', 'NNW'][round(int(wind_dir) / 22.5) % 16]` receives a direction in degrees and returns one of the 16 compass points. Each point covers 22.5°, and `% 16` wraps readings near 360° back to `'N'`. `int()` already ignores leading zeros, so `wind_dir` needs no `re.sub()` first, and slicing off its first digit would give the wrong direction.
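The steps above can be tested offline on a single record. This is a sketch, not the app's code: `parse_record()`, `compass_point()` and `strip_zeros()` are hypothetical helpers, `search()` stands in for `findall()[0]`, and the sample line is invented (placeholder callsign `N0CALL`), shaped only to fit the app's regex.

```python
import re
from datetime import time

# The app's pattern, with [l|L] narrowed to [lL] (a '|' inside [] is a literal pipe)
RGX = re.compile(
    r'@([0-9]{6})z[0-9]{4}.[A-Z0-9]{3}\/[0-9]{5}.[A-Z0-9]{3}_([0-9]{3})\/([0-9]{3})'
    r'g([0-9]{3})t([0-9]{3})r([0-9]{3})p([0-9]{3})P([0-9]{3})b([0-9]{5})h([0-9]{2})[lL]([0-9]{3})')

COMPASS = ['N', 'NNE', 'NE', 'ENE', 'E', 'ESE', 'SE', 'SSE',
           'S', 'SSW', 'SW', 'WSW', 'W', 'WNW', 'NW', 'NNW']


def strip_zeros(value):
    """Remove up to two leading zeros, as the app does with re.sub()."""
    return re.sub(r'\b0{1,2}', '', value)


def compass_point(degrees):
    """Map a 0-360 degree string to one of 16 compass points (22.5 degrees each)."""
    return COMPASS[round(int(degrees) / 22.5) % 16]


def parse_record(line):
    """Decode one weather record, or return None if the line doesn't match."""
    match = RGX.search(line)
    if match is None:
        return None
    (timestamp, wind_dir, wind_spd, wind_gst, temperature, rain_hr, rain_24,
     rain_mid, barometer, humidity, luminosity) = match.groups()
    return {
        'day': int(timestamp[0:2]),
        'time_utc': time(hour=int(timestamp[2:4]),
                         minute=int(timestamp[4:6])).strftime('%H:%M'),
        'wind_dir': compass_point(wind_dir),
        'wind_spd': strip_zeros(wind_spd),
        'wind_gst': strip_zeros(wind_gst),
        'temperature': strip_zeros(temperature),
        'rain_hr': rain_hr,
        'barometer': barometer,
        'humidity': humidity,
        'luminosity': luminosity,
    }


# Invented sample record (placeholder callsign N0CALL), not real station data
sample = ('N0CALL>APRS:@071405z4130.12N/08805.34W_225/005g010'
          't072r000p000P000b10150h45L123')
print(parse_record(sample))
```

`search()` returns the first match directly; the app's `findall(...)[0]` yields the same groups but raises `IndexError` when a line doesn't match.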