This repository is based on CompVis/latent-diffusion, with modifications for classifier conditioning and architecture improvements.

The original codebase is large and complex, and it lacks documentation on how to fine-tune the original autoencoder and diffusion model.

As a result, it is extremely difficult to fine-tune the existing pre-trained models to produce good results.

- How to finetune inpainting? #151

- how to train Inpainting model using our own datasets? #280

- Details about training inpainting model #9

- how to train inpainting model with my own datasets #265

- Training inpainting model on new datasets #298

- Reproduction problem while training inpainting model #159

Without pre-trained weights, it would take 8 V100 GPUs to produce satisfactory results.

With fine-tuning, a single 3090 GPU is enough for transfer learning to medical images (in my case).

This repository makes fine-tuning setup and inference easy by fixing some of the bugs in the original repo.

- Load and fine-tune the autoencoder (very important for transfer learning)

- Load and fine-tune the latent diffusion model

- Combine the trained autoencoder with the latent diffusion model

- Inference examples for both models

- Simplified data and mask loading

- Fixed some bugs when training the inpainting model

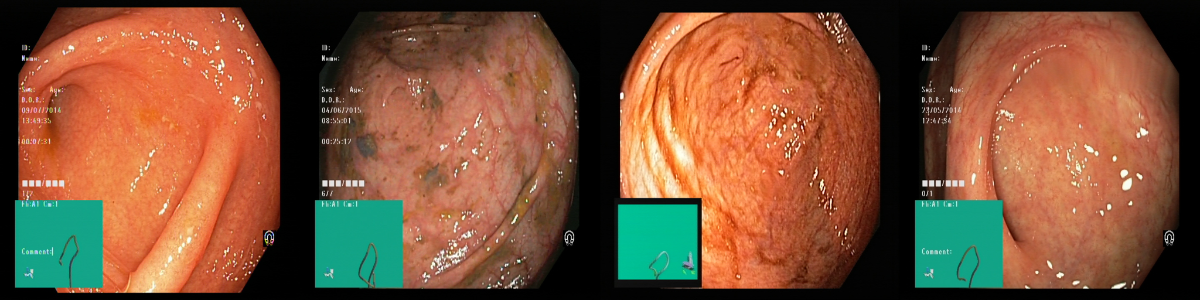

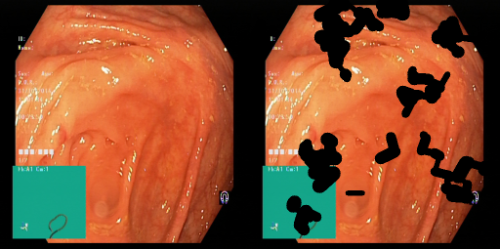

The original inpainting model is meant to remove objects from an image.

However, we can also turn the model into one that creates objects!

Two polyps

If you already have the ldm environment, skip this step.

A suitable conda environment named ldm can be created and activated with:

conda env create -f ldm/environment.yaml

conda activate ldm

In my experiments on medical images, a square mask works better than a polygon mask.

If you want to change this, feel free to modify /ldm/ldm/data/PIL_data.py to change the data loading format.

All the dataloaders used in training are in that file, and it has been simplified.
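To make the square-versus-polygon idea concrete, here is a minimal sketch that replaces a polygon mask with the filled bounding box of its non-zero pixels. It is not the code in PIL_data.py: the function name `bounding_box_mask`, the nested-list mask representation, and the fill value 255 are assumptions chosen to keep the example dependency-free.

```python
from typing import List

Mask = List[List[int]]  # 2D grid: 0 = keep pixel, non-zero = region to inpaint


def bounding_box_mask(mask: Mask, fill: int = 255) -> Mask:
    """Return a new mask where the bounding box of all non-zero pixels is filled.

    Hypothetical helper for illustration; not the repository's implementation.
    """
    rows = [r for r, row in enumerate(mask) if any(row)]
    cols = [c for row in mask for c, value in enumerate(row) if value]
    out = [[0] * len(row) for row in mask]
    if not rows:  # empty mask: nothing to cover
        return out
    top, bottom = min(rows), max(rows)
    left, right = min(cols), max(cols)
    for r in range(top, bottom + 1):
        for c in range(left, right + 1):
            out[r][c] = fill
    return out


# A small diamond-shaped "polygon" mask.
polygon = [
    [0, 0, 0, 0, 0],
    [0, 0, 255, 0, 0],
    [0, 255, 255, 255, 0],
    [0, 0, 255, 0, 0],
    [0, 0, 0, 0, 0],
]
box = bounding_box_mask(polygon)
```

With image arrays loaded through PIL or NumPy the same bounding-box logic applies; only the indexing changes.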

For most ordinary images, you DO NOT need to fine-tune the autoencoder.

You will want to fine-tune the autoencoder ONLY when your data is very different from the pre-training dataset, for example endoscopic images.

If your data is not that different, you can skip parts 1 and 2.

Since the autoencoder used for the pre-trained inpainting model is vq-f4-noattn, we have to stick with it.

First, prepare the images and masks in the same format as in the kvasir-seg folder (masks are NOT needed to fine-tune the autoencoder).

Second, modify the data path in config.yaml (it should be at ldm/models/first_stage_models/vq-f4-noattn/config.yaml).

Then, run the following command:

CUDA_VISIBLE_DEVICES=0 python main.py --base ldm/models/first_stage_models/vq-f4-noattn/config.yaml --resume ldm/models/first_stage_models/vq-f4-noattn/model.ckpt --stage 0 -t --gpus 0,

The model is trained on a mix of 50% original images and 50% randomly masked images.
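The 50/50 mix can be sketched as a per-sample coin flip. This is an illustration only: the source states the ratio, while the rectangular mask shape, the fill value, and the helper name `maybe_mask` are assumptions and may differ from what the dataloader actually does.

```python
import random
from typing import List

Image = List[List[float]]  # 2D grid of pixel values, kept simple for illustration


def maybe_mask(image: Image, rng: random.Random,
               p_mask: float = 0.5, fill: float = 0.0) -> Image:
    """With probability p_mask, blank out one random rectangle; else copy as-is.

    Hypothetical helper; the mask shape and fill value are assumptions.
    """
    out = [row[:] for row in image]  # never modify the caller's image
    if rng.random() >= p_mask:
        return out  # the "original image" half of the mix
    height, width = len(out), len(out[0])
    box_h = rng.randint(1, height)
    box_w = rng.randint(1, width)
    top = rng.randint(0, height - box_h)
    left = rng.randint(0, width - box_w)
    for r in range(top, top + box_h):
        for c in range(left, left + box_w):
            out[r][c] = fill
    return out


rng = random.Random(0)  # fixed seed so the sketch is reproducible
image = [[1.0] * 8 for _ in range(8)]
samples = [maybe_mask(image, rng) for _ in range(200)]
masked_count = sum(sample != image for sample in samples)
```

Over many samples `masked_count` should land near half of the total, which is the behaviour described above.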

Please refer to combine.ipynb.

Note that the masks used here are square; you can disable the draw_rectangle_over_mask function in /ldm/ldm/data/PIL_data.py to use the original masks.

First, download the pre-trained weights and prepare the images in the same format as in the kvasir-seg folder.

Download the pre-trained weights:

wget -O models/ldm/inpainting_big/last.ckpt https://heibox.uni-heidelberg.de/f/4d9ac7ea40c64582b7c9/?dl=1
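For the data preparation half of this step, a quick sanity check is to confirm that every image has a mask with the same file name. The sketch below assumes the layout of the public Kvasir-SEG release (an `images/` and a `masks/` subfolder with matching file names); if the kvasir-seg folder in this repository is organised differently, adjust the two directory names. The helper `pair_images_and_masks` is hypothetical and not part of the repository.

```python
from pathlib import Path
from typing import List, Tuple


def pair_images_and_masks(root: Path) -> Tuple[List[Tuple[Path, Path]], List[Path]]:
    """Pair files in root/images with same-named files in root/masks.

    Returns (pairs, missing), where missing lists images without a mask.
    The images/ and masks/ folder names are an assumption about the layout.
    """
    image_dir, mask_dir = root / "images", root / "masks"
    pairs: List[Tuple[Path, Path]] = []
    missing: List[Path] = []
    for image_path in sorted(image_dir.iterdir()):
        if not image_path.is_file():
            continue  # skip subdirectories
        mask_path = mask_dir / image_path.name
        if mask_path.is_file():
            pairs.append((image_path, mask_path))
        else:
            missing.append(image_path)
    return pairs, missing
```

Calling `pair_images_and_masks(Path("kvasir-seg"))` before training returns the usable pairs plus any images that still need a mask.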

Second, modify the data path in config.yaml (it should be at ldm/models/ldm/inpainting_big/config.yaml).

Then, run the following command:

CUDA_VISIBLE_DEVICES=0 python main.py --base ldm/models/ldm/inpainting_big/config.yaml --resume ldm/models/ldm/inpainting_big/last.ckpt --stage 1 -t --gpus 0,
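The autoencoder and diffusion commands differ only in the config path, the checkpoint path, and the `--stage` value. If you prefer to launch them from a script, the sketch below assembles the same argument list in Python. The helper `training_command` is hypothetical; it only uses the flags already shown in this README and does not run anything by itself.

```python
import shlex
from typing import List


def training_command(config: str, checkpoint: str, stage: int) -> List[str]:
    """Build the main.py argument list used in this README (hypothetical helper)."""
    return [
        "python", "main.py",
        "--base", config,
        "--resume", checkpoint,
        "--stage", str(stage),
        "-t",
        "--gpus", "0,",  # the trailing comma is part of the flag value in the README
    ]


# Stage 0: fine-tune the autoencoder.
autoencoder_cmd = training_command(
    "ldm/models/first_stage_models/vq-f4-noattn/config.yaml",
    "ldm/models/first_stage_models/vq-f4-noattn/model.ckpt",
    stage=0,
)

# Stage 1: fine-tune the latent diffusion model.
diffusion_cmd = training_command(
    "ldm/models/ldm/inpainting_big/config.yaml",
    "ldm/models/ldm/inpainting_big/last.ckpt",
    stage=1,
)

print(shlex.join(diffusion_cmd))
```

To actually launch a run, pass the list to `subprocess.run` together with an `env` that sets `CUDA_VISIBLE_DEVICES`, which mirrors the shell prefix used in the commands above.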

Please refer to the inference notebooks.