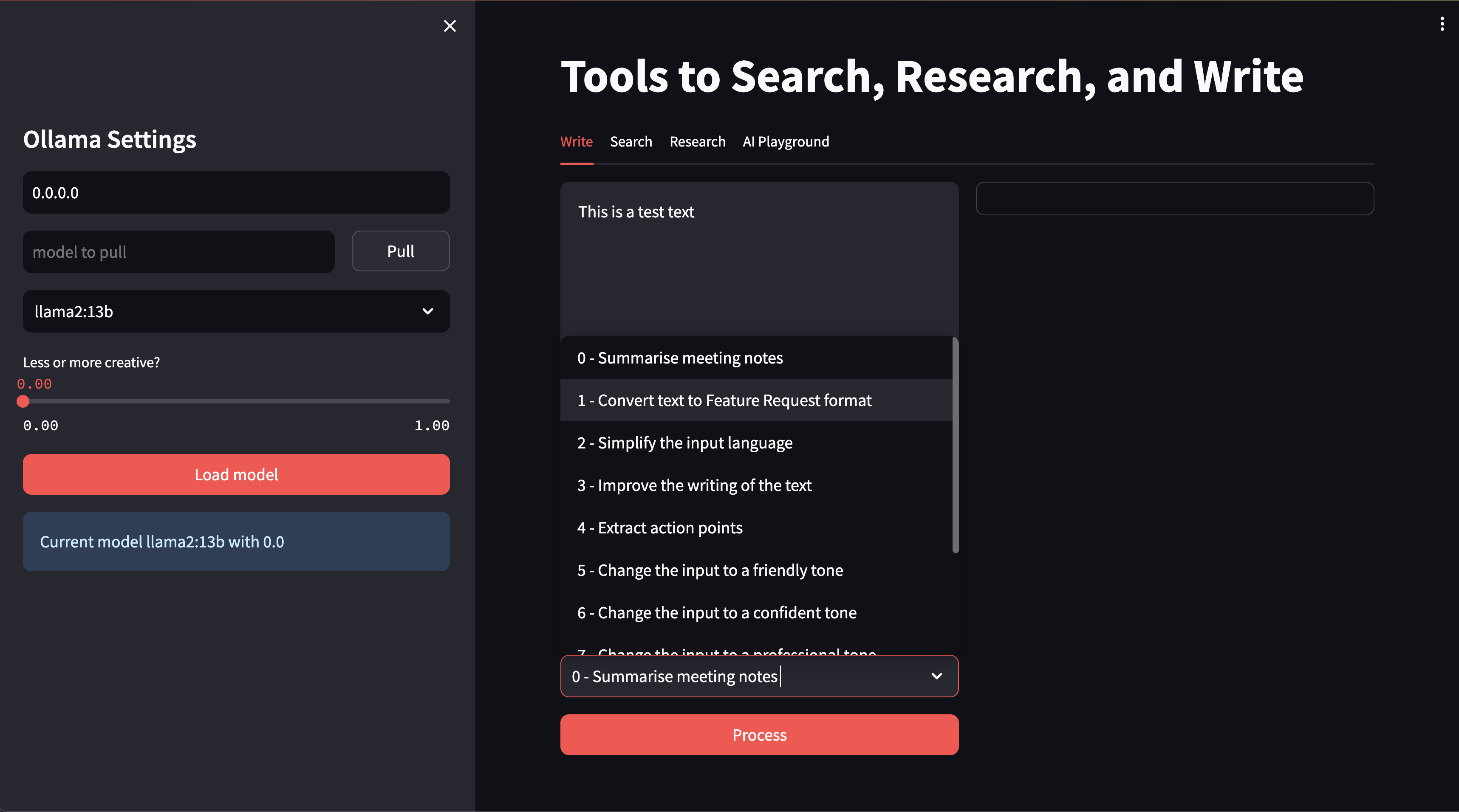

This is a tool to help HashiCorp Solutions Engineers in their day-to-day work.

Mac with M1 Pro

- Ollama - https://ollama.com/

- Docker

- GitHub access

- Python 3.11

Clone the repo.

Create the following sub-folders:

- `cache_docker` (for Docker caching)

- `cache` (to use Python directly)

Go to https://ollama.com/library and download some models.
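The sub-folder step above can be scripted. The following is a minimal sketch, assuming the two folders live directly under the repository root; the helper name `create_subfolders` is hypothetical and not part of the repo.

```python
from pathlib import Path

# Folder names taken from the list above.
SUBFOLDERS = ("cache_docker", "cache")


def create_subfolders(repo_root: Path) -> list[Path]:
    """Create the cache folders under repo_root; existing folders are left alone."""
    created = []
    for name in SUBFOLDERS:
        folder = repo_root / name
        # exist_ok=True makes the call safe to repeat.
        folder.mkdir(parents=True, exist_ok=True)
        created.append(folder)
    return created


if __name__ == "__main__":
    # Assumption: the script is run from the root of the cloned repo.
    for folder in create_subfolders(Path.cwd()):
        print(f"ready: {folder}")
```

Running it a second time changes nothing, so it is safe to keep in a setup script.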

- Have Ollama running (see `run_ollama.sh`).

- Set `OLLAMA_HOST` in `docker-compose.yaml` to the IP of your machine.

- Run `docker compose up -d`.
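Inside a container, `localhost` refers to the container itself, which is presumably why `OLLAMA_HOST` needs the IP of your machine. The sketch below is one way to look that IP up with the Python standard library. The function name `local_ip` is hypothetical, and the exact value format expected in `docker-compose.yaml` (bare IP or full URL) is not confirmed here, so check the file before pasting the result.

```python
import socket


def local_ip() -> str:
    """Best-effort guess of this machine's LAN IP address."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        # connect() on a UDP socket sends no packets; it only asks the OS
        # which local interface would be used to reach the given address.
        # 192.0.2.1 is a documentation-only placeholder address.
        sock.connect(("192.0.2.1", 80))
        return sock.getsockname()[0]
    except OSError:
        # No usable route (for example, offline): fall back to loopback.
        return "127.0.0.1"
    finally:
        sock.close()


if __name__ == "__main__":
    print(f"Candidate value for OLLAMA_HOST: {local_ip()}")
```

On a machine with several network interfaces the result may not be the one you want, so treat it as a starting point.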

Note: Once I have a Docker container published, this will be easier.

- Have Ollama running (see `run_ollama.sh`).

- Run from source with `run_streamlit.sh`.

The answers are only as good as the LLM, the prompt, and the embeddings behind them.

For RAG, create embeddings with the `embed_hashicorp.py` script (see `run_embed.sh`) or, if you work for HashiCorp, contact me directly.
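To illustrate why embedding quality matters for RAG, here is a generic sketch of the retrieval step: chunks whose embedding vectors are closest to the query vector are passed to the LLM as context. This is not taken from `embed_hashicorp.py`; the function names and the three-dimensional toy vectors are made up for illustration, and real embeddings have hundreds of dimensions.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query_vec: list[float],
          indexed_chunks: list[tuple[str, list[float]]],
          k: int = 2) -> list[str]:
    """Return the k chunk texts most similar to the query vector."""
    scored = [(cosine_similarity(query_vec, vec), text)
              for text, vec in indexed_chunks]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [text for _, text in scored[:k]]


if __name__ == "__main__":
    # Toy index: (chunk text, made-up embedding vector).
    index = [
        ("chunk about topic A", [1.0, 0.0, 0.0]),
        ("chunk about topic B", [0.0, 1.0, 0.0]),
        ("chunk close to topic A", [0.9, 0.1, 0.0]),
    ]
    print(top_k([1.0, 0.0, 0.0], index, k=2))
```

If the embeddings place unrelated chunks close to the query, the LLM receives the wrong context, no matter how good the model or prompt is.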