Create environment

Create conda environment:

conda create --name director python=3.10 -y

conda activate director

Install dependencies:

conda install pytorch pytorch-cuda=12.1 -c pytorch -c nvidia

conda install pytorch3d -c pytorch3d

pip install -r requirements.txt

Note: pytorch3d installation can be tricky. Please follow the official guidelines if you encounter any issues.
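After installation, a quick check can confirm that the main packages are visible from the active environment. The sketch below is a minimal example, not part of the repository; it assumes the top-level import names are `torch` and `pytorch3d`, and it only looks the modules up without importing them.

```python
import importlib.util

# Assumed top-level import names for the packages installed above.
REQUIRED_MODULES = ("torch", "pytorch3d")


def find_missing(modules=REQUIRED_MODULES):
    """Return the names in `modules` that cannot be located in this environment."""
    return [name for name in modules if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = find_missing()
    if missing:
        print("Missing modules:", ", ".join(missing))
    else:
        print("All required modules were found.")
```

Run it with the director environment activated; a module listed as missing suggests that the corresponding install step did not complete.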

Set up the dataset

Check the data repository here.

First, install git lfs by following the instructions here.

To get the data, run:

cd /PATH/TO/THE/DATASET

git clone https://huggingface.co/datasets/robin-courant/et-data

Prepare the dataset (untar archives):

cd et-data

sh untar_and_move.sh

Then copy or symlink the et-data folder in ./data:

cd /PATH/TO/CLaTr

ln -s /PATH/TO/THE/DATASET/et-data ./data
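To confirm that the link points where you expect, something like the following can be run from the repository root. This is an illustrative sketch: the helper name `describe_data_dir` is hypothetical, and it only inspects the path without checking the dataset contents.

```python
from pathlib import Path


def describe_data_dir(path="./data"):
    """Report whether `path` is a directory, whether it is a symlink, and its target."""
    p = Path(path)
    return {
        "exists": p.is_dir(),          # follows symlinks, so a broken link gives False
        "is_symlink": p.is_symlink(),
        "resolved": str(p.resolve()),  # absolute path with symlinks resolved
    }


if __name__ == "__main__":
    for key, value in describe_data_dir().items():
        print(f"{key}: {value}")
```

If `exists` is false while `is_symlink` is true, the link is broken and the dataset path passed to `ln -s` is probably wrong.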

Download checkpoints

Run:

sh download_checkpoints.sh

Note: You can specify the:

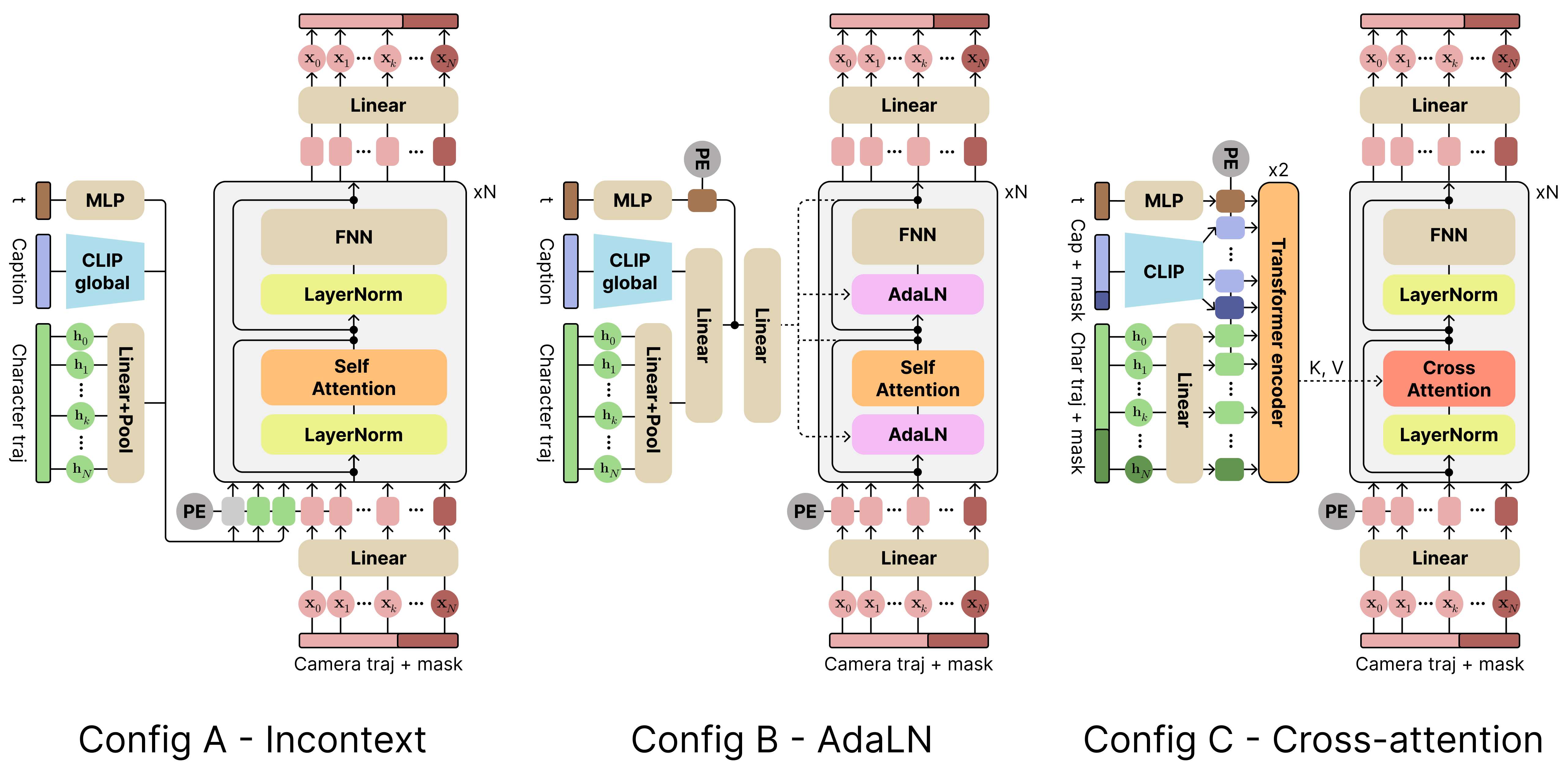

- DIRECTOR architecture, i.e. A:

incontext_director, B:adaln_director, C:ca_director. - Subset,

pure(only simple camera trajectories) ormixed(simple and complex camera trajectories).

To launch training, run:

HYDRA_FULL_ERROR=1 python src/train.py \
diffuser/network/module=ARCHITECTURE_NAME dataset.trajectory.set_name=SUBSET_NAME
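If you script several training runs, it can help to validate the two placeholders before launching. The helper below is a hypothetical sketch (the function `train_command` is not part of the repository); it accepts only the architecture and subset names listed in this README and returns the argument list for the command above.

```python
import shlex

# Names taken from this README.
ARCHITECTURES = ("incontext_director", "adaln_director", "ca_director")
SUBSETS = ("pure", "mixed")


def train_command(architecture, subset):
    """Build the training command as an argument list, rejecting unknown names."""
    if architecture not in ARCHITECTURES:
        raise ValueError(f"unknown architecture: {architecture!r}")
    if subset not in SUBSETS:
        raise ValueError(f"unknown subset: {subset!r}")
    return [
        "python",
        "src/train.py",
        f"diffuser/network/module={architecture}",
        f"dataset.trajectory.set_name={subset}",
    ]


if __name__ == "__main__":
    # Print the shell command; HYDRA_FULL_ERROR=1 is set as an environment variable.
    print("HYDRA_FULL_ERROR=1 " + shlex.join(train_command("ca_director", "mixed")))
```

The list form can also be passed to `subprocess.run` with `HYDRA_FULL_ERROR` added to the environment.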

To launch evaluation, run:

HYDRA_FULL_ERROR=1 python src/evaluate.py \
compnode.num_gpus=1 \
diffuser/network/module=ARCHITECTURE_NAME dataset.trajectory.set_name=SUBSET_NAME \
checkpoint_path=CHECKPOINT_PATH diffuser.guidance_weight=GUIDANCE_WEIGHT
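Each argument after the script name is a key=value override, and with several of them on one command it is easy to set the same key twice by accident. The sketch below shows one way to catch that before launching; `duplicate_keys` is a hypothetical helper, not a Hydra or repository function, and it treats everything before the first `=` as the key.

```python
from collections import Counter


def duplicate_keys(overrides):
    """Return, in sorted order, the override keys that appear more than once."""
    # Strip the optional "+" / "~" prefixes used by Hydra's override syntax.
    keys = [item.split("=", 1)[0].lstrip("+~") for item in overrides]
    return sorted(key for key, count in Counter(keys).items() if count > 1)


if __name__ == "__main__":
    overrides = [
        "compnode.num_gpus=1",
        "diffuser/network/module=ARCHITECTURE_NAME",
        "dataset.trajectory.set_name=SUBSET_NAME",
        "checkpoint_path=CHECKPOINT_PATH",
        "diffuser.guidance_weight=GUIDANCE_WEIGHT",
    ]
    print(duplicate_keys(overrides))
```

An empty list means every key is set once.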

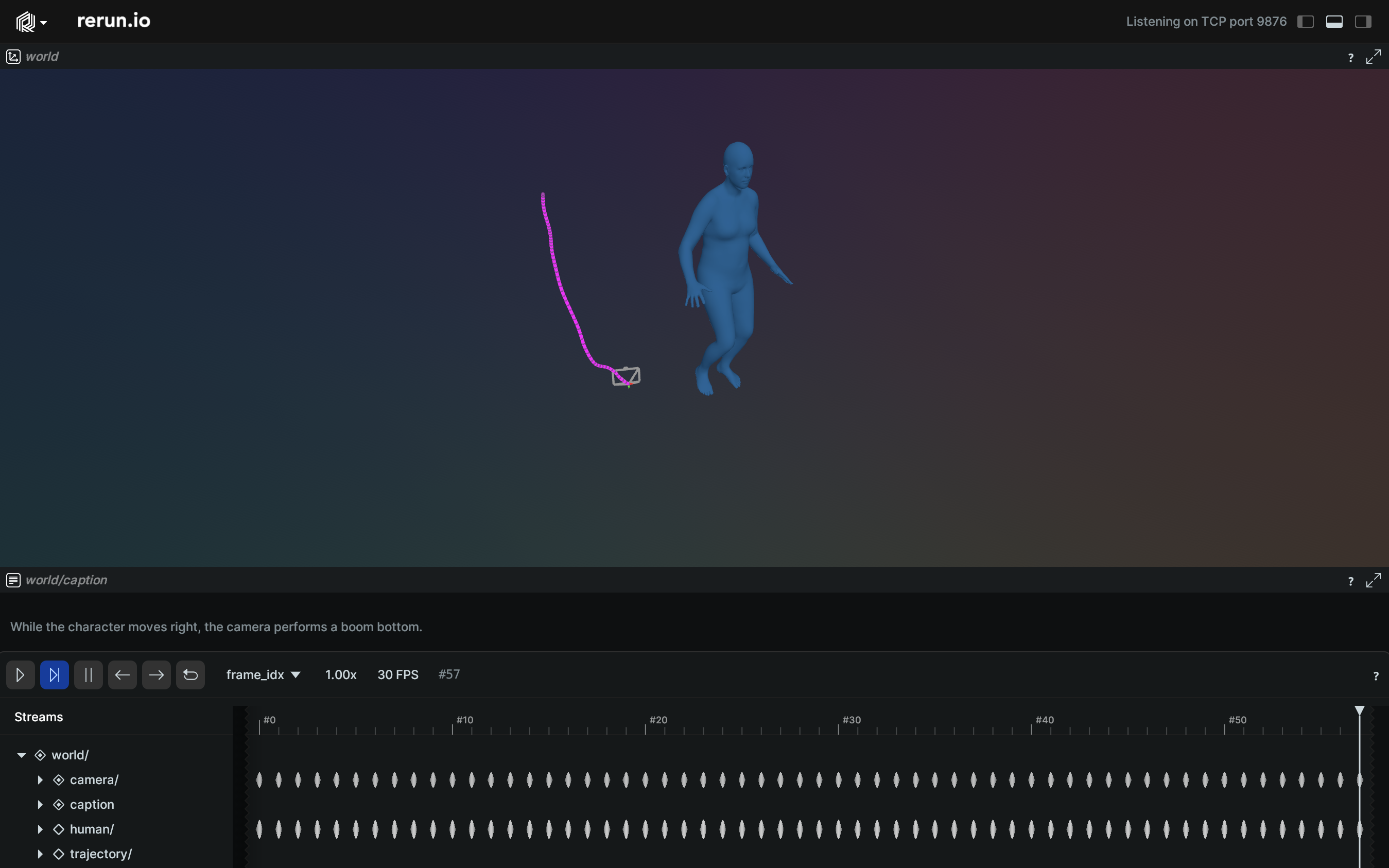

There are three ways to visualize results: Blender, Rerun, and Gradio.

Note: You will need meshes, which are not yet released with the dataset.

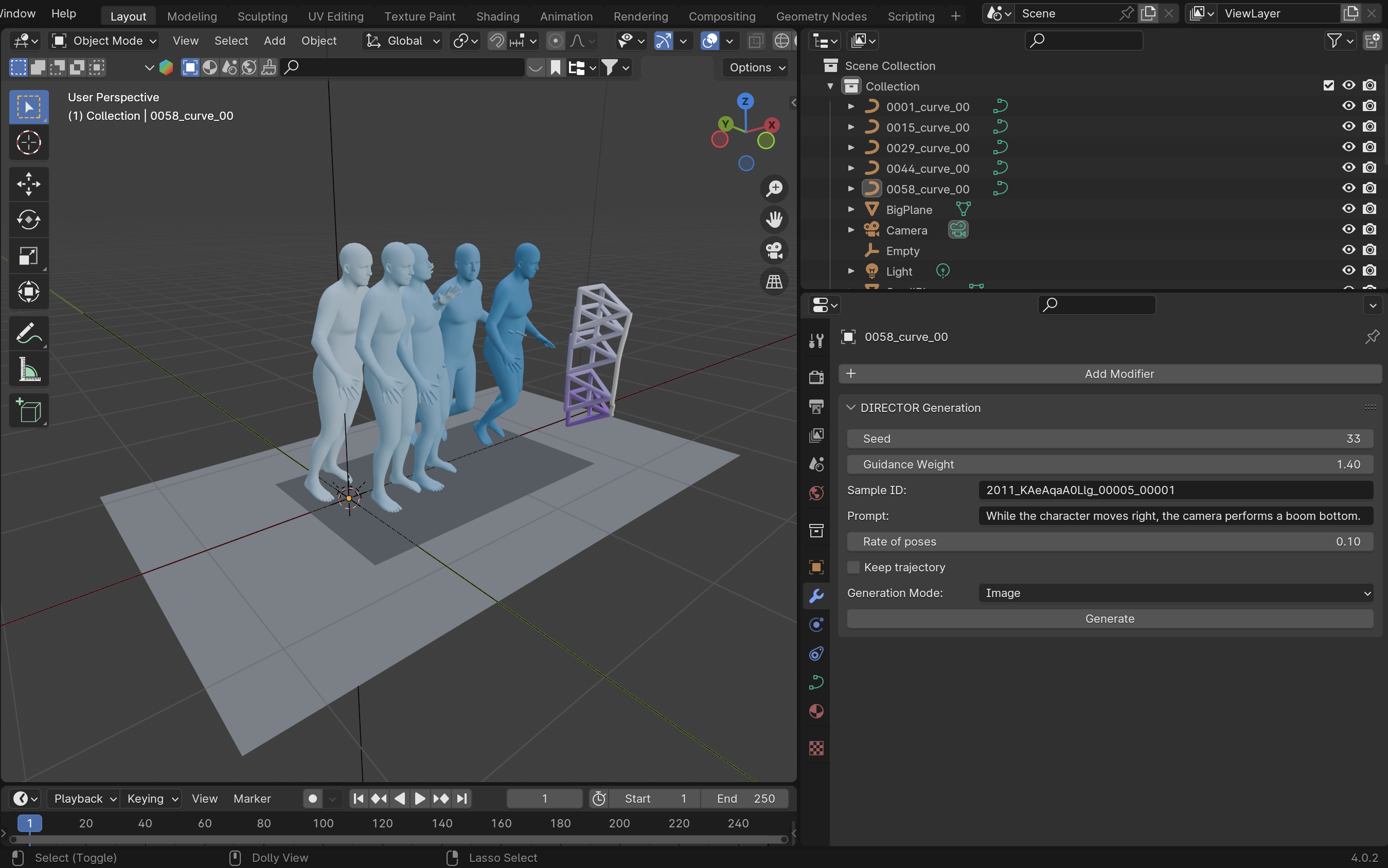

Blender visualization

First, install blender:

- Follow the official instructions.

- Locate the python installation used by conda with the following line (/PATH/TO/CONDA/ENV/):

conda env list | grep '*'

- Locate the python installation used by blender with the following line (/PATH/TO/BLENDER/python):

blender --background --python-expr "import sys; import os; print('\nThe path to the installation of python of blender can be:'); print('\n'.join(['- '+x.replace('/lib/python', '/bin/python') for x in sys.path if 'python' in (file:=os.path.split(x)[-1]) and not file.endswith('.zip')]))"

- Link conda env to blender python with the following line:

ln -s /PATH/TO/CONDA/ENV/ /PATH/TO/BLENDER/python
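The one-line Blender expression is dense, so here is the same filtering logic written out as a standalone function for readability. It is a sketch for understanding only: inside Blender the input would be `sys.path`, and the paths in the example are made up.

```python
import os


def candidate_python_paths(search_paths):
    """Guess interpreter locations from module search paths.

    Keeps entries whose last path component contains "python" and is not a
    .zip archive, then swaps "/lib/python" for "/bin/python".
    """
    candidates = []
    for entry in search_paths:
        name = os.path.split(entry)[-1]
        if "python" in name and not name.endswith(".zip"):
            candidates.append(entry.replace("/lib/python", "/bin/python"))
    return candidates


if __name__ == "__main__":
    # Hypothetical search paths, for illustration only.
    example = [
        "/opt/blender/python/lib/python3.10",
        "/opt/blender/python/lib/python310.zip",
        "/opt/blender/scripts/modules",
    ]
    for path in candidate_python_paths(example):
        print("-", path)
```

The result is a list of candidates, which is why the original message says the path "can be" one of them; check that the chosen one actually exists before linking.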

To launch Blender through the command line, run:

blender PATH/TO/BLENDER_FILENAME

Then, in Blender, go to the Scripting tab and open visualization/blender_viz.py.

Next, go to the Modifiers tab (wrench tool icon), enter your desired parameters, and generate your scene.

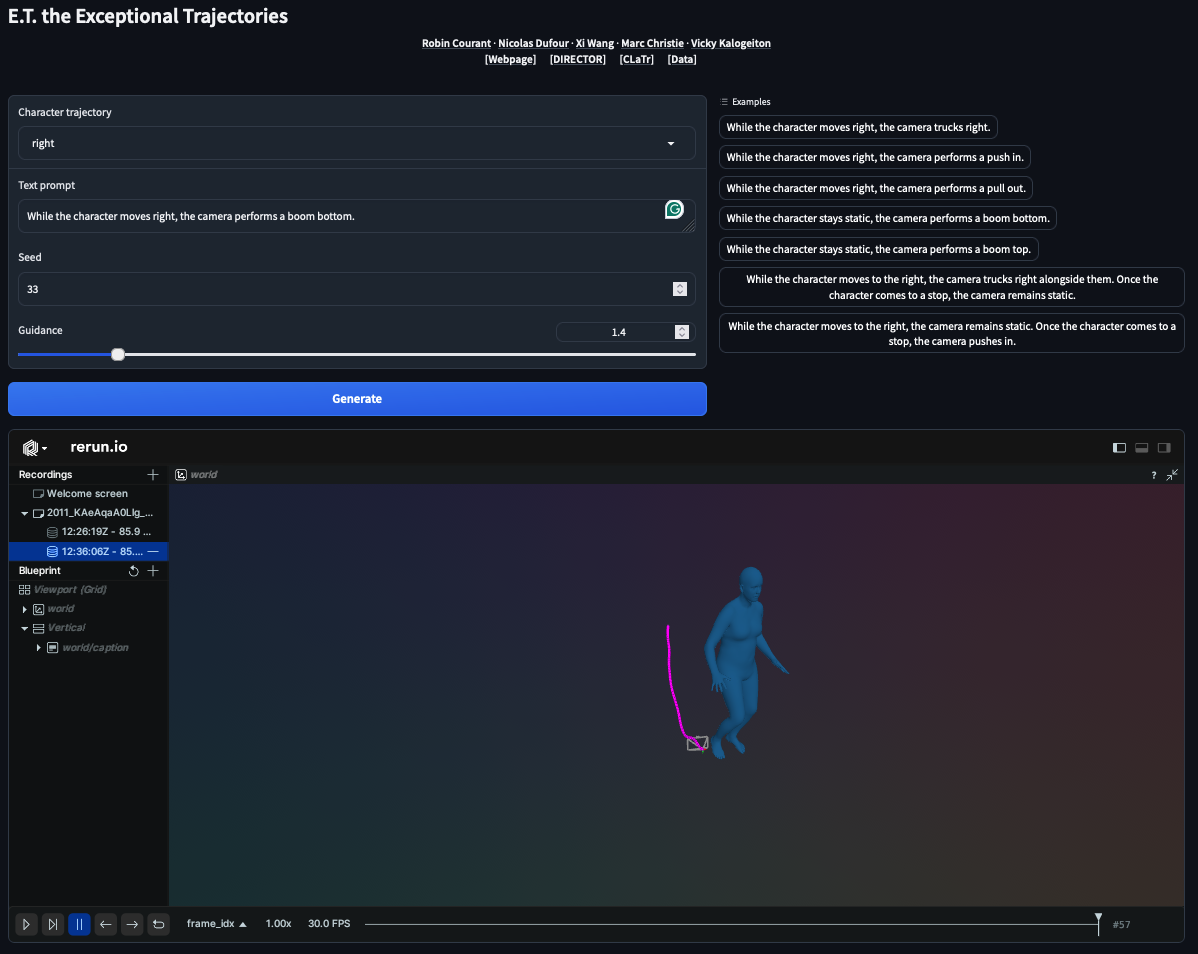

Gradio visualization

To launch the Gradio visualization script, run:

HYDRA_FULL_ERROR=1 python visualization/gradio_viz.py

This code is distributed under the MIT License.

Note that our code depends on other libraries, including PyTorch, PyTorch3D, Hugging Face, Hydra, and uses datasets which each have their own respective licenses that must also be followed.