Final project on video question answering for the Master MVA Recvis 2020/2021 class. Given a video, the goal is to automatically answer questions about what is happening in it.

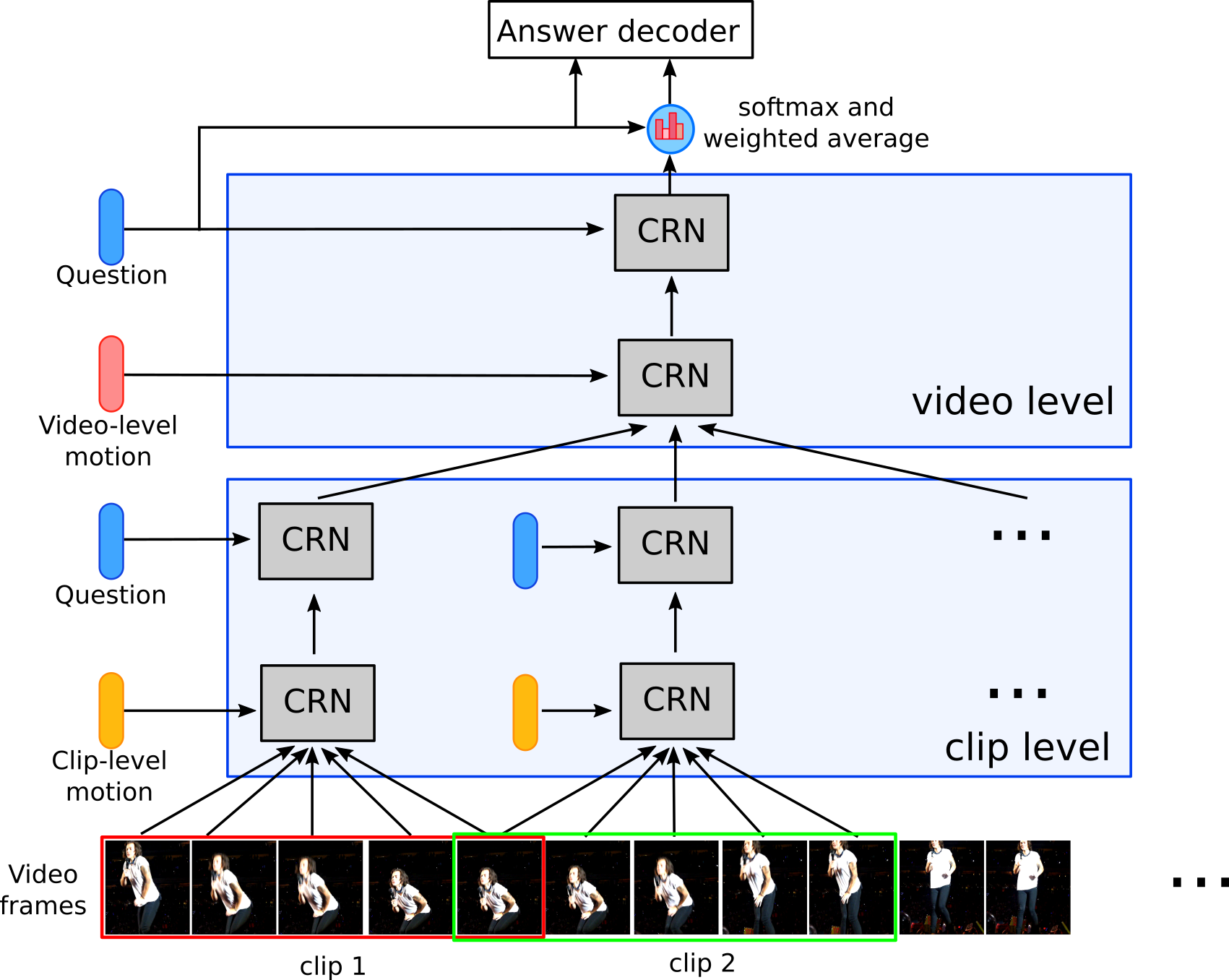

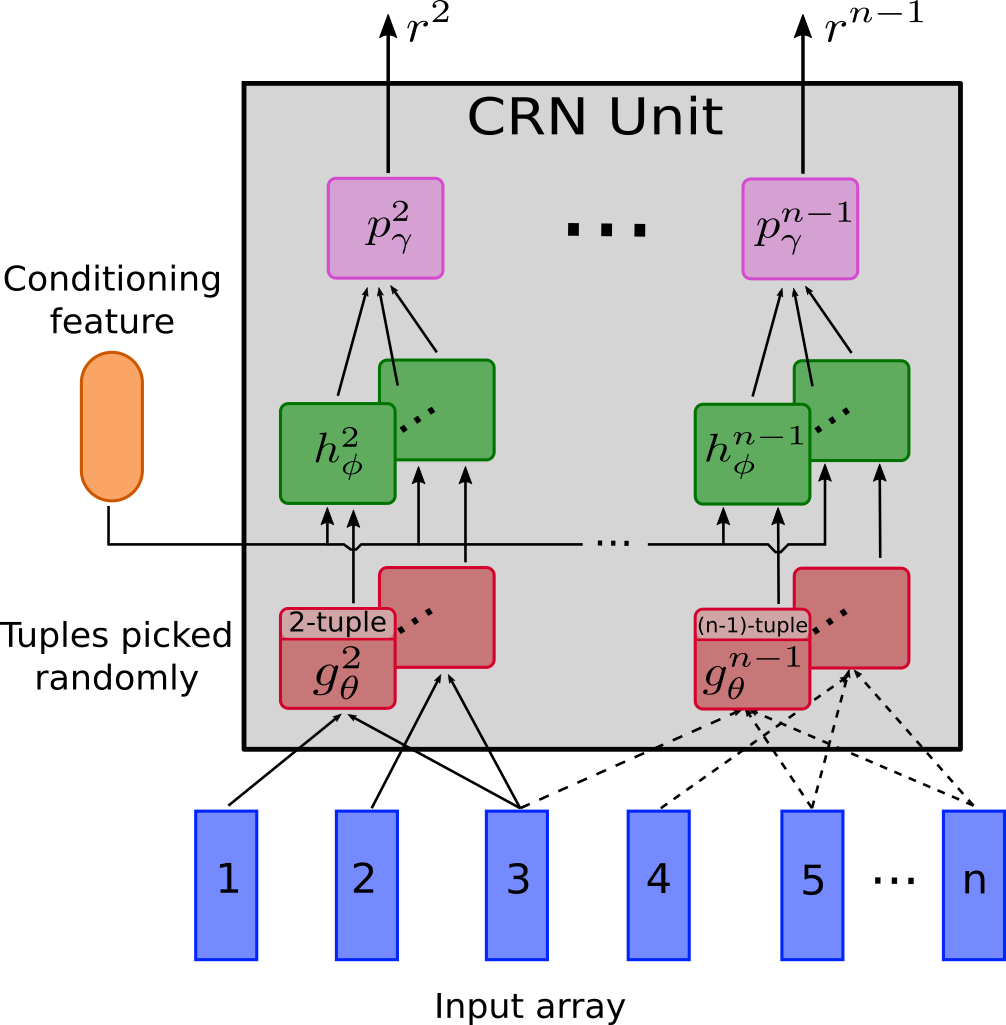

This project is based on the paper "Hierarchical Conditional Relation Networks for Video Question Answering" (Le et al., 2020).

We build upon it by replacing the GloVe text encoding with a BERT text encoder and by adapting the multimodal setup to include the subtitle modality of the TVQA dataset. The following visuals were taken from the paper by Le et al. and illustrate how the HCRN model works.
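
As a rough illustration of how a question and its subtitles could be presented to a BERT-style encoder, the sketch below builds the standard sentence-pair layout (`[CLS] question [SEP] subtitles [SEP]`) together with segment ids. This is not necessarily how this project feeds text to the model: the function name `build_pair_input`, the `max_len` parameter, and the whitespace tokenization (a stand-in for BERT's WordPiece tokenizer) are all assumptions made for the example.

```python
from typing import List, Tuple


def build_pair_input(
    question: str, subtitles: str, max_len: int = 32
) -> Tuple[List[str], List[int]]:
    """Build a BERT-style sentence-pair input (hypothetical helper).

    Whitespace splitting stands in for a real WordPiece tokenizer.
    """
    q_tokens = question.lower().split()
    s_tokens = subtitles.lower().split()

    # Reserve three positions for [CLS] and the two [SEP] markers,
    # then truncate the subtitles to whatever room is left.
    budget = max_len - 3 - len(q_tokens)
    if budget < 0:
        raise ValueError("question alone exceeds max_len")
    s_tokens = s_tokens[:budget]

    tokens = ["[CLS]"] + q_tokens + ["[SEP]"] + s_tokens + ["[SEP]"]
    # Segment 0 covers [CLS], the question, and the first [SEP];
    # segment 1 covers the subtitles and the final [SEP].
    segment_ids = [0] * (len(q_tokens) + 2) + [1] * (len(s_tokens) + 1)
    return tokens, segment_ids


if __name__ == "__main__":
    toks, segs = build_pair_input(
        "Who opens the door ?", "Come in , it is open .", max_len=12
    )
    print(toks)
    print(segs)
```

In this sketch the subtitles, not the question, are truncated when the pair is too long, on the assumption that the question must always be kept whole.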