Code repository for the paper: MultiPhys: Multi-Person Physics-aware 3D Motion Estimation

Nicolas Ugrinovic, Boxiao Pan, Georgios Pavlakos, Despoina Paschalidou, Bokui Shen, Jordi Sanchez-Riera, Francesc Moreno-Noguer, Leonidas Guibas

[2024/06] Demo code release!

This code was tested on Ubuntu 20.04 LTS and requires a CUDA-capable GPU.

- First, clone the repository:

git clone https://github.com/nicolasugrinovic/multiphys.git
cd multiphys

- Set up the conda environment by running the following command:

bash install_conda.sh

We also include the following steps for trouble-shooting.

EITHER:

- Manually install the env and dependencies

conda create -n multiphys python=3.9 -y
conda activate multiphys
# install pytorch using pip, update with appropriate cuda drivers if necessary
pip install torch==1.13.0 torchvision==0.14.0 --index-url https://download.pytorch.org/whl/cu117
# uncomment if pip installation isn't working
# conda install pytorch=1.13.0 torchvision=0.14.0 pytorch-cuda=11.7 -c pytorch -c nvidia -y
# install remaining requirements
pip install -r requirements.txt

OR:

- Create environment

We use PyTorch 1.13.0 with CUDA 11.7. Use env_build.yaml to speed up installation using already-solved dependencies, though it might not be compatible with your CUDA driver.

conda env create -f env_build.yml
conda activate multiphys
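After either installation route, it can help to confirm that the environment actually contains the pinned PyTorch versions. The following is a minimal sketch, not part of the repository: the function name `check_pins` and the `EXPECTED` table are illustrative, and the versions are simply those from the manual install commands above.

```python
from importlib import metadata

# Versions taken from the manual install commands in this README.
EXPECTED = {"torch": "1.13.0", "torchvision": "0.14.0"}


def check_pins(expected=EXPECTED):
    """Return {package: (expected, installed_or_None, matches)}."""
    report = {}
    for name, wanted in expected.items():
        try:
            installed = metadata.version(name)
        except metadata.PackageNotFoundError:
            installed = None
        # Wheels from a CUDA-specific index may carry a local suffix
        # such as "+cu117", so compare only the part before "+".
        matches = installed is not None and installed.split("+")[0] == wanted
        report[name] = (wanted, installed, matches)
    return report


if __name__ == "__main__":
    for name, (wanted, installed, ok) in check_pins().items():
        status = "OK" if ok else "MISMATCH"
        print(f"{name}: expected {wanted}, found {installed} [{status}]")
```

Run it inside the activated `multiphys` environment; a `MISMATCH` line suggests the wrong environment is active or the install step did not complete.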


- Download and set up MuJoCo:

wget https://github.com/deepmind/mujoco/releases/download/2.1.0/mujoco210-linux-x86_64.tar.gz
tar -xzf mujoco210-linux-x86_64.tar.gz
mkdir ~/.mujoco
mv mujoco210 ~/.mujoco/
export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:~/.mujoco/mujoco210/bin

If you have any problems with this, please follow the instructions in the EmbodiedPose repo regarding MuJoCo.
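As a quick sanity check of the MuJoCo step, the sketch below looks for the `~/.mujoco/mujoco210/bin` directory and checks that it appears in `LD_LIBRARY_PATH`. It is an illustrative helper, not part of the repository; the function name `mujoco_problems` is hypothetical, and it assumes the path is stored in `LD_LIBRARY_PATH` in fully expanded form (which is what the shell produces for the `export` line above).

```python
import os
from pathlib import Path


def mujoco_problems(home=None, env=None):
    """List problems with the MuJoCo 2.1.0 setup; an empty list means none found."""
    home = Path(home) if home is not None else Path.home()
    env = os.environ if env is None else env
    bin_dir = home / ".mujoco" / "mujoco210" / "bin"

    problems = []
    if not bin_dir.is_dir():
        problems.append(f"missing directory: {bin_dir}")

    # LD_LIBRARY_PATH is a colon-separated list of directories.
    entries = [Path(p) for p in env.get("LD_LIBRARY_PATH", "").split(":") if p]
    if bin_dir not in entries:
        problems.append(f"LD_LIBRARY_PATH does not contain {bin_dir}")
    return problems


if __name__ == "__main__":
    for problem in mujoco_problems() or ["no problems found"]:
        print(problem)
```

Note that `export` only affects the current shell session, so the second check can fail in a new terminal even when the files are in place.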

- Download the data for the demo, which includes the models used:

bash fetch_demo_data.sh

Trouble-shooting

- (optional) Our code uses EGL to render MuJoCo simulation results in a headless fashion, so you need to have EGL installed.

You MAY need to run the following or similar commands, depending on your system:

sudo apt-get install libglfw3-dev libgles2-mesa-dev
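To see whether an EGL library is discoverable before running the demo, a small check along these lines may help. This is a sketch under assumptions: `egl_status` is a hypothetical helper, it only reports whether the system linker can locate a library named `EGL`, and it does not verify that headless rendering actually works. It also reports the `MUJOCO_GL` variable, which the single-sequence demo commands in this README set to `egl`.

```python
import ctypes.util
import os


def egl_status(env=None):
    """Report the MUJOCO_GL setting and whether an EGL library can be located."""
    env = os.environ if env is None else env
    return {
        "mujoco_gl": env.get("MUJOCO_GL"),
        "mujoco_gl_is_egl": env.get("MUJOCO_GL") == "egl",
        # find_library returns a library file name, or None if nothing is found.
        "egl_library": ctypes.util.find_library("EGL"),
    }


if __name__ == "__main__":
    for key, value in egl_status().items():
        print(f"{key}: {value}")
```

If `egl_library` is `None`, installing the packages listed above (or their equivalents for your distribution) is a reasonable first step.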

The data used here, including the SLAHMR estimates, should have been downloaded and placed in the correct folders by the fetch_demo_data.sh script.

Run the demo script. You can use the following command:

EITHER, to generate several sequences:

bash run_demo.sh

OR, to generate one sequence:

# replace /home/nugrinovic with your own home directory

export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/usr/lib/nvidia:/home/nugrinovic/.mujoco/mujoco210/bin;

export MUJOCO_GL='egl';

# generate sequence

# expi sequences

python run.py --cfg tcn_voxel_4_5_chi3d_multi_hum --data sample_data/expi/expi_acro1_p1_phalpBox_all_slaInit_slaCam.pkl --data_name expi --name slahmr_override_loop2 --loops_uhc 2 --filter acro1_around-the-back1_cam20

Trouble-shooting

- If you have any issues when running mujoco_py for the first time while compiling, take a look at this github issue: mujoco_py issue

This will generate a video for each sample that appears in the paper and in the paper's video. Results are saved in the results/scene+/tcn_voxel_4_5_chi3d_multi_hum/results folder. For each dataset, this will generate a folder with the results, following the structure:

<dataset-name>
├── slahmr_override_loop2
    ├── <subject-name>
        ├── <action-name>
            ├── <date>
                ├── 1_results_w_2d_p1.mp4
                ├── ...

- Demo/inference code

- Data pre-processing code

- Evaluation
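For inspecting demo output, the results folder layout described earlier can be walked with a few lines of Python. This is a minimal sketch, not part of the repository: `find_result_videos` is a hypothetical helper, and the only assumption it makes is that the generated videos are `.mp4` files somewhere below the results folder.

```python
from pathlib import Path


def find_result_videos(results_root, dataset=None):
    """Return a sorted list of all .mp4 files under the results folder.

    If `dataset` is given (for example "expi"), only that dataset's
    sub-folder is searched.
    """
    root = Path(results_root)
    if dataset is not None:
        root = root / dataset
    return sorted(root.rglob("*.mp4"))


if __name__ == "__main__":
    # Results path as stated in this README.
    root = "results/scene+/tcn_voxel_4_5_chi3d_multi_hum/results"
    for video in find_result_videos(root):
        print(video)
```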

Parts of the code are taken or adapted from the following amazing repos:

If you find this code useful for your research, please consider citing the following paper:

@inproceedings{ugrinovic2024multiphys,

author={Ugrinovic, Nicolas and Pan, Boxiao and Pavlakos, Georgios and Paschalidou, Despoina and Shen, Bokui and Sanchez-Riera, Jordi and Moreno-Noguer, Francesc and Guibas, Leonidas},

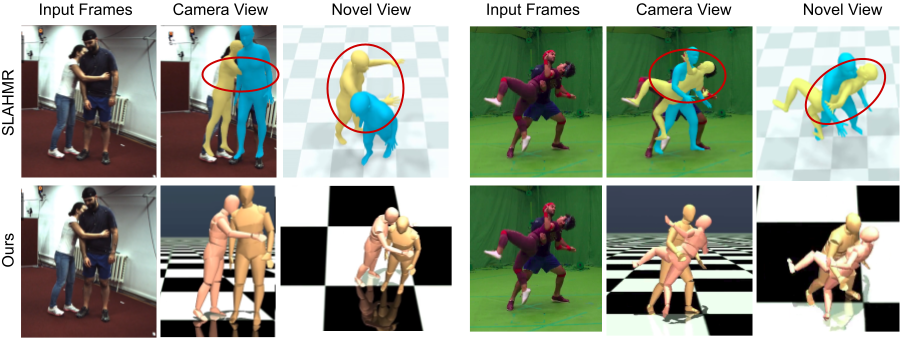

title={MultiPhys: Multi-Person Physics-aware 3D Motion Estimation},

booktitle={Conference on Computer Vision and Pattern Recognition (CVPR)},

year={2024}

}