Generating painting processes with diffusion models

TODO:

- Basic Inference Code Release

- Full Inference Code Release

- Training Code Release

- Training Dataset Release

- Checkpoints Release

- More Examples

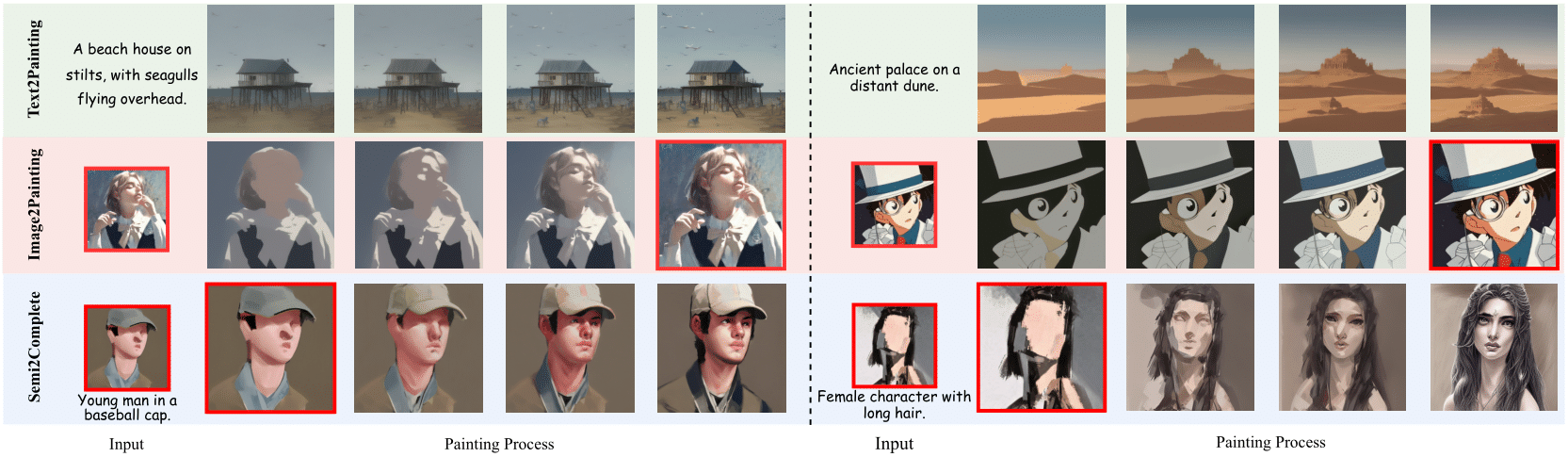

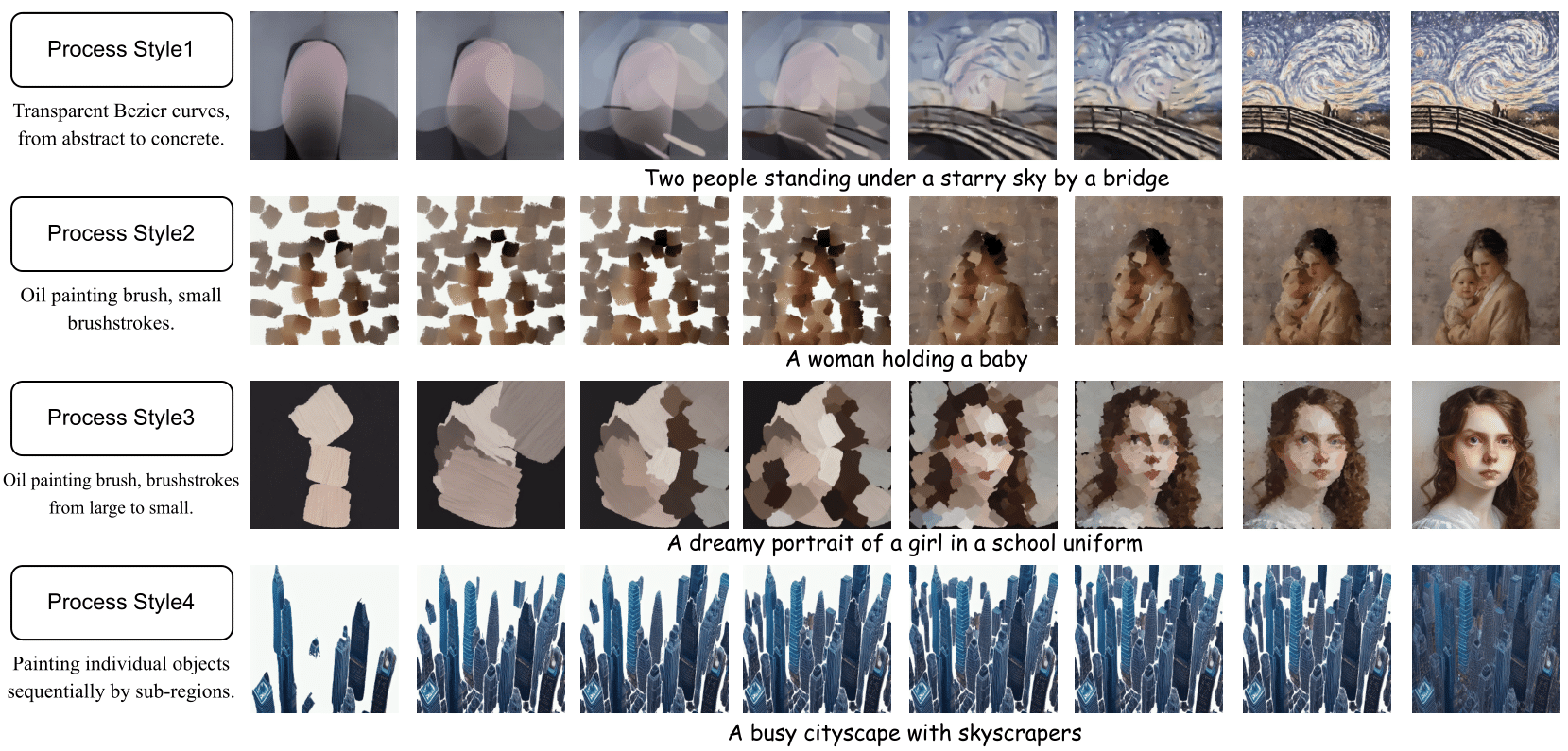

ProgressPainter is a model based on AnimateDiff that generates human-like painting processes.

We pretrained the painting module using multiple traditional methods for reconstructing painting processes.
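The README does not say exactly how these reconstruction methods feed the pretraining. One plausible reading, sketched here purely as an assumption, is that a stroke-based method (such as Learning to Paint or Paint Transformer) decomposes a finished image into an ordered list of strokes, and rendering those strokes one by one yields a painting-process clip. The stroke format (axis-aligned rectangles with a gray value), `render_process`, and the evenly spaced frame sampling are all hypothetical. Real stroke renderers draw curved or rotated brush strokes instead.

```python
from typing import List, Tuple

# Hypothetical stroke format: (x0, y0, x1, y1, gray value); x1 and y1 are exclusive.
Stroke = Tuple[int, int, int, int, int]
Canvas = List[List[int]]


def apply_stroke(canvas: Canvas, stroke: Stroke) -> None:
    """Paint one rectangular stroke onto the canvas in place."""
    x0, y0, x1, y1, value = stroke
    for y in range(y0, y1):
        for x in range(x0, x1):
            canvas[y][x] = value


def render_process(strokes: List[Stroke], width: int, height: int,
                   num_frames: int, background: int = 255) -> List[Canvas]:
    """Sample num_frames canvases evenly along the stroke sequence.

    The first frame is the blank canvas and the last frame contains every
    stroke, so the clip runs from empty canvas to finished painting.
    """
    if num_frames < 2:
        raise ValueError("num_frames must be at least 2")
    canvas = [[background] * width for _ in range(height)]
    # How many strokes should be visible in each frame (non-decreasing).
    targets = [round(i * len(strokes) / (num_frames - 1)) for i in range(num_frames)]
    frames: List[Canvas] = []
    painted = 0
    for target in targets:
        while painted < target:
            apply_stroke(canvas, strokes[painted])
            painted += 1
        frames.append([row[:] for row in canvas])  # snapshot, not a shared reference
    return frames


# Example: two strokes rendered into a 3-frame clip on a 4x4 canvas.
example_strokes = [(0, 0, 2, 2, 0), (2, 2, 4, 4, 128)]
example_frames = render_process(example_strokes, width=4, height=4, num_frames=3)
```

Copying each row when taking a snapshot matters: appending `canvas` itself would make every frame show the final painting.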

We then fine-tuned the painting module with LoRA (Low-Rank Adaptation) on only a small number of real-world painting processes, which yields high-quality generated painting processes.
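For readers unfamiliar with LoRA: the pretrained weight W stays frozen, and fine-tuning learns only a low-rank update scaled by alpha / r, so the effective weight is W + (alpha / r) · B A. The pure-Python sketch illustrates that idea on a single linear layer. It is not ProgressPainter's training code, and the class and helper names are illustrative. Initializing B to zero, as in the original LoRA formulation, means the adapted layer starts out identical to the pretrained one.

```python
import random
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product a @ b."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Matrix-vector product m @ v."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


class LoRALinear:
    """Frozen linear weight plus a trainable rank-r update (alpha / r) * B @ A."""

    def __init__(self, weight: Matrix, rank: int, alpha: float, seed: int = 0):
        d_out, d_in = len(weight), len(weight[0])
        rng = random.Random(seed)
        self.weight = weight  # pretrained and frozen: never updated during fine-tuning
        # A: rank x d_in with small Gaussian init; B: d_out x rank with zero init,
        # so B @ A == 0 and the layer initially matches the pretrained one.
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(rank)]
        self.B = [[0.0] * rank for _ in range(d_out)]
        self.scale = alpha / rank

    def forward(self, x: Vector) -> Vector:
        base = matvec(self.weight, x)
        update = matvec(self.B, matvec(self.A, x))  # never materializes B @ A
        return [b + self.scale * u for b, u in zip(base, update)]

    def merged_weight(self) -> Matrix:
        """Fold the low-rank update into W for inference."""
        delta = matmul(self.B, self.A)
        return [[w + self.scale * d for w, d in zip(w_row, d_row)]
                for w_row, d_row in zip(self.weight, delta)]


# Example on a 2x3 layer with rank 1.
W = [[1.0, 0.0, 2.0], [0.0, 1.0, -1.0]]
layer = LoRALinear(W, rank=1, alpha=2.0)
x = [1.0, 2.0, 3.0]
before = layer.forward(x)  # equals W @ x because B is still zero
# Pretend fine-tuning moved A and B to these values.
layer.A = [[1.0, 0.0, 0.0]]
layer.B = [[0.5], [0.0]]
after = layer.forward(x)
```

Only A and B (here 3 + 2 values instead of the 6 in W) would be trained, which is why LoRA suits fine-tuning on very few examples. `merged_weight` folds the update back into W, so inference costs the same as the base layer.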

Furthermore, by adding an image reference net, we can reconstruct the painting process of an existing painting or complete an unfinished painting process.
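The README does not describe how the image reference net conditions generation. A common pattern in reference-network designs, assumed here only for illustration, is reference attention: tokens from the reference image are appended to the keys and values of the denoiser's self-attention, so each generated token can draw appearance details from the reference. The sketch uses a single head, identity projections, and hypothetical function names.

```python
import math
from typing import List, Sequence

Vector = List[float]


def softmax(scores: Sequence[float]) -> Vector:
    """Numerically stable softmax."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries: Sequence[Vector], keys: Sequence[Vector],
              values: Sequence[Vector]) -> List[Vector]:
    """Single-head scaled dot-product attention with identity projections."""
    d = len(queries[0])
    out = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        out.append([sum(w * v[j] for w, v in zip(weights, values))
                    for j in range(len(values[0]))])
    return out


def reference_attention(frame_tokens: Sequence[Vector],
                        reference_tokens: Sequence[Vector]) -> List[Vector]:
    """Self-attention whose keys and values also include the reference-image tokens."""
    context = list(frame_tokens) + list(reference_tokens)
    return attention(frame_tokens, context, context)


# Example: two frame tokens attending to themselves plus one reference token.
frame = [[1.0, 0.0], [0.0, 1.0]]
reference = [[1.0, 1.0]]
with_ref = reference_attention(frame, reference)
without_ref = reference_attention(frame, [])  # reduces to plain self-attention
```

Because queries still come only from the frame tokens, the output keeps the frame's shape; the reference merely widens what each token can attend to.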

At least 30 GB of GPU memory is REQUIRED for a single inference run!
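A quick pre-flight check can catch an undersized GPU before a long model load. This sketch reads the device's total memory through PyTorch (`torch.cuda.get_device_properties(...).total_memory`) and compares it with the 30 GB requirement. Treating GB as GiB is an assumption, and total memory is only an upper bound, since other processes may already hold part of it.

```python
REQUIRED_GB = 30
BYTES_PER_GB = 1024 ** 3  # assumption: "GB" is treated as GiB


def has_enough_memory(total_bytes: int, required_gb: float = REQUIRED_GB) -> bool:
    """True if a device with total_bytes of memory meets the requirement."""
    return total_bytes >= required_gb * BYTES_PER_GB


def check_gpu(device_index: int = 0) -> bool:
    """Query the CUDA device through PyTorch and report whether it is large enough."""
    try:
        import torch  # imported lazily so the helper above works without PyTorch
    except ImportError:
        print("PyTorch is not installed; cannot query the GPU.")
        return False
    if not torch.cuda.is_available():
        print("No CUDA device found.")
        return False
    props = torch.cuda.get_device_properties(device_index)
    ok = has_enough_memory(props.total_memory)
    print(f"{props.name}: {props.total_memory / BYTES_PER_GB:.1f} GiB total, "
          f"{'OK' if ok else 'insufficient'}")
    return ok


if __name__ == "__main__":
    check_gpu()
```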

Download the pretrained models from the Hugging Face repo and place them in the corresponding subfolders of the models folder. For speedpaintings, you also need to download the DreamBooth model from the community HERE.
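A missing or misplaced checkpoint is easy to overlook. This sketch checks for the two paths that the download commands in this README create. The locations of the ProgressPainter checkpoints and the community DreamBooth model are not given, so they are left for you to append to the hypothetical `EXPECTED_PATHS` tuple.

```python
from pathlib import Path
from typing import List, Sequence

# Paths used by the download commands in this README. Add the ProgressPainter
# checkpoints and the community DreamBooth model once you know their locations.
EXPECTED_PATHS = (
    "models/DreamBooth_LoRA/v3_sd15_adapter.ckpt",
    "models/stable-diffusion-v1-5",
)


def missing_paths(root: str = ".", expected: Sequence[str] = EXPECTED_PATHS) -> List[str]:
    """Return the expected paths (relative to root) that do not exist yet."""
    base = Path(root)
    return [p for p in expected if not (base / p).exists()]


if __name__ == "__main__":
    missing = missing_paths()
    if missing:
        print("Missing files or folders:")
        for path in missing:
            print("  " + path)
    else:
        print("All expected model files were found.")
```

Run it from the repository root, the same directory the inference commands are run from.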

```bash
mkdir -p models/DreamBooth_LoRA
wget -O models/DreamBooth_LoRA/v3_sd15_adapter.ckpt https://huggingface.co/guoyww/animatediff/resolve/main/v3_sd15_adapter.ckpt

git lfs install
git clone https://huggingface.co/runwayml/stable-diffusion-v1-5 models/stable-diffusion-v1-5
```

Then run inference:

```bash
python scripts/animate.py --config configs/prompts/character.yaml

# speedpainting (requires the community DreamBooth model mentioned above)
python scripts/animate.py --config configs/prompts/speedpainting.yaml
```

Related works:

- Learning to Paint With Model-based Deep Reinforcement Learning

- Paint Transformer: Feed Forward Neural Painting with Stroke Prediction

- AnimateDiff: Animate Your Personalized Text-to-Image Diffusion Models without Specific Tuning

The training data comes from @"Doodling by the Lakeside". Thanks for his contribution.