This repository contains my solutions to the programming assignments of the Neural Networks and Deep Learning course at the University of Padova, a.y. 2022/2023.

In LSlinear_regression we provide a basic implementation of linear regression using least squares, both with and without regularization.
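As a rough illustration of the idea (not the notebook's actual code), least squares with an optional L2 penalty can be solved in closed form through the normal equations. The function name `fit_least_squares`, the penalty weight `lam`, and the toy data are assumptions made for this sketch. For simplicity the penalty is also applied to the bias term.

```python
import numpy as np

def fit_least_squares(X, y, lam=0.0):
    """Return the weights minimizing ||Xw - y||^2 + lam * ||w||^2.

    lam = 0 gives plain least squares; lam > 0 gives ridge regression.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    n_features = X.shape[1]
    # Normal equations: (X^T X + lam * I) w = X^T y
    A = X.T @ X + lam * np.eye(n_features)
    b = X.T @ y
    return np.linalg.solve(A, b)

# Hypothetical noise-free toy data: y = 1 + 2x, with a column of ones for the bias
x = np.linspace(0.0, 1.0, 20)
X = np.column_stack([np.ones_like(x), x])
y = 1.0 + 2.0 * x

w_plain = fit_least_squares(X, y)           # no regularization
w_ridge = fit_least_squares(X, y, lam=1.0)  # L2-regularized
```

On this data the unregularized fit recovers the bias and slope, while the regularized fit shrinks the weight vector toward zero.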

The notebook available at PyTorch provides a brief introduction to the core PyTorch functionality: defining tensors, using autograd, defining neural networks and datasets, and using optimizers and loss functions.
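A minimal sketch of two of these pieces, tensors and autograd, might look as follows. The values are arbitrary and chosen only for illustration.

```python
import torch

# A tensor whose operations are tracked for automatic differentiation
x = torch.tensor([1.0, 2.0, 3.0], requires_grad=True)

# Scalar function f(x) = sum_i x_i^2, whose gradient is 2x
f = (x ** 2).sum()

# Backpropagate: fills x.grad with df/dx
f.backward()
grad = x.grad
```

Here `grad` holds the gradient of `f` with respect to `x`, which for this function is twice the input.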

An example of a feed-forward neural network for linear regression, implemented with PyTorch, is available in the PyTorch_linear_regression notebook.

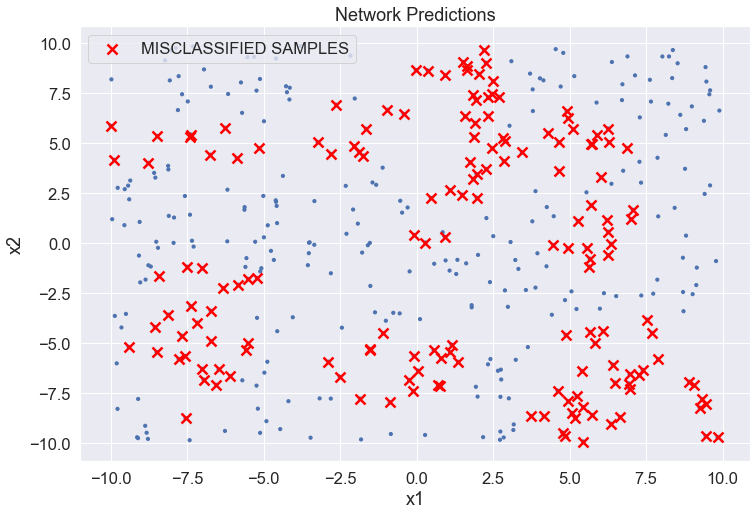

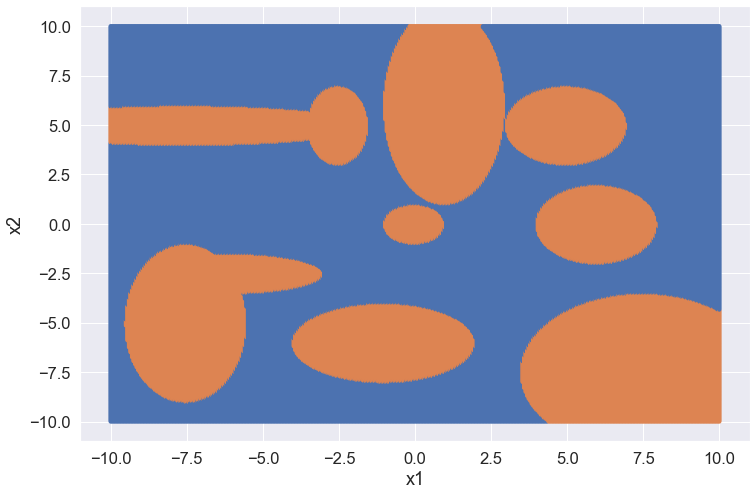
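The regression setup can be sketched with a single linear layer trained by gradient descent. This is a simplified stand-in, not the notebook's model: the synthetic data, learning rate, and number of steps are assumptions.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Hypothetical synthetic data: y = 2x + 1 plus small Gaussian noise
x = torch.linspace(0.0, 2.0, 50).unsqueeze(1)
y = 2.0 * x + 1.0 + 0.1 * torch.randn_like(x)

model = nn.Linear(1, 1)                       # one weight and one bias
loss_fn = nn.MSELoss()
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)

initial_loss = loss_fn(model(x), y).item()
for _ in range(500):
    optimizer.zero_grad()                     # clear gradients from the previous step
    loss = loss_fn(model(x), y)
    loss.backward()                           # compute gradients via autograd
    optimizer.step()                          # gradient descent update
final_loss = loss_fn(model(x), y).item()

weight = model.weight.item()
bias = model.bias.item()
```

After training, `weight` and `bias` should sit close to the slope and intercept used to generate the data.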

An example of a feed-forward neural network for binary classification, implemented with PyTorch, is available in the PyTorch_binary_classification notebook. An example of the model and its classification results is shown below.

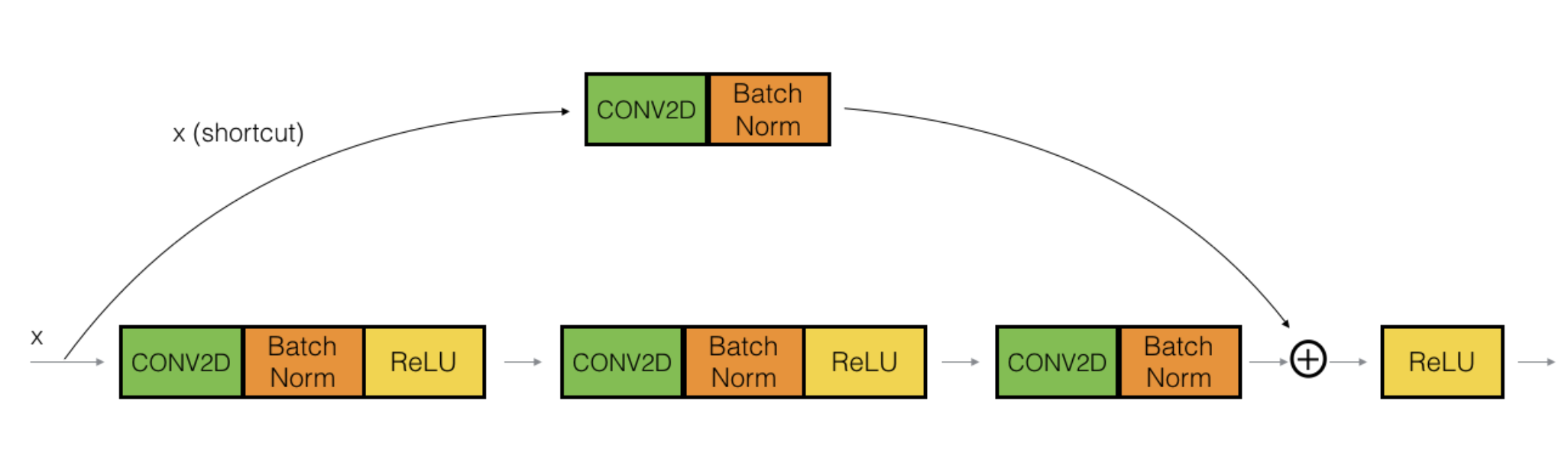
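For reference, a binary classifier of this kind can be sketched as a small multilayer perceptron trained with a binary cross-entropy loss. The two-blob dataset, the layer sizes, and the optimizer settings below are assumptions for illustration and do not reproduce the notebook.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Hypothetical toy data: two Gaussian blobs, one per class
n = 100
x0 = torch.randn(n, 2) * 0.5 - 2.0
x1 = torch.randn(n, 2) * 0.5 + 2.0
X = torch.cat([x0, x1])
y = torch.cat([torch.zeros(n, 1), torch.ones(n, 1)])

# The network outputs one logit per sample
model = nn.Sequential(nn.Linear(2, 8), nn.ReLU(), nn.Linear(8, 1))
loss_fn = nn.BCEWithLogitsLoss()              # sigmoid + binary cross-entropy
optimizer = torch.optim.Adam(model.parameters(), lr=0.05)

initial_loss = loss_fn(model(X), y).item()
for _ in range(200):
    optimizer.zero_grad()
    loss = loss_fn(model(X), y)
    loss.backward()
    optimizer.step()
final_loss = loss_fn(model(X), y).item()

# Threshold the predicted probability at 0.5 to obtain class labels
with torch.no_grad():
    predictions = (torch.sigmoid(model(X)) > 0.5).float()
accuracy = (predictions == y).float().mean().item()
```

`BCEWithLogitsLoss` is used here instead of a final sigmoid layer followed by `BCELoss` because it is numerically more stable.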

We implement a ResNet model for image classification. The architecture is a CNN built from a combination of convolutional and identity blocks. The notebook is available at ResNet.

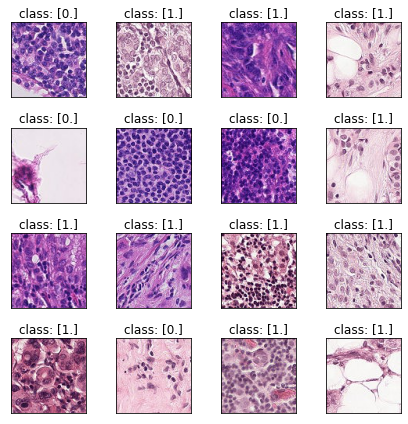

An example from the dataset and the model architecture are shown below.

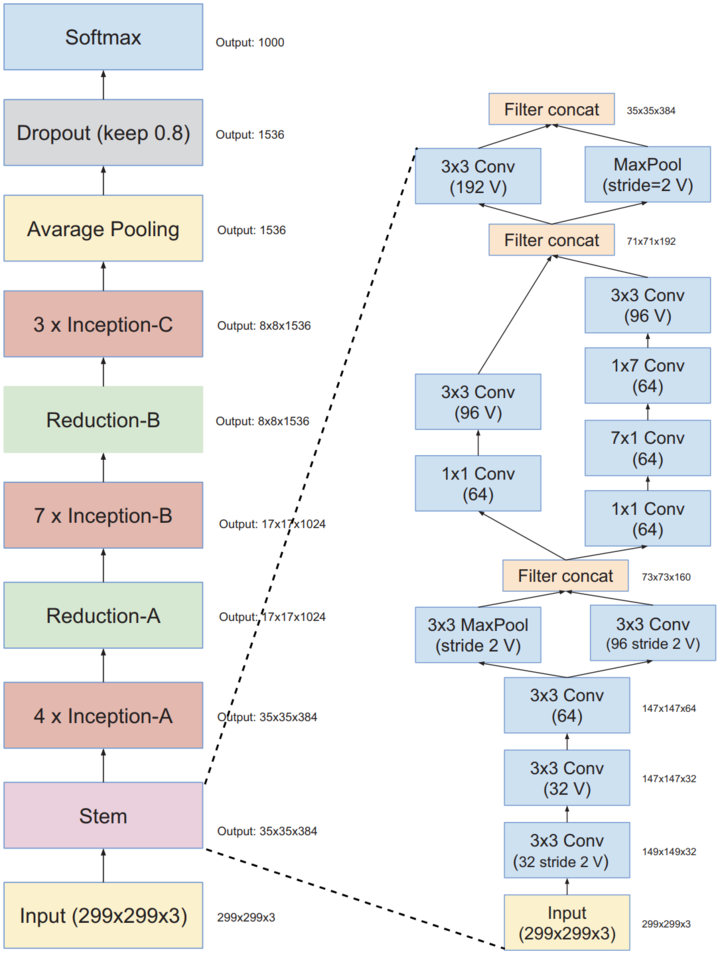
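The difference between identity and convolutional blocks can be sketched with a single residual block class. This is a simplified version under assumed layer sizes, not the exact blocks used in the notebook: when the input and output shapes match, the shortcut is the identity, otherwise a 1x1 convolution projects the input to the new shape.

```python
import torch
from torch import nn

class ResidualBlock(nn.Module):
    """Two 3x3 convolutions plus a shortcut connection (illustrative sketch)."""

    def __init__(self, in_channels, out_channels, stride=1):
        super().__init__()
        self.body = nn.Sequential(
            nn.Conv2d(in_channels, out_channels, 3, stride=stride, padding=1, bias=False),
            nn.BatchNorm2d(out_channels),
            nn.ReLU(inplace=True),
            nn.Conv2d(out_channels, out_channels, 3, padding=1, bias=False),
            nn.BatchNorm2d(out_channels),
        )
        if stride != 1 or in_channels != out_channels:
            # "Convolutional" block: project the input so shapes match
            self.shortcut = nn.Sequential(
                nn.Conv2d(in_channels, out_channels, 1, stride=stride, bias=False),
                nn.BatchNorm2d(out_channels),
            )
        else:
            # "Identity" block: the input is added back unchanged
            self.shortcut = nn.Identity()
        self.relu = nn.ReLU(inplace=True)

    def forward(self, x):
        return self.relu(self.body(x) + self.shortcut(x))

x = torch.randn(2, 16, 32, 32)
identity_out = ResidualBlock(16, 16)(x)        # shape preserved
conv_out = ResidualBlock(16, 32, stride=2)(x)  # channels doubled, resolution halved
```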

We implement an Inception-v4 model for image classification. The architecture consists of three block types: the stem block, Inception blocks, and Reduction blocks. The notebook is available at Inception-v4.

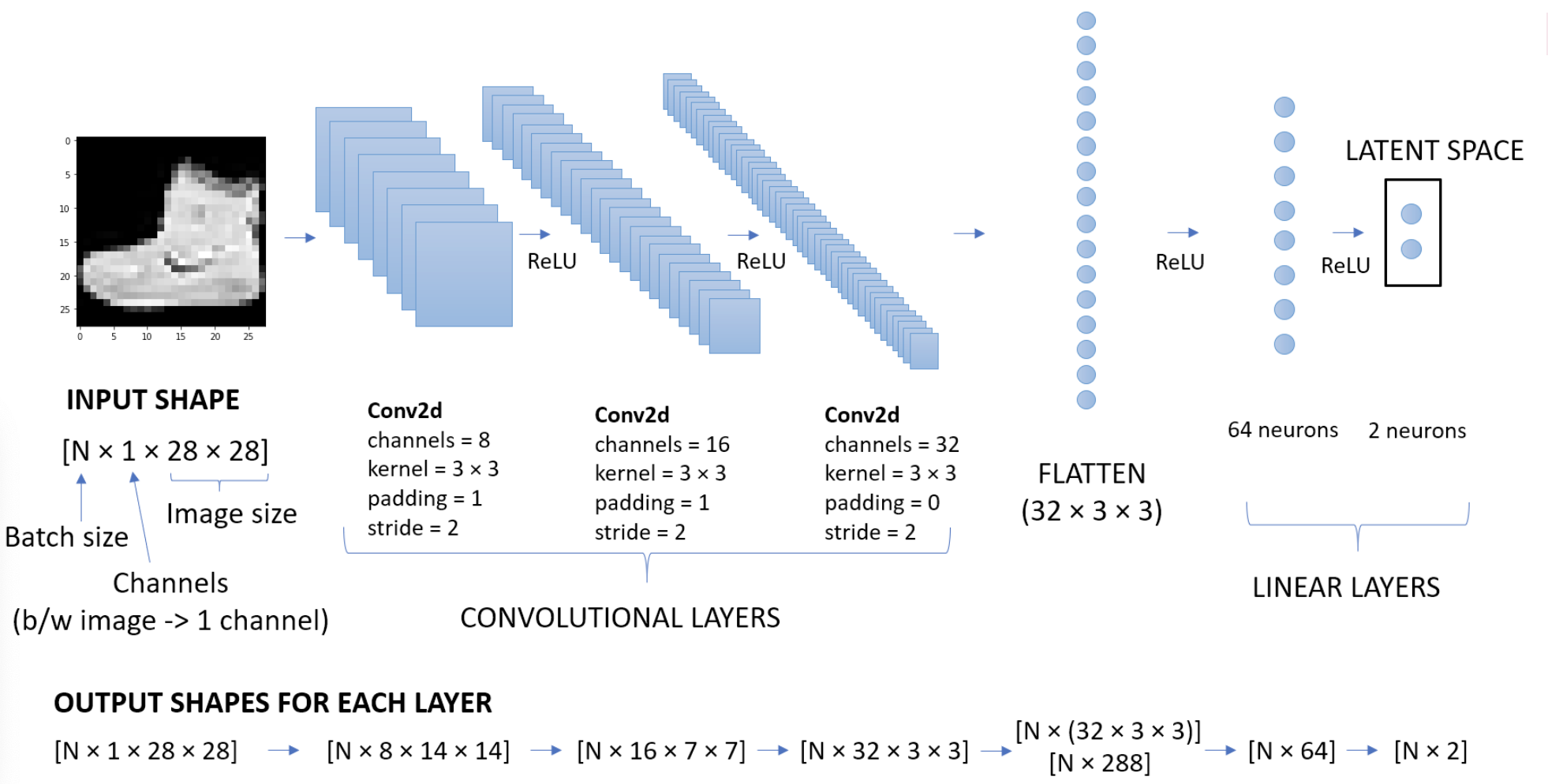
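The core idea of an Inception block, several parallel branches whose outputs are concatenated along the channel dimension, can be sketched as below. The class `ToyInceptionBlock` and its channel counts are hypothetical and far smaller than the real Inception-v4 blocks. Activations and batch normalization are omitted for brevity.

```python
import torch
from torch import nn

class ToyInceptionBlock(nn.Module):
    """Three parallel branches concatenated on the channel axis (illustrative only)."""

    def __init__(self, in_channels):
        super().__init__()
        # Branch 1: 1x1 convolution
        self.branch1 = nn.Conv2d(in_channels, 8, kernel_size=1)
        # Branch 2: 1x1 reduction followed by a 3x3 convolution
        self.branch3 = nn.Sequential(
            nn.Conv2d(in_channels, 8, kernel_size=1),
            nn.Conv2d(8, 16, kernel_size=3, padding=1),
        )
        # Branch 3: average pooling followed by a 1x1 convolution
        self.branch_pool = nn.Sequential(
            nn.AvgPool2d(kernel_size=3, stride=1, padding=1),
            nn.Conv2d(in_channels, 8, kernel_size=1),
        )

    def forward(self, x):
        # All branches keep the spatial size, so they can be concatenated
        outs = [self.branch1(x), self.branch3(x), self.branch_pool(x)]
        return torch.cat(outs, dim=1)

x = torch.randn(2, 32, 16, 16)
out = ToyInceptionBlock(32)(x)  # 8 + 16 + 8 = 32 output channels
```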

The dataset is the same as in the previous exercise.

In CNN_autoencoder we implement a CNN autoencoder for image reconstruction and test it on the Fashion-MNIST dataset. The encoder structure is as follows:

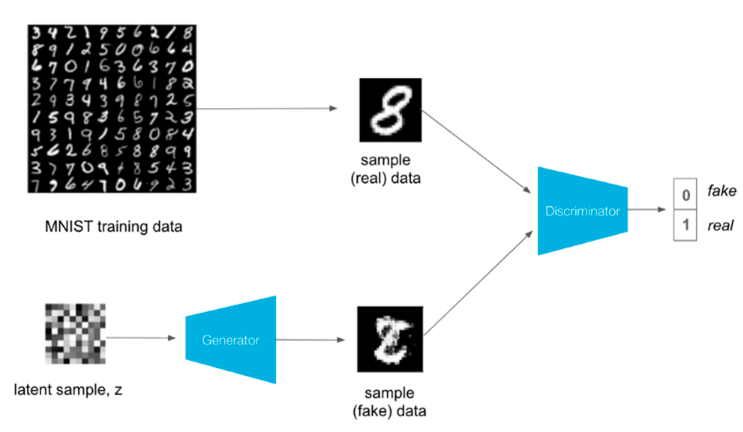
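A convolutional autoencoder for 28x28 grayscale images can be sketched as follows. The layer sizes and the latent dimension are assumptions and do not necessarily match the encoder shown in the figure above.

```python
import torch
from torch import nn

class ConvAutoencoder(nn.Module):
    """Small convolutional autoencoder for 1x28x28 inputs (illustrative sketch)."""

    def __init__(self, latent_dim=16):
        super().__init__()
        self.encoder = nn.Sequential(
            nn.Conv2d(1, 8, 3, stride=2, padding=1),    # 28x28 -> 14x14
            nn.ReLU(),
            nn.Conv2d(8, 16, 3, stride=2, padding=1),   # 14x14 -> 7x7
            nn.ReLU(),
            nn.Flatten(),
            nn.Linear(16 * 7 * 7, latent_dim),          # compress to the latent code
        )
        self.decoder = nn.Sequential(
            nn.Linear(latent_dim, 16 * 7 * 7),
            nn.ReLU(),
            nn.Unflatten(1, (16, 7, 7)),
            nn.ConvTranspose2d(16, 8, 3, stride=2, padding=1, output_padding=1),  # 7x7 -> 14x14
            nn.ReLU(),
            nn.ConvTranspose2d(8, 1, 3, stride=2, padding=1, output_padding=1),   # 14x14 -> 28x28
            nn.Sigmoid(),                               # pixel values in [0, 1]
        )

    def forward(self, x):
        return self.decoder(self.encoder(x))

model = ConvAutoencoder()
images = torch.rand(4, 1, 28, 28)      # stand-in for a batch of Fashion-MNIST images
codes = model.encoder(images)
reconstruction = model(images)
reconstruction_loss = nn.functional.mse_loss(reconstruction, images)
```

Training would minimize `reconstruction_loss` over the dataset, so that the decoder learns to rebuild each image from its latent code.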

In GANs we implement a GAN model for MNIST digit image generation. The generation is structured as follows: