LIDIN Nicolas github repository: https://github.com/nicolidin/CloudTechnoFirstAssignmentStackExchange

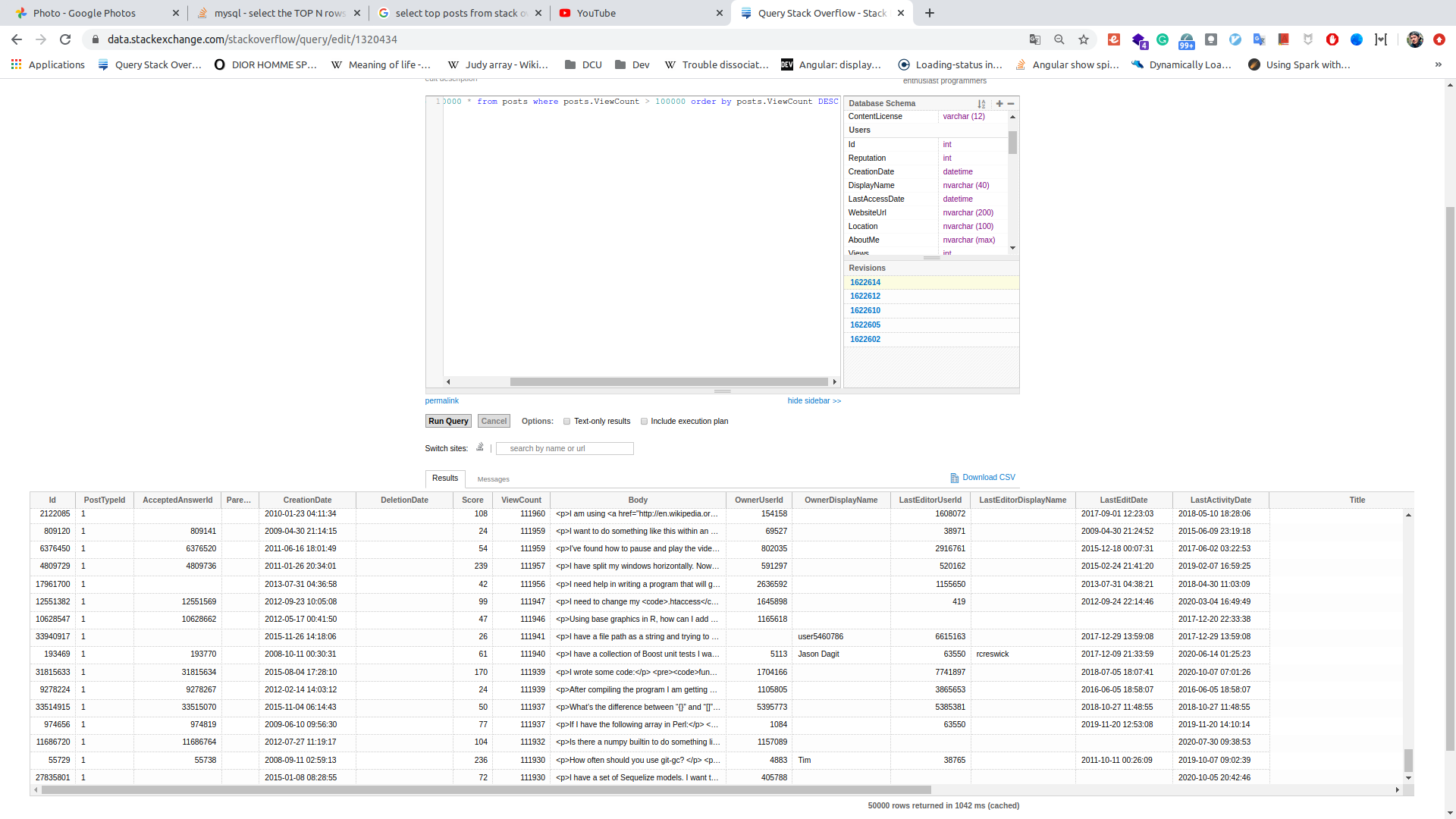

To do the queries we use "data.stackexchange.com/stackoverflow"

As we can't get 200 000 rows in one query the aim of this part 1 is to get 4 csv of 50 000 rows each to finally have the top 200 000 posts.

We firstly start to get the top 50 000 rows using a magic number in our first query.

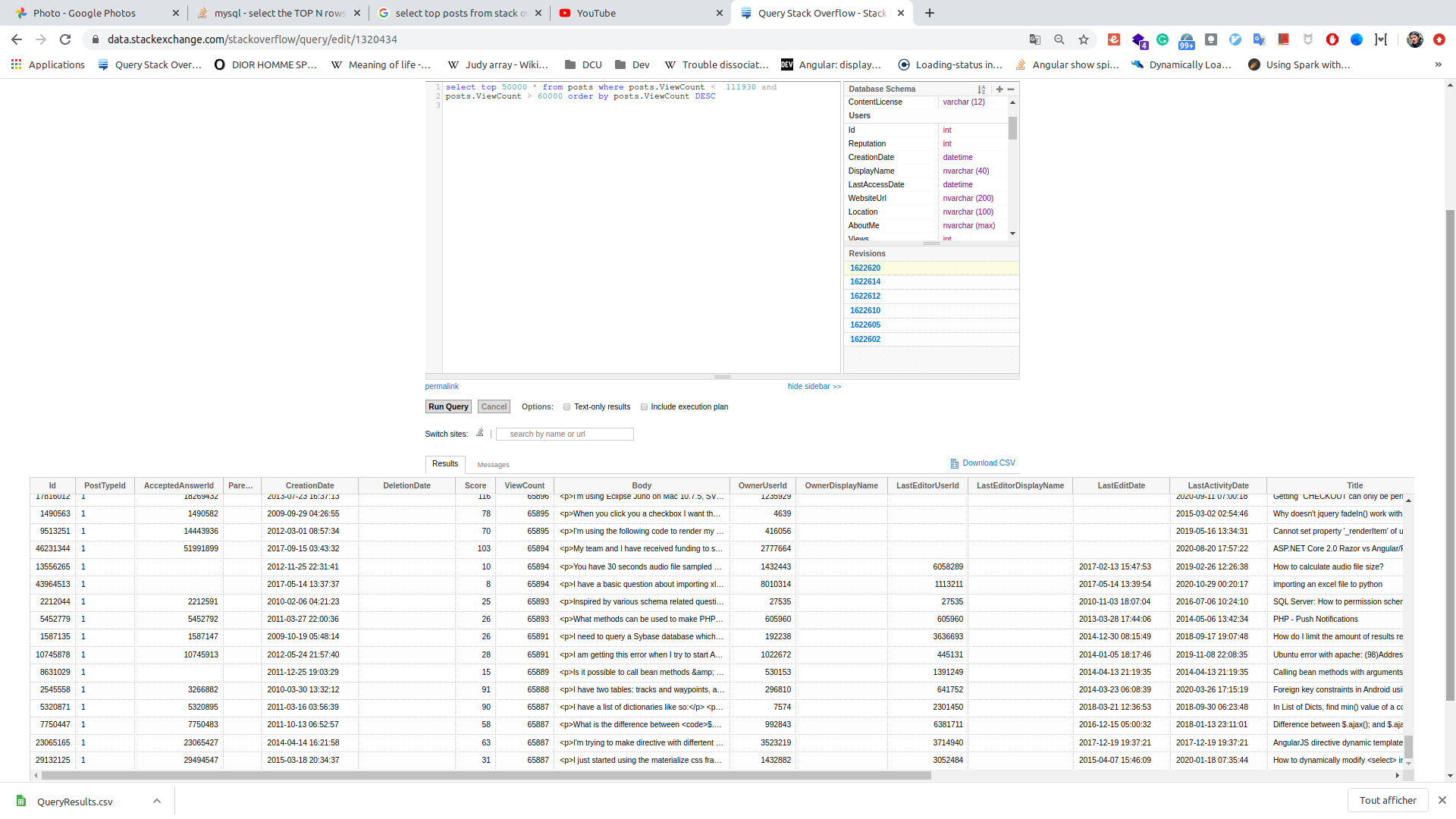

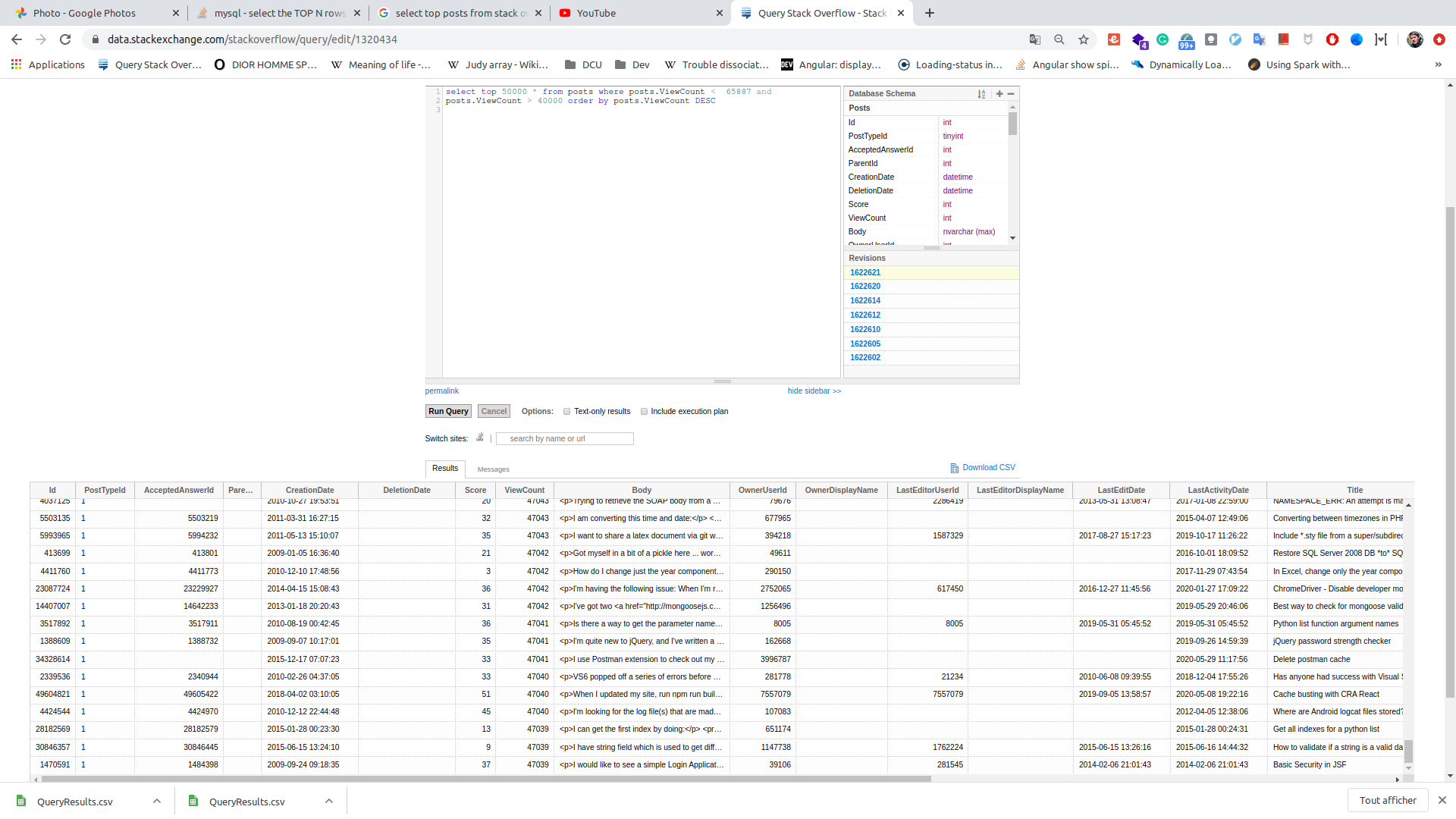

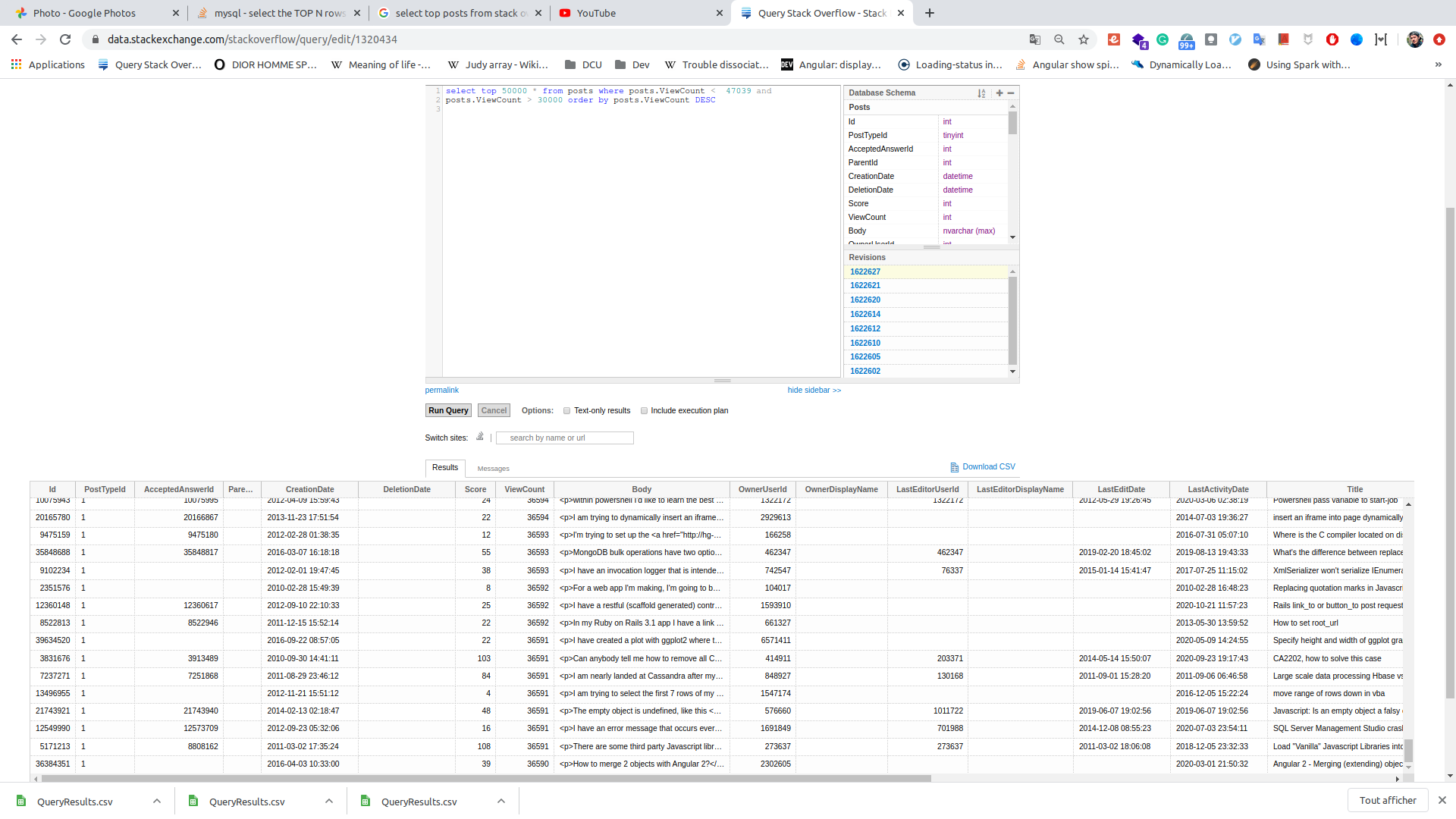

After that, we do 3 other times a request that gets 50 000 rows inferior of the last number got by the previous request.

We have finally 4 csv with each 50 000 posts rows

The second and third parts are made with Kotlin language in the same script. Indeed I chose to use for this second task MapReduce with Hive to extract, transform, and load the data as applicable. For the third part I also use Hive to do queries through Kotlin.

Firstly, before executing the script with Kotlin, I implemented a script written in R to remove the body column that is not perfectly formatted. Then, it will be simpler to use these csv with Hive and/or Pig:

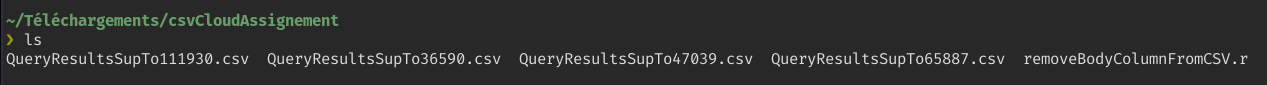

Indeed we can see below that we have the four files with an R script (removeBodyColumnFromCsv).

Below we can see that this script simply removes a column from a csv file.

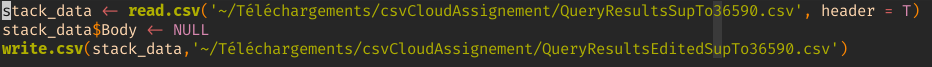

After edited the csv, we have to add these files into an Hadoop Distributed File System (HDFS) that will store these files on the servers inside our Cluster (created with Google Cloud Platform).

We push these files into HDFS to after be able to use Pig and/or Hive (MapReduce) to load data as applicable.

On the screen below we can see that we create a folder through HDFS, then we add the four csv files to finally print them with the ls command.

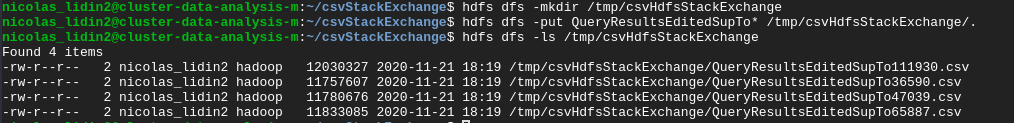

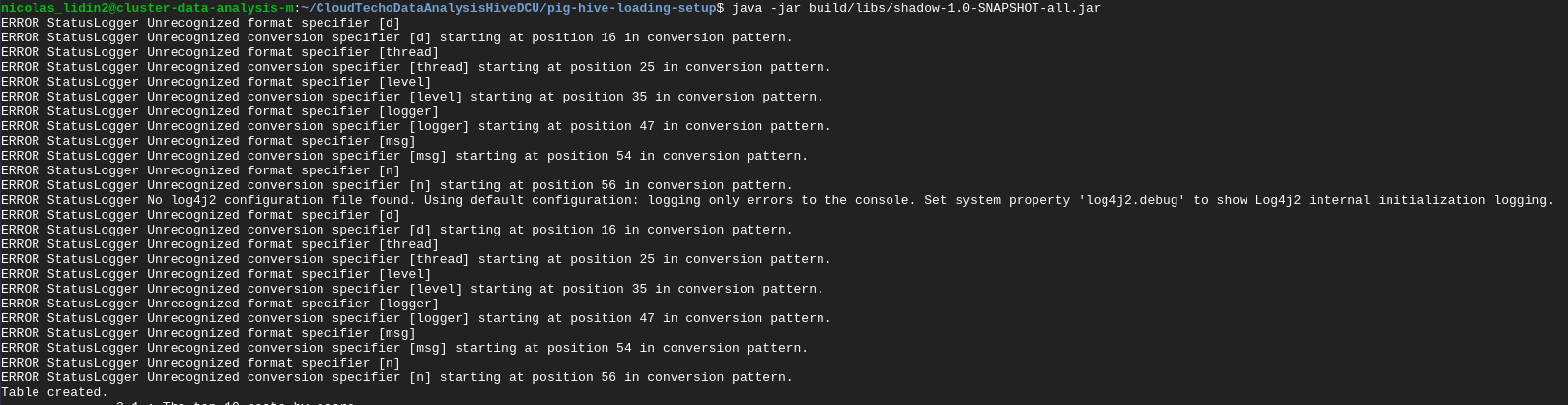

On the screen below we extract all the .csv and load the data in a new created table with only the column that interests us (we don't add the body column).

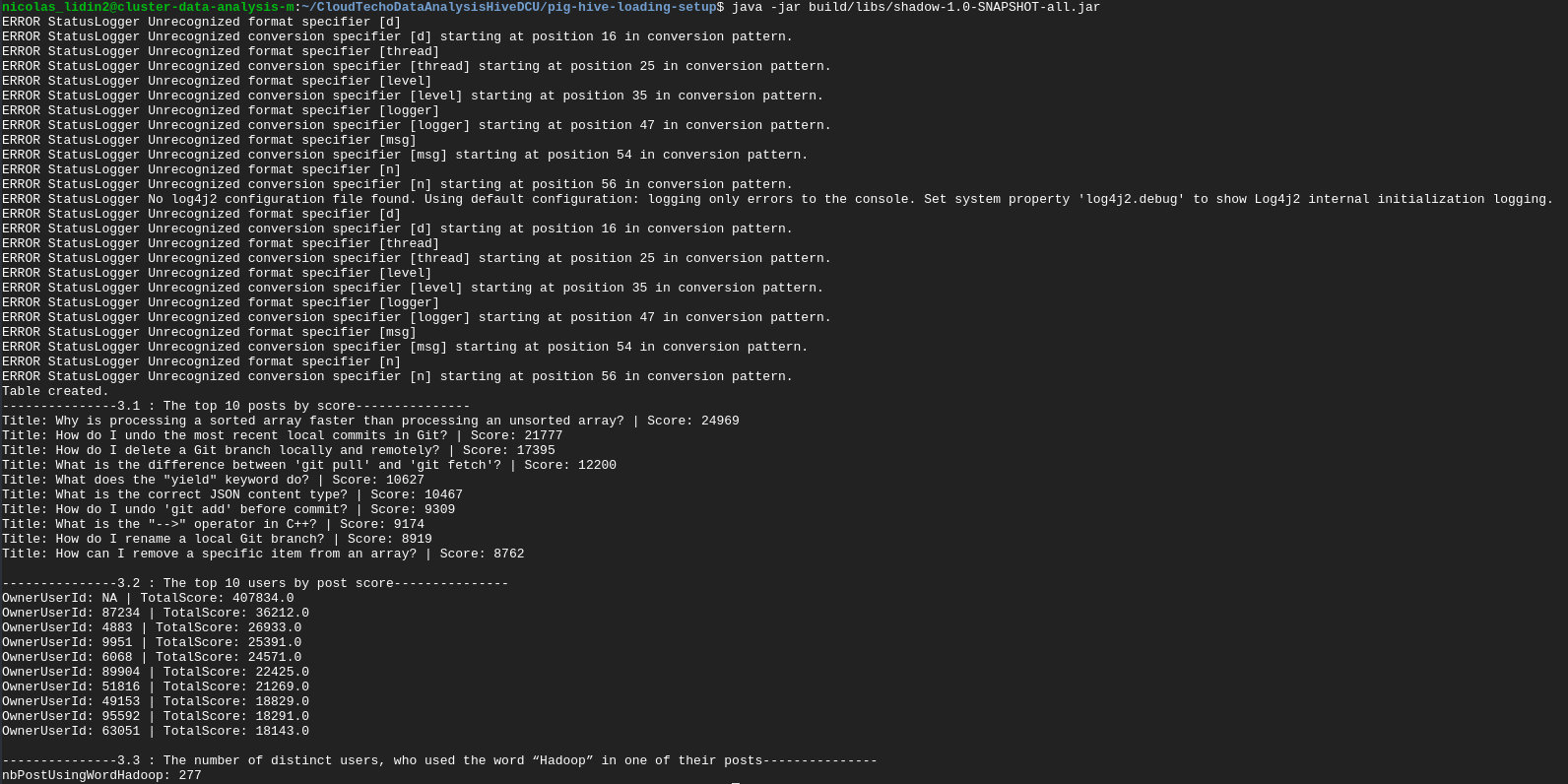

When executing the jar, we can in this the below screen see that the logs show the table is creating (with 200 000 row load with the 4 csv files).

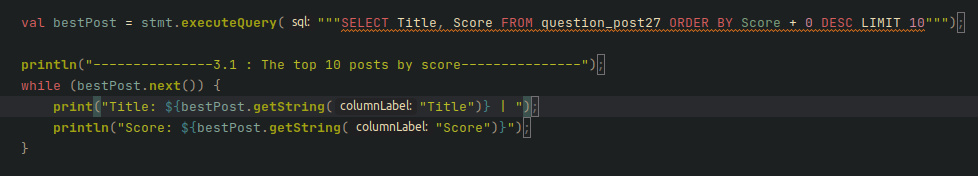

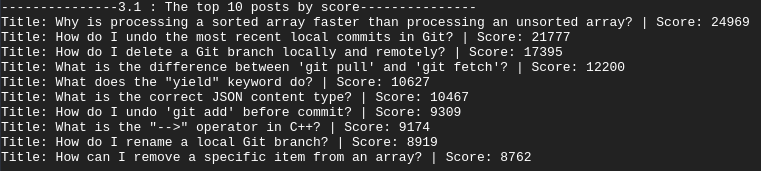

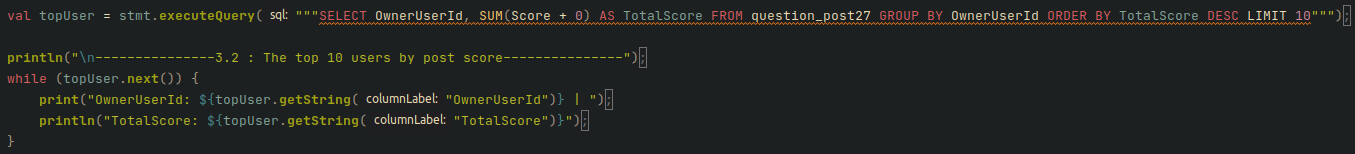

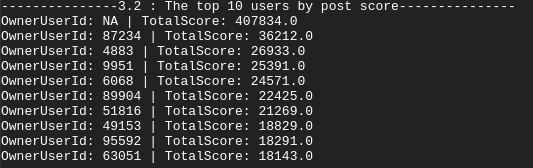

For this third part, I still use Hive (based on MapReduce) to do all the queries through the table that we just created. We keep doing this part in the same file after loading the data as applicable through stmt variable.

These two screens show the query to get the top 10 posts by score and the log of the Title and Score column.

These two screens show the query to get the top 10 users by post score and the log of the OwnerUserId and TotalScore column.

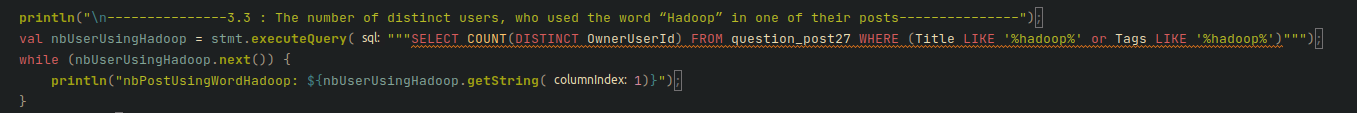

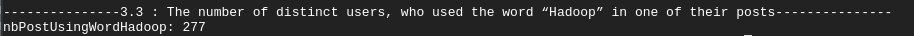

These two screens show the query to get the number of distinct users who use the world "hadoop" in one of their post and the log of the number.

If you want to run the program to do the task 2 and 3

run these two command lines:

./gradlew build

java -jar build/libs/shadow-1.0-SNAPSHOT-all.jar

Above we have all the log when executing our Kotlin script. (task 2 and task 3) by doing