This code is based on the implementations of DARTS and AutoDL-Projects. The training code for the ImageNet dataset is taken from P-DARTS.

Python >= 3.6.9, PyTorch == 1.5.0, torchvision == 0.6.0

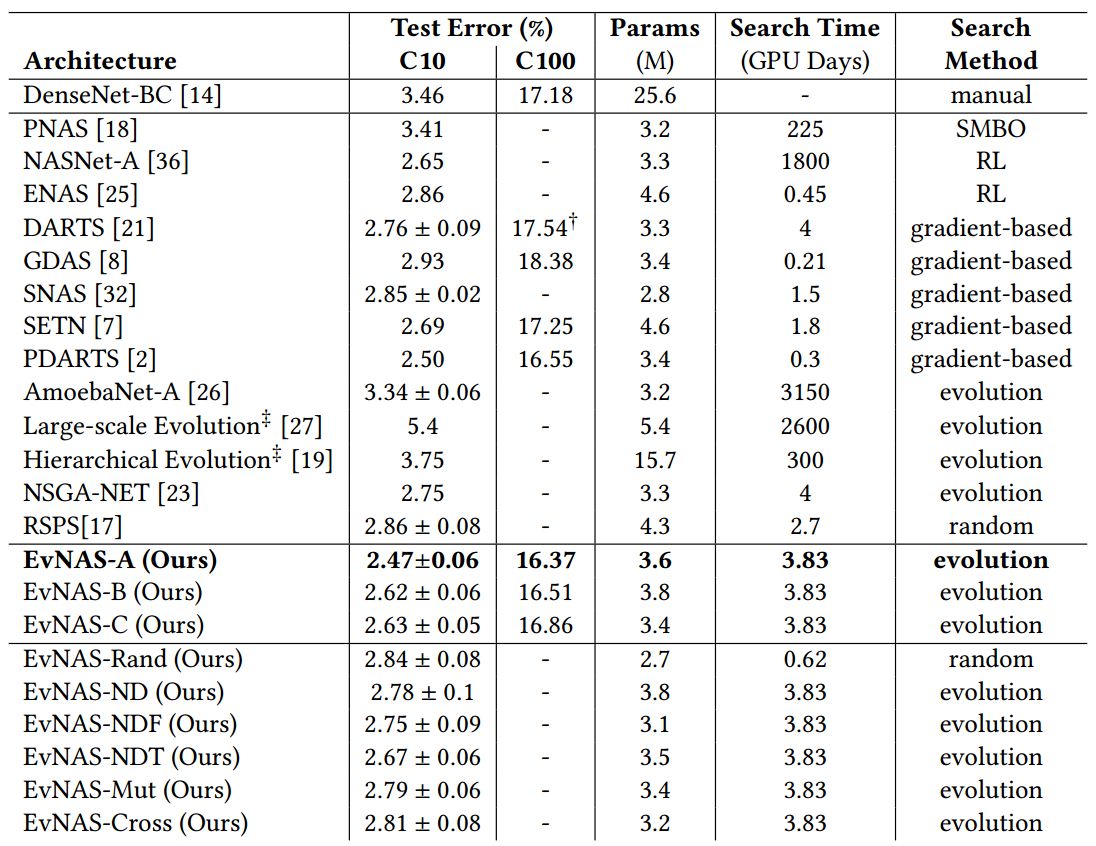

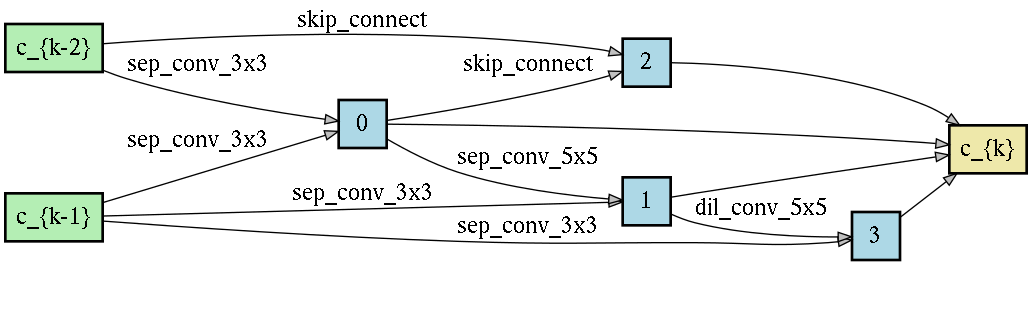

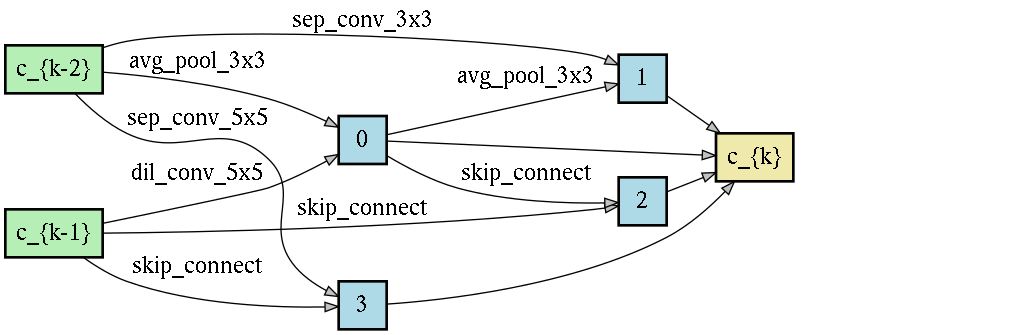

This repository contains the code for our work Evolving Neural Architecture using One Shot Model (EvNAS). In our method, each architecture is represented by the architecture parameter of the one-shot model. As a result, all architectures in a population share weights, and each generation of architectures inherits its weights from the previous generation. We propose a decoding technique for the architecture parameter that diverts the majority of the gradient information towards the given architecture. The same decoding also improves how well the one-shot model predicts the performance of that architecture during the search. Finally, to reduce the search time, we use the validation accuracy of a partially trained architecture as a prediction of its fitness.
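The representation described above can be illustrated with a small pure-Python sketch. It assumes a toy search space (the operation list, `NUM_EDGES`, and the `strength` constant are hypothetical) and a simple decoding that gives the chosen operation on each edge a large logit, so that after the softmax almost all of the mixing weight, and therefore most of the gradient, goes to that operation. This is not the exact decoding rule used by EvNAS; see the paper for the actual rule.

```python
import math
import random

# Hypothetical toy search space (DARTS-like names), NOT the EvNAS configuration.
OPS = ["none", "skip_connect", "sep_conv_3x3", "max_pool_3x3"]
NUM_EDGES = 4  # hypothetical, kept small for readability


def random_architecture(rng):
    """A discrete architecture: one operation index per edge."""
    return [rng.randrange(len(OPS)) for _ in range(NUM_EDGES)]


def decode(architecture, strength=5.0):
    """Hypothetical decoding: build an architecture parameter (one row of
    logits per edge) in which the chosen operation dominates the softmax,
    so most of the mixed output, and hence most of the gradient, flows
    through that operation."""
    alpha = []
    for chosen in architecture:
        row = [0.0] * len(OPS)
        row[chosen] = strength
        alpha.append(row)
    return alpha


def softmax(row):
    """Numerically stable softmax over one edge's logits."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


if __name__ == "__main__":
    rng = random.Random(0)
    population = [random_architecture(rng) for _ in range(3)]
    for arch in population:
        weights = [softmax(row) for row in decode(arch)]
        # Every architecture in the population would index the SAME one-shot
        # model weights; only the architecture parameter differs.
        print(arch, [round(w[c], 3) for w, c in zip(weights, arch)])
```

With four operations and `strength=5.0`, the chosen operation receives about 98% of the softmax weight on every edge; a larger `strength` concentrates it further.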

CIFAR-10 (EvNAS_A_cifar_weights.pt)

python3 test_cifar.py --arch EvNASA --model_path EvNAS_A_cifar_weights.pt

For EvNAS-B and EvNAS-C, use EvNASB and EvNASC respectively for the --arch option, together with their pretrained weights (EvNAS_B_cifar_weights.pt and EvNAS_C_cifar_weights.pt).

- Expected result for EvNAS-A: 2.40% test error rate (97.60% top-1 accuracy) with 3.6M parameters.

- Expected result for EvNAS-B: 2.51% test error rate (97.49% top-1 accuracy) with 3.8M parameters.

- Expected result for EvNAS-C: 2.55% test error rate (97.45% top-1 accuracy) with 3.4M parameters.

CIFAR-100 (EvNAS_A_cifar100_weights.pt)

python3 test_cifar100.py --arch EvNASA --model_path EvNAS_A_cifar100_weights.pt

For EvNAS-B and EvNAS-C, use EvNASB and EvNASC respectively for the --arch option, together with their pretrained weights (EvNAS_B_cifar100_weights.pt and EvNAS_C_cifar100_weights.pt).

- Expected result for EvNAS-A: 16.05% test error rate (83.95% top-1 accuracy) with 3.6M parameters.

- Expected result for EvNAS-B: 16.08% test error rate (83.92% top-1 accuracy) with 3.8M parameters.

- Expected result for EvNAS-C: 16.46% test error rate (83.54% top-1 accuracy) with 3.4M parameters.

ImageNet (EvNAS_A_imagenet_model_best.pt.tar)

python test_imagenet.py --arch EvNASA --model_path EvNAS_A_imagenet_model_best.pth.tar

For EvNAS-B and EvNAS-C, use EvNASB and EvNASC respectively for the --arch option, together with their pretrained weights (EvNAS_B_imagenet_model_best.pt.tar and EvNAS_C_imagenet_model_best.pt.tar).

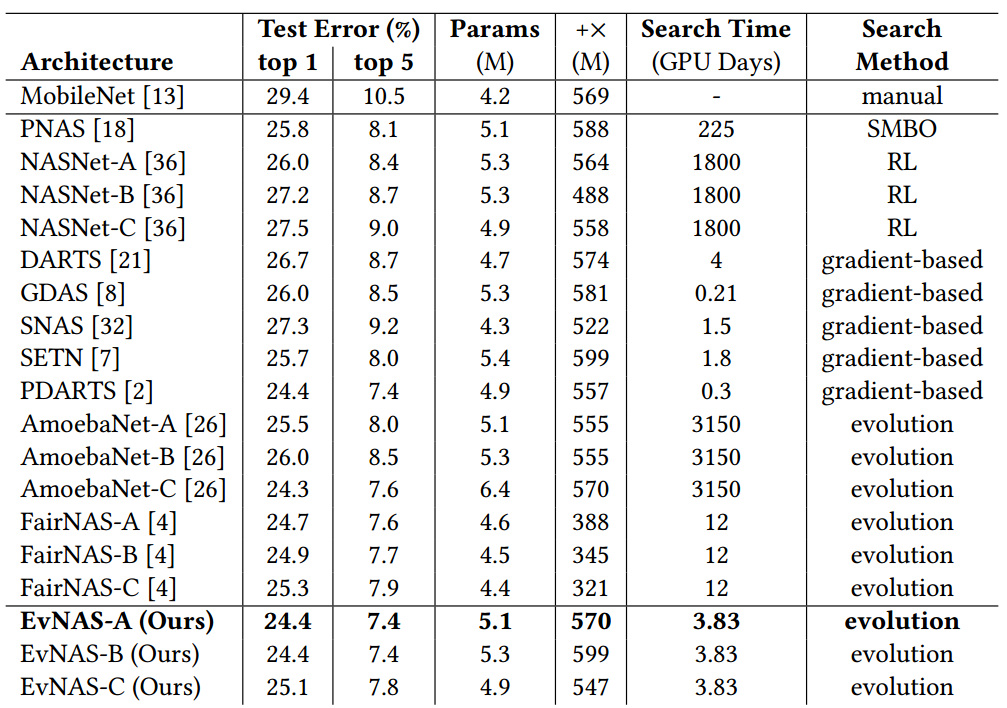

- Expected result for EvNAS-A: 24.4% top-1 error (75.6% top-1 accuracy) and 7.4% top-5 error (92.6% top-5 accuracy) with 5.1M parameters.

- Expected result for EvNAS-B: 24.4% top-1 error (75.6% top-1 accuracy) and 7.4% top-5 error (92.6% top-5 accuracy) with 5.3M parameters.

- Expected result for EvNAS-C: 25.1% top-1 error (74.9% top-1 accuracy) and 7.8% top-5 error (92.2% top-5 accuracy) with 4.9M parameters.
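Top-k error, as reported above, counts a prediction as correct when the true label is among the k highest-scoring classes, so top-k accuracy is 100% minus top-k error. The following minimal sketch computes it in plain Python on made-up scores; it is only an illustration of the metric, not the evaluation code in test_imagenet.py, and `topk_error` is a hypothetical helper name.

```python
def topk_error(scores, labels, k):
    """Percentage of samples whose true label is NOT among the k
    highest-scoring classes."""
    misses = 0
    for row, label in zip(scores, labels):
        # Class indices of the k largest scores for this sample.
        topk = sorted(range(len(row)), key=lambda c: row[c], reverse=True)[:k]
        if label not in topk:
            misses += 1
    return 100.0 * misses / len(labels)


# Made-up scores for 4 samples over 3 classes (illustration only).
scores = [
    [0.1, 0.7, 0.2],  # best guess: class 1
    [0.5, 0.3, 0.2],  # best guess: class 0
    [0.2, 0.3, 0.5],  # best guess: class 2
    [0.6, 0.1, 0.3],  # best guess: class 0
]
labels = [1, 1, 2, 2]

top1 = topk_error(scores, labels, 1)
top2 = topk_error(scores, labels, 2)
print(f"top-1 error {top1}% (accuracy {100 - top1}%)")
print(f"top-2 error {top2}% (accuracy {100 - top2}%)")
```

Here two of the four samples miss at k = 1 (50% top-1 error), but both misses have the correct class in second place, so the top-2 error is 0%.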

To run the architecture search, use:

python -i train_search.py --cutout

To evaluate our best cells by training them from scratch on the CIFAR-10 dataset, run:

python train.py --cutout --auxiliary --epochs 600 --arch EvNASA # when the architecture is defined in genotype.py

or

python train.py --cutout --auxiliary --epochs 600 --dir search_DIR # when the architecture is stored in search_DIR as a genotype.pickle file
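For the --dir option, the searched cell is read from a genotype.pickle file. The sketch below assumes the file holds a DARTS-style `Genotype` namedtuple (fields `normal`, `normal_concat`, `reduce`, `reduce_concat`); the example cell and the helpers `save_genotype` and `load_genotype` are hypothetical and not part of this repository. Because pickle stores a reference to the class rather than its definition, a real genotype.pickle can only be loaded where the repository's own `Genotype` class is importable.

```python
import os
import pickle
import tempfile
from collections import namedtuple

# DARTS-style genotype record (assumed to mirror the one in genotype.py).
Genotype = namedtuple("Genotype", "normal normal_concat reduce reduce_concat")


def save_genotype(genotype, search_dir):
    """Hypothetical helper: write the genotype as search_dir/genotype.pickle."""
    path = os.path.join(search_dir, "genotype.pickle")
    with open(path, "wb") as f:
        pickle.dump(genotype, f)
    return path


def load_genotype(search_dir):
    """Hypothetical helper: read search_dir/genotype.pickle back."""
    with open(os.path.join(search_dir, "genotype.pickle"), "rb") as f:
        return pickle.load(f)


# Illustrative cell, NOT EvNAS-A: each entry is (operation, input node index),
# two entries per intermediate node, four intermediate nodes.
example = Genotype(
    normal=[("sep_conv_3x3", 0), ("skip_connect", 1)] * 4,
    normal_concat=[2, 3, 4, 5],
    reduce=[("max_pool_3x3", 0), ("sep_conv_5x5", 1)] * 4,
    reduce_concat=[2, 3, 4, 5],
)

if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as search_dir:
        save_genotype(example, search_dir)
        print(load_genotype(search_dir))
```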

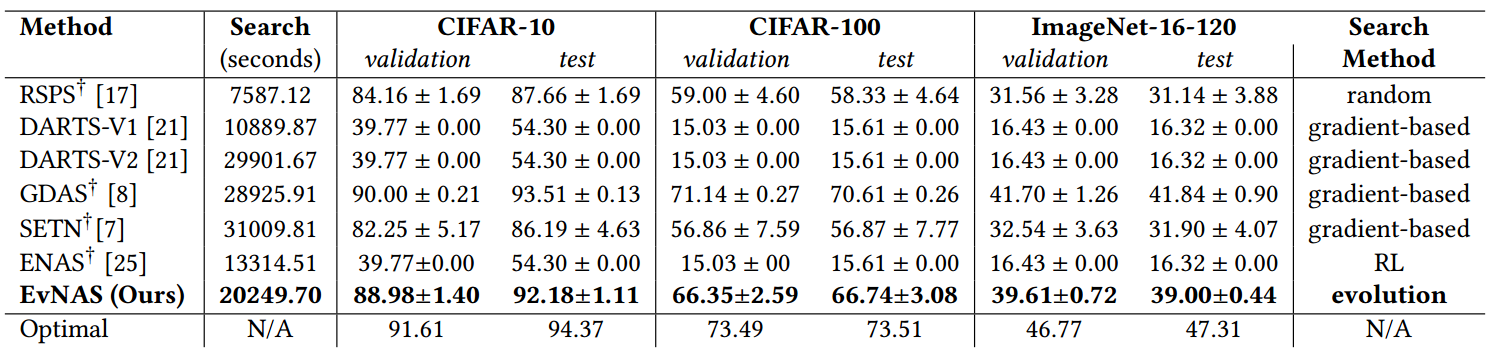

To download ImageNet-16-120, use the link. To evaluate the search method on the NAS-Bench-201 benchmark, download the file NAS-Bench-201-v1_1-096897.pth.

Using CIFAR-10:

bash ./searchNAS201.sh cifar10 0 outputs

Using CIFAR-100:

bash ./searchNAS201.sh cifar100 0 outputs

Using ImageNet-16-120:

bash ./searchNAS201.sh ImageNet16-120 0 outputs
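In NAS-Bench-201, a cell has four nodes connected by six edges, each carrying one of five operations, and the benchmark identifies a cell by a string such as `|nor_conv_3x3~0|+|nor_conv_3x3~0|avg_pool_3x3~1|+|skip_connect~0|nor_conv_3x3~1|skip_connect~2|`, where `op~j` is the operation applied to the output of node j and `+` separates the target nodes. The sketch below turns one row of architecture scores per edge into such a string by keeping the highest-scoring operation on each edge. The edge order and the operation index order are assumptions made for illustration, and `to_arch_string` is a hypothetical helper, not a function from this repository.

```python
# The five NAS-Bench-201 operations; this index order is an assumption.
NAS201_OPS = ["none", "skip_connect", "nor_conv_1x1", "nor_conv_3x3", "avg_pool_3x3"]
MAX_NODES = 4  # node 0 is the cell input, nodes 1-3 receive edges


def edge_keys():
    """Edges (i, j) meaning i <- j, for i = 1..3 and j = 0..i-1 (6 edges)."""
    return [(i, j) for i in range(1, MAX_NODES) for j in range(i)]


def to_arch_string(alpha):
    """Map one row of scores per edge to a NAS-Bench-201 cell string,
    keeping the highest-scoring operation on each edge."""
    keys = edge_keys()
    if len(alpha) != len(keys):
        raise ValueError(f"expected {len(keys)} edges, got {len(alpha)}")
    choice = {}
    for (i, j), row in zip(keys, alpha):
        best = max(range(len(row)), key=row.__getitem__)
        choice[(i, j)] = NAS201_OPS[best]
    nodes = []
    for i in range(1, MAX_NODES):
        ops = "|".join(f"{choice[(i, j)]}~{j}" for j in range(i))
        nodes.append(f"|{ops}|")
    return "+".join(nodes)


if __name__ == "__main__":
    # One-hot scores picking ops 3, 3, 4, 1, 3, 1 on the six edges.
    picks = [3, 3, 4, 1, 3, 1]
    alpha = [[1.0 if k == c else 0.0 for k in range(len(NAS201_OPS))] for c in picks]
    print(to_arch_string(alpha))
```

The resulting string is the form the benchmark file is indexed by, so it can be looked up, for example, with the `query_index_by_arch` method of the `nas_201_api` package.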

To cite our paper, please use the following:

@inproceedings{sinha2021evolving,
  title={Evolving neural architecture using one shot model},
  author={Sinha, Nilotpal and Chen, Kuan-Wen},
  booktitle={Proceedings of the Genetic and Evolutionary Computation Conference},
  pages={910--918},
  year={2021}
}