This project explores the human annotation process by computing inter-annotator agreement metrics and annotator biases from 2,000 Yelp reviews annotated by 3 independent annotators on Amazon Mechanical Turk.

Make sure you have Docker and Docker Compose installed and running before proceeding.
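As a quick sanity check of these prerequisites, a minimal sketch along the following lines may help. The helper name `check_cmd` is hypothetical and not part of this repository; it only reports whether a command is on your `PATH`, and `docker info` is used as a rough test that the Docker daemon is reachable.

```shell
# Hypothetical helper (not part of this project): report whether a command is on PATH.
check_cmd() {
  if command -v "$1" >/dev/null 2>&1; then
    echo "found: $1"
  else
    echo "missing: $1"
    return 1
  fi
}

# Check both tools; '|| true' keeps the script going if one is missing.
check_cmd docker || true
check_cmd docker-compose || true

# 'docker info' only succeeds when the Docker daemon is reachable.
if docker info >/dev/null 2>&1; then
  echo "Docker daemon is running"
else
  echo "Docker daemon is not reachable"
fi
```

If either tool is reported as missing, or the daemon is not reachable, install or start Docker before continuing.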


Clone the git repository to your local machine:

git clone https://github.com/nihaldsouza/yelp-annotation-study.git

Change directory into the project folder:

cd yelp-annotation-study

Run the following command:

docker-compose up

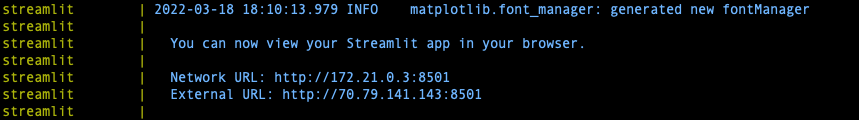

Note: This may take a few minutes as Elasticsearch requires some time to be setup. You can proceed once you see logs from the 'streamlit' container, something similar to:


In your browser, visit: http://localhost:8501/
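If you would rather not watch the logs, one option is to poll the URL until it responds. The sketch below assumes `curl` is installed; the function name `wait_for_url` and its default attempt count and delay are illustrative choices, not something this project provides.

```shell
# Hypothetical helper: poll a URL until it answers or the attempts run out.
# Usage: wait_for_url URL [ATTEMPTS] [DELAY_SECONDS]
wait_for_url() {
  url=$1
  attempts=${2:-60}   # assumed default: 60 tries
  delay=${3:-5}       # assumed default: 5 seconds between tries
  i=1
  while [ "$i" -le "$attempts" ]; do
    # -s silent, -f fail on HTTP errors, -o discard the body,
    # --max-time caps each request at 5 seconds
    if curl -sf --max-time 5 -o /dev/null "$url"; then
      echo "up: $url"
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  echo "timed out: $url"
  return 1
}
```

From a second terminal, `wait_for_url http://localhost:8501/` would then return once the app answers, or give up after the configured number of attempts.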


To shut down the app gracefully, press Control + C.