Table of Contents

- Overview

- 🐍 Build the app with Python

- 🌀 Flow diagram

- 🚑 Troubleshooting

- 📖 Optional: Extra information useful for the user

- 🔮 Overview of the files

- 🐋 Deploy the prebuild application using Docker

- ⏭️ Next steps

- 👩👧👦 Community

- 🦄License

| Learnings | How to use image captions (short descriptions of the image content) to search for images. |

| Used for indexing | Images + captions. |

| Used for querying | Image caption e.g. "A boy playing basketball". |

| Dataset used | Flickr 8k containing 8k image caption pairs. |

| Model used | Both CLIP: Contrastive Language-Image Pre-Training and VSE++: Improving Visual-Semantic Embeddings with Hard Negatives are demonstrated. |

In this example, Jina is used to implement a cross-modal search system. This example allows the user to search for images given a caption description. First, we encode all images in our dataset into vectors and create an index of these vectors. When we search with text inputs, we compute the vector representation of this text and compare it to the previously calculated image vector index to find the most similar image. It is also possible to do it the other way around and use the image encoding to search for similar text-embeddings (captions).

These instructions explain how to build the example yourself and deploy it with Python. If you want to skip the building steps and just run the example with Docker, check out the Docker section below.

- You have a working Python 3.7 or 3.8 environment and a installation of Docker.

- We recommend creating a new Python virtual environment to have a clean installation of Jina and prevent dependency conflicts.

- You have at least 2 GB of free space on your hard drive.

Begin by cloning the repo, so you can get the required files and datasets. (If you already have the examples repository on your machine make sure to fetch the most recent version)

git clone https://github.com/jina-ai/examples

cd examples/cross-modal-searchIn your terminal, you should now be located in you the cross-modal-search folder. Let's install Jina and the other required Python libraries. For further information on installing Jina check out our documentation.

pip install -r requirements.txtTo quickly get started, you can index a small dataset to make sure everything is working correctly. You can pre-fetch the Pods containing the machine learning models required to calculate the embeddings of the data using Docker.

docker pull jinahub/pod.encoder.clipimageencoder:0.0.2-1.2.0

docker pull jinahub/pod.encoder.cliptextencoder:0.0.3-1.2.2Or for the VSE model:

docker pull jinahub/pod.encoder.vseimageencoder:0.0.5-1.2.0

docker pull jinahub/pod.encoder.vsetextencoder:0.0.6-1.2.0Once the images are downloaded, run

python app.py -t indexIf you see the following output, it means your data has been correctly indexed.

Flow@5162[S]:flow is closed and all resources are released, current build level is 0

We recommend you come back to this step later and index the full flickr 8k dataset for better results. To index the full dataset (8000 images) follow these steps:

- Register for a free Kaggle account

- Set up your API token (see authentication section of their API docs)

- Run

sh get_data.sh

To index the full dataset, run

python app.py -t index --data_set=f8kJina offers several ways to search (query) your data. In this example, we show two of the most common ones. In a production environment, you would only choose one which suits your use case best.

Begin by running the following command to open the REST API interface.

python app.py -t query_restfulYou should open another terminal window and paste the following command.

curl --request POST -d '{"top_k": 5, "mode": "search", "data": ["hello world"]}' -H 'Content-Type: application/json' 'http://localhost:45678/search'Once you run this command, you should see a JSON output returned to you. This contains the five most semantically similar images sentences to the text input you provided in the data parameter.

Note, that the toy-data only contains two images.

Feel free to alter the text in the 'data' parameter and play around with other queries (this is only fun with the large dataset)! For a better understanding of the parameters see the table below.

top_k |

Integer determining the number of sentences to return |

mode |

Mode to trigger in the call. See here for more details |

data |

Text input to query |

Jina Box is a light-weight, highly customizable JavaScript based front-end search interface. To use it for this example, begin by opening the REST API interface.

python app.py -t query_restfulIn your browser, open up the hosted Jina Box on jina.ai/jinabox.js. In the configuration bar on the left-hand side, choose a custom endpoint and enter the following: http://127.0.0.1:45678/search.

You can type search queries into the text box on the right-hand side!

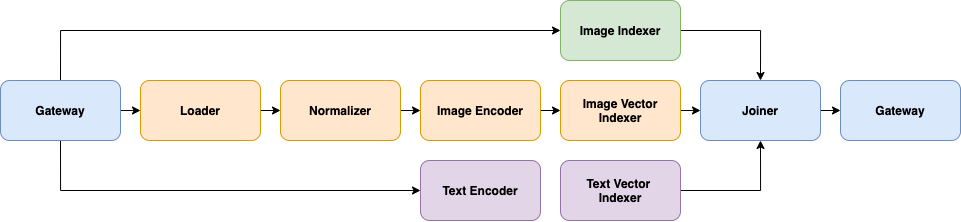

This diagram provides a visual representation of the Flows in this example; Showing which executors are used in which order. Remember, our goal is to compare vectors representing the semantics of images with vectors encoding the semantics of short text descriptions.

As you can see, the Flow that Indexes the data contains three parallel branches:

- Upper: a key-value indexer for the images that we use as a lookup (like a Dictionary in Python).

- Middle: transformations to get from the JPG to vectors

- Lower: transformations to get from text descriptions to vectors To have️ low latency at query time, we store the computed vectors on disk.

This Flow shows what happens when a user queries our data. First, the provided text description is passed through the text Encoder which turns

it into a vector. Now, we use our image vector index to find the most similar image encodings to the previously computed text vector from the user.

Because the user does not want to see the vector as a result, but the image this vector belongs to we use the key-value lookup to get from image vector to human-interpretable JPG image.

Note, that this Flow only shows how to search for images using text. The example actually support searching for text using images as well.

As an exercise, you can think of the required steps for that and check against our Flow configuration.

In case a Flow hangs during indexing it often helps to restart the process.

Motive behind Cross-Modal Retrieval

Cross-modal retrieval tries to effectively search for documents of one modality (text) in an index storing data of another modality (images). An example of this is google image search.

python app.py -t index -n $num_docs -s $request_size -d 'f8k' -m clipIf your index hangs, please remove the workspace,

reduce the request_size and re-run the above command to index.

The default request_size is 12.

Check out the fix suggested in this issue.

Not that num_docs should be 8k or 30k depending on the flickr dataset you use.

If you decide to index the complete datasets,

it is recommendable to increase the number of shards and parallelization.

The dataset is provided with the -d parameter with the valid options of 30k and 8k.

If you want to index your own dataset,

check dataset.py to see how data is provided and adapt to your own data source.

If you want to switch to VSE++ model, replace -m clip with -m vse

Request size can be configured with -s flag.

Jina normalizes the images needed before entering them in the encoder.

QueryLanguageDriver is used to redirect (filtering) documents based on modality.

Modality is an attribute assigned to a document in Jina in the protobuf Document structure. It is possible that documents may be of the same mime type, but come from different distributions, for them to have different modalities. Example: In an article or web page, the body text and the title are from the same mime type (text), but will differ in statistical properties (like expected length or frequency of certain key-words) and should therefore be modeled separately. If that is the case, we consider them as different modalities.

Different Encoders map different modalities to a common embedding space. They need to extract semantic information from the documents.

In this embedding space, documents that are semantically relevant to each other from different modalities are expected to be close to another - Metric Learning

In the example, we expect images embeddings to be nearby their captions’ embeddings.

Research for Cross-Modal Retrieval

The models used for the example are cited from the paper, you can try our example with one of them:

- CLIP: Contrastive Language-Image Pre-Training (recommend)

- VSE++: Improving Visual-Semantic Embeddings with Hard Negatives.

Both of the models have been trained to encode pairs of text and images into a common embedding space.

CLIP Encoders in Jina for Cross-Modal Search

Two encoders have been created for this example, namely CLIPImageEncoder and CLIPTextEncoder,

for encoding image and text respectively.

VSE Encoders in Jina for Cross-Modal Search

Two Encoders have been created for this example, namely VSEImageEncoder and VSETextEncoder,

for encoding image and text respectively.

📂 flows/ |

Folder to store Flow configuration |

--- 📃 flow-index.yml |

YAML file to configure indexing Flow |

--- 📃 flow-query.yml |

YAML file to configure querying Flow |

📂 pods/ |

Folder to store Pod configuration |

--- 📂 clip |

YAML files to configure the Encoder using the clip model |

--- 📂 vse |

YAML files to configure the Encoder using the vse model |

--- 📃 image-load.yml |

YAML file to configure loading JPG files |

--- 📃 image-normalzer.yml |

YAML file to configure how images are normalized |

--- 📃 index-image-kv.yml |

YAML file to configure the key-value image index |

--- 📃 index-image-vector.yml |

YAML file to configure the vector image index |

--- 📃 index-text.yml |

YAML file to configure the index for the text vectors |

--- 📃 merge_matche_sort_topk.yml |

YAML file to configure the merging ranking of matches in the final result. |

📂 workspace/ |

Folder to store indexed files (embeddings and documents). Automatically created after the first indexing |

📂 visualizations/ |

Folder to store images used for documentation |

📂 data/ |

Folder to store the toy-data for the example |

To make it easier for the user, we have built and published the Docker image with the indexed documents. Just be aware that the image weights 11 GB. Make sure that your docker lets you allocate a sufficient amount of memory.

You can retrieve the docker image using:

docker pull jinahub/app.example.crossmodalsearch:0.0.3-1.0.8So you can pull from its latest tags.

To run the application with the pre-indexed documents and ready to be used from Jina Box, run

docker run -p 45678:45678 jinahub/app.example.crossmodalsearch:0.0.3-1.0.8Did you like this example and are you interested in building your own? For a detailed tutorial on how to build your Jina app check out How to Build Your First Jina App guide in our documentation.

If you have any issues following this guide, you can always get support from our Slack community .

- Slack channel - a communication platform for developers to discuss Jina.

- LinkedIn - get to know Jina AI as a company and find job opportunities.

- follow us and interact with us using hashtag

#JinaSearch.- Company - know more about our company, we are fully committed to open-source!

Copyright (c) 2021 Jina AI Limited. All rights reserved.

Jina is licensed under the Apache License, Version 2.0. See LICENSE for the full license text.