special course project - DTU

supervised by Tobias Andersen & Agata Wlaszczyk

based on Alma's work: https://journals.plos.org/plosone/article/authors?id=10.1371/journal.pone.0246986

From Alma's work, we see both JPM and BCI model could fit the response data quite well, indicating both of them have the ability to estimate the internal logic of audio-visual perception. However, this conclusion might be limited due to the insufficient trails for each condition as well as the rough-classified responses. To explore whether there's a significant difference between these two models over audio-visual perception, we suggest to build a pilot experiment using stimulated data from cognitive models before conduct the complicated cognitive experiment, and address the degree of confidence about the models' consistency. We also hope the correctness of model would be explored through this experiment.

There are mainly x phases for building this experiment.

Steps for this Phase:

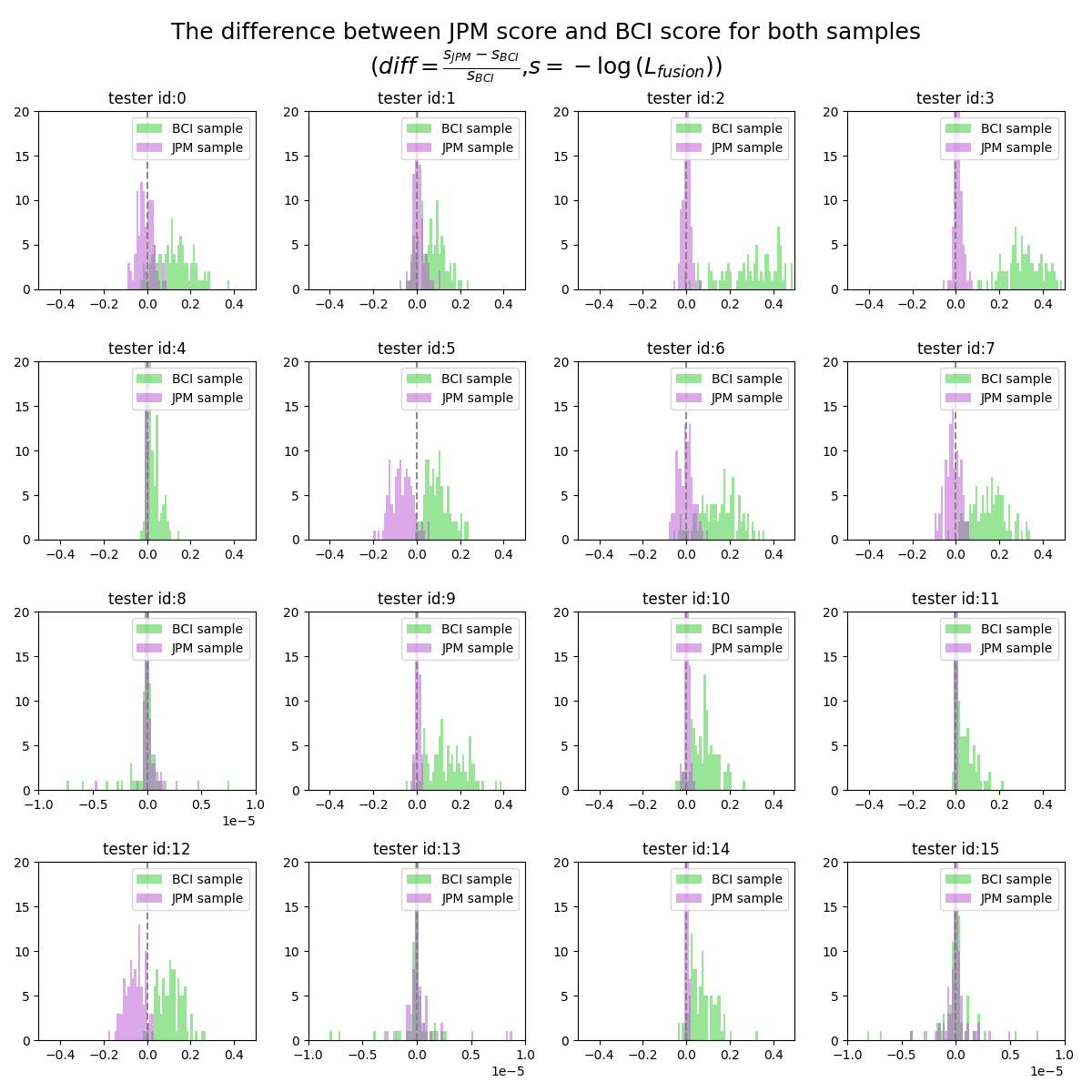

- We could observe from the visualization that when snr equals "VHigh + ALow" and with asynchrony condition. And for tester No.5,6,7,12, the differences of model distribution are much more obvious. Therefore, we would restrict the condition to asychnocy with VhighAlow.

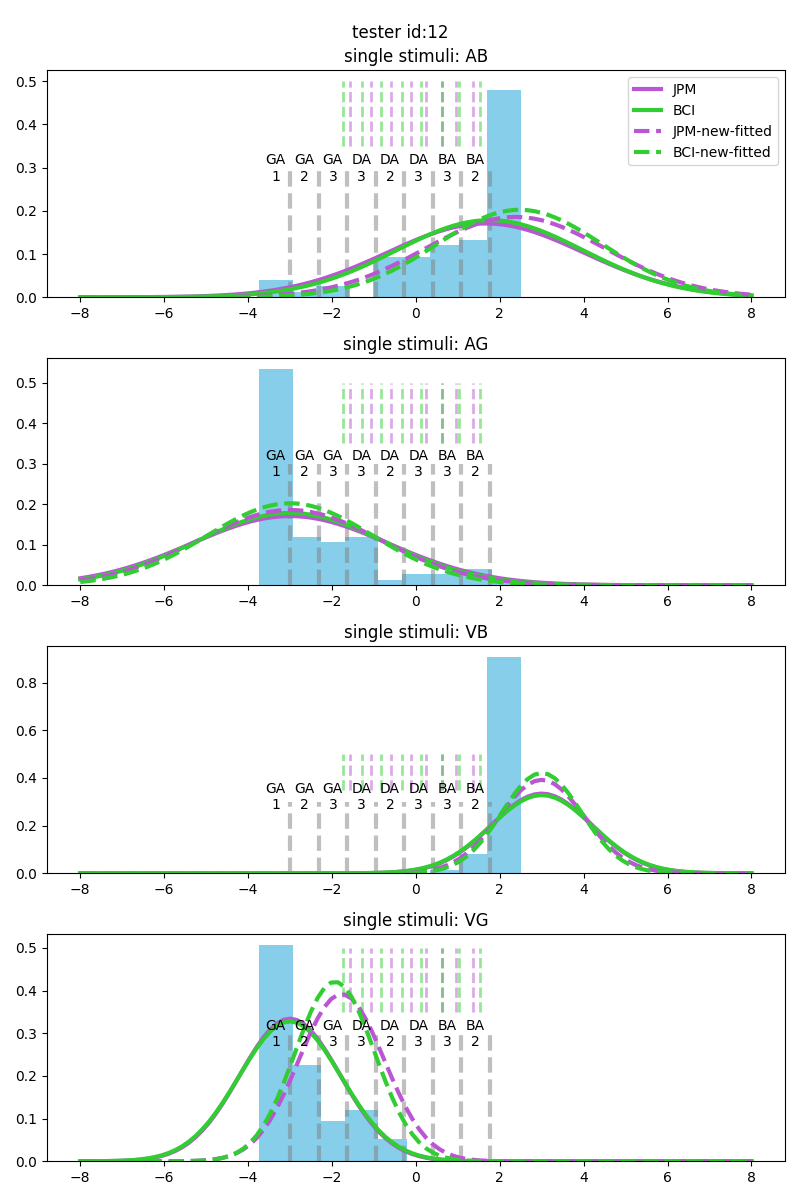

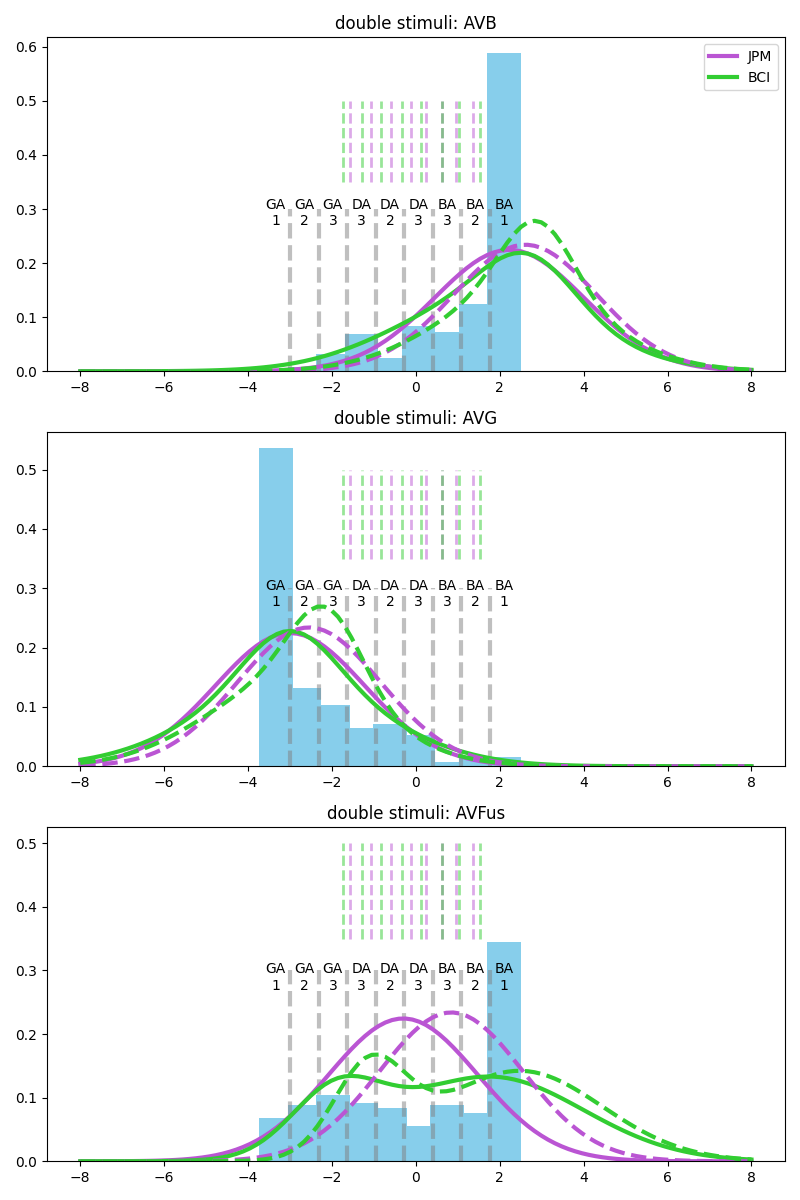

- Use the best parameters from the fitting process, generate 25*3 samples for single stimuli (only take the high quality for visual stimuli and low quality for audio stimuli), and 25*5*2 samples for fusion stimuli for only Vhigh-Alow snr. The sample size here are set to match the original total size of the responses.

- Assign the samples into 9 categories, the boundaries are set by evenly dividing from

$\mu_{ag}$ to$\mu_{ab}$ . - Store the counts and probabilities as the similar format as the original data responses.

Fitted models with tester No.12; samples are generated from BCI model.

The score for evaluating the model is the value of negative log likelihood. Here we compute two types of negative log likelihood, 1) only for fusion condition, 2) the sum of all conditions.

$$

s_{M_{fusion}} = -log\mathcal{L_{fusion}} \

s_M = -\sum_{c\in{C}}log\mathcal{L_c}

$$

where

According to the definition of likelihood, the larger the likelihood is, the better the model is. In our case, with the negative log likelihood, the smaller the

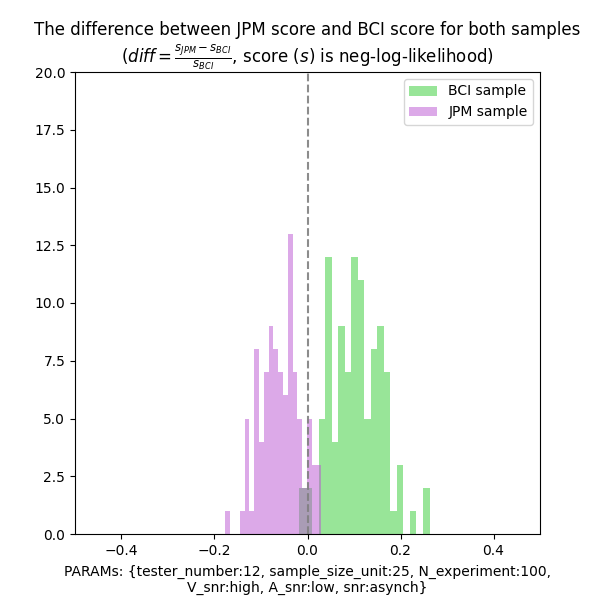

The first method we use to comparison the results over 2 models is computing the difference between the score from 2 models.

We define the new comparison factor

The plot below shows the distribution of

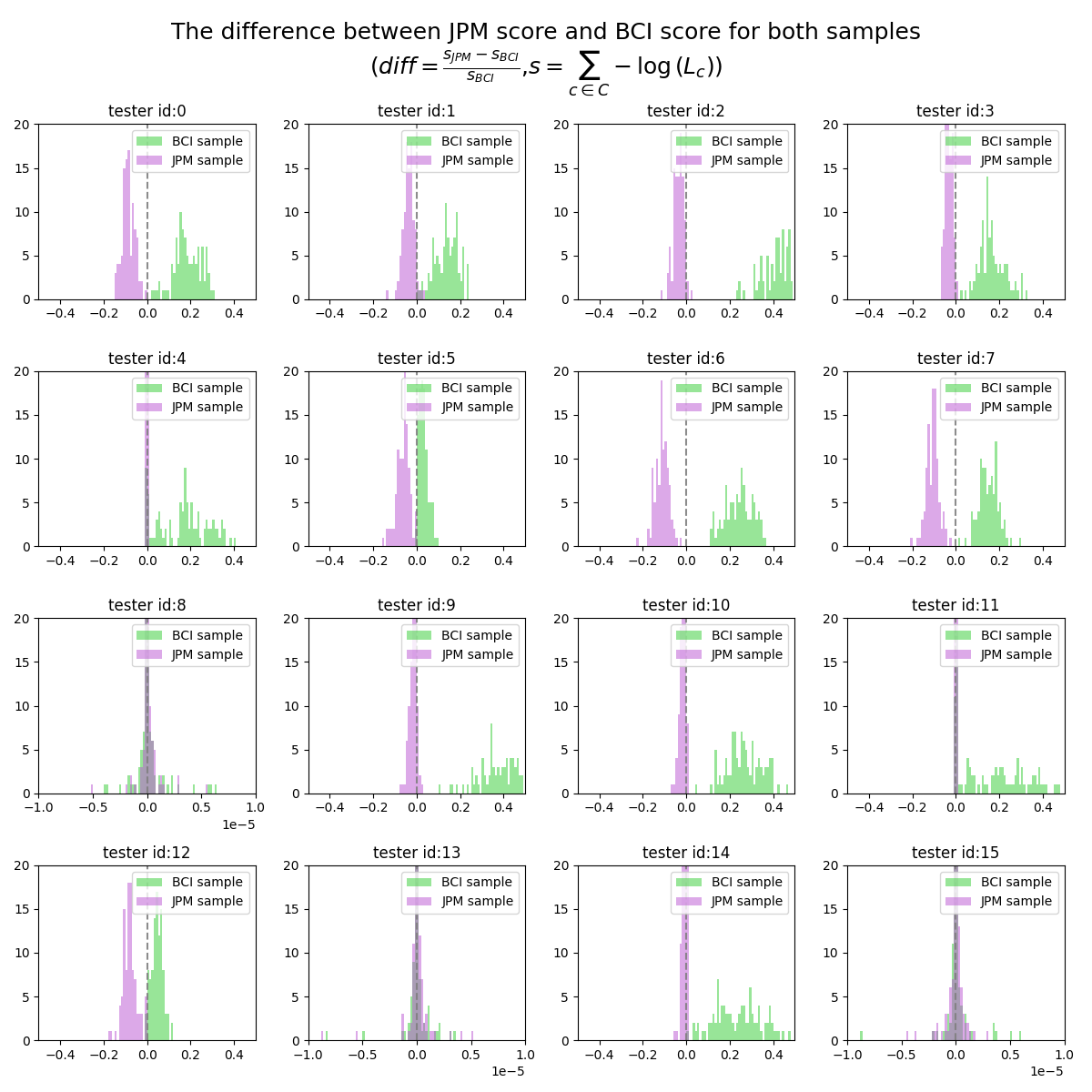

The following 2 plots shows the

Some key observations:

-

$s_M$ separates the 2 parts of distributions better than $s_{M_{fusion}} , which could be observed from section 5.2.4 as well - In general, for BCI samples, BCI model fitting outperforms JPM fitting; for JPM samples, JPM model fitting outperformed BCI model fitting. What worth mentioning is that , the degree of outperforming is much more significant for BCI sampling, which might indicates the BCI model might be closer to the human audio-visual perception.

- There are some testers (8,13,15), the

$diff$ values are very similar for both models. What need to notice is that for these testers we use different x axis scale(1e-5).

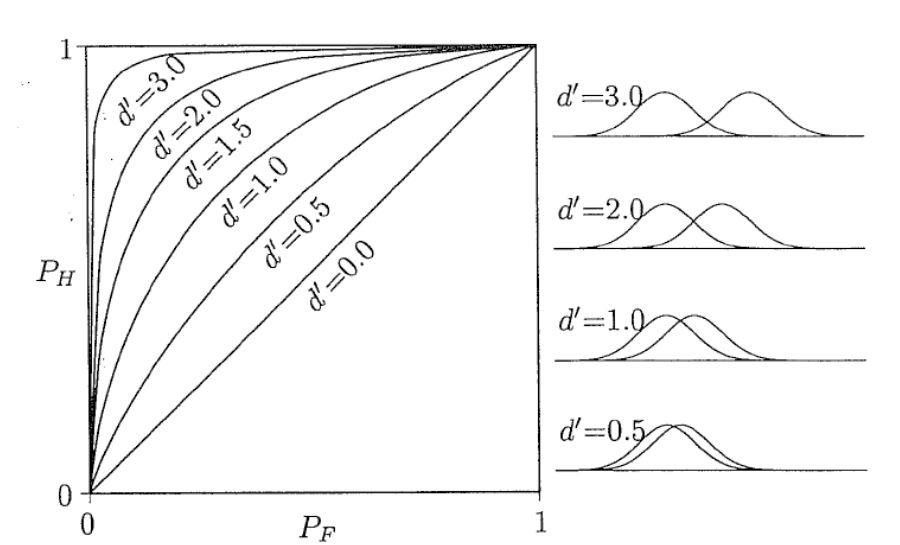

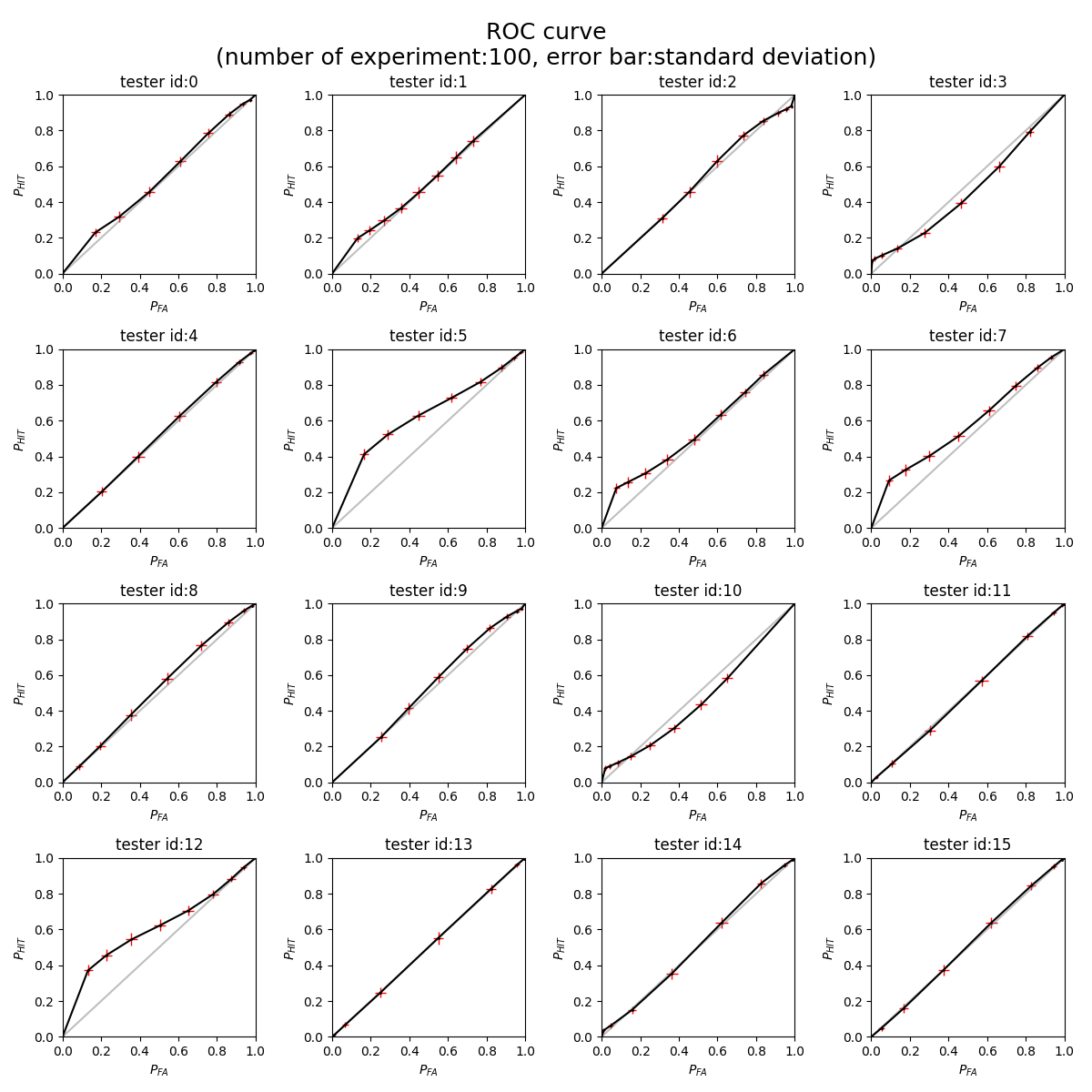

Another way of analyzing the refitting result is simply checking the ROC curve. From signal detection theory, the ROC curve is used for measure the sensitivity between two signals (or one signal and one noise). Just as the plot shows below, when curve is more close to the diagonal, two signals are more similar to each other.

The plot below shows the ROC curve for 16 tester.

We could observe from this plot that for most of the tester, the 2 fitted model are quite similar with each other, just like the plot we show before {plots needed(the fitted distribution for every tester)}. What worth mentioning is the tester no.5,6,7 and 12 are quite different from the others. They show a 'peak' with low

ROC curve in Gaussian coordinates could not only help us to measure the sensitivity (d') at one glimpse (with even covariance, d' is the intercept of the fitted line), but also reveal whether the signals are both gaussian distributed. If both signals are gaussian distributed, then the nodes should be linear distributed.

Below the plot shows the ROC in Gaussian coordinates for 16 testers. It could be seen that tester No.2,3,5,6,7,10,12 are clearly having non-Gaussian distribution for BCI model.

The last method we used for analyzing the difference between 2 fitted model is significance test. The idea is to exam how confident we are with the statement about Model A 'wins' Model B. The null hypothesis are:

- for JPM samples, JPM model works less better than BCI model

- (also the other way around) for BCI samples, BCI model works less better than JPM model.

Notice that the null hypothesis is the statement that we'd like to prove against.

We are going to generate one set of samples for each tester from both fitted distributions, then using both model to fit the stimulated data again. After this the

The above experiment would be done for 100 times. By collecting all the p-value from 100 experiments, the hypothesis could be well tested.

$H_{a_{0}}$ : For samples generated from JPM,$s_{JPM} > s_{BCI}$ , which equals to$s_{JPM} - s_{BCI} > 0$

$H_{b_0}$ : For samples generated from BCI,$s_{JPM} < s_{BCI}$ , which equals to$s_{JPM} - s_{BCI}<0$

Notice that both

as for each experiment, sample_size < 30, so considering using t-test.

-

$s_M$ , alpha = 0.01- H_a_0, p-value less then 0.01:92

- H_b_0, p-value less then 0.01:100

-

$s_M$ ,alpha = 0.05- H_a_0, p-value less then 0.05:95

- H_b_0, p-value less then 0.05:100

-

$s_{M_{fusion}}$ ,alpha=0.01- H_a_0, p-value less then 0.01:0

- H_b_0, p-value less then 0.01:98

-

$s_{M_{fusion}}$ ,alpha=0.05- H_a_0, p-value less then 0.05:12

- H_b_0, p-value less then 0.05:100

-

$s_{M_{fusion}}$ ,alpha=0.1- H_a_0, p-value less then 0.05:31

- H_b_0, p-value less then 0.05:100

- Two ways for examination. A) as what we did this week. B) test the roc (roc also could be used for testing other distributions) .

- the examination on fitting performance

- exam on 16(?) tester {at least on 5,6,7,12, maybe also 0,1,2,3,10}

- do it for 100 experiment

- ROC

- not like the A method, we don't need to fit the model again (from what I understand). Instead, we only sampled from the distribution, and then compute the proportions and take the inversed value for Gaussian coordinate

- This is for examining whether the distribution is Gaussian or not

- question: what is the noise

- *updated: error bar

- *updated: 100 trails with error bar with all 16 testers

- the examination on fitting performance

- Try with only neg log for fusion. (done)

- Increase or decrease the sample size unit and see what is the threshold for distinguish the two models

(updated on 6.15)

-

statistics on 16 testers

for each tester, run one experiment from BCI and JPM samples, and compute the p-value for the hypothesis that one model wins.

do this for 100 trails.

{plan for 6.22

-

to-do-list 4. statistics, about how to define hypothesis and how to get the p-value

-

ROC 100 trails + error bar

-

write the report Phase 1& 2, overleaf}