Ning Yu, Ke Li, Peng Zhou, Jitendra Malik, Larry Davis, Mario Fritz

ECCV 2020

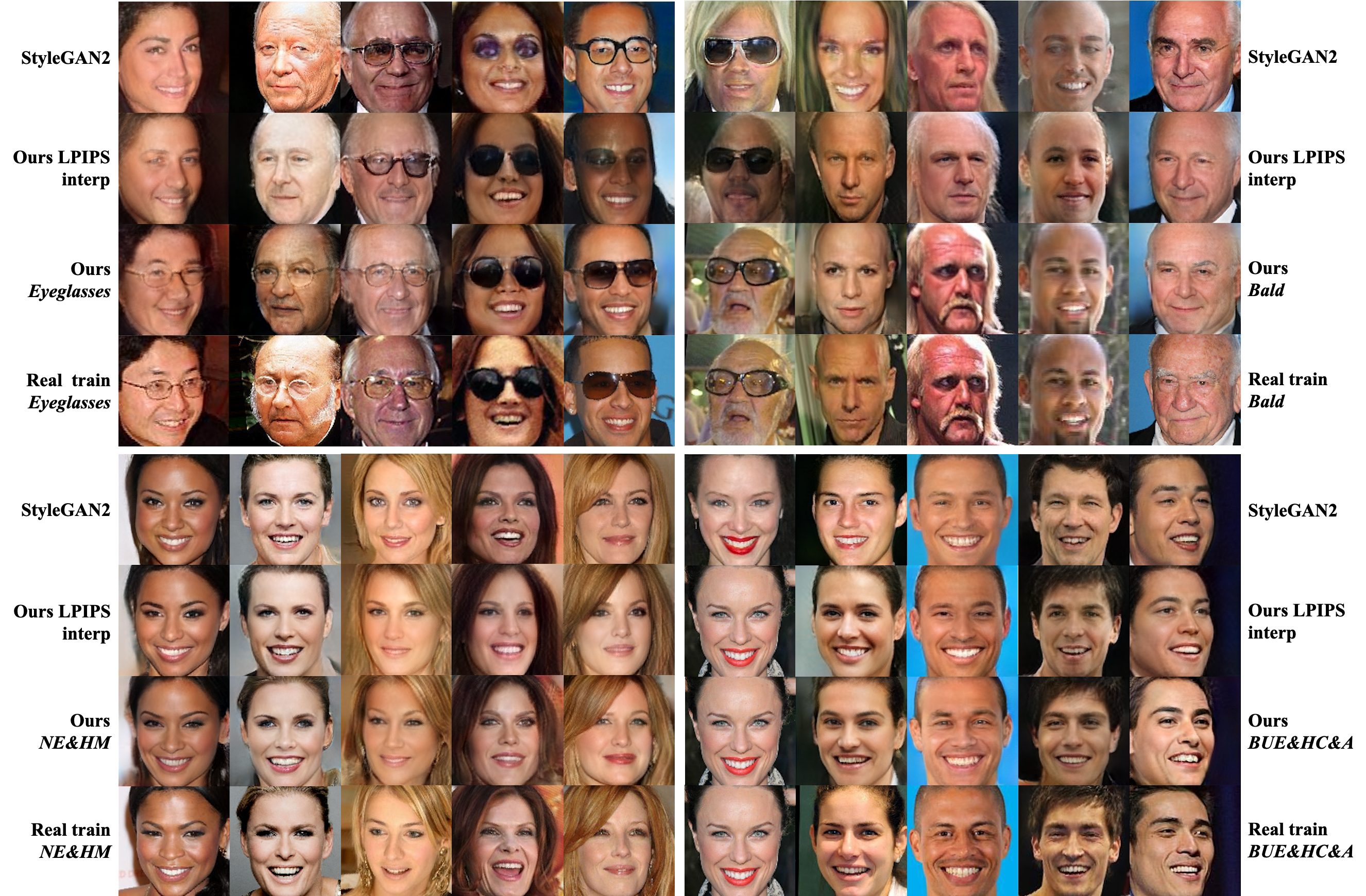

The 1st column: A real image from the majority subgroup, which the beginning frame reconstructs

The 2nd column: StyleGAN2

The 3rd column: Ours general

The 4th column: Ours minority inclusion

The 5th column: A real image from the minority subgroup, which the end frame reconstructs

- Eyeglasses

- Bald

- Narrow_Eyes&Heavy_Makeup

- Bags_Under_Eyes&High_Cheekbones&Attractive

Each comparison shows, from left to right: Majority real, StyleGAN2, Ours general, Ours minority, Minority real.

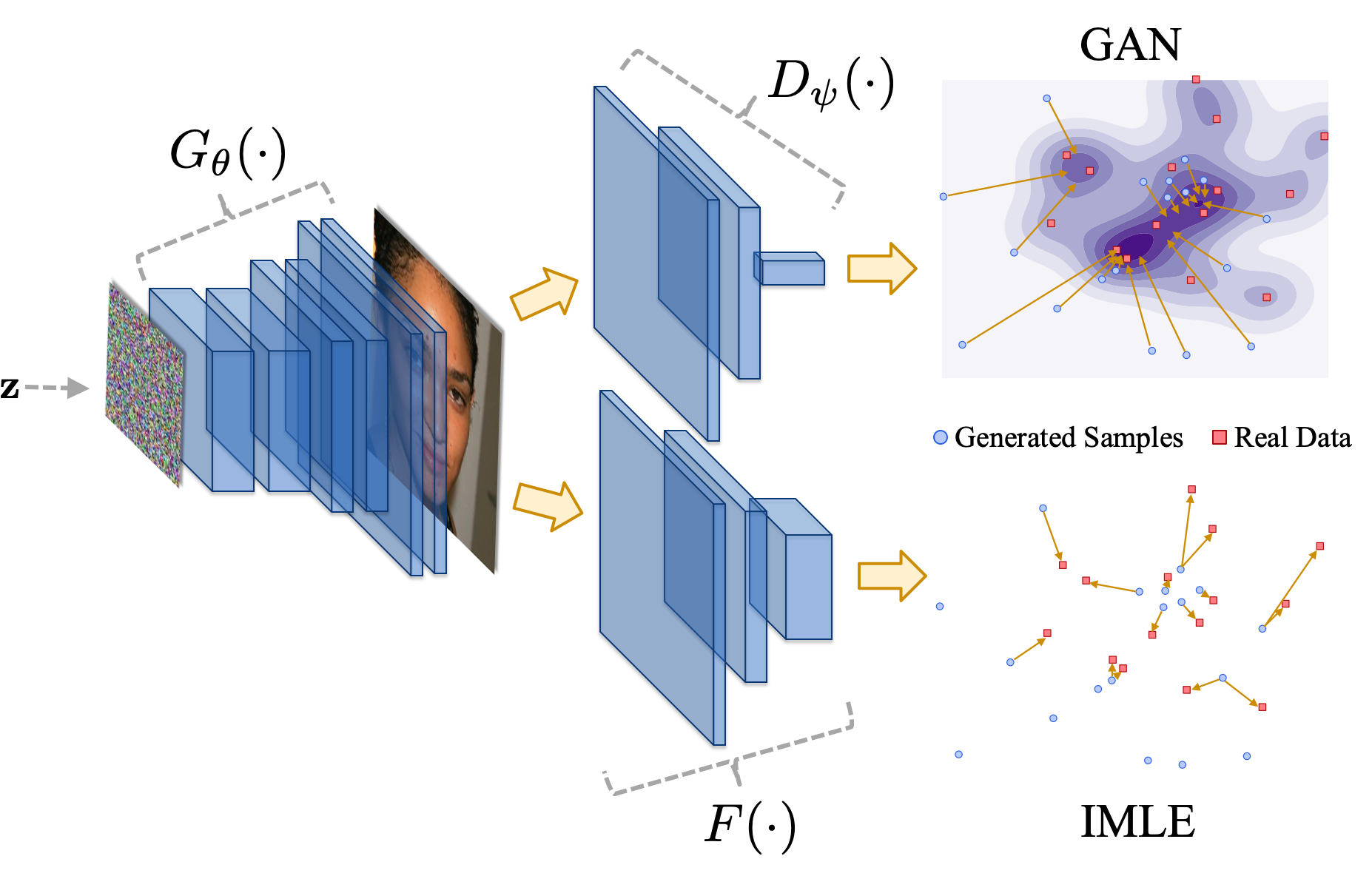

Generative Adversarial Networks (GANs) have brought about rapid progress towards generating photorealistic images. Yet the equitable allocation of their modeling capacity among subgroups has received less attention, which could lead to biases against underrepresented minorities if left uncontrolled. In this work, we first formalize the problem of minority inclusion as one of data coverage, and then propose to improve data coverage by harmonizing adversarial training with reconstructive generation. The experiments show that our method outperforms the existing state-of-the-art methods in terms of data coverage on both seen and unseen data. We develop an extension that allows explicit control over the minority subgroups that the model should be sure to include, and validate its effectiveness with little compromise to the overall performance on the entire dataset.

- Linux

- NVIDIA GPU + CUDA 10.0 + CuDNN 7.5

- Python 3.6

- tensorflow-gpu 1.14

- To install the other Python dependencies, run `pip3 install -r requirements.txt`.

- DCI for fast kNN search. Follow the instructions in `dci_code/Makefile` to specify the paths to BLAS, Python, and NumPy in the file, and build the DCI Python interface.

- Precision and Recall calculation. Run `pip3 install -r precision-recall-distributions/requirements.txt`.

We experiment on two datasets:

- Preliminary study on Stacked MNIST dataset. We synthesize 240k images by stacking the RGB channels with random MNIST images, resulting in 1,000 discrete modes (10 digit modes for each of the 3 channels). We zero-pad the image from size 28x28 to size 32x32. To prepare the dataset, first download the MNIST .gz files to `mnist/`, then run

  `python3 dataset_tool.py create_mnistrgb datasets/stacked_mnist_240k mnist --num_images 240000`

  where `datasets/stacked_mnist_240k` is the output directory containing the data in a prepared format that enables efficient streaming for our training.

- Main study including minority inclusion on CelebA dataset. We use the first 30k images and crop them centered at (x,y) = (89,121) with size 128x128. To prepare the dataset, first download and unzip the CelebA aligned png images to `celeba/Img/`, then run

  `python3 dataset_tool.py create_celeba datasets/celeba_align_png_cropped_30k celeba/Img/img_align_celeba_png --num_images 30000`

  where `datasets/celeba_align_png_cropped_30k` is the output directory containing the data in a prepared format that enables efficient streaming for our training, and `celeba/Img/img_align_celeba_png` is the input directory containing CelebA png files.
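As an illustration of the Stacked MNIST construction described above, here is a minimal plain-Python sketch. It is not the code in `dataset_tool.py`: the helper names `zero_pad` and `stack_random_digits` are hypothetical, the padding is assumed to be split evenly on each side, and the mode encoding (one decimal place per channel) is just one convenient way to index the 1,000 modes.

```python
import random

PADDED_SIZE = 32  # target size stated in the text (28x28 -> 32x32)


def zero_pad(img, size=PADDED_SIZE):
    """Zero-pad a square grayscale image (list of rows) to size x size.

    Assumption: the padding is split evenly between the two sides.
    """
    before = (size - len(img)) // 2
    after = size - len(img) - before
    rows = [[0] * before + list(row) + [0] * after for row in img]
    top = [[0] * size for _ in range(before)]
    bottom = [[0] * size for _ in range(after)]
    return top + rows + bottom


def stack_random_digits(digits, labels, rng):
    """Pick three random digits and use them as the R, G, and B channels.

    Returns the stacked image as rows of [r, g, b] pixels, plus a mode
    index in [0, 999] built from the three digit labels.
    """
    picks = [rng.randrange(len(digits)) for _ in range(3)]
    r, g, b = (zero_pad(digits[i]) for i in picks)
    image = [[[r[y][x], g[y][x], b[y][x]] for x in range(PADDED_SIZE)]
             for y in range(PADDED_SIZE)]
    # Hypothetical mode encoding: one decimal place per channel.
    mode = 100 * labels[picks[0]] + 10 * labels[picks[1]] + labels[picks[2]]
    return image, mode


# Toy stand-ins for real MNIST digits: constant 28x28 images.
digits = [[[value] * 28 for _ in range(28)] for value in (50, 150, 250)]
labels = [3, 7, 9]
image, mode = stack_random_digits(digits, labels, random.Random(0))
print(len(image), len(image[0]), len(image[0][0]), mode)
```

With 10 possible labels per channel and 3 channels, the mode index ranges over 10 x 10 x 10 = 1,000 values, which matches the mode count quoted above.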

- For Stacked MNIST, run, e.g.,

  `python3 run_training.py --data-dir=datasets --config=config-e-Gskip-Dresnet --num-gpus=2 --metrics=mode_counts_24k,KL24k --dataset=stacked_mnist_240k --result-dir=results/stacked_mnist_240k --data-size=240000`

  where

  - `metrics`: Evaluation metric(s). `mode_counts_24k` counts the digit modes (max 1,000) covered by 24k randomly generated samples. `KL24k` measures their KL divergence to the uniform distribution. The evaluation results are saved in `results/stacked_mnist_240k/metric-mode_counts_24k.txt` and `results/stacked_mnist_240k/metric-KL24k.txt` respectively.
  - `result-dir` contains model snapshots `network-snapshot-*.pkl`, real samples `arb-reals.png`, randomly generated samples `arb-fakes-*.png` at different snapshots, real samples for IMLE reconstruction `rec-reals.png`, generated samples `rec-fakes-*.png` for those reconstructions at different snapshots, log file `log.txt`, tensorboard plots `events.out.tfevents.*`, and so on.

- For CelebA, run, e.g.,

  `python3 run_training.py --data-dir=datasets --config=config-e-Gskip-Dresnet --num-gpus=2 --metrics=fid30k --dataset=celeba_align_png_cropped_30k --result-dir=results/celeba_align_png_cropped_30k --data-size=30000 --attr-interesting=Bags_Under_Eyes,High_Cheekbones,Attractive`

  where

  - `metrics`: Evaluation metric(s). `fid30k` measures the Fréchet inception distance between 30k randomly generated samples and 30k (entire) real samples. The evaluation result is saved in `results/celeba_align_png_cropped_30k/metric-fid30k.txt`.
  - `attr-interesting`: The interesting CelebA attribute(s) (separated by commas without spaces) of a minority subgroup. For the list of attributes, refer to `celeba/Anno/list_attr_celeba.txt`. If this argument is omitted, the entire dataset is considered to be reconstructed by IMLE.
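Two ideas from the training options above can be sketched in plain Python: how a mode count and a KL divergence to the uniform distribution could be computed from mode labels, and how a minority subgroup could be picked out of the CelebA annotation file. Both functions are hypothetical illustrations, not the repository's implementation. They assume the natural logarithm, a KL divergence taken from the empirical mode distribution to the uniform one, and a subgroup defined as the images that carry every listed attribute. How each generated sample is assigned a mode label is outside this sketch.

```python
import math
from collections import Counter


def mode_counts_and_kl(modes, num_modes=1000):
    """Return (#distinct modes, KL(empirical || uniform)) for mode labels.

    Assumptions: natural logarithm, and KL taken from the empirical mode
    distribution of the generated samples to the uniform distribution.
    """
    counts = Counter(modes)
    total = len(modes)
    kl = sum((c / total) * math.log((c / total) * num_modes)
             for c in counts.values())
    return len(counts), kl


def select_subgroup(attr_lines, attrs):
    """List image names that carry every attribute in `attrs`.

    `attr_lines` follows the list_attr_celeba.txt layout: an image count,
    a header row of attribute names, then one row per image with the file
    name followed by 1 / -1 flags.
    """
    names = attr_lines[1].split()
    cols = [names.index(a) for a in attrs]
    selected = []
    for line in attr_lines[2:]:
        parts = line.split()
        if parts and all(parts[1 + c] == "1" for c in cols):
            selected.append(parts[0])
    return selected


# Toy mode labels: every one of the 1,000 modes appears exactly once.
covered, kl = mode_counts_and_kl(list(range(1000)))
print(covered, kl)

# Toy annotation file with three attributes instead of CelebA's forty.
attr_lines = [
    "3",
    "Attractive Bags_Under_Eyes High_Cheekbones",
    "000001.jpg  1  1  1",
    "000002.jpg  1 -1  1",
    "000003.jpg -1  1  1",
]
wanted = ["Bags_Under_Eyes", "High_Cheekbones", "Attractive"]
print(select_subgroup(attr_lines, wanted))
```

A generator that covers all modes evenly gives a KL value near zero, while one that collapses to a single mode gives log(1000), so lower is better for `KL24k` under these assumptions.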

- The pre-trained Inclusive GAN models can be downloaded from the links below. Put them under `models/`.

- Fréchet inception distance (FID) calculation. Besides the FID calculation for snapshots during training, we can also calculate FID given any well-trained network and reference real images. Run, e.g.,

  `python3 run_metrics.py --metrics=fid30k --data-dir=datasets --dataset=celeba_align_png_cropped_30k --network=models/celeba_align_png_cropped_30k.pkl --result-dir=fid/celeba_align_png_cropped_30k`

  where

  - `datasets/celeba_align_png_cropped_30k`: The input directory containing the reference real data in a prepared format that enables efficient streaming for evaluation.
  - `result-dir`: The output directory containing the calculation result, log file, and so on.

- Image generation. Run, e.g.,

  `python3 run_generator.py generate-images --network=models/celeba_align_png_cropped_30k.pkl --result-dir=generation/celeba_align_png_cropped_30k --num-images=30000`

  where `result-dir` contains generated samples in png.

- Precision and Recall calculation. Run, e.g.,

  `python3 precision-recall-distributions/prd_from_image_folders.py --reference_dir=celeba/Img/img_align_celeba_png_cropped_30k --eval_dirs=generation/celeba_align_png_cropped_30k/00000-generate-images --eval_labels=test_model`

  where

  - `reference_dir`: The directory containing reference real images in png. Original CelebA aligned images need to be center-cropped at (x,y) = (89,121) with size 128x128 in advance.
  - `eval_dirs`: The directory(ies) containing generated images in png for precision and recall calculation. It allows multiple inputs, each corresponding to one source of generation.
  - `eval_labels`: The label(s) of the source(s) of generation.

  Upon completion, the precision and recall values are printed in the terminal.

- Inference via Optimization Measure (IvOM) calculation. Run, e.g.,

  `python3 run_projector.py project-real-images --data-dir=datasets --dataset=celeba_align_png_cropped_30k --network=models/celeba_align_png_cropped_30k.pkl --result-dir=ivom/celeba_align_png_cropped_30k --num-images=3000`

  where

  - `datasets/celeba_align_png_cropped_30k`: The input directory containing the real query data in a prepared format that enables efficient streaming for reconstruction.
  - `result-dir`: The output directory containing `image*-target.png` as real query images, `image*-step0400.png` as reconstructed images from the well-trained generator, log file, and so on.

  Upon completion, the mean and std of IvOM are printed in the terminal.
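The reference images for the Precision and Recall calculation above need to be center-cropped at (x,y) = (89,121) with size 128x128. The sketch below shows how that crop window could be computed and applied to an image held as nested lists. The helpers `center_crop_box` and `crop` are hypothetical names, not part of this repository, and the toy image merely mimics the 178x218 size of CelebA aligned images.

```python
def center_crop_box(cx=89, cy=121, size=128):
    """Return (left, upper, right, lower) for a size x size crop centered at (cx, cy)."""
    half = size // 2
    return (cx - half, cy - half, cx + half, cy + half)


def crop(image, box):
    """Crop an image stored as a list of pixel rows."""
    left, upper, right, lower = box
    return [row[left:right] for row in image[upper:lower]]


box = center_crop_box()
print(box)  # (25, 57, 153, 185)

# Toy 178x218 (width x height) image whose pixel value encodes its position.
width, height = 178, 218
image = [[(x, y) for x in range(width)] for y in range(height)]
patch = crop(image, box)
print(len(patch), len(patch[0]), patch[0][0])  # 128 128 (25, 57)
```

The box uses the same (left, upper, right, lower) ordering as Pillow's `Image.crop`, so it could be passed to that method when cropping real png files.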

@inproceedings{yu2020inclusive,

author = {Yu, Ning and Li, Ke and Zhou, Peng and Malik, Jitendra and Davis, Larry and Fritz, Mario},

title = {Inclusive GAN: Improving Data and Minority Coverage in Generative Models},

booktitle = {European Conference on Computer Vision (ECCV)},

year = {2020}

}

- This project was partially funded by the DARPA MediFor program under cooperative agreement FA87501620191 and by ONR MURI N00014-14-1-0671. Any opinions, findings, conclusions, or recommendations expressed in this material are those of the authors and do not necessarily reflect the views of DARPA or ONR MURI.

- We acknowledge Richard Zhang and Dingfan Chen for their constructive advice in general.

- We are grateful to the StyleGAN2 repository, as our code was directly modified from it. We also thank the precision-recall-distributions repository for precision and recall calculation.