Probably Approximately Correct (PAC) computational learning theory provides a mathematically rigorous definition of what machine learning is.

So far, we have looked at the steps required to run machine learning algorithms, including data representation, feature selection and engineering, training, prediction, and evaluation of results. In this lesson, we provide a brief overview of Probably Approximately Correct (PAC) learning theory, which provides a foundation for the experimental settings we have looked at so far. In essence, PAC deals with the computational aspect of machine learning. The theory helps us reason about sample complexity (how many examples are needed to learn) and the associated computational complexity, so that we can answer analytical questions about whether, and how efficiently, a problem can be learned.

You will be able to:

- Understand and describe the PAC learning theory

- State how PAC theory relates to computational (machine) learning practices

- Identify the major factors of PAC theory and describe how the error and confidence measures are used to approximate a desired function

PAC is a learning model characterized by learning from examples.

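In its simplest form, consider a target function $f: X \to \{0, 1\}$ that labels each input in an instance space $X$, and a learner that receives $m$ training examples $(x_1, f(x_1)), \dots, (x_m, f(x_m))$.

Here, each input $x_i$ is drawn independently from a fixed but unknown probability distribution $D$ over $X$.

Based on these examples, the learner must succeed in deducing the target function $f$, or a good approximation of it.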

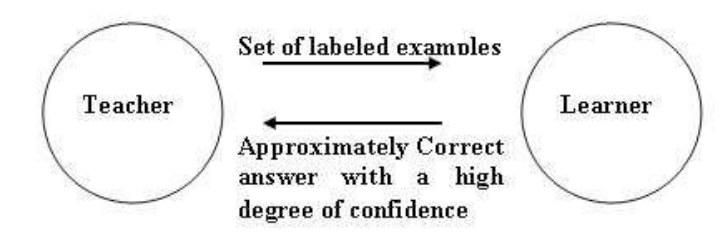

What do we actually mean by success? Success should be defined by a measure, or a series of evaluation steps, that tells us whether the algorithm meets the criteria. This approach can be shown using the model below:

According to PAC, there is always a small probability that the learning algorithm fails and produces inaccurate results. One obvious reason is that the training examples may not fully reflect the probability distribution they are drawn from, i.e. the sample is unrepresentative purely by chance. This can seriously hinder the learning process, and the target function may not be learned accurately.
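The failure probability is small but never zero. To see why, consider a region of the input space that carries probability mass $\epsilon$ under the distribution. The chance that $m$ independent examples all miss that region is $(1-\epsilon)^m$, which is at most $e^{-\epsilon m}$. The snippet below is a minimal sketch of this calculation. The value $\epsilon = 0.05$ and the sample sizes are arbitrary illustrative choices.

```python
import math


def miss_probability(eps: float, m: int) -> float:
    """Probability that m i.i.d. examples all miss a region of probability mass eps."""
    return (1 - eps) ** m


def miss_bound(eps: float, m: int) -> float:
    """Standard upper bound: (1 - eps)^m <= exp(-eps * m)."""
    return math.exp(-eps * m)


# The chance of an unrepresentative sample shrinks exponentially with m.
for m in (10, 50, 100, 500):
    p = miss_probability(0.05, m)
    print(f"m={m:4d}  exact={p:.4f}  bound={miss_bound(0.05, m):.4f}")
```

This exponential decay is what lets PAC trade more examples for a smaller probability of failure.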

The PAC learning approach places an upper bound on the probability of error by placing a lower bound on the number of examples required to learn the target function.
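A standard instance of this lower bound applies when the hypothesis class $H$ is finite and the learner always outputs a hypothesis consistent with every training example (noise-free data). In that case, $m \ge \frac{1}{\epsilon}\left(\ln|H| + \ln\frac{1}{\delta}\right)$ examples suffice for the error to be at most $\epsilon$ with probability at least $1 - \delta$. The sketch below simply evaluates that formula. The class size of 1000 is a made-up example.

```python
import math


def sample_complexity(hypothesis_count: int, eps: float, delta: float) -> int:
    """Examples sufficient for a consistent learner over a finite hypothesis class.

    Evaluates m >= (1/eps) * (ln|H| + ln(1/delta)); assumes noise-free data.
    """
    if not (0 < eps < 1 and 0 < delta < 1):
        raise ValueError("eps and delta must lie in (0, 1)")
    return math.ceil((math.log(hypothesis_count) + math.log(1 / delta)) / eps)


print(sample_complexity(1000, eps=0.1, delta=0.05))   # 100
print(sample_complexity(1000, eps=0.05, delta=0.05))  # 199
```

Halving $\epsilon$ roughly doubles the number of examples needed. Tightening $\delta$ costs only logarithmically.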

In PAC, we do not expect the learner to learn the exact function, because of limits on the available examples, noise in the data, and so on. Instead, we expect the learner to find a function $h$ that approximates $f$ closely, with high probability.

These two factors, the closeness of the approximation and the probability of achieving it, explain what success means.

"Probably": if the learner outputs a good hypothesis with the desired probability (confidence), the results are probably correct.

"Approximately": a hypothesis is approximately correct if its error over the distribution of inputs is bounded by some predefined tolerance.

The results of PAC learning are therefore called "Probably Approximately Correct".

Based on these, PAC gives:

- an upper bound $\epsilon$ on the error with which $h$ approximates $f$, and

- an upper bound $\delta$ on the probability of failing to achieve this accuracy.

With these quantities, we can express the definition of a PAC algorithm with more mathematical clarity.

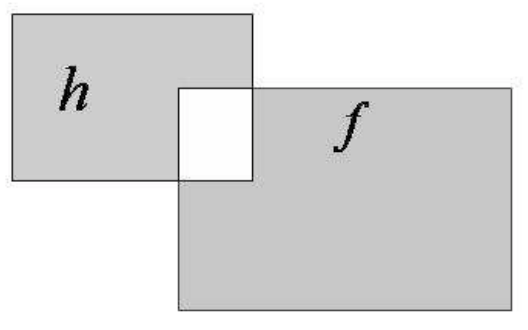

For PAC learnability, the learner must find a concept $h$ such that the error between $h$ and $f$ is within an acceptable range, with a predefined level of confidence.
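One common textbook way to write this condition is shown below. It assumes the learner outputs a hypothesis $h_S$ after seeing a sample $S$ of $m$ examples drawn independently from a distribution $D$. The term $\text{error}_D(h_S)$ denotes the probability that $h_S$ and $f$ disagree on a fresh input drawn from $D$.

$$
\Pr_{S \sim D^m}\big[\, \text{error}_D(h_S) \le \epsilon \,\big] \;\ge\; 1 - \delta,
\qquad
\text{error}_D(h) = \Pr_{x \sim D}\big[\, h(x) \ne f(x) \,\big]
$$

In the usual formulation, this must hold for every target $f$ in the concept class, every distribution $D$, and every $0 < \epsilon, \delta < 1$. The required $m$ must grow at most polynomially in $1/\epsilon$ and $1/\delta$.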

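The notion of approximation is described as follows.

There is some probability distribution $D$ over the input space $X$ from which the examples are drawn.

The distribution $D$ is used both to generate the training examples and to measure the error of a hypothesis: $\text{error}_D(h) = \Pr_{x \sim D}[h(x) \ne f(x)]$, the probability that $h$ and $f$ disagree on an input drawn from $D$.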

A PAC learning algorithm must be independent of the underlying distribution, i.e. its guarantee has to hold for every distribution over the inputs, not just for a particular one.
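Distribution independence matters because the same hypothesis can look good under one distribution and poor under another. The sketch below estimates the true error of a hypothesis $h$ against a target $f$ by Monte Carlo sampling, i.e. the fraction of sampled inputs on which $h(x) \ne f(x)$. It does this under two different distributions. The threshold functions and both distributions are hypothetical choices made only for illustration.

```python
import random


def target(x: float) -> int:
    # Hypothetical target f: positive half-line with threshold 0.5.
    return 1 if x >= 0.5 else 0


def hypothesis(x: float) -> int:
    # Hypothetical learned h: its threshold is slightly off, at 0.6.
    return 1 if x >= 0.6 else 0


def uniform_01(rng: random.Random) -> float:
    # D1: uniform on [0, 1).
    return rng.random()


def near_boundary(rng: random.Random) -> float:
    # D2: all probability mass on [0.45, 0.65], close to the decision boundary.
    return rng.uniform(0.45, 0.65)


def estimate_error(h, f, draw, n: int = 100_000, seed: int = 0) -> float:
    """Monte Carlo estimate of Pr_{x ~ D}[h(x) != f(x)], where draw(rng) samples x from D."""
    rng = random.Random(seed)
    mistakes = 0
    for _ in range(n):
        x = draw(rng)
        if h(x) != f(x):
            mistakes += 1
    return mistakes / n


print(estimate_error(hypothesis, target, uniform_01))     # about 0.1
print(estimate_error(hypothesis, target, near_boundary))  # about 0.5
```

The two hypotheses disagree only on $[0.5, 0.6)$. That interval has mass 0.1 under the uniform distribution but mass 0.5 under the concentrated one.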

Visit this link for detailed problem definitions, with mathematical proofs and the intuitions behind some PAC theorems. The document covers the following problems, all of which are PAC learnable:

- Learning positive half-lines

- Learning intervals

- Learning axis-aligned rectangles
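As a concrete taste of the first problem, here is a minimal simulation of learning a positive half-line $f(x) = 1$ if $x \ge \theta$. It uses a tightest-fit learner that sets its threshold to the smallest positively labelled example. The sketch assumes the inputs are uniform on $[0, 1]$, so the true error is just the length of $[\theta, \text{threshold})$. It also assumes $m = \lceil \frac{1}{\epsilon}\ln\frac{1}{\delta} \rceil$, which is the sample size the standard argument gives for this problem. The values of $\theta$, $\epsilon$, and $\delta$ are arbitrary.

```python
import math
import random


def learn_half_line(examples):
    """Tightest-fit learner: threshold = smallest positively labelled x (inf if none)."""
    positives = [x for x, y in examples if y == 1]
    return min(positives) if positives else math.inf


def failure_rate(theta, eps, delta, trials=2000, seed=1):
    """Fraction of trials in which the learned threshold has true error > eps.

    Assumes D is uniform on [0, 1], so the error is the length of [theta, threshold).
    """
    rng = random.Random(seed)
    m = math.ceil(math.log(1 / delta) / eps)  # m >= (1/eps) * ln(1/delta)
    failures = 0
    for _ in range(trials):
        xs = [rng.random() for _ in range(m)]
        examples = [(x, int(x >= theta)) for x in xs]
        threshold = learn_half_line(examples)
        true_error = min(threshold, 1.0) - theta
        if true_error > eps:
            failures += 1
    return m, failures / trials


m, rate = failure_rate(theta=0.3, eps=0.05, delta=0.1)
print(m, rate)
```

With these settings, $m = 47$. The exact failure probability is $0.95^{47} \approx 0.09$, so the empirical rate should land near that value, below $\delta = 0.1$.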

PAC uses the term 'hypothesis', whereas the ML literature normally uses the term 'model'. As we have seen, machine learning starts with some data $(x_i, y_i)$, and we want to find a hypothesis (or model) that, given the inputs $x_i$, returns $y_i$ or something very close to it. More importantly, given new data $\tilde{x}$, the model should predict the corresponding $\tilde{y}$.

A model built from a sample of the data (out of many possible examples) will not reflect the underlying data perfectly, but it can still be accurate on future data. Two points are important here. First, we cannot predict new data with 100% accuracy (the "approximately" part). Second, there is always a chance that one or more of the examples are poor and do not explain much (the "probably" part).

We present our data to the learner and let it attempt to learn the hypothesis function. At discrete stages, we then measure the error as the difference between the outputs of the real (target) function and the hypothesis function. A problem is called learnable if we can reduce this error to within acceptable bounds.
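To watch the error shrink as the learner sees more data, the sketch below uses the "learning intervals" problem from the list of PAC-learnable problems. It applies a tightest-fit learner that outputs the smallest interval containing every positive example. It assumes a hypothetical target interval $[0.2, 0.6]$ and inputs drawn uniformly from $[0, 1]$. Under those assumptions the learned interval always sits inside the target, so the true error is the target's length minus the learned interval's length.

```python
import random


def learn_interval(examples):
    """Tightest-fit learner: smallest interval containing every positive example."""
    positives = [x for x, y in examples if y == 1]
    if not positives:
        return None  # no positives seen: predict 0 everywhere
    return min(positives), max(positives)


def true_error(learned, a, b):
    """Error under D = uniform on [0, 1]; the learned interval always lies inside [a, b]."""
    if learned is None:
        return b - a
    lo, hi = learned
    return (b - a) - (hi - lo)


def average_error(m, a=0.2, b=0.6, trials=500, seed=2):
    """Average true error of the tightest-fit learner over many training sets of size m."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(trials):
        xs = [rng.random() for _ in range(m)]
        examples = [(x, int(a <= x <= b)) for x in xs]
        total += true_error(learn_interval(examples), a, b)
    return total / trials


for m in (5, 20, 100, 500):
    print(m, round(average_error(m), 4))
```

The average error falls steadily as $m$ grows, which is the behaviour "learnable" asks for.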

So that is PAC. It provides the theoretical and mathematical underpinning for the different stages of machine learning that we have been looking at in this course. It justifies splitting data into training and test sets, since what we care about is the error on unseen examples drawn from the same distribution. It also gives a reason to tune hyperparameters, since the learner cannot rely on any particular data distribution. Finally, it provides grounds for the evaluation methods we have seen, including RMSE, ROC, and AUC, which we use to check that our model is learning and approximating the desired function.

A deeper understanding of PAC requires working through examples, with proofs of how PAC learnability is established. You are encouraged to visit the following resources, which elaborate on the points highlighted in this lesson.

In this lesson, we looked at how PAC learning allows us to develop, train, and evaluate models in a computational learning domain. We developed a basic intuition behind this theory and saw how it relates to the different stages of model development that we have been looking at. You are encouraged to read further into this theory and to relate the upcoming architectures to it, to help you develop a sound and scalable understanding of ML from theory to practice.