
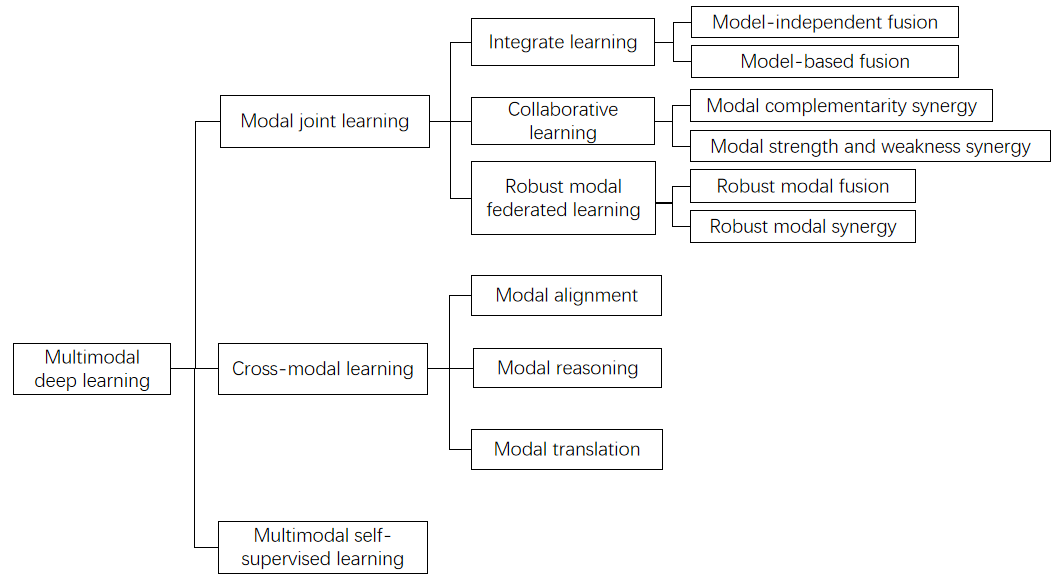

OMML is mainly based on PyTorch and is compatible with a Paddle version. It aims to provide model libraries for modal joint learning and cross-modal learning, offering efficient solutions for processing multi-modal data such as images and text, and thereby promoting applications of multi-modal machine learning.

Group of OMML:

- NJUST KMG Group

| Yangyang | Guoweili | |
|---|---|---|
| Baoran | Zhangyuxuan | Xiwenjuan |

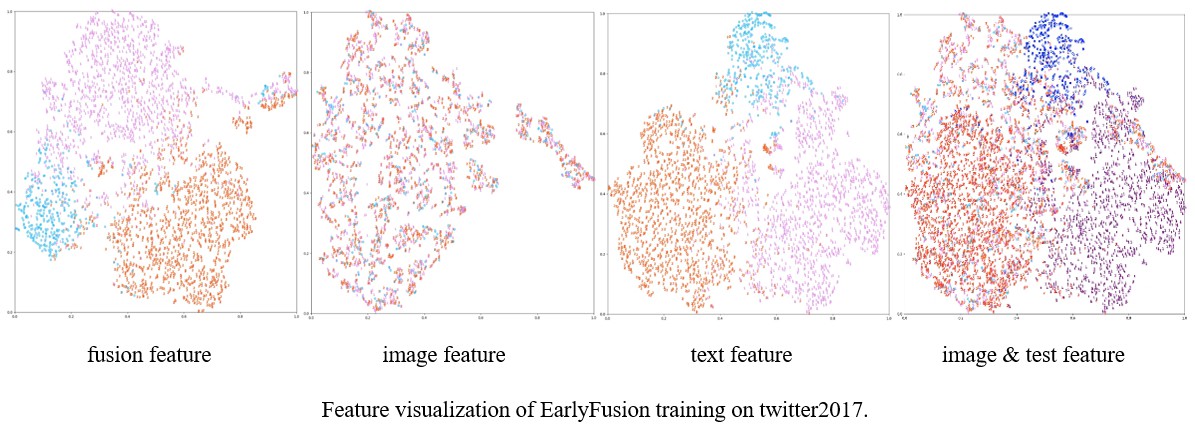

- Add visualization TSNE of fusion task 2023.1.14

- Add model DOMFN 2022.11.17

- Add model CPRC 2022.11.14

- Add model TMC 2022.5.8

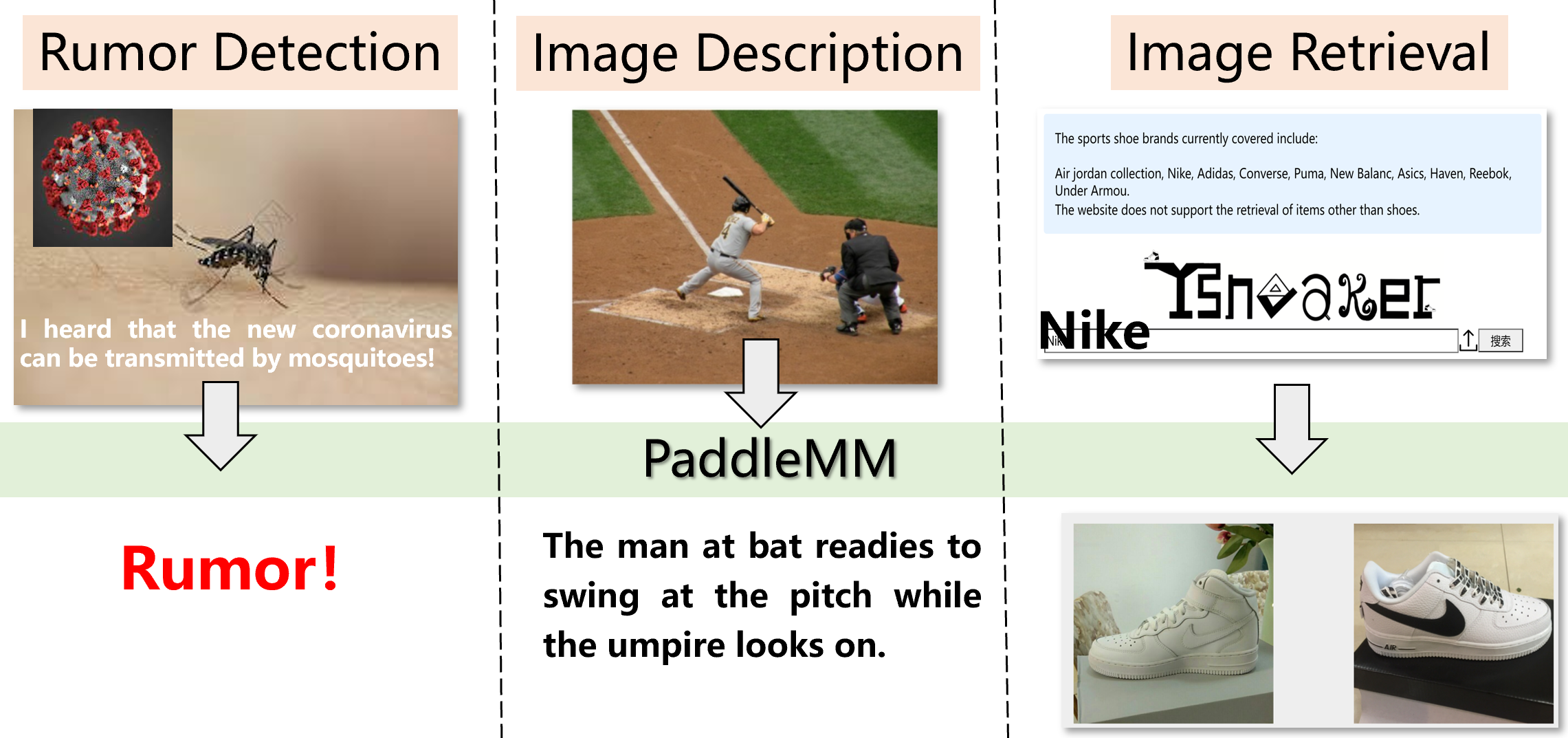

- Multiple task scenarios: PaddleMM provides model libraries for a variety of multi-modal learning tasks, such as multi-modal fusion, cross-modal retrieval, and image captioning, and supports user-defined data and training.

- Successful industrial applications: PaddleMM has been used in practical applications such as sneaker authenticity identification, image description, and rumor detection.

- Sneaker authenticity identification

- More visualization

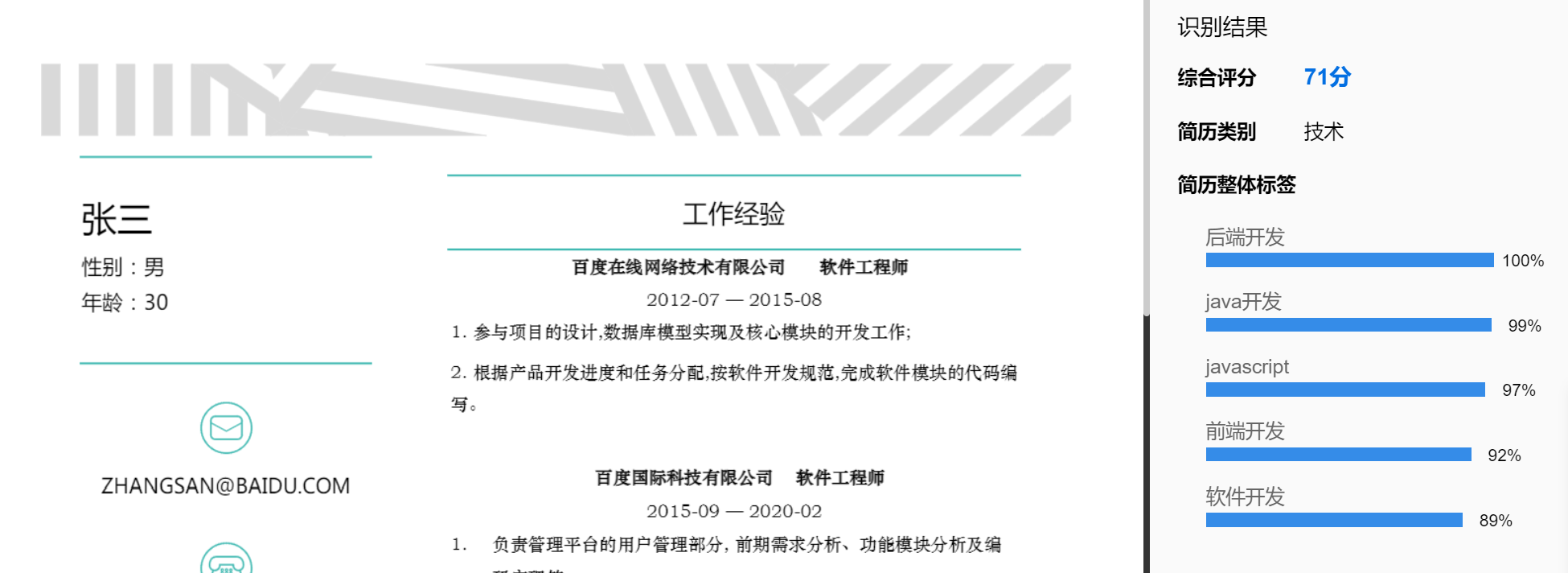

- Cooperation with Baidu TIC on smart recruitment resume analysis, based on a multi-modal fusion algorithm and successfully implemented.

- Feature level:

Example parameter setting: dataset="twitter", data_mode="twitter", visual="tsne", choose="fusion"

File output directory: out_root/tsne

- Model level:

- Indicator level:

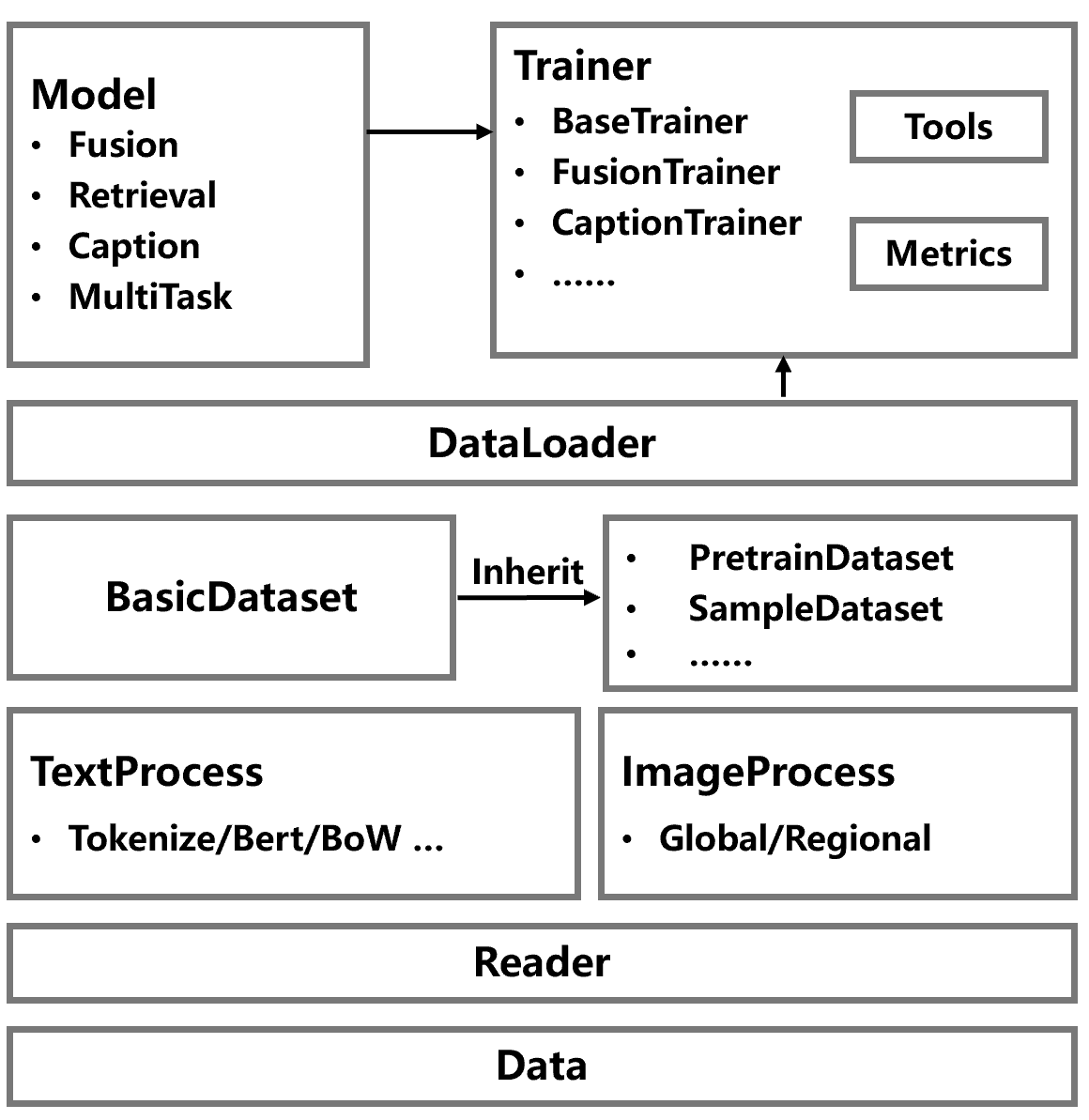

OMML includes a torch version, torchmm, and a paddle version, paddlemm. Each package consists of the following three modules:

- Data processing: Provides a unified data interface and multiple data processing formats.

- Model library: Includes multi-modal fusion, cross-modal retrieval, image captioning, and multi-task algorithms.

- Trainer: Sets up a unified training process and the related score calculations for each task.

Download the toolkit:

```shell
git clone https://github.com/njustkmg/OMML.git
```

```python
from torchmm import TorchMM

# config: Model running parameters, see configs/
# data_root: Path to dataset
# image_root: Path to images
# cuda: Which gpu to use
runner = TorchMM(config='configs/cmml.yml',
                 data_root='data/COCO',
                 image_root='data/COCO/images',
                 out_root='experiment/cmml_torch',
                 cuda=0)

runner.train()
runner.test()
```

or

```shell
python run_torch.py --config configs/cmml.yml --data_root data/COCO --image_root data/COCO/images --out_root experiment/cmml_torch --cuda 0
```
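The command-line flags above mirror the keyword arguments of the Python example. As a rough sketch only (the actual contents of `run_torch.py` are not shown here and may differ), flags of this shape could be parsed with the standard `argparse` module. The function names `build_parser` and `parse_run_args`, the help strings, and the choice of which flags are required are all assumptions made for illustration.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    """Build a parser for the flags shown in the command above (hypothetical)."""
    parser = argparse.ArgumentParser(description="Launcher sketch, not the real script")
    parser.add_argument("--config", required=True, help="Model running parameters")
    parser.add_argument("--data_root", required=True, help="Path to dataset")
    parser.add_argument("--image_root", required=True, help="Path to images")
    parser.add_argument("--out_root", required=True, help="Value passed as out_root")
    parser.add_argument("--cuda", type=int, default=0, help="Which gpu to use")
    return parser


def parse_run_args(argv=None) -> dict:
    """Parse a list of flags into a plain dict keyed by argument name."""
    return vars(build_parser().parse_args(argv))


# Parse the same flags as the command above, given as an explicit list.
example = parse_run_args([
    "--config", "configs/cmml.yml",
    "--data_root", "data/COCO",
    "--image_root", "data/COCO/images",
    "--out_root", "experiment/cmml_torch",
    "--cuda", "0",
])
print(example)
```

Because the dict keys match the keyword names used in the Python example, a launcher written this way could forward them directly, for instance as `TorchMM(**example)`.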

```python
from paddlemm import PaddleMM

# config: Model running parameters, see configs/
# data_root: Path to dataset
# image_root: Path to images
# gpu: Which gpu to use
runner = PaddleMM(config='configs/cmml.yml',
                  data_root='data/COCO',
                  image_root='data/COCO/images',
                  out_root='experiment/cmml_paddle',
                  gpu=0)

runner.train()
runner.test()
```

or

```shell
python run.py --config configs/cmml.yml --data_root data/COCO --image_root data/COCO/images --out_root experiment/cmml_paddle --gpu 0
```

- Modal joint learning - Fusion learning
  - Early (Multi-modal early fusion)
  - Late (Multi-modal late fusion)
  - LMF (Efficient Low-rank Multimodal Fusion with Modality-Specific Factors)
  - TMC (Trusted Multi-View Classification)
- Modal joint learning - Co-training
- Cross-modal learning - Modal translation
- Cross-modal learning - Modal alignment
  - VSE++ (VSE++: Improving Visual-Semantic Embeddings with Hard Negatives)
  - SCAN (Stacked Cross Attention for Image-Text Matching)
  - BFAN (Focus Your Attention: A Bidirectional Focal Attention Network for Image-Text Matching)
  - IMRAM (IMRAM: Iterative Matching with Recurrent Attention Memory for Cross-Modal Image-Text Retrieval)
  - SGRAF (Similarity Reasoning and Filtration for Image-Text Matching)
- Multi-task framework based on Transformer structure
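To make the difference between the Early and Late fusion entries concrete, here is a minimal, dependency-free sketch. It is not OMML's implementation: the linear classifiers, the helper names, and the toy feature and weight values are all made up for illustration. Early fusion concatenates the modality features and applies one classifier; late fusion classifies each modality separately and averages the resulting probabilities.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def linear(features: Sequence[float], weights: Sequence[Sequence[float]]) -> List[float]:
    """One logit per class: dot product of the features with each weight row."""
    return [sum(w * x for w, x in zip(row, features)) for row in weights]


def early_fusion(image_feat, text_feat, joint_weights) -> List[float]:
    """Concatenate modality features, then apply a single joint classifier."""
    fused = list(image_feat) + list(text_feat)
    return softmax(linear(fused, joint_weights))


def late_fusion(image_feat, text_feat, image_weights, text_weights) -> List[float]:
    """Classify each modality on its own, then average the class probabilities."""
    p_img = softmax(linear(image_feat, image_weights))
    p_txt = softmax(linear(text_feat, text_weights))
    return [(a + b) / 2 for a, b in zip(p_img, p_txt)]


# Toy inputs (hypothetical): 2-d image feature, 2-d text feature, 2 classes.
image_feat = [1.0, 0.0]
text_feat = [0.0, 1.0]
image_weights = [[1.0, 0.0], [0.0, 1.0]]
text_weights = [[0.0, 1.0], [1.0, 0.0]]
# Joint classifier: each class row is the image row followed by the text row.
joint_weights = [iw + tw for iw, tw in zip(image_weights, text_weights)]

p_early = early_fusion(image_feat, text_feat, joint_weights)
p_late = late_fusion(image_feat, text_feat, image_weights, text_weights)
print("early fusion:", p_early)
print("late fusion: ", p_late)
```

In this toy setup both modalities favor the first class. Early fusion sums their evidence in logit space before the softmax, whereas late fusion averages two already-normalized predictions, so the early-fusion output is the more confident of the two.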

- Chuan Qin, Hengshu Zhu, Tong Xu, Chen Zhu, Liang Jiang, Enhong Chen, Hui Xiong, Enhancing Person-Job Fit for Talent Recruitment: An Ability-aware Neural Network Approach, In Proceedings of the 41st International ACM SIGIR Conference on Research and Development in Information Retrieval (SIGIR-2018), Ann Arbor, Michigan, USA, 2018.

- Chen Zhu, Hengshu Zhu, Hui Xiong, Chao Ma, Fang Xie, Pengliang Ding, Pan Li, Person-Job Fit: Adapting the Right Talent for the Right Job with Joint Representation Learning, In ACM Transactions on Management Information Systems (ACM TMIS), 2018.

- Dazhong Shen, Hengshu Zhu, Chuan Qin, Tong Xu, Enhong Chen, Hui Xiong, Joint Representation Learning with Relation-enhanced Topic Models for Intelligent Job Interview Assessment, In ACM Transactions on Information Systems (ACM TOIS), 2021.

- Yang Yang, Jia-Qi Yang, Ran Bao, De-Chuan Zhan, Hengshu Zhu, Xiao-Ru Gao, Hui Xiong, Jian Yang. Corporate Relative Valuation using Heterogeneous Multi-Modal Graph Neural Network. IEEE Transactions on Knowledge and Data Engineering (IEEE TKDE), 2021. (CCF-A). Code

- Yang Yang, Hong-Chen Wei, Heng-Shu Zhu, Dian-Hai Yu, Hui Xiong, Jian Yang. Exploiting Cross-Modal Prediction and Relation Consistency for Semi-Supervised Image Captioning. IEEE Transactions on Cybernetics (IEEE TCYB), 2022, in press. (CCF-B). [Pytorch Code] [Paddle Code]

- Yang Yang, De-Chuan Zhan, Yi-Feng Wu, Zhi-Bin Liu, Hui Xiong, and Yuan Jiang. Semi-Supervised Multi-Modal Clustering and Classification with Incomplete Modalities. IEEE Transactions on Knowledge and Data Engineering (IEEE TKDE), 2020. (CCF-A)

- Yang Yang, Chubing Zhang, Yi-Chu Xu, Dianhai Yu, De-Chuan Zhan, Jian Yang. Rethinking Label-Wise Cross-Modal Retrieval from A Semantic Sharing Perspective. Proceedings of the 30th International Joint Conference on Artificial Intelligence (IJCAI-2021), Montreal, Canada, 2021. (CCF-A).

- Yang Yang, Yi-Feng Wu, De-Chuan Zhan, Zhi-Bin Liu, Yuan Jiang. Complex Object Classification: A Multi-Modal Multi-Instance Multi-Label Deep Network with Optimal Transport. Proceedings of the Annual Conference on ACM SIGKDD (KDD-2018), London, UK, 2018. Code

- Yang Yang, Jingshuai Zhang, Fan Gao, Xiaoru Gao, Hengshu Zhu. DOMFN: A Divergence-Orientated Multi-Modal Fusion Network for Resume Assessment. ACM MULTIMEDIA (ACMMM-2022), Lisbon, Portugal, 2022. (CCF-A)

- For more papers, visit our website, njustkmg.

- Paddle Paper Reproduction Competition (4th): "Comprehensive Semi-Supervised Multi-Modal Learning", champion

- Paddle Paper Reproduction Competition (5th): "From Recognition to Cognition: Visual Commonsense Reasoning", champion

- Paddle Paper Reproduction Competition (6th): "Efficient Low-rank Multimodal Fusion with Modality-Specific Factors", champion

- PaddlePaddle reproduction record: link.

- Contributions of code to OMML are welcome, and we greatly appreciate your feedback.

This project is released under the Apache 2.0 license.