EET (Easy and Efficient Transformer) is an efficient PyTorch inference plugin that focuses on Transformer-based models with large model sizes and long sequences.

1. Pre-padding decoding. Pre-padding keeps the relative position embeddings unchanged between the context and the generated sequence, reducing the gap between training and inference. Based on this, we achieve parallel inference for the context and incremental decoding for the generated token sequence.

2. High performance. EET provides highly optimized CUDA kernels, designed with reference to NVIDIA Faster Transformer, that support long sequences as well as large model sizes.

3. Flexible. EET provides op-level and model-level APIs, allowing users to construct their own models or flexibly replace parts of an algorithm.

4. Easy to use. EET can be integrated into Fairseq and Transformers directly by replacing the specified files, without any other code changes.

5. Smart deployment. EET supports dynamic batching and variable input lengths; combined with a Python web framework, it can be deployed smoothly.
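
As a rough illustration of the pre-padding idea in item 1, the sketch below left-pads a batch of variable-length contexts in plain Python. The helper names `pre_pad` and `append_token`, the pad id `0`, and the convention of counting positions from each row's first real token are assumptions made for this example; they are not taken from EET's implementation.

```python
from typing import List, Tuple

PAD_ID = 0  # hypothetical padding token id


def pre_pad(batch: List[List[int]], pad_id: int = PAD_ID) -> Tuple[List[List[int]], List[List[int]]]:
    """Left-pad every sequence to the longest length in the batch.

    Returns the padded token ids and, for each row, position ids that start
    at 0 on the first real token. Padding slots also get position 0 and are
    expected to be masked out by attention.
    """
    max_len = max(len(seq) for seq in batch)
    padded, positions = [], []
    for seq in batch:
        n_pad = max_len - len(seq)
        padded.append([pad_id] * n_pad + list(seq))
        positions.append([0] * n_pad + list(range(len(seq))))
    return padded, positions


def append_token(padded_row: List[int], position_row: List[int], token: int):
    """One incremental decoding step: the new token lands at the right edge."""
    return padded_row + [token], position_row + [position_row[-1] + 1]


if __name__ == "__main__":
    tokens, pos = pre_pad([[11, 12, 13], [21]])
    print(tokens)
    print(pos)
```

Because every row ends at the same column, each decoding step appends one token to every row without per-row offsets, and the positions of real tokens do not depend on how much padding a row received.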

EET has been applied to a variety of NetEase online services, such as NiShuiHan, NetEase Cloud Music, TianYu, and Lofter. In the future, EET will work on ultra-large-scale model inference with trillions of parameters.

| Frameworks | Maximum model size | Maximum sequence length | Performance | Bert | GPT-2 | Op-level | Fairseq support | Transformers support | Dynamic batch & variable inputs |
|---|---|---|---|---|---|---|---|---|---|
| EET | 16384 | 16384 | highest | Y | Y | Y | Y | Y | Y |
| Faster Transformer | Multiples of specific numbers, such as 128, 256, 384, 512 | 1024 | high | Y | Y | N | N | N | N |
| TensorRT | 1024 | 1024 | high | Y | N | N | N | N | N |
| LightSeq | 1024 | 1024 | high | Y | Y | N | N | N | Y |
| TurboTransformer | 1024 | 1024 | medium | Y | Y | N | N | Y | Y |
| ONNX | non-limited | non-limited | slow | Y | Y | Y | N | N | Y |

- cuda: >= 10.1

- python: >= 3.7

- gcc: >= 7.4.0

- torch: >= 1.5.0

- numpy: >= 1.19.1
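
A small script can check the Python-side requirements before building. The sketch below is only a convenience and not part of EET: the function names are hypothetical, it relies on `importlib.metadata` (which itself needs Python 3.8 or later), and it does not check CUDA or gcc because they are not Python packages.

```python
import re
import sys
from importlib import metadata

# Minimum versions copied from the requirements list.
MINIMUMS = {"torch": "1.5.0", "numpy": "1.19.1"}
MIN_PYTHON = (3, 7)


def parse_version(text):
    """Turn '1.7.1+cu110' into (1, 7, 1); non-numeric suffixes are ignored."""
    match = re.match(r"\d+(?:\.\d+)*", text)
    if match is None:
        raise ValueError(f"unrecognized version string: {text!r}")
    parts = tuple(int(part) for part in match.group().split("."))
    # Pad short versions such as '1.5' to three components.
    return parts + (0,) * (3 - len(parts))


def meets_minimum(installed, minimum):
    """Compare two version strings numerically, component by component."""
    return parse_version(installed) >= parse_version(minimum)


def check_requirements(minimums=MINIMUMS):
    """Return {package: (installed_version_or_None, ok)} for each requirement."""
    report = {}
    for name, minimum in minimums.items():
        try:
            installed = metadata.version(name)
        except metadata.PackageNotFoundError:
            report[name] = (None, False)
            continue
        report[name] = (installed, meets_minimum(installed, minimum))
    return report


if __name__ == "__main__":
    print("python ok:", sys.version_info[:2] >= MIN_PYTHON)
    for name, (installed, ok) in check_requirements().items():
        print(f"{name}: installed={installed} ok={ok}")
```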

If you are installing from source, first set up the environment listed above. Then proceed as follows:

$ git clone git@github.com:NetEase-FuXi/EET.git

$ pip install transformers==3.5.0

$ pip install fairseq==0.10.0

$ pip install .

Due to the compilation of a large number of CUDA kernels, installation takes a relatively long time; please be patient.

$ git clone git@github.com:NetEase-FuXi/EET.git

$ cd EET/docker

$ docker build -t your_docker_name:your_docker_version .

$ nvidia-docker run -it --net=host -v /your/project/directory/:/root/workspace your_docker_name:your_docker_version bash

EET is already installed inside the Docker image.

$ cd EET/example

$ python bert_transformers_example.py

$ cd EET/example

$ python gpt2_transformers_example.py

$ cd EET/example

$ python gpt2_fairseq_example.py

We currently support GPT-2 and Bert.

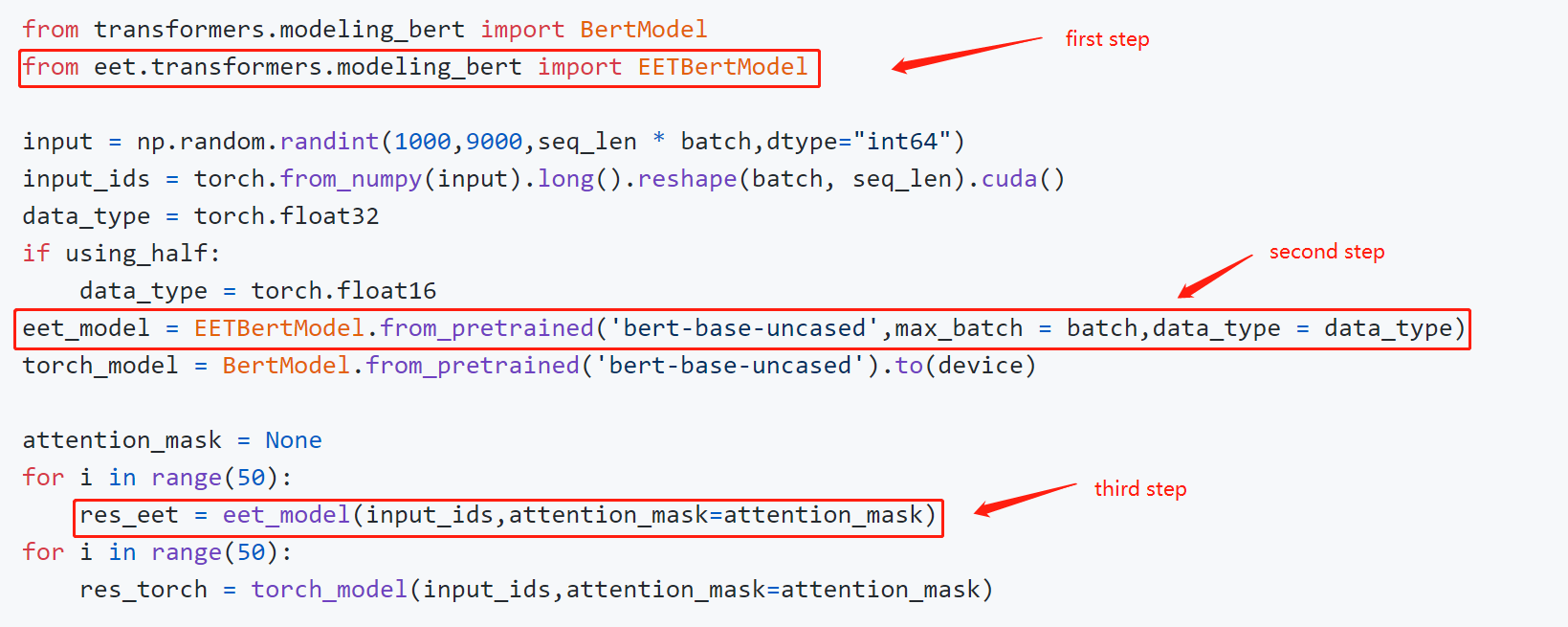

EET provides user-friendly Python APIs (python/eet), which can be integrated into Fairseq and Transformers with just a few lines of code.

1. How to run inference

2. How to customize a model

You can refer to the operators APIs listed below to build your own model structure, just by modifying the files under python/eet.

3. How to integrate EET into Fairseq

Replace the original transformer.py in Fairseq with our transformer.py and reinstall Fairseq; that is all. The transformer.py in EET corresponds to the fusion of transformer.py and transformer_layer.py in Fairseq.

4. How to integrate EET into Transformers

Replace the original modeling_bert.py and modeling_gpt2.py in Transformers with our modeling_bert.py and modeling_gpt2.py and reinstall Transformers; that is all. The modeling_bert.py in EET corresponds to modeling_bert.py in Transformers, and the modeling_gpt2.py in EET corresponds to modeling_gpt2.py in Transformers.
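
The file replacement described in items 3 and 4 can be done by hand. If you prefer to script it, the sketch below copies a replacement file over a target while keeping a backup of the original. The function name `replace_with_backup` and the `.orig` suffix are hypothetical, and the paths in the usage note are placeholders you would need to adapt to your own Fairseq or Transformers source checkout.

```python
import shutil
from pathlib import Path


def replace_with_backup(target, replacement, suffix=".orig"):
    """Copy `replacement` over `target`, keeping the original as `target + suffix`.

    The backup is written only once, so running the function again does not
    overwrite the pristine original with an already-replaced file.
    """
    target, replacement = Path(target), Path(replacement)
    if not target.is_file():
        raise FileNotFoundError(f"target not found: {target}")
    if not replacement.is_file():
        raise FileNotFoundError(f"replacement not found: {replacement}")
    backup = target.with_name(target.name + suffix)
    if not backup.exists():
        shutil.copy2(target, backup)  # preserve the original file
    shutil.copy2(replacement, target)  # install the EET version
    return backup
```

For example, `replace_with_backup("/path/to/transformers/src/transformers/modeling_bert.py", "/path/to/eet/modeling_bert.py")` would swap in the EET file (both paths are placeholders); the library still has to be reinstalled afterwards, as described above.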

5. How to build a server

We use service-streamer to build the model server, which creates the service directly from your Python project. Make sure dynamic batching is enabled if you want higher throughput.
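
To show why dynamic batching raises throughput, here is a minimal standard-library sketch of the idea: requests are gathered into a batch until either the batch is full or a latency budget runs out, and the model then runs once per batch instead of once per request. This is not service-streamer's code; the function name `collect_batch` and its parameters are hypothetical.

```python
import queue
import time


def collect_batch(requests, max_batch_size, max_latency):
    """Gather up to `max_batch_size` items, waiting at most `max_latency` seconds.

    Blocks until at least one request arrives, then keeps pulling requests
    until the batch is full or the latency budget is spent.
    """
    batch = [requests.get()]  # wait for the first request
    deadline = time.monotonic() + max_latency
    while len(batch) < max_batch_size:
        remaining = deadline - time.monotonic()
        if remaining <= 0:
            break
        try:
            batch.append(requests.get(timeout=remaining))
        except queue.Empty:
            break  # latency budget spent with no new request
    return batch


if __name__ == "__main__":
    pending = queue.Queue()
    for request_id in range(5):
        pending.put(request_id)
    print(collect_batch(pending, max_batch_size=3, max_latency=0.2))
    print(collect_batch(pending, max_batch_size=3, max_latency=0.2))
```

A larger `max_batch_size` improves GPU utilization, while `max_latency` bounds how long an early request waits for the batch to fill.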

- Model APIs: We provide ready-made APIs for GPT2 and Bert models.

EET and fairseq class comparison table

| EET | fairseq | Remarks |
|---|---|---|
| EETTransformerDecoder | TransformerDecoder | |
| EETTransformerDecoderLayer | TransformerDecoderLayer | |
| EETTransformerAttention | MultiheadAttention | |
| EETTransformerFeedforward | TransformerDecoderLayer | fusion of multiple small operators |
| EETTransformerEmbedding | Embedding + PositionalEmbedding | |
| EETTransformerLayerNorm | nn.LayerNorm | |

EET and transformers class comparison table

| EET | transformers | Remarks |
|---|---|---|
| EETBertModel | BertModel | |
| EETBertEncoder | BertEncoder | |
| EETBertEncoderLayer | BertLayer | |
| EETBertAttention | BertAttention | |
| EETBertFeedforward | BertIntermediate + BertOutput | |
| EETBertEmbedding | BertEmbeddings | |
| EETGPT2Model | GPT2Model | |
| EETGPT2Decoder | GPT2Model | transformers has no GPT2Decoder |
| EETGPT2DecoderLayer | Block | |
| EETGPT2Attention | Attention | |
| EETGPT2Feedforward | MLP | |
| EETGPT2Embedding | nn.Embedding | |
| EETLayerNorm | nn.LayerNorm | |

- Operators APIs: We provide all the operators required for Transformer models. You can combine different kernels to build different model structures.

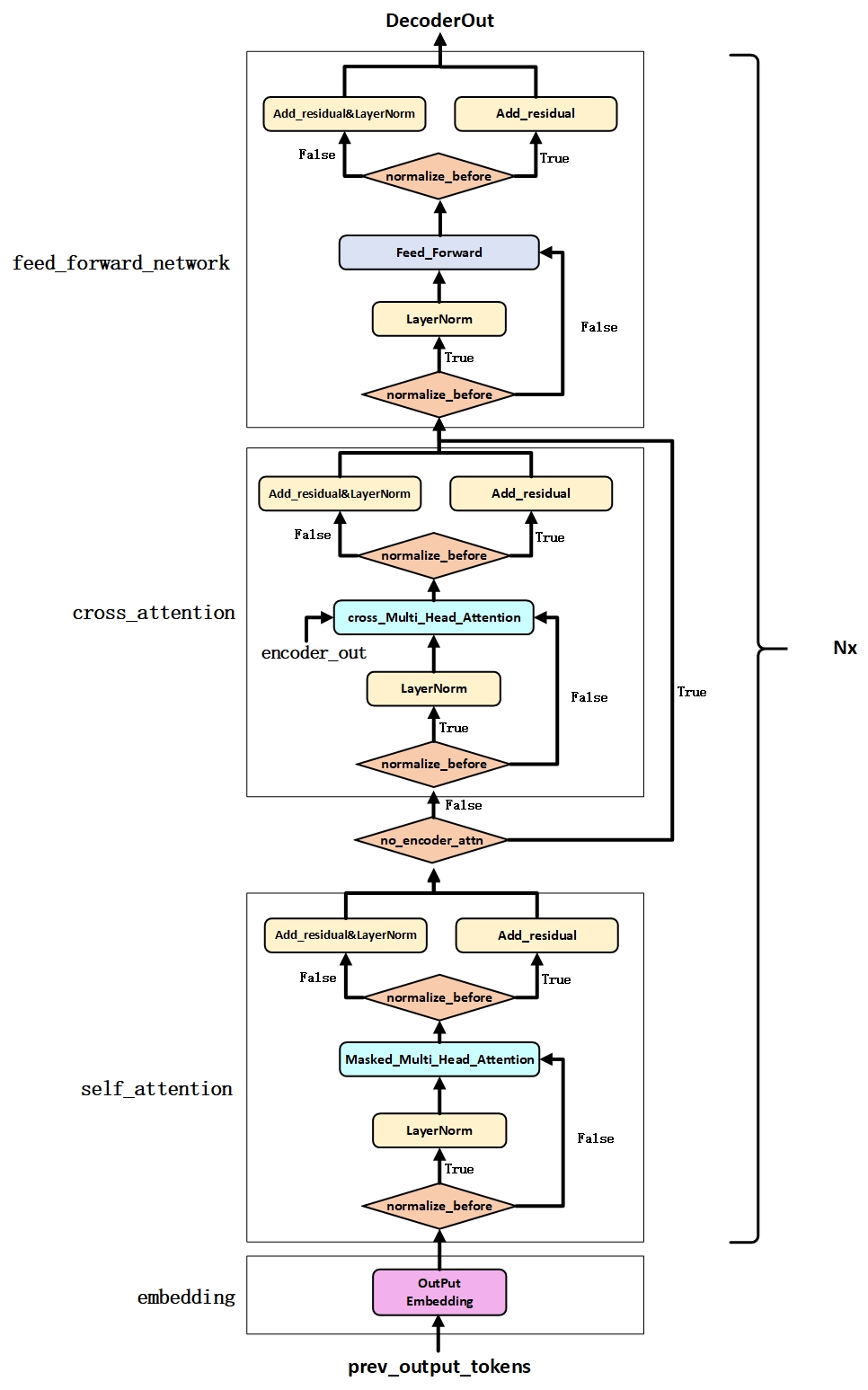

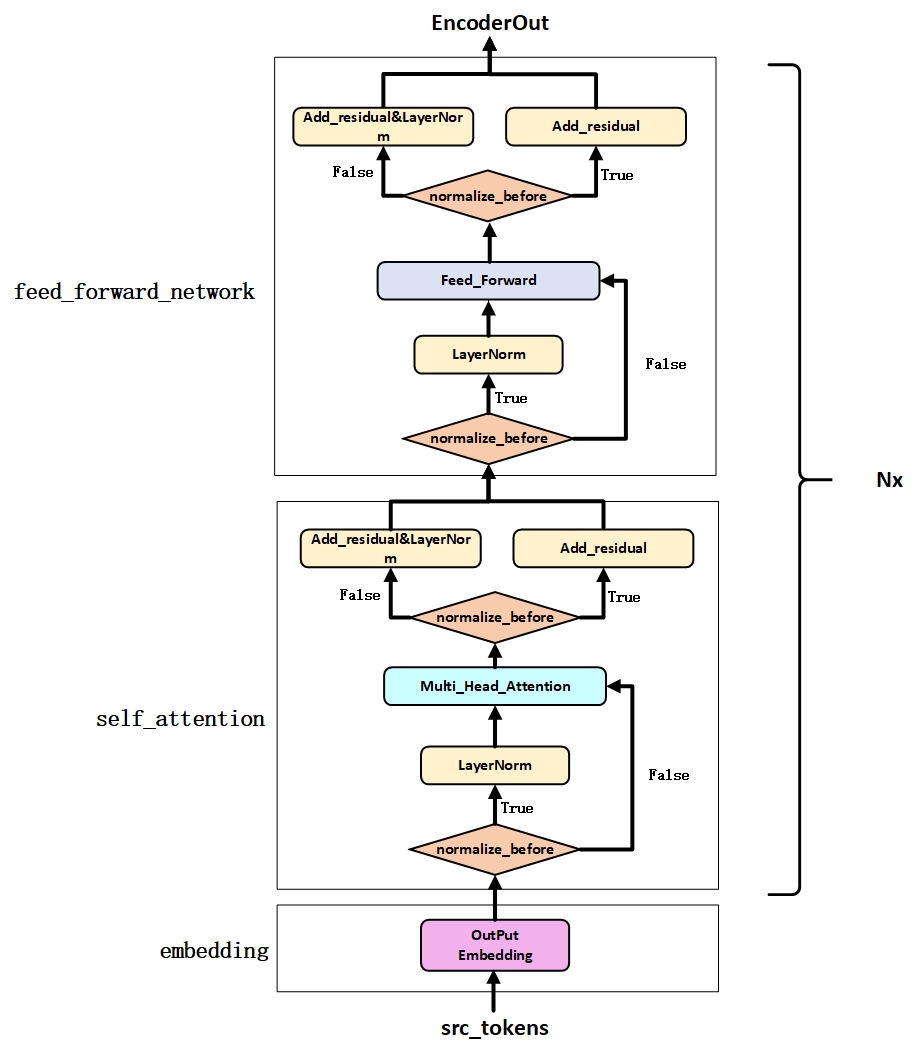

| operators APIs | Remarks |
|---|---|
| masked_multi_head_attention | GPT2 self_attention |
| cross_multi_head_attention | cross_attention |
| multi_head_attention | Bert self_attention |
| ffn | FeedForwardNetwork |
| embedding | transformers & fairseq |
| layernorm | nn.LayerNorm |
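
The table lists masked_multi_head_attention for GPT2 self-attention and multi_head_attention for Bert self-attention. The conceptual difference is the attention mask: GPT2 uses a causal mask so that a position only sees earlier positions, while Bert attends in both directions. The plain-Python sketch below illustrates the two masks and how pre-padding columns could be blocked; the function names are hypothetical and say nothing about how the EET kernels are implemented internally.

```python
def causal_mask(seq_len):
    """mask[i][j] is True when position i may attend to position j (j <= i)."""
    return [[j <= i for j in range(seq_len)] for i in range(seq_len)]


def full_mask(seq_len):
    """Bidirectional attention: every position may attend to every position."""
    return [[True] * seq_len for _ in range(seq_len)]


def with_pre_padding(mask, n_pad):
    """Block attention to the first `n_pad` (left-padding) columns.

    Rows that belong to padding positions may end up with no allowed column;
    their outputs are meaningless and are normally ignored.
    """
    return [[allowed and j >= n_pad for j, allowed in enumerate(row)] for row in mask]


if __name__ == "__main__":
    for row in with_pre_padding(causal_mask(4), n_pad=1):
        print(["x" if allowed else "." for allowed in row])
```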

We tested the performance of EET on two GPU hardware platforms, comparing it against the PyTorch, NVIDIA Faster Transformer, and LightSeq implementations.

- RTX 2080ti (batch_size=4, hidden_units=1024, sequence_length=1024, precision=fp16)

- RTX 2080ti (batch_size=4, context_ratio=50%, sequence_length=1024, precision=fp16)

- A100 (batch_size=4, hidden_units=1024, sequence_length=1024, precision=fp16)

- A100 (batch_size=4, context_ratio=50%, sequence_length=1024, precision=fp16)
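
To make the benchmark settings concrete, the sketch below splits a total sequence length into a context part and a generated part. It assumes that context_ratio is the fraction of sequence_length supplied as context (processed in parallel) and that the remainder is generated token by token; this reading of the parameter, and the function name, are assumptions rather than something stated in the benchmark description.

```python
def split_sequence(sequence_length, context_ratio):
    """Split a total length into (context_len, generated_len).

    Assumes `context_ratio` is the fraction of the sequence given as context.
    """
    if not 0.0 <= context_ratio <= 1.0:
        raise ValueError("context_ratio must be between 0 and 1")
    context_len = int(round(sequence_length * context_ratio))
    return context_len, sequence_length - context_len


if __name__ == "__main__":
    # Settings from the list above: sequence_length=1024, context_ratio=50%.
    print(split_sequence(1024, 0.5))
```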

Medium-size model (hidden_units=1024, max_seq_len=768), compared with LightSeq:

Small-size model (hidden_units=768, max_seq_len=128), compared with LightSeq:

- RTX 2080ti

- A100

- int8

- sparse

You can report problems via GitHub issues.