This is the master resource list for NLP from scratch. It is a living document that is continually updated, so it should always be considered a work in progress. If you find any dead links or other issues, feel free to submit an issue.

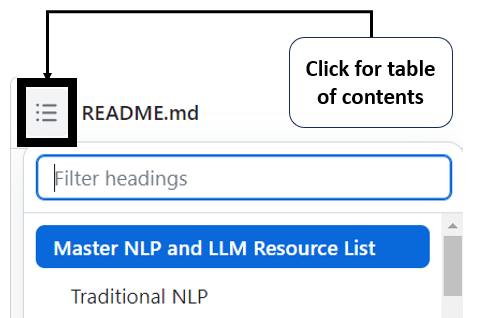

This document is quite large, so you may wish to use the Table of Contents automatically generated by Github to find what you are looking for.

Thanks, and enjoy!

- nlp-datasets: Alphabetical list of free/public domain datasets with text data for use in Natural Language Processing (NLP)

- awesome-public-datasets - Natural Language: Natural language section of the awesome public datasets github page

- SMS Spam Dataset: The “Hello World” of NLP datasets, ~5.5K SMS messages labeled spam/not spam for binary classification. Hosted on the UC Irvine Machine Learning Repository.

- IMDB dataset: The other “Hello World” of datasets for NLP, 50K “highly polar” movie reviews scraped from IMDB and compiled by Andrew Maas of Stanford.

- Twitter Airline Sentiment: Tweets about major US airlines from February 2015, with associated sentiment labels. Hosted on Kaggle (~3.5MB).

- CivilComments: Dataset from the Civil Comments platform, which shut down in 2017. 2M public comments with labels for toxicity, obscenity, threat, insult, etc.

- Cornell Movie Dialog: ~220K conversations from 10K pairs of characters across 617 popular movies, compiled by Cristian Danescu-Niculescu-Mizil of Cornell. Tabular compiled format available on Hugging Face.

- CNN Daily Mail: “Hello World” dataset for summarization, consisting of articles from CNN and Daily Mail and accompanying summaries. Also available through Tensorflow and via Hugging Face.

- Entity Recognition Datasets: Very large list of named entity recognition (NER) datasets (on Github).

- WikiNER: 7,200 manually-labelled Wikipedia articles across nine languages: English, German, French, Polish, Italian, Spanish, Dutch, Portuguese and Russian.

- OntoNotes: Large corpus comprising various genres of text in three languages with structural information and shallow semantic information.

- Flores-101 - Multilingual, multi-task dataset from Meta for machine translation research, focusing on “low resource” languages. Associated Github repo.

- CulturaX: Open dataset of 167 languages with over 6T words, the largest multilingual dataset ever released

- Amazon Review Datasets: Massive datasets of reviews from Amazon.com, compiled by Julian McAuley of University of California San Diego

- Yelp Open Dataset: 7M reviews, 210K businesses, and 200K images released by Yelp. Note the educational license.

- Google Books N-grams: Very large dataset (2.2TB) of all the n-grams from Google Books. Also available hosted in an S3 bucket by AWS.

- Sentiment Analysis @ Stanford NLP: Includes a link to the dataset of movie reviews used for Stanford Sentiment Treebank 2 (SST2). Also available on Hugging Face.

- CoNLL-2003: Language-independent entity recognition dataset from the Conference on Computational Natural Language Learning (CoNLL-2003) shared task. Foundational dataset for named entity recognition (NER).

- LMSYS-Chat-1M: A Large-Scale Real-World LLM Conversation Dataset: Large-scale dataset of 1M conversations with LLMs, collected from the Chatbot Arena website.

- TabLib: Largest publicly available dataset of tabular tokens (627M tables, 867B tokens), to encourage the community to build Large Data Models that better understand tabular data

- LAION 5B: Massive dataset of images and captions from Large-scale Artificial Intelligence Open Network (LAION), used to train Stable Diffusion.

- Databricks Dolly 15K: Instruction dataset compiled internally by Databricks, used to train the Dolly models based on the Pythia LLMs.

- Conceptual Captions: Large image & caption pair dataset from Google research.

- Instruction Tuning Volume 1: List of popular instruction-tuning datasets from Sebastian Ruder

- Objaverse: Massive dataset of annotated 3D objects (with associated text labels) from Allen Institute. Comes in two sizes: 1.0 (800K objects) and XL (~10M objects).

- Gretel Synthetic Text to SQL Dataset: Open dataset of synthetically generated natural language and SQL query pairs for LLM training, from Gretel AI.

- Fineweb: 15T token dataset of cleaned and deduplicated data from CommonCrawl by Hugging Face.

- API tutorials beyond OpenAPI: Detailed tutorial on the particulars of APIs and how they function.

- requests python library: The de facto standard third-party library for making HTTP requests in python, simple and easy.

- BeautifulSoup: Python library for parsing data out of HTML, XML, and other markup documents. Essential for web scraping.

- Selenium Python bindings: Working with Selenium in Python for more advanced web scraping.

- Web scraping with ChatGPT prompts: Medium post on working with ChatGPT to build web scraping code with requests, BeautifulSoup, and Selenium

- Natural Language Toolkit (NLTK): Core and essential NLP python library put together for teaching purposes by University of Pennsylvania, now fundamental to NLP work.

- spaCy: Fundamental python NLP library for “industrial-strength natural language processing”, focused on building production systems.

- Gensim: Open-source python library with a focus on topic modeling, semantic similarity, and embeddings. Also contains implementations of word2vec and doc2vec.

- fastText: Open-source, free, lightweight library that allows users to learn text representations (embeddings) and text classifiers. Includes pre-trained word vectors from Wikipedia and Common Crawl. From Meta’s FAIR Group.

- KerasNLP: Natural language processing with deep learning and LLMs in Keras using Tensorflow, Pytorch, or JAX. Includes models such as BERT, GPT, and OPT.

- Tensorflow Text: Lower level than KerasNLP, text manipulation built into Tensorflow.

- Stanford CoreNLP: Java-based NLP library from Stanford, still important and in use

- TextBlob: Easy to use NLP library in Python, including simple sentiment scoring and part-of-speech (POS) tagging.

- Scikit-learn (sklearn): The essential library for doing machine learning in python, listed here specifically for its tools for working with text data.

- SparkNLP: Essential Big Data library for NLP work from John Snow Labs. Take a look at their extensive model repo. Github repo with lots of resources here. Medium post here on using the T5 model for classification with SparkNLP.

- A Recipe for Training Neural Networks: Tips on training neural networks and debugging from Andrej Karpathy

- Deep Learning for NLP in Pytorch: Detailed tutorial on applying NLP techniques with Pytorch, including LSTMs and embeddings.

- Exploring LSTMs: A deep technical look into LSTMs and visualizations of what the networks “see”.

- VADER: Valence Aware Dictionary and sEntiment Reasoner: A lexicon and rules-based sentiment scoring model, implemented in python. Used by other major NLP libraries (NLTK, spaCy, TextBlob, etc.)

- PyABSA - Open Framework for Aspect-based Sentiment Analysis: Python library for aspect-based sentiment analysis

- Explainable AI: Integrated Gradients: Detailed blog post on using the Integrated Gradients method from the Alibi python library for explainable ML on text.

- Pytesseract: Python wrapper for Google’s Tesseract OCR engine.

- Donut - Document understanding transformer: OCR-free end-to-end Transformer model for various visual document understanding tasks, such as visual document classification or information extraction. By Clova.ai research group.

- Facebook Nougat: Neural Optical Understanding for Academic Documents (NOUGAT) is a Meta research project for specifically doing OCR and converting academic documents into a markup language. Available on Hugging Face spaces here and here.

- Amazon Textract: AWS Service for automatically extracting information from documents such as PDFs.

- OCR with Google Document AI: Codelab demonstrating OCR on PDFs with GCP Document AI

- RAKE: Rapid Automatic Keyword Extraction, a domain independent keyword extraction algorithm which tries to determine key phrases in a body of text by analyzing the frequency of word appearance and its co-occurrence with other words in the text.

- YAKE: Yet Another Keyword Extractor is a light-weight unsupervised automatic keyword extraction method which rests on text statistical features extracted from single documents to select the most important keywords of a text.

- Pytextrank: Python implementation of TextRank and associated algorithms as a spaCy pipeline extension, for information extraction and extractive summarization.

- PKE (Python Keyphrase Extraction): Open source python-based keyphrase extraction toolkit, implementing a variety of algorithms. Uses spaCy.

- KeyBERT: Keyword extraction technique that leverages BERT embeddings to create keywords and keyphrases that are most similar to a document.

- UniversalNER: Targeted distillation model for named entity recognition from Microsoft Research and USC, based on data generated by ChatGPT.

- SpanMarker: Framework for NER models based on transformers such as BERT, RoBERTa and ELECTRA using Hugging Face Transformers (HF page)

- Treebank: Definition at Wikipedia

- Universal Dependencies: Universal Dependencies (UD) is a framework for consistent annotation of grammar (parts of speech, morphological features, and syntactic dependencies) across different human languages.

- UDPipe: UDPipe is a trainable pipeline for tokenization, tagging, lemmatization and dependency parsing of CoNLL-U files.

- Topic Modeling: Wikipedia page

- Latent Dirichlet Allocation (LDA): Wikipedia page

- A Beginner’s Guide to Latent Dirichlet Allocation: TDS article with some easier to understand explanations of how LDA works.

- Latent Semantic Analysis (LSA): Wikipedia page

- Termite: Python-based visualization framework for topic modeling

- Practical NLP Project: A nice overview of Topic Modeling as part of a project at Universität Osnabrück

- Topic Modeling with Llama2: Post from Maarten Grootendorst on using Meta’s LLaMA 2 model and Hugging Face transformers for topic modeling.

- BERTopic: Topic modeling in Python using Hugging Face transformers and c-TF-IDF to create dense clusters, allowing for easily interpretable topics whilst keeping important words in the topic descriptions.

- MTEB: Massive Text Embedding Benchmark: General benchmark for LLM embedding performance.

- Nomic Atlas: Project from Nomic AI to create high-performance browser-based visualizations of the embeddings of large datasets

- fastText language identification models: Language identification models for use with fastText

- SeamlessM4T: Multimodal translation and transcription model based on the transformer architecture from Meta research.

- Helsinki NLP Translation Models: Well-known and used translation models in Hugging Face from the University of Helsinki Language Technology Research Group, based on the OPUS neural machine translation framework.

- ACL 2023 Multilingual Models Tutorial: Microsoft’s presentations from ACL 2023 - a lot of dense content here on low resource languages, benchmarks, prompting, and bias.

- ROUGE: Wikipedia page for ROUGE score for summarization and translation tasks.

- BLEU: Wikipedia page for the BLEU score for machine translation tasks.

- sacreBLEU: Python library for hassle-free and reproducible BLEU scores

- XTREME: Comprehensive benchmark for cross-lingual transfer learning on a diverse set of languages and tasks from researchers at Google and Carnegie Mellon

- Belebele: Multiple-choice machine reading comprehension (MRC) dataset spanning 122 language variants from Meta, based upon the Flores dataset

- OpenNMT: Open neural machine translation models in Pytorch and Tensorflow. Documentation for python here.

- FinGPT-3: GPT model trained in Finnish, from a research group at the University of Turku, Finland.

- Jais 13-B: Bilingual Arabic/English model based on GPT-3 architecture, from Inception AI / Core42 group in UAE.

- Evo-LLM-JP: Japanese LLM from AI startup Sakana.ai created using evolutionary model merging. There is a chat model, a vision model, and a stable diffusion model all of which can be prompted and converse in Japanese. On Hugging Face here.

- Adversarial NLI: Benchmark for NLI from Meta research and associated dataset.

- NLP Interview Checklist: Checklist of knowledge for interviewing for NLP roles.

- A Beginner’s Guide to Large Language Models: Free e-book from NVIDIA covering some of the absolute fundamentals in plain language.

- How AI chatbots like ChatGPT or Bard work – visual explainer: A good short visual explainer from The Guardian on how embeddings work and make LLMs function.

- Generative AI Primer: A primer for the layperson on generative AI and LLMs, from Educause Review - lots of links to source materials here and well-written.

- Understanding Causal LLMs, Masked LLM’s, and Seq2Seq: A Guide to Language Model Training Approaches: Medium post breaking down the different types of language modeling training approaches, causal language modeling vs masked language modeling (CLM vs MLM).

- [1 hr Talk] Intro to Large Language Models: Great short talk by Andrej Karpathy himself, covering the fundamentals of what LLMs are and how they are trained, demonstrations of GPT-4’s capabilities, and adversarial attacks & jailbreaks.

- Explainer: What is a foundation model?: A good and lengthy explainer and discussion of foundation models, including visuals and tables. From Ada Lovelace Institute.

- Center for Research on Foundation Models (CRFM): Interdisciplinary initiative born out of the Stanford Institute for Human-Centered Artificial Intelligence (HAI) that aims to make fundamental advances in the study, development, and deployment of foundation models. See the report, transparency index, and their master list of models (increment using the URL).

- Getting Started with Llama: Official getting started page for working with LLaMA 2 model from Meta.

- How to generate text: using different decoding methods for language generation with Transformers: Overview of different text generation decoding methods from HuggingFace including beam search vs greedy, top-p and top-k sampling.

- Guiding Text Generation with Constrained Beam Search in 🤗 Transformers: Blog post from HF on using constrained Beam Search in transformers as opposed to regular beam search.

- GPT in 60 lines of Numpy: Great blog post on building GPT from scratch and the fundamental workings of the decoder side of the transformer.

- Explanations of Temperature and Top-p and Top-k sampling: From the Cohere documentation.

- Creatively Deterministic: What are Temperature and Top P in Generative AI?: LinkedIn post on temperature, top-p, and top-k

- What is Temperature in NLP?: A short explainer on temperature with a nice accompanying interactive visual, showing its effect on output probabilities.

- ChatGPT: Obviously. From OpenAI. Free, but requires an account.

- Perplexity Labs: Free, web-based LLM chat client, no account required. Includes popular models such as versions of LLaMA and Mistral as well as Perplexity’s own pplx model.

- HuggingChat: Chat client from HuggingFace, includes LLaMA and Mistral models as well as OpenChat. Free for short conversations (in guest mode), account required for longer use.

- DeepInfra Chat: Includes LLaMA and Mistral, even Mixtral 8x7B! Free to use.

- Pi: Conversational LLM from Inflection. No account required.

- Poe: AI assistant from Quora, allows interacting with OpenAI, Anthropic, LLaMA and Google models. Account required.

- Copilot: Or is it Bing Chat? The lines are blurry. Backed by GPT, allows using GPT-4 on mobile (iOS, Android) for free! Requires a Microsoft account.

- PEGASUS - A State-of-the-Art Model for Abstractive Text Summarization: Foundational LLM for abstractive summarization from Google research in 2020. Available on Hugging Face here.

- Fine-tuning Guide from OpenAI: Official docs from OpenAI on fine-tuning hosted GPT-3.5-turbo.

- Getting Started with Deep Learning with PyTorch and Hugging Face: Lots of example notebooks for fine-tuning models (T5, Falcon, LLaMA) from Phil Schmid of Hugging Face

- Fine-tune a non-English GPT-2 Model with Huggingface: “Hello World” example of fine-tuning a GPT2 model to write German recipes.

- HuggingFace Community Resources: Community resources from Hugging Face. A ton of free Colab notebooks here on fine-tuning various foundation models

- Personal Copilot: Train Your Own Coding Assistant: Blog post from Hugging Face on fine-tuning a code generating LLM, using both traditional fine-tuning and PEFT with StarCoder.

- Optimizing Pre-Trained Models: A Guide To Parameter-Efficient Fine-Tuning (PEFT): A long guide on terminology and the particulars of different types of PEFT.

- GPT 3.5 vs Llama 2 fine-tuning: A Comprehensive Comparison: Short blog post comparing fine-tuning GPT vs. LLaMA 2 on a SQL code task, taking price into consideration.

- Regression with Text Input Using BERT and Transformers: Fairly in-depth Medium post (including a lot of code) on using BERT for regression.

- LongLoRA: Efficient Fine-tuning of Long-Context Large Language Models: Method for fine-tuning models (specifically LLaMA and Alpaca) to have longer context windows. Lots of resources around this on their official Github page.

- PEFT: Library of state-of-the-art Parameter-Efficient Fine-Tuning (PEFT) methods from Hugging Face. Official Github repo.

- LoRA: Low-Rank Adaptation of Large Language Models: Official Github repo for LoRA from Microsoft.

- Low-Rank Adapters (LoRA): Conceptual guide from Hugging Face.

- QLoRA: Efficient Finetuning of Quantized LLMs, official Github repo. The method that produced Guanaco from LLaMA.

- Practical Tips for Finetuning LLMs Using LoRA (Low-Rank Adaptation): List of tips and learnings based on using LoRA/QLoRA from Sebastian Raschka

- Instruction Tuning Volume 1: Summary of instruction-tuning and links to some resources from Sebastian Ruder’s NLP newsletter.

- Finetuning LLMs with LoRA and QLoRA: Insights from Hundreds of Experiments: Results of many experiments with model fine tuning using LoRA and QLoRA from Lightning AI, on memory and compute usage, training time, etc.

- Overview of PEFT: State-of-the-art Parameter-Efficient Fine-Tuning: Article from KDNuggets with example code using LLaMA 7B

- Llama Recipes: Recipes from Meta themselves for fine-tuning LLaMA

- Axolotl: Software framework for streamlining fine-tuning of LLMs from OpenAccess AI Collective.

- Quantization: Conceptual guide from Hugging Face

- What are Quantized LLMs?: Explainer post from TensorOps, including Hugging Face code and links to other resources.

- 7 Ways To Speed Up Inference of Your Hosted LLMs: Medium post with techniques for speeding up Inference of LLMs, including an explainer on quantization.

- HuggingFace meets bitsandbytes for lighter models on GPU for inference (Colab): Colab notebook demonstrating usage of bitsandbytes for model quantization with BLOOM 3B.

- Label Studio: Open source python library / framework for data labelling

- OpenAI Cookbook: Recipes and tutorial posts for working and building with OpenAI, all in one place. Example code in the Github repo.

- Cohere Guides: Example notebooks for working with Cohere for various LLM usage cases.

- GPT4All: Locally-hosted LLM from Nomic for offline development.

- LM Studio: Software framework for local LLM development and usage.

- Jan: Offline GUI for working with LLMs. Mobile app under development.

- Open WebUI: Self-hosted WebUI for LLMs to operate entirely offline - formerly Ollama Web UI.

- TransformerLab: Open source project providing a GUI for working with LLMs locally.

- SuperWhisper: Local usage of Whisper model on Mac OS, allows you to speak commands to your machine and have them transcribed (all locally).

- Cursor: Locally installable code editor with autocomplete, chat, etc. backed by OpenAI GPT3.5/4.

- llama.cpp: Inference from Meta’s LLaMA model in pure C/C++. Python integration through llama-cpp-python.

- Ollama: Host LLMs locally, includes models like LLaMA, Mistral, Zephyr, Falcon, etc.

- Exploring Ollama for On-Device AI: Comprehensive tutorial on Ollama from PyImageSearch

- llamafile: Framework for packaging LLMs as single executable files for local execution and development work. Examples of one-liners and usage from its creator are in Bash One-Liners for LLMs.

- PowerInfer: CPU/GPU LLM inference engine leveraging activation locality for fast on-device generation and serving of results from LLMs locally.

- MLC LLM: Native deployment of LLMs with native APIs with compiler acceleration. Includes WebLLM for serving LLMs through the browser and examples of locally developed Android and iPhone LLM apps.

- DSPy: Framework for algorithmically optimizing LLM prompts and weights from Stanford NLP.

- AnythingLLM: Docker-based framework for offline LLM usage with RAG.

- Stable Diffusion: The open model from Stability AI that brought AI-generated images to the forefront. Official Github repo here, and (one of many) Hugging Face Space here (for SD 2.1).

- Deepfloyd Lab: Multimodal research AI lab that is a part of Stability AI, has released the IF Pixel Diffusion model, which does much better on complex image generations such as those involving text.

- Finetune Stable Diffusion Models with DDPO via TRL: Blog post from Hugging Face on fine-tuning SD with reinforcement learning and Denoising Diffusion Policy Optimization (DDPO).

- Fast Stable Diffusion XL on TPU v5e: Hugging Face space with hosted SDXL on TPU for free and fast generation of high quality (1024x1024) images.

- SDXL in 4 steps with Latent Consistency LoRAs: Distilling Stable Diffusion XL with Latent Consistency LoRA for highly compute-optimized synthetic image generation.

- DeciDiffusion: Optimized SD 1.5 model from Deci.ai

- Segmind-Distill-SD: Distilled Stable Diffusion model from Segmind, claims 50% smaller and 60% faster. Github repo here & Hugging Face model here.

- Emu: Enhancing Image Generation Models Using Photogenic Needles in a Haystack: Fine-tuning of Stable Diffusion from Meta research focusing on high-quality images.

- DreamBooth: Fine Tuning Text-to-Image Diffusion Models for Subject-Driven Generation: Method for fine-tuning diffusion models to generate custom images of a subject based on samples.

- AutoTrain Dreambooth (Colab): Google Colab notebook for Autotraining Dreambooth models using Hugging Face.

- Kosmos-G: Generating Images in Context with Multimodal Large Language Models: Model from Microsoft Research for generating variations of images given text prompts with minimal to no training.

- Multimodal LLMs by Chip Huyen: A good post on multimodal LLMs, including foundational / historical models leading up to SOTA like CLIP and Flamingo.

- LLaVA: Large Language and Vision Assistant: A kind of open-source GPT4-V, chat / instruction agent able to work with image data, from researchers at Microsoft, U of Wisconsin, and Columbia. Demo site is here.

- SPHINX: Multimodal, multi-task LLM released by researchers at the University of Shanghai. Demo is here.

- Ferret: Open model from Apple for grounding and object identification.

- XGen-MM: Continuation (and rebranding) of Salesforce’s multimodal BLIP model for image interrogation.

- wav2vec 2.0 And w2v-BERT: Explanations of the technical details behind these multimodal models from Meta’s FAIR group and Google Brain, by Mohamed Anwar

- Musenet: Older research from OpenAI, Musenet applied the GPT architecture to MIDI files to compose music.

- AudioCraft: Multiple models from Meta research, for music (MusicGen), sound effect (AudioGen), and a codec and diffusion model for recovering compressed audio (EnCodec and Multi-band Diffusion). Demo also available in a Hugging Face space, and a sample Colab notebook here.

- Audiobox: Text-to-audio and speech prompt to audio from Meta. Interactive demo site here.

- StableAudio: Diffusion-based music generation model from Stability AI. Blog post with technical details.

- SALMONN: Speech Audio Language Music Open Neural Network from researchers at Tsinghua University and ByteDance. Allows for things like inquiring about the content of audio files, multilingual speech recognition & translation and audio-speech co-reasoning.

- Real-time translation and lip-synching: https://blog.invgate.com/video-translator

- HeyGen: Startup creating AI generated avatars and multimedia content, _e.g._ for instructional videos. Video demo of lip-synching (dubbing) and translation.

- Whisper: OpenAI’s open source multilingual speech-to-text transcription model. Official Github repo with lots of details.

- whisper_real_time: Example of real-time audio transcription using Whisper

- whisper.cpp: High-performance plain C/C++ implementation of inference using OpenAI's Whisper without dependencies

- Deepgram: Audio AI company with enterprise offerings for speech-to-text, including their own Nova-2 model as well as Whisper or custom models.

- AdaSpeech 4: Adaptive Text to Speech in Zero-Shot Scenarios: Model for realistic audio generation (text-to-speech / TTS) from researchers at Microsoft.

- Project Gutenberg Audio Collection Project: Thousands of free audiobooks synthesized using AdaSpeech4, brought to you by Project Gutenberg, MIT, and Microsoft.

- ElevenLabs: Well-known American software company with AI voice cloning and translation products.

- Projects: Create High-Quality Audiobooks in Minutes: Tool for creating high-quality audiobooks via TTS from ElevenLabs.

- Brain2Music: Research from Google for using fMRI scans to reconstruct audio perceived by the listener.

- WavJourney: Compositional Audio Creation with Large Language Models: An approach for generating audio combining generative text for scriptwriting plus audio generation models.

- XTTS: Voice cloning model specifically designed with game creators in mind from coqui.ai. Available in a Hugging Face space here.

- The Future of Music - How Generative AI Is Transforming the Music Industry: Blog post from Andreessen Horowitz covering a lot of recent developments in the intersection of the music industry and GenAI tools.

- StyleTTS2: Diffusion and adversarial model for realistic speech synthesis (TTS). Audio samples and comparisons with previous models are here.

- Qwen-Audio: Multimodal audio understanding LLM from Alibaba Group

- Audio Diffusion Pytorch: A fully featured audio diffusion library in PyTorch, from researchers at ElevenLabs.

- Generative Image Dynamics: Model from researchers at Google for creating looping images or interactive images from still ones.

- IDEFICS: Open multimodal text and image model from Hugging Face based on Flamingo, similar to GPT4-V. Updated version IDEFICS 2 released 04/2024 with demo here.

- NeRF: Neural Radiance Fields synthesize novel views of a scene from a sparse set of input images.

- ZipNeRF: Building on NeRF with more advanced techniques and impressive results, generating drone-style “fly-by” videos from still images of settings.

- Pegasus-1: Multimodal model from TwelveLabs for describing videos and video-to-text generation.

- Gen-2 by RunwayML: Video-generating multimodal model from Runway ML that takes text or images as input.

- Replay: Video (animated picture) generating model from Genmo AI

- Hotshot XL: Text to animated GIF generator based on Stable Diffusion XL. Github and Hugging Face model page.

- ModelScope: Open model for text-to-video generation from Alibaba research

- Stable Video Diffusion: Generative video diffusion model from Stability AI.

- VideoPoet: Synthetic video generation from Google Research, taking a variety of inputs (text, image, video).

- Pika Labs: AI startup for video creation with $55 million in backing.

- Assistive Video: Video generation from text from AI startup Assistive

- Haiper: Text-to-video for short clips (2-4s) from Google Deepmind alumni. Free to use with an account.

- MagicVideo-V2: Multi-Stage High-Aesthetic Video Generation. Text-to-video model from ByteDance research.

- Stable Zero123: 3D image generation model from Stability AI building on the Zero123-XL model. Weights available for non-commercial use on HF here.

- DreamBooth3D: Approach for generating high-quality custom 3D models from source images.

- MVDream: 3D model generation from Diffusion from researchers at ByteDance.

- TADA! Text to Animatable Digital Avatars: Research on models for synthetic generation of 3D avatars from text prompts, from researchers in China and Germany

- TripoSR: Image to 3D generative model jointly developed by Tripo AI & Stability AI

- Microdreamer: Github repo for implementation of Zero-shot 3D Generation in ~20 Seconds from researchers at Renmin University of China

- Tome: Startup for AI-generated slides (Powerpoint). Free to sign up.

- Decktopus: “World’s #1 AI-Powered Presentation Generator”. Paid signup

- Beautiful.ai: Another AI-based slide deck generator (paid)

- Github Copilot: Github’s AI coding assistant, based on OpenAI’s Codex model.

- GitHub Copilot Fundamentals - Understand the AI pair programmer: Introductory online training / short course on Copilot from Microsoft.

- Gemini Code Assist: Code assistant from Google based on Gemini. Available in Google Cloud or in local IDEs via a plugin (requires subscription).

- CodeCompose (TechCrunch article): Meta’s internal coding LLM / answer to Copilot

- CodeInterpreter: Experimental ChatGPT plugin that provides it with access to executing python code.

- StableCode: Stability AI’s generative LLM coding model. Hugging Face collection here. Github here.

- Starcoder: Coding LLM from Hugging Face. Github is here. Update: Starcoder 2 has been released as of Feb 2024!

- CodeQwen-1.5: Code-specific version of Alibaba’s Qwen model.

- Codestral: 22B coding model from Mistral AI, supports 80+ languages.

- Ghostwriter: an AI-powered programming assistant from Replit AI.

- DeciCoder 1B: Code completion LLM from Deci AI, trained on Starcoder dataset.

- SQLCoder: Open text-to-SQL query models fine-tuned on Starcoder, from Defog AI. Demo is here.

- CodeLLaMA: Fine-tuned version of LLaMA 2 for coding tasks, from Meta.

- Refact Code LLM: 1.6B coding LLM with fill-in-the-middle (FIM) capability, trained by Refact AI.

- Tabby: Open source, locally-hosted coding assistant framework. Can use Starcoder or CodeLLaMA.

- DuetAI for Developers: Coding assistance based on PaLM as part of Google’s DuetAI offering.

- Gorilla LLM: LLM model from researchers at UC Berkeley trained to generate API calls across many different platforms and tools.

- Deepseek Coder: Series of bilingual English/Chinese coding LLMs from DeepSeek AI, trained from scratch on 2T tokens, with a composition of 87% code and 13% natural language.

- Phind 70B: Code generation model purported to rival GPT-4 from AI startup Phind.

- Granite: Open-sourced family of code-specific LLMs from IBM Research. On Hugging Face here.

- MathGLM: Open model from Tsinghua University researchers challenging the statement that LLMs cannot do mathematics well. Nonetheless, math remains hard if you’re an LLM.

- Llemma: An Open Language Model For Mathematics: Fine-tuned version of CodeLLaMA on new dataset Proof-Pile-2 from Eleuther AI, a mixture of scientific papers and mathematical web content.

- BloombergGPT: LLM trained by Bloomberg from scratch based on code / approaches from BLOOM

- FinGPT: Finance-specific family of models trained with RLHF, fine-tuned from various base foundation models.

- DocLLM: Layout-aware large language model from JPMorgan

- Galactica: (MIT Blog Post) Learnings from Meta’s Galactica LLM, trained on scientific research papers.

- BioGPT: Generative Pre-trained Transformer for Biomedical Text Generation and Mining, open LLM from Microsoft Research trained on PubMed papers.

- MedPALM: A large language model from Google Research, designed for the medical domain. Google has continued this work with MedLM.

- Meditron: Fine-tuned LLaMAs on medical data from Swiss university EPFL. HuggingFace space here. Github here. Llama3 version released 2024/04/19.

- MedicalLLM: Evaluation benchmark for medical LLMs from Hugging Face including leaderboard.

- SaulLM-7B: Legal LLM from researchers at Equall.ai and several universities. A fine-tune of Mistral-7B trained on a legal corpus of over 30B tokens.

- TimeGPT: Transformer-based time series prediction models from NIXTLA. Requires using their service / an API token.

- Lag-Llama: Towards Foundation Models for Probabilistic Time Series Forecasting. Open-source foundation model for time series forecasting based on the transformer architecture.

- Granite: Time-series versions of open-sourced family of LLMs from IBM Research. On Hugging Face here.

- Docarray: Python library for nested, unstructured, multimodal data in transit, including text, image, audio, video, 3D mesh, and so on.

- Faiss: Library for efficient similarity search and clustering of dense vectors from Meta Research.

- Pinecone: Vector database that offers high-performance search and similarity matching.

- Weaviate: Open-source vector database to store data objects and vector embeddings from your favorite ML-models.

- Chroma: Open-source vector store used for storing and retrieving vector embeddings and metadata for use with large language models.

- Milvus: Vector database built for scalable similarity search.

- AstraDB: Datastax’s vector database offering built atop Apache Cassandra.

- Activeloop: Database for AI powered by a unique storage format optimized for deep-learning and Large Language Model (LLM) based applications.

- OSS Chat: Demo of RAG from Zilliz, allowing chat with OSS documentation.

- The Stanford Natural Language Inference (SNLI) Corpus: Foundational dataset for NLI-based evaluation, 570k human-written English sentence pairs manually labeled for balanced classification with the labels entailment, contradiction, and neutral.

- GLUE: General Language Understanding Evaluation Benchmark from NYU, University of Washington, and Google - model evaluation using Natural Language Inference (NLI) tasks.

- SuperGLUE: The Super General Language Understanding Evaluation, a new benchmark styled after GLUE with a new set of more difficult language understanding tasks, improved resources, and a new public leaderboard.

- SQuAD (Stanford Question Answering Dataset): Reading comprehension question answering dataset for LLM evaluation.

- BigBench: The Beyond the Imitation Game Benchmark (BIG-bench) from Google, a collaborative benchmark with over 200 tasks.

- BigBench Hard: Subset of BigBench tasks considered to be the most challenging, with associated paper.

- MMLU: Massive Multitask Language Understanding is a benchmark developed by researchers at UC Berkeley and others to specifically measure knowledge acquired during pretraining by evaluating models exclusively in zero-shot and few-shot settings.

- HELM: Holistic Evaluation of Language Models, a “living” benchmark designed to be comprehensive, from the Center for Research on Foundation Models (CRFM) at Stanford.

- HellaSwag: A challenge dataset for evaluating commonsense NLI that is especially hard for state-of-the-art models, though its questions are trivial for humans (>95% accuracy).

- Dynabench: A “platform for dynamic data collection and benchmarking”. Sort of a Kaggle / collaborative site for benchmarks and data collaboration, an effort of researchers from Meta and American universities.

- LMSys Chatbot Arena: Leaderboard from LMSys group based upon human evaluation and Elo score. The only evaluation that Andrej Karpathy trusts.

- Hugging Face Open LLM Leaderboard: Leaderboard from H4 (alignment) Group at Hugging Face. Largely open and fine-tuned models, though this can be filtered.

- AlpacaEval Leaderboard: AlpacaEval is an LLM-based automatic evaluation based on the AlpacaFarm evaluation set, which tests the ability of models to follow general user instructions.

- OpenCompass: Leaderboard for Chinese LLMs.

- Evaluating LLMs is a minefield: Popular deck from researchers at Princeton (and authors of AI Snake Oil) on the pitfalls and intricacies of evaluating LLMs.

- LM Contamination Index: The LM Contamination Index is a manually created database of contamination of LLM evaluation benchmarks.

- The Curious Case of LLM Evaluation: In depth blog post, examining some of the finer nuances and sticking points of evaluating LLMs.

- LLM Benchmarks: Dynamic dataset of crowd-sourced prompts that changes weekly for more realistic LLM evaluation.

- Language Model Evaluation Harness: EleutherAI’s language model evaluation harness, a unified framework to test generative language models on over 200 different evaluation tasks.

- PromptBench: Unified framework for LLM evaluation from Microsoft.

- HarmBench: Standardized evaluation framework for automated red teaming for mitigating risks associated with malicious use of LLMs. Paper on arxiv.

- AutoGPT: One of the most popular frameworks for using LLM agents, using the OpenAI API / GPT4.

- ThinkGPT: Python library for implementing Chain of Thoughts for LLMs, prompting the model to think, reason, and to create generative agents.

- AutoGen: Multi-agent LLM framework for building applications from Microsoft.

- XAgent: Open-source experimental agent, designed to be general-purpose and applicable to a wide range of tasks. From students at Tsinghua University.

- Thought Cloning: Github repo for implementation of Thought Cloning (TC), an imitation learning framework that trains agents to think like humans.

- Demonstrate-Search-Predict (DSP): Framework for solving advanced tasks with language models (LMs) and retrieval models (RMs).

- ReAct Framework: Prompting method that includes examples with actions, the observations gained by taking those actions, and transcribed thoughts (reasoning), so that LLMs can take complex actions and reason through or solve problems.

- Tree of Thoughts (ToT): Models the LLM reasoning process as a tree, where each node is an intermediate "thought" or coherent piece of reasoning that serves as a step towards the final solution.

- GPT Engineer: Python framework for attempting to get GPT to write code and build software.

- MetaGPT - The Multi-Agent Framework: Agent framework where different assigned roles (product managers, architects, project managers, engineers) are used for building different products (user stories, competitive analysis, requirements, data structures, etc.) given a requirement.

- OpenGPTs: Open source effort from LangChain to create a similar experience to OpenAI's GPTs with greater flexibility and choice.

- Devin: “AI software engineer” from startup Cognition Labs.

- SWE-Agent: Open source software engineering agent framework from researchers at Princeton.

- GATO: Generalist agent from Google DeepMind research for many tasks and media types.

- WebLLaMa: Fine-tuned version of LLaMa 3 from McGill University, optimized for web browsing tasks.

- LlamaIndex: LlamaIndex (formerly GPT Index) is a data framework for LLM applications to ingest, structure, and access private or domain-specific data. Used for RAG and building LLM applications working with stored data.

- LangChain: LangChain is a framework for developing applications powered by language models.

- Chainlit: Chainlit is an open-source Python package that makes it incredibly fast to build ChatGPT-like applications with your own business logic and data.

- Deepspeed: Deep learning optimization software suite that enables unprecedented scale and speed for DL Training and Inference from Microsoft.

- Megatron-LM: From NVIDIA, Megatron-LM enables pre-training of large transformer language models with efficient tensor, pipeline, and sequence-based model parallelism.

- GPT-NeoX: Eleuther AI’s library for large scale GPU training of LLMs, based on Megatron.

- TRL (Transformer Reinforcement Learning): Library for Reinforcement Learning of Transformer and Stable Diffusion models built atop the transformers library.

- Autotrain Advanced: In-development offering and Python library from Hugging Face for easy and fast auto-training of LLMs and Stable Diffusion models.

- Transformer Math: Detailed blog post from Eleuther AI on the mathematics of compute requirements for training LLMs

- Reinforcement Learning from Human Feedback: ELI5 from Wikipedia

- RLHF: Reinforcement Learning from Human Feedback: Blog post from Chip Huyen breaking down RLHF.

- Illustrating Reinforcement Learning from Human Feedback (RLHF): Blog post from Hugging Face breaking down how RLHF works with accompanying visuals.

- The Illustrated Word2vec: Explanation of word2vec from Jay Alammar.

- Sentence Transformers: Python framework for state-of-the-art sentence, text and image embeddings from Siamese BERT networks.

- Text Embeddings: Documentation / explainer from Cohere with accompanying video.

- Text Embeddings Visually Explained: Another Cohere post explaining the intuition and use cases behind text embeddings.

- A Deep Dive into NLP Tokenization and Encoding with Word and Sentence Embeddings: Lengthy blog post going into detail on embeddings from a deep learning fundamentals perspective and building up to word2vec and BERT.

- vLLM: vLLM is a fast and easy-to-use library for LLM inference and serving, using PagedAttention to manage attention key and value memory efficiently.

- Skypilot: SkyPilot is a framework for running LLMs, AI, and batch jobs on any cloud.

- 7 Frameworks for Serving LLMs: Medium post comparing different LLM serving frameworks.

- Deploying custom fine-tuned LLMs on Vertex AI: Medium post with a how-to on serving LLMs via GCP and Vertex AI

- Tiktoken: OpenAI’s BPE-based tokenizer

- SentencePiece: Unsupervised text tokenizer and detokenizer for text generation systems from Google (but not an official product).

- LLaMa 2: Incredibly popular open weights (with license) model from Meta AI which spawned a generation of offspring and fine-tunes. Comes in 7, 13, and 70B versions.

- Mistral 7B: Popular open model from French startup Mistral with no fine-tuning (only pretraining). See also the mixture-of-experts successors, Mixtral 8x7B and Mixtral 8x22B.

- Gemma: Lightweight open models from Google based on the same architecture as Gemini. Comes in 2B and 7B base and instruction-tuned versions.

- GPT-J and GPT-NeoX: Open models trained from scratch by Eleuther AI.

- Falcon 40B: Open text generation LLM from UAE’s Technology Innovation Institute (TII). Available on Hugging Face here.

- Falcon 2 11B: Second set of models in the series from TII, released May 2024, including a multimodal model. On Hugging Face here.

- StableLM: Open language model from Stability AI. Succeeded by StableLM 2, in 1.6B (Jan 2024) and 12B versions (April 2024, try live demo here)

- OLMo: Open Language Models from the Allen Institute for AI (AI2)

- Snowflake Arctic: Open LLM from Snowflake, released April 2024. Github here and on Hugging Face here.

- Minotaur 15B: Fine-tuned version of Starcoder on open code datasets from the OpenAccess AI Collective

- MPT: Family of open models free for commercial use from MosaicML. Includes MPT Storywriter which has a 65K context window.

- DBRX: Family of mixture-of-experts (MoE) large language models trained from scratch by Databricks Mosaic Research. Try it out in the Hugging Face playground here.

- Qwen: Open LLMs from Alibaba Cloud in 7B and 14B sizes, including chat versions. Model family 1.5 released Feb 2024 and Qwen1.5-MoE Mixture of Experts model released 03/28/2024.

- Command-R / Command-R+: Open LLM from Cohere for AI for long-context tasks such as retrieval augmented generation (RAG) and tool use. Available on Hugging Face: Command-R, Command-R+.

- Aya: Massively multilingual models from Cohere for AI. Aya 101 and Aya 23 support 101 and 23 languages respectively. Aya 23 comes in 8B and 35B versions.

- Grok-1: X.ai’s LLM, an MoE with 314B parameters, weights available via torrent. This is the (pre-trained) base model only, and not fine-tuned for chat.

- Jamba: Hybrid SSM-Transformer model from AI21 Labs - “world’s first production grade Mamba based model”. Weights on Hugging Face here.

- Fuyu-8B: Open multimodal model from Adept AI, a smaller version of the model that powers their commercial product.

- Yi: Bilingual open LLM from Chinese startup 01.AI founded by Kai-Fu Lee, with two versions Yi-34B & 6B. Also Yi-9B open-sourced in March 2024.

- OpenHermes: Popular series of open (and uncensored) LLMs from Nous Research, fine-tunes of models such as LLaMA, Mixtral, Yi, and SOLAR.

- Poro 34B: Fully open-source bilingual Finnish & English model trained in collaboration between Finnish startup Silo AI and the TurkuNLP group of the University of Turku.

- Nemotron-3 8B: Family of “semi-open” (requires accepting a license) LLMs from NVIDIA, optimized for their Nemo framework. Find them all on the collections page on HF.

- ML Foundations: Github repo for Ludwig Schmidt from University of Washington, includes open versions of multimodal models Flamingo & CLIP

- BERTViz: Interactive tool for visualizing attention in Transformer language models such as BERT, GPT2, or T5, based in Python and can be run in Colab.

- Jay Alammar’s Blog: Lots of great posts here visualizing and explaining LLMs such as The Illustrated Transformer and The Illustrated Stable Diffusion

- LLM Visualization: Interactive 3-D visualizations of nanoGPT, GPT-2, and GPT-3, with explanation walking through each piece of the model in detail.

- Prompt Engineering Guide: Comprehensive site for all things prompting related and beyond.

- Prompts for Education: Enhancing Productivity & Learning: Github repo with resources on using generative AI and prompting in educational settings.

- How I Think About Prompt Engineering: Post by François Chollet (creator of Keras) relating prompting back to programming paradigms.

- PromptIDE: Development environment and paradigm for prompt programming from xAI using their Grok model.

- Prompt Engineering Guide from OpenAI: Official guide from OpenAI on prompt engineering best practices (December 2023).

- Introduction to prompt design: Anthropic guide for prompt engineering with Claude.

- Prompt Library: Library of prompts from Anthropic for use with their models.

- More Useful Things: Prompt Library: Prompt library from researchers at Wharton, primarily geared towards a classroom / teaching setting.

- Awesome LLM Uncertainty Robustness: Collection of resources and papers on Uncertainty, Reliability and Robustness in Large Language Models.

- Foundation Model Transparency Index: LLM Transparency Index from the Center for Research on Foundation Models (CRFM) Group at Stanford, based upon 100 transparency indicators.

- AI Alignment: Writings on AI alignment from Paul Christiano, of the Alignment Research Center (ARC) & previous head of the language model alignment team at OpenAI.

- LIMA - Less is More for Alignment: Paper from Meta showing that data quality can trump model size for performance of smaller models.

- Safety Guidance | PaLM API: Safety guidelines from Google for using their PaLM model, though they are generally applicable.

- LLM Hacking: Prompt Injection Techniques: Medium post describing different techniques for prompt injection.

- Anthropic Long-term Benefit Trust (LTBT): Anthropic’s approach for governance of the company and addressing leadership and governance of AI.

- Guardrails: Python library for assurance and validation of the outputs of LLMs. In alpha.

- Detoxify: Toxic comment classification models based on BERT.

- Artificial Intelligence and Data Act (AIDA) Companion Document: High level details on the proposed Canadian AI legislation as part of Bill C-27.

- Evaluating social and ethical risks from generative AI: Blog post and source paper from Deepmind on framework for risks from GenAI.

- The Alignment Handbook: From the Hugging Face team, provides a series of robust training recipes that span the whole LLM pipeline for ensuring model alignment.

- Decoding Intentions: Artificial Intelligence and Costly Signals: The paper from Helen Toner on the OpenAI board that ruffled Sam Altman’s feathers.

- Cold Takes: Ethics and AI blog and podcast from Holden Karnofsky of Open Philanthropy

- You don’t need hosted LLMs, do you?: Comparison of costs and considerations for using self-hosted solutions vs OpenAI’s offerings.

- MLOps Community: Community of machine learning operations (MLOps) practitioners, but lately very much focused on LLMs.

- LLMOps Space: Global community for LLM practitioners & enthusiasts, focused on topics related to deploying LLMs into production.

- Aggregate Intellect Socratic Circles (AISC): Online community of ML and AI practitioners based in Toronto, with Slack server, journal club, and free talks

- /r/LanguageTechnology: Reddit community on Natural Language Processing and LLMs with over 40K members

- /r/LocalLLaMA: Subreddit to discuss training Llama and development around it, though also contains a lot of good general LLM discussion.

- Stanford CS324: Large Language Models at Stanford. All course materials are freely available and viewable online.

- Stanford CS224U: Natural Language Understanding: NLU course including a lot on LLMs and transformers, taught by Christopher Potts. Materials are in the Github repo. Lectures are in a Youtube playlist.

- Stanford CS224N: NLP with Deep Learning: Youtube playlist of lectures is here.

- Stanford CS25: Transformers United V3: Stanford course breaking down how transformers work and diving deep into the different kinds of transformers and how they're applied in different fields. The associated playlist with all the lectures is available on Youtube.

- CS685: Advanced Natural Language Processing (Spring 2023): Content of this graduate-level course from the University of Massachusetts Amherst - lots of content on LLMs and Transformers. Full course materials here.

- CS5785 Applied Machine Learning: Lecture notes and slides from Cornell’s Applied Machine Learning Course, Fall 2023.

- CS388: Natural Language Processing: Master's-level NLP course from the University of Texas at Austin, offered online and taught by Prof. Greg Durrett. Videos for lectures are in a Youtube playlist here.

- Hugging Face’s NLP Course: With a focus on using the transformers library and transformer models.

- LLM University: Documentation and free learning on LLMs from Cohere.

- Large Language Model Course: A microcourse composed of Colab notebooks and associated blog posts from Maxime Labonne @ JPMorganChase.

- Advanced NLP with spaCy: Course from Ines Montani, one of the core developers of spaCy, on using it. Includes data analysis, pipelines, and fitting models.

- Applied Language Technology: MOOC from the University of Helsinki on NLP, focusing on using spaCy.

- LangChain for LLM Application Development by Andrew Ng: Apply LLMs to your proprietary data to build personal assistants and specialized chatbots.

- Full Stack LLM Bootcamp: Best practices and tools for building LLM-powered apps - materials are free.

- LangChain & Vector Databases in Production: Free course on LangChain using Deep Lake, ActiveLoop’s Vector database offering.

- UVA Deep Learning Course: From the MSc in Artificial Intelligence at the University of Amsterdam. Highly technical! Tutorial notebooks here, Youtube playlist here.

- Intro to Text Analytics with R: From Data Science Dojo. The associated Youtube playlist is here.

- Natural Language Processing for Semantic Search: Course from Pinecone focused on embeddings and information retrieval, with accompanying code and videos.

- Generative AI Foundations on AWS Technical Deep Dive Series: Youtube playlist of working with GenAI, training and fine-tuning models with Sagemaker.

- FourthBrain Resources: Free resources from Fourthbrain from their community sessions and webinars, mainly focused on LLM development.

- Natural Language Processing with Large Language Models: Technical notebooks here from Jon Krohn’s half-day ODSC East workshop. Includes using transformers library for fine-tuning with T5 and using OpenAI API.

- Spark NLP Workshops: A lot of resources here on all things SparkNLP, including code in Jupyter notebooks for different applications of SparkNLP to many use cases.

- Generative AI for Beginners: Free online course from Microsoft

- Anaconda Learning: Free learning courses from Anaconda on Jupyter and conda basics.

- Weights & Biases Courses: Free LLM-related courses from Weights & Biases using their platform (requires email signup)

- Speech and Language Processing (3rd ed. draft): by Dan Jurafsky and James H. Martin. A fundamental text on all things NLP.

- Foundations of Statistical Natural Language Processing: by Christopher Manning and Hinrich Schütze

- Foundations of Machine Learning: by Mehryar Mohri, Afshin Rostamizadeh, and Ameet Talwalkar. Full text freely available as well as accompanying slides.

- Mathematics for Machine Learning: Free textbook from Cambridge University Press. Includes accompanying Jupyter notebooks.

- The Data Science Interview Book: A free online e-book for DS interview prep. Includes a growing section on NLP topics.

- Introduction to Modern Statistics: Free online textbook on statistics.

- Dive into Deep Learning: Interactive deep learning book with code, math, and discussions implemented with multiple frameworks. Chapters 9-11 focus on RNNs & Transformers and 15-16 on NLP applications.

- Understanding Deep Learning: by Simon J.D. Prince, free online and includes all code in Jupyter notebooks. Chapter 12 covers the transformer architecture.

- Natural Language Processing in Action, Second Edition: by Hobson Lane and Maria Dyshel from Manning. Currently a MEAP set for release in Jan 2024.

- Natural Language Processing with Transformers: by Lewis Tunstall, Leandro von Werra, and Thomas Wolf. From O’Reilly. You can view the code associated with the book in the Github repo here.

- Applied Text Analysis with Python: by Benjamin Bengfort, Rebecca Bilbro, Tony Ojeda, from O’Reilly. Aimed at Python developers breaking into NLP; focuses on building products and includes using Spark.

- Anaconda’s State of Data Science Report 2023: Anaconda’s annual survey for general DS. Requires form submission with email / personal details to download.

- State of AI Report 2023: From Air Street Capital. Very dense report focusing on high-level trends, industry players, funding, etc. and focused on LLMs and generative AI.

- Kaggle’s AI Report 2023: State of AI from Kaggle, taking the form of community-written long-form essays as part of a Kaggle competition

- MLOps Community 2023 LLM Survey Report: Survey from MLOps.community on trends in LLM usage and adoption.

- LLM normcore reads: “Anti-hype LLM reading list” compiled by Vicky Boykis in a Github gist

- Machine Learning Glossary: From Google Developers

- AI Canon: A collection of links to fundamental resources for AI and LLMs, from Andreessen Horowitz.

- Practical Large Language Models - Open Book: Programmatically generated open book compiling summaries of talks and events from Aggregate Intellect.

- NLP Progress: Repository to track the progress in Natural Language Processing (NLP), including the datasets and the current state-of-the-art for the most common NLP tasks.

- Awesome Legal NLP: Compilation of links to NLP resources related to the field of law and legislation.

- Awesome LLM Fine-tuning: Github awesome list of fine-tuning related resources.

- Instruction Tuning Papers: Repo with a list of papers related to instruction tuning LLMs.

- List of foundation LLMs: https://github.com/zhengzangw/awesome-huge-models#language-model

- Awesome LLM: Curated list of important LLM papers, frameworks, resources, and other lists. Actively updated.

- LLM Survey: A collection of papers and resources related to Large Language Models.

- Uni-LM: Aggregate repo of LLM and foundation model work across Microsoft Research. They also have a repo specifically for LLMOps.

These are not referral links.

- GPTRoad: Daily no-nonsense newsletter covering developments in the AI / LLM space. They also have a site following the HackerNews template.

- TLDR AI: Daily newsletter with little fluff, covering developments in AI news.

- AI Tool Report: Newsletter from Respell, with AI headlines, jobs,

- The Memo from Lifearchitect.ai: Bi-weekly newsletter with future-focused updates on developments in the LLM-space.

- AI Breakfast: Curated weekly analysis of the latest AI projects, products, and news

- The Rundown AI: Another daily AI newsletter (400K+ readers)

- Interconnects: LLM / AI newsletter for more technical readers.

- The Neuron: Another AI newsletter with a cutesy and light tone.

- Attention is All You Need: _The_ paper that started it all in 2017 and introduced the Transformer Architecture, from Google Brain.

- GPTQ: Accurate Post-Training Quantization for Generative Pre-trained Transformers: Post-training quantization paper from researchers at ETH Zurich and IST Austria.

- QLoRA: Efficient Finetuning of Quantized LLMs: The efficient method combining quantization with LoRA that produced Guanaco from LLaMA. From researchers at the University of Washington.

- Training a Helpful and Harmless Assistant with Reinforcement Learning from Human Feedback: Paper from Anthropic on using RLHF for desired safety behaviors in LLMs.

- BRIO - Bringing Order to Abstractive Summarization: Abstractive summarization model from researchers at Yale and Carnegie Mellon using contrastive learning to rank candidate summaries.

- Association for Computational Linguistics (ACL): https://www.aclweb.org/portal/

- ACL 2023 in Toronto: https://virtual2023.aclweb.org/