This paper has been accepted by CVPR 2021 (Oral).

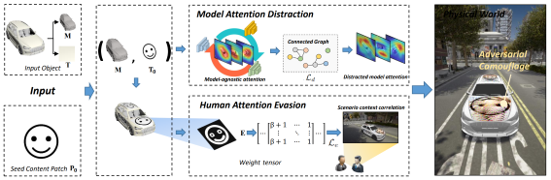

This paper proposes a dual attention suppression attack, which exploits both model attention and human attention. Specifically, we distract the model's attention to obtain a stronger attack, and we evade human attention to improve naturalness.
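To make "distracting the model attention" concrete, the sketch below uses a simplified proxy, not the paper's exact loss. It assumes a non-negative attention map per rendered view (for example a Grad-CAM map) and a binary mask of the object region, and measures how much of the attention still lands on the object. The function names and the batch averaging are hypothetical. NumPy is used here only to show the value; a training loop would compute the same quantity with differentiable PyTorch ops.

```python
import numpy as np


def attention_on_region(cam: np.ndarray, region: np.ndarray) -> float:
    """Fraction of the non-negative attention mass that falls inside a binary region."""
    cam = np.maximum(cam, 0.0)  # drop negative values, like the ReLU applied in Grad-CAM
    total = float(cam.sum())
    if total == 0.0:
        return 0.0  # an all-zero map puts no attention on the object
    return float((cam * region).sum()) / total


def distraction_loss(cams, object_masks) -> float:
    """Hypothetical model-attention term, averaged over a batch of views.

    Minimizing it pushes the model's attention away from the object region.
    """
    values = [attention_on_region(c, m) for c, m in zip(cams, object_masks)]
    if not values:
        raise ValueError("need at least one attention map")
    return sum(values) / len(values)
```

A uniform 2×2 map with a one-pixel object mask gives 0.25, while a map concentrated entirely on that pixel gives 1.0, so lowering this value corresponds to spreading attention off the object.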

You need:

- Dataset
  - The dataset can be generated with CARLA, a 3D virtual simulation environment and a commonly used open-source simulator for autonomous driving research. Specifically, you can select different conditional parameters to determine the camera angles, distances, and so on.
  - The dataset we generated can be accessed on Baidu Pan (dual) and Google Drive.
  - Unzip `masks.zip` and `phy_attack.zip` into `src/data`.

- 3D object `.obj` and texture file `.mtl` (e.g. `src/audi_et_te.obj` and `src/audi_et_te.mtl`)
- Face ID list `.txt` listing the faces to be trained (e.g. `src/all_faces.txt`)
- Seed content texture and edge mask texture
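The dataset item above leaves the choice of viewpoints to CARLA's conditional parameters. As a hedged sketch, one common way to choose them is a grid of distances, elevation angles, and azimuth angles around the target vehicle. The code below only enumerates such poses and does not call the CARLA API; the function name, the `(x, y, z, pitch, yaw)` tuple, and the look-at convention (degrees, z up, negative pitch when looking down) are assumptions.

```python
import math
from itertools import product
from typing import Iterable, List, Tuple

# Hypothetical pose layout: (x, y, z, pitch_deg, yaw_deg), target vehicle at the origin.
Pose = Tuple[float, float, float, float, float]


def camera_poses(distances: Iterable[float],
                 elevations_deg: Iterable[float],
                 azimuths_deg: Iterable[float]) -> List[Pose]:
    """Place a camera on spheres around the origin and orient it toward the origin."""
    poses: List[Pose] = []
    for d, elev, azim in product(distances, elevations_deg, azimuths_deg):
        e, a = math.radians(elev), math.radians(azim)
        x = d * math.cos(e) * math.cos(a)
        y = d * math.cos(e) * math.sin(a)
        z = d * math.sin(e)
        # Looking back at the origin: tilt down by the elevation, face the opposite azimuth.
        pitch = -elev
        yaw = (azim + 180.0) % 360.0
        poses.append((x, y, z, pitch, yaw))
    return poses
```

For example, two distances, two elevations, and four azimuths yield 16 viewpoints; converting each pose into a camera transform inside CARLA is omitted here.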

Requirements:

- PyTorch: 1.4.0
- neural_renderer: 1.1.3
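Training only updates the texture of the faces named in the face ID list (e.g. `src/all_faces.txt`). The README does not describe that file's format, so the sketch below assumes whitespace-separated, 0-based integer indices and turns them into a per-face mask of trainable faces. The function names and the format are hypothetical, not taken from the repository.

```python
from pathlib import Path
from typing import List


def parse_face_ids(text: str) -> List[int]:
    """Parse whitespace-separated integer face indices (assumed 0-based)."""
    return [int(tok) for tok in text.split()]


def load_face_ids(path: str) -> List[int]:
    """Read a face ID list such as src/all_faces.txt (file format assumed, not verified)."""
    return parse_face_ids(Path(path).read_text())


def trainable_face_mask(face_ids: List[int], num_faces: int) -> List[bool]:
    """True for faces whose texture is optimized, False for faces kept unchanged."""
    mask = [False] * num_faces
    for fid in face_ids:
        if not 0 <= fid < num_faces:
            raise ValueError(f"face id {fid} is out of range for {num_faces} faces")
        mask[fid] = True
    return mask
```

Validating the range up front catches an index-base mismatch (0-based versus 1-based) before it silently paints the wrong faces.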

To train, run `python train.py --datapath=[path to dataset] --content=[path to seed content] --canny=[path to edge mask]`. Results will be stored in `src/logs/`, including:

- output images
- `loss.txt`
- `texture.npy`, the trained texture file
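The training command takes a seed content image (`--content`) and an edge mask (`--canny`), which serve the human-attention side of the attack. One plausible way to use them, sketched below as an assumption rather than the repository's actual parameterization, is to copy seed pixels wherever a keep mask is 1 and let an optimized pattern fill the rest, plus a simple distance-to-seed penalty as a naturalness proxy. Whether the edge mask marks the kept or the trainable pixels is not stated in this README; `compose_texture`, `naturalness_penalty`, and `keep_mask` are hypothetical names.

```python
import numpy as np


def compose_texture(param: np.ndarray, seed: np.ndarray, keep_mask: np.ndarray) -> np.ndarray:
    """Blend an optimized pattern with the seed content (hypothetical parameterization).

    keep_mask == 1: the pixel is copied from the seed content.
    keep_mask == 0: the pixel comes from the optimized pattern.
    """
    pattern = 1.0 / (1.0 + np.exp(-param))  # sigmoid keeps colors inside (0, 1)
    return keep_mask * seed + (1.0 - keep_mask) * pattern


def naturalness_penalty(texture: np.ndarray, seed: np.ndarray) -> float:
    """Mean squared distance to the seed content; one simple proxy for looking natural."""
    return float(np.mean((texture - seed) ** 2))
```

With this blend, only pixels where `keep_mask` is 0 depend on `param`, so gradients never alter the kept seed structure. For RGB textures of shape `(H, W, 3)`, a mask of shape `(H, W, 1)` broadcasts across the color channels.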

To test, run `python test.py --texture=[path to texture]`. Results will be stored in `src/acc.txt`.
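The README does not say exactly which metric `test.py` writes to `src/acc.txt`. Assuming it is plain classification accuracy of the target model on images rendered with the trained texture, the computation reduces to the sketch below, where a lower value means a stronger attack. The function name and the integer label format are hypothetical.

```python
from typing import Sequence


def accuracy(predicted: Sequence[int], target: Sequence[int]) -> float:
    """Share of samples whose predicted label equals the ground-truth label."""
    if len(predicted) != len(target):
        raise ValueError("predicted and target must have the same length")
    if len(predicted) == 0:
        raise ValueError("cannot compute accuracy on an empty set")
    correct = sum(int(p == t) for p, t in zip(predicted, target))
    return correct / len(predicted)
```

For example, `accuracy([1, 2, 3, 4], [1, 0, 3, 0])` returns `0.5`.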