This package provides scrapers for all major Israeli banks and credit card companies. That's the plan, at least. Currently, only the following banks and credit card companies are supported:

- Bank Hapoalim (thanks @sebikaplun)

- Leumi Bank (thanks @esakal)

- Discount Bank

- Mizrahi Bank (thanks @baruchiro)

- Otsar Hahayal Bank (thanks @matanelgabsi)

- Visa Cal (thanks @nirgin)

- Max (formerly Leumi Card)

- Isracard

- Amex (thanks @erezd)

- Union Bank (thanks to the Intuit FDP OpenSource Team: @dratler, @kalinoy, @shanigad, @dudiventura and @NoamGoren)

- Beinleumi (thanks to @dudiventura from the Intuit FDP OpenSource Team)

To use this you will need to have Node.js >= 8.10.0 installed.
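If you want to fail fast on an older runtime, a minimal version check can compare `process.versions.node` against the 8.10.0 minimum stated above. This is a sketch only: `meetsMinimumNodeVersion` is a hypothetical helper, not part of the package.

```javascript
// Hypothetical helper, not part of israeli-bank-scrapers: checks whether a
// Node.js version string such as "8.10.0" or "v12.3.1" meets a minimum version.
function meetsMinimumNodeVersion(version, minimum = '8.10.0') {
  // Strip an optional leading "v" and split into numeric parts.
  const parse = (v) => v.replace(/^v/, '').split('.').map((part) => parseInt(part, 10) || 0);
  const current = parse(version);
  const required = parse(minimum);
  // Compare major, minor and patch in order; missing parts count as 0.
  for (let i = 0; i < 3; i += 1) {
    const a = current[i] || 0;
    const b = required[i] || 0;
    if (a !== b) {
      return a > b;
    }
  }
  return true; // the versions are equal
}

if (!meetsMinimumNodeVersion(process.versions.node)) {
  console.error(`Node.js >= 8.10.0 is required, found ${process.versions.node}`);
}
```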

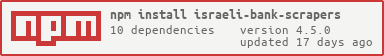

To use these scrapers, install the package from npm:

```sh
npm install israeli-bank-scrapers --save
```

Then you can simply import and use it in your Node.js module:

```javascript
const { createScraper } = require('israeli-bank-scrapers');

const credentials = { /* ... different for each bank */ };
const options = { /* ... see the options definition below */ };

(async function() {
  try {
    const scraper = createScraper(options);
    const scrapeResult = await scraper.scrape(credentials);

    if (scrapeResult.success) {
      scrapeResult.accounts.forEach((account) => {
        console.log(`found ${account.txns.length} transactions for account number ${account.accountNumber}`);
      });
    } else {
      throw new Error(scrapeResult.errorType);
    }
  } catch (e) {
    console.error(`scraping failed for the following reason: ${e.message}`);
  }
})();
```

The definition of the options object is as follows:

```
{
  companyId: string, // mandatory; one of 'hapoalim', 'leumi', 'discount', 'mizrahi', 'otsarHahayal', 'visaCal', 'max', 'isracard', 'amex'
  startDate: Date, // the date to fetch transactions from (can't be earlier than the scraper's maximum look-back period allows)
  combineInstallments: boolean, // if set to true, all installment transactions will be combined into the first one
  showBrowser: boolean, // shows the browser while scraping, good for debugging (default false)
  verbose: boolean, // include more debug info in the output
  browser: Browser, // optional. A puppeteer browser instance initialized outside the library scope; you can get one directly from puppeteer via `puppeteer.launch()`.
  executablePath: string, // optional. Path to a local Chromium to be used by puppeteer. Relevant when using the `israeli-bank-scrapers-core` library
  args: string[], // optional. Additional arguments to pass to the browser instance. The list of flags can be found below in (*)
  prepareBrowser: async (browser) => {}, // optional. Adjust the browser instance before it is used.
  preparePage: async (page) => {}, // optional. Adjust the page instance before it is used.
}
```

(*) The list of Chromium flags that can be used with the `args` option can be found here, and here is the list of Firefox flags.
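Because `startDate` can't reach further back than the scraper allows (one year for most scrapers, per the credentials sections below), it can help to clamp the requested date before building the options object. The sketch below assumes a plain one-year limit; for a scraper with a stricter limit (such as one year minus one day) the clamp would need adjusting. `clampStartDate` and `buildOptions` are hypothetical helpers; only the option keys come from the definition above.

```javascript
// Hypothetical helpers, not part of israeli-bank-scrapers.

// Returns the later of `requested` and the date one year before `now`,
// assuming the scraper allows fetching up to one year back.
function clampStartDate(requested, now = new Date()) {
  const oneYearAgo = new Date(now.getTime());
  oneYearAgo.setFullYear(oneYearAgo.getFullYear() - 1);
  return requested < oneYearAgo ? oneYearAgo : requested;
}

// Builds an options object using keys from the options definition.
function buildOptions(companyId, requestedStartDate, now = new Date()) {
  return {
    companyId, // e.g. 'hapoalim', 'leumi', 'discount', ...
    startDate: clampStartDate(requestedStartDate, now),
    combineInstallments: false,
    showBrowser: false,
    verbose: false,
  };
}

const options = buildOptions('leumi', new Date('2000-01-01'));
console.log(options.companyId, options.startDate.toISOString());
```

Clamping on the caller's side keeps an over-eager `startDate` from failing the scrape, at the cost of silently fetching a shorter range than requested.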

The structure of the result object is as follows:

```
{
  success: boolean,
  accounts: [{
    accountNumber: string,
    txns: [{
      type: string, // can be either 'normal' or 'installments'
      identifier: int, // only if exists
      date: string, // ISO date string
      processedDate: string, // ISO date string
      originalAmount: double,
      originalCurrency: string,
      chargedAmount: double,
      description: string,
      memo: string, // can be null or empty
      installments: {
        number: int, // the current installment number
        total: int, // the total number of installments
      },
      status: string // can be either 'completed' or 'pending'
    }],
  }],
  errorType: "INVALID_PASSWORD"|"CHANGE_PASSWORD"|"ACCOUNT_BLOCKED"|"UNKNOWN_ERROR"|"TIMEOUT"|"GENERIC", // only on success=false
  errorMessage: string, // only on success=false
}
```

You can also use the `SCRAPERS` list to get scraper metadata:

```javascript
const { SCRAPERS } = require('israeli-bank-scrapers');
```

`SCRAPERS` is an object mapping each company id to its scraper metadata:

```
{
  <companyId>: {
    name: string, // the name of the scraper
    loginFields: [ // a list of login fields required by this scraper
      '<some field>' // the name of the field
    ]
  }
}
```

This library is currently deployed to npm manually rather than by an automated process, so expect situations where code has been pushed to master but not yet deployed to npm.

If you are a developer and want to access the next version, install the library with the `next` tag:

```sh
npm install israeli-bank-scrapers@next --save
```

Keep in mind that although the `next` version should be stable, since it passed our code review, it is deployed automatically by a GitHub Actions workflow, without the manual tests we usually run before deploying the official version.

TL;DR: this is the same library as the default one. The only difference is that it uses `puppeteer-core` instead of `puppeteer`, which is useful if you use frameworks like Electron to package your application. In most cases you will probably want to use the default library (read the Getting Started section).

The Israeli bank scrapers library is published twice:

- israeli-bank-scrapers - the default variation, great for common usage as a Node.js dependency in a server application or CLI.

- israeli-bank-scrapers-core - extremely useful for applications that bundle `node_modules`, like Electron applications.

The default variation, israeli-bank-scrapers, uses puppeteer, which handles the installation of a local Chromium on its own. This behavior is very handy, since it takes care of all the hard work of figuring out which Chromium to download and of managing the actual download. As a side effect, it increases the size of `node_modules` by several hundred megabytes.

The core variation, israeli-bank-scrapers-core, uses puppeteer-core, which is exactly the same library as puppeteer except that it doesn't download Chromium when installed by npm. It is up to you to make sure the specific version of Chromium is installed locally and to provide a path to that version. This is useful in Electron applications, since it doesn't bloat the size of the application, and you can provide a much friendlier experience, such as loading the application first and downloading Chromium later when needed.

To install israeli-bank-scrapers-core:

```sh
npm install israeli-bank-scrapers-core --save
```

When using israeli-bank-scrapers-core, it is up to you to make sure the relevant Chromium version exists. You must:

- query for the specific Chromium revision required by the `puppeteer-core` library being used.

- make sure that you have a local version of that revision.

- provide the absolute path of that Chromium to the `israeli-bank-scrapers-core` scrapers.

Please read the following to learn more about the process:

- To get the required Chromium revision, use the following code:

  ```javascript
  import { getPuppeteerConfig } from 'israeli-bank-scrapers-core';

  const chromiumVersion = getPuppeteerConfig().chromiumRevision;
  ```

- Once you have the Chromium revision, you can either download it manually or use other libraries like download-chromium to fetch that version. The mentioned library is very handy, as it caches the download and provides useful helpers like download progress information.

- Provide the path to Chromium to the library using the `executablePath` option key.
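Putting the last step together, the sketch below builds options for `israeli-bank-scrapers-core` and rejects a relative `executablePath`, since the scrapers expect an absolute path. The `buildCoreOptions` helper and the `CHROMIUM_PATH` environment variable are assumptions for illustration; only the `companyId`, `startDate` and `executablePath` keys come from the options definition.

```javascript
const path = require('path');

// Hypothetical helper: builds scraper options for israeli-bank-scrapers-core,
// throwing if the Chromium path is missing or not absolute.
function buildCoreOptions(companyId, startDate, executablePath) {
  if (typeof executablePath !== 'string' || !path.isAbsolute(executablePath)) {
    throw new Error(`executablePath must be an absolute path, got: ${executablePath}`);
  }
  return { companyId, startDate, executablePath };
}

// Assumption: the Chromium location is supplied through an environment variable.
const chromiumPath = process.env.CHROMIUM_PATH;
if (chromiumPath) {
  const options = buildCoreOptions('hapoalim', new Date('2020-01-01'), chromiumPath);
  console.log(`using Chromium at ${options.executablePath}`);
}
```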

This scraper expects the following credentials object:

```javascript
const credentials = {
  userCode: '<user identification code>',
  password: '<user password>'
};
```

This scraper supports fetching transactions from up to one year back.

This scraper expects the following credentials object:

```javascript
const credentials = {
  username: '<user name>',
  password: '<user password>'
};
```

This scraper supports fetching transactions from up to one year back.

This scraper expects the following credentials object:

```javascript
const credentials = {
  id: '<user identification number>',
  password: '<user password>',
  num: '<user identification code>'
};
```

This scraper supports fetching transactions from up to one year back (minus 1 day).

Known limitations:

- Missing memo field
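Since `memo` may be missing (as noted for the scraper above) or null/empty, code that consumes the result object should not rely on it. The sketch below flattens a result with the structure described earlier into one row per transaction, defaulting `memo` to an empty string. `flattenTransactions` is a hypothetical helper, and the `sample` object is made-up illustrative input, not real scraper output.

```javascript
// Hypothetical helper: turns a scrape result into flat rows, one per
// transaction, defaulting a missing/null memo to an empty string.
function flattenTransactions(scrapeResult) {
  if (!scrapeResult.success) {
    throw new Error(`${scrapeResult.errorType}: ${scrapeResult.errorMessage}`);
  }
  const rows = [];
  scrapeResult.accounts.forEach((account) => {
    account.txns.forEach((txn) => {
      rows.push({
        accountNumber: account.accountNumber,
        date: txn.date,
        description: txn.description,
        chargedAmount: txn.chargedAmount,
        memo: txn.memo || '',
        status: txn.status,
      });
    });
  });
  return rows;
}

// Made-up sample shaped like the documented result object.
const sample = {
  success: true,
  accounts: [{
    accountNumber: '12345',
    txns: [
      { date: '2020-01-05T00:00:00.000Z', description: 'Coffee', chargedAmount: -12.5, status: 'completed' },
      { date: '2020-01-06T00:00:00.000Z', description: 'Books', chargedAmount: -80, memo: null, status: 'pending' },
    ],
  }],
};
console.log(flattenTransactions(sample));
```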

This scraper expects the following credentials object:

```javascript
const credentials = {
  username: '<user identification number>',
  password: '<user password>'
};
```

This scraper supports fetching transactions from up to one year back.

This scraper expects the following credentials object:

```javascript
const credentials = {
  username: '<user name>',
  password: '<user password>'
};
```

This scraper supports fetching transactions from up to one year back.

This scraper expects the following credentials object:

```javascript
const credentials = {
  username: '<user name>',
  password: '<user password>'
};
```

This scraper supports fetching transactions from up to one year back.

This scraper expects the following credentials object:

```javascript
const credentials = {
  username: '<user name>',
  password: '<user password>'
};
```

This scraper supports fetching transactions from up to one year back.

This scraper expects the following credentials object:

```javascript
const credentials = {
  id: '<user identification number>',
  card6Digits: '<6 last digits of card>',
  password: '<user password>'
};
```

This scraper supports fetching transactions from up to one year back.

This scraper expects the following credentials object:

```javascript
const credentials = {
  id: '<user identification number>',
  card6Digits: '<6 last digits of card>',
  password: '<user password>'
};
```

This scraper supports fetching transactions from up to one year back.
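Because the required credential fields differ per scraper, the `loginFields` list in the `SCRAPERS` metadata can be used to check a credentials object before scraping. A minimal sketch: `findMissingLoginFields` is hypothetical, and `exampleMetadata` is a hand-written stand-in shaped like one `SCRAPERS` entry, not the library's actual values. With the real package, you would pass `SCRAPERS[companyId]` instead.

```javascript
// Hypothetical helper: returns the login fields that are missing or empty
// in `credentials`, based on metadata shaped like a SCRAPERS entry.
function findMissingLoginFields(scraperMetadata, credentials) {
  return scraperMetadata.loginFields.filter((field) => {
    const value = credentials[field];
    return value === undefined || value === null || value === '';
  });
}

// Hand-written stand-in for one SCRAPERS entry (illustrative only).
const exampleMetadata = {
  name: 'Example card company',
  loginFields: ['id', 'card6Digits', 'password'],
};

const missing = findMissingLoginFields(exampleMetadata, { id: '123456789', password: 'secret' });
if (missing.length > 0) {
  console.error(`missing credentials: ${missing.join(', ')}`);
}
```

Checking fields up front gives a clear local error instead of a failed login attempt against the bank's site.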

These are the projects known to be using this module:

- Israeli YNAB updater - A command line tool for exporting bank data to CSVs, formatted specifically for YNAB

- Israel Finance Telegram Bot - A simple Telegram bot that sends notifications about new transactions and interacts with them

- Automated budget tracking app - An app for automatically sending transactions from Israeli banks and credit cards to budget tracking apps

- Oshi - Secure desktop app for retrieving your transactions from all Israeli banks and credit cards

Built something interesting you want to share here? Let me know.

The MIT License