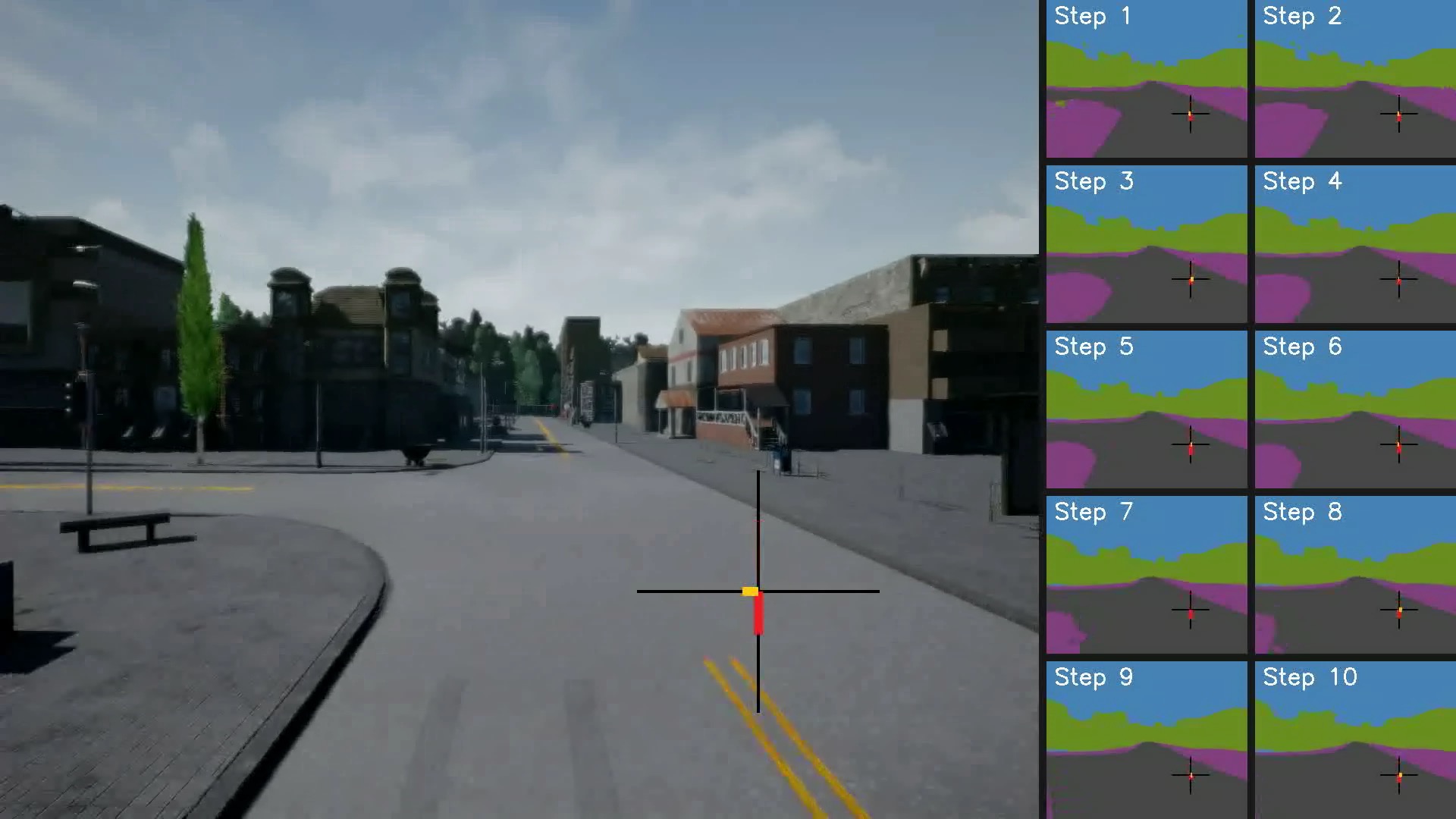

Semantic predictive control (SPC) is a policy learning framework that predicts future semantic segmentation and events by aggregating multi-scale feature maps. It uses dense supervision from semantic segmentation for feature learning, which substantially improves policy learning efficiency. The learned features are also explainable, since they depict future scenes through semantic segmentation and explicit events.
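As a generic illustration of what "dense" supervision means, every pixel of a predicted segmentation map contributes its own loss term. The sketch below computes a mean per-pixel cross-entropy in plain Python. It is an assumption-level example of the standard loss, not this repository's training code, which may weight classes differently or combine this term with losses on the predicted events.

```python
import math

def pixelwise_cross_entropy(logits, labels):
    """Mean cross-entropy over all pixels of an H x W segmentation map.

    logits: nested lists [H][W][num_classes] of unnormalized class scores.
    labels: nested lists [H][W] of ground-truth class indices.
    """
    total, count = 0.0, 0
    for logit_row, label_row in zip(logits, labels):
        for scores, label in zip(logit_row, label_row):
            # Stable log-sum-exp: subtract the max score before exponentiating.
            m = max(scores)
            log_norm = m + math.log(sum(math.exp(s - m) for s in scores))
            # Per-pixel loss is -log softmax(scores)[label].
            total += log_norm - scores[label]
            count += 1
    return total / count

# A 1x2 map with 2 classes and uniform scores: each pixel costs log(2).
print(pixelwise_cross_entropy([[[0.0, 0.0], [0.0, 0.0]]], [[0, 1]]))  # log(2) ≈ 0.693
```

Because each of the H x W pixels yields a separate target, a single frame provides far more training signal than a scalar reward would.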

This repository contains a PyTorch implementation of SPC, as well as training scripts to reproduce the policy learning results reported in our paper.

Our model is composed of four sub-modules:

- The feature extraction module extracts multi-scale intermediate features from RGB observations;

- The extracted features are then concatenated with tiled actions and fed to the multi-scale prediction module, which sequentially predicts future features;

- The information prediction module takes in the predicted latent feature representation and outputs corresponding future frame semantic segmentation and task-related signals, such as collision, off-road, and speed;

- The guidance network module predicts an action distribution for efficient sampling-based optimization.
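The data flow into the prediction module and the role of the guidance distribution can be sketched in plain Python. This is a minimal, hypothetical illustration, not the PyTorch implementation in this repository: `tile_and_concat` broadcasts each action component to a constant H x W plane and appends it to a C x H x W feature map, and `plan_action` performs a single-step random-shooting search. It assumes the guidance network outputs a per-dimension Gaussian (mean and standard deviation), and `cost_fn` is a hypothetical stand-in for a score computed from the predicted collision, off-road, and speed signals.

```python
import random

def tile_and_concat(features, action):
    """Append an action vector to a C x H x W feature map (nested lists).

    Each action component is tiled into a constant H x W plane, so the
    result has C + len(action) channels with the same spatial size.
    """
    height = len(features[0])
    width = len(features[0][0])
    tiled = [[[a] * width for _ in range(height)] for a in action]
    return features + tiled

def plan_action(mean, std, num_samples, cost_fn, rng=None):
    """Single-step random shooting guided by a per-dimension Gaussian.

    mean, std: hypothetical guidance-network output, one entry per action dim.
    cost_fn:   hypothetical stand-in for a cost derived from predicted events
               (collision, off-road, speed); lower is better.
    """
    if rng is None:
        rng = random.Random(0)
    # Always keep the guidance mean as a candidate, then add sampled ones.
    candidates = [list(mean)]
    for _ in range(num_samples):
        candidates.append([rng.gauss(m, s) for m, s in zip(mean, std)])
    # Pick the candidate with the lowest cost.
    return min(candidates, key=cost_fn)

# Usage: 1-channel 2x2 features plus a (steer, throttle) action -> 3 channels.
features = [[[0.1, 0.2], [0.3, 0.4]]]
print(len(tile_and_concat(features, [0.0, 0.5])))  # 3

def toy_cost(action):
    # Hypothetical: penalize steering away from 0 and reward throttle.
    steer, throttle = action
    return abs(steer) - throttle

print(plan_action([0.0, 0.5], [0.2, 0.1], num_samples=50, cost_fn=toy_cost))
```

Because the prediction module rolls features forward over several future steps, a real planner would score action sequences rather than a single action; the single-step version here only keeps the example short. Sampling around a learned distribution, instead of uniformly over the action space, is what makes the search cheap.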

Our results in the paper can be reproduced with the provided scripts by running the following, replacing `#ENVNAME` with the target environment:

```bash
cd scripts/
bash train_#ENVNAME.sh
```

The corresponding simulator environment must also be running alongside the training scripts.

To train on CARLA, the CARLA simulator must be started first. An example with default settings:

### On Ubuntu

```bash
SDL_VIDEODRIVER=offscreen SDL_HINT_CUDA_DEVICE=0 ./CarlaUE4.sh -carla-settings=Example.CarlaSettings.ini -windowed -ResX=256 -ResY=256 -carla-server -carla-no-hud
```

### On Windows

```
CarlaUE4.exe -windowed -ResX=800 -ResY=600 -carla-server -carla-no-hud -carla-settings=Example.CarlaSettings.ini
```

By default, the CARLA simulator listens on port 2000, and the `--port` argument should be set to the same value.
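Before launching a training script, it can help to confirm that the simulator is already accepting connections. The sketch below is a hypothetical helper (`wait_for_carla` is not part of this repository) that polls a TCP port using Python's standard `socket` module. It only checks that something is listening on the port, not that the listener is a CARLA server.

```python
import socket
import time

def wait_for_carla(host="localhost", port=2000, timeout=60.0, interval=1.0):
    """Poll host:port until a TCP connection succeeds.

    Returns True as soon as a connection is accepted, or False once
    `timeout` seconds have elapsed without success.
    """
    deadline = time.monotonic() + timeout
    while True:
        try:
            # Open and immediately close a connection; success means a listener exists.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)

# Example (not executed here): abort early if the simulator is not up.
# if not wait_for_carla(port=2000):
#     raise SystemExit("Nothing is listening on port 2000; start CARLA first.")
```

If you start the simulator on a non-default port, pass that same port both to this check and to `--port`.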

To evaluate the latest saved model and produce a demo, run `main.py` with the `--eval` flag. To then convert the saved snapshot images into a demo video, run:

```bash
python merge_demo.py
```
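If you assemble the snapshots into a video with a different tool instead, the frames must be ordered by frame index rather than alphabetically, since a plain string sort places `10` before `2`. The sketch below assumes the snapshot filenames embed an integer frame index; the `step_<n>.png` pattern is hypothetical, so check the names actually written during `--eval`.

```python
import re

def natural_key(name):
    """Split a filename into text and integer chunks, e.g. 'step_10.png' ->
    ['step_', 10, '.png'], so numeric parts compare as numbers."""
    return [int(tok) if tok.isdigit() else tok for tok in re.split(r"(\d+)", name)]

def order_frames(filenames):
    """Sort snapshot filenames by their embedded frame index."""
    return sorted(filenames, key=natural_key)

# Plain sorted() would give step_1, step_10, step_2.
print(order_frames(["step_10.png", "step_2.png", "step_1.png"]))
# ['step_1.png', 'step_2.png', 'step_10.png']
```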