Monitor the health of a Kubernetes cluster with ease, using Service Level Objectives (SLOs).

Essential Kubernetes Gauges (EKG) provides a set of standardized, prefabricated SLOs that measure the reliability of a Kubernetes cluster. You can think of these SLOs as a check engine light that tells you when your EKS cluster is misbehaving, with a historical record of when the cluster was behaving as desired and when not. SLOs allow you to set adjustable goals for the reliability aspects of your clusters. EKG includes SLOs that measure several aspects of a cluster:

- Control Plane Health
  - Is the Kubernetes API responding normally?
  - Is it performant?
- Cluster Health
  - Are the nodes healthy?
  - Is there some minimum of resource headroom?
  - Can we start new workloads?
- Workload Health
  - Is anything in a bad state?
  - Is there at least some kind of workload running?
- Resource Efficiency
  - Are resources underutilized in this cluster?
  - Is the cluster scaling in such a way that it is making good use of resources, without endangering workloads?

- Cost Efficiency measurements have been proposed as a future enhancement and are under consideration.

These aspects of cluster behavior provide a gauge on how well Kubernetes (as an application) is running, as well as how the overall cluster is faring, along with some insight into the health of the workloads the cluster is supporting. By running EKG's SLOs, you can measure how well your clusters are doing over time, share numbers, charts, and reports on cluster reliability across your teams, managers, and stakeholders, and use all the features of Nobl9, including alert policies and alert integrations with a wide variety of popular tools.

For more information on the specific SLOs included in EKG and how to make use of them, please see the SLO docs.

While EKG's SLOs and techniques are usable with any flavor of Kubernetes, and while the underlying instrumentation frameworks are compatible with many distributions, we currently provide end-to-end automation for the following products:

- Amazon EKS (Elastic Kubernetes Service)

Support for additional Kubernetes distributions has been proposed and is under consideration.

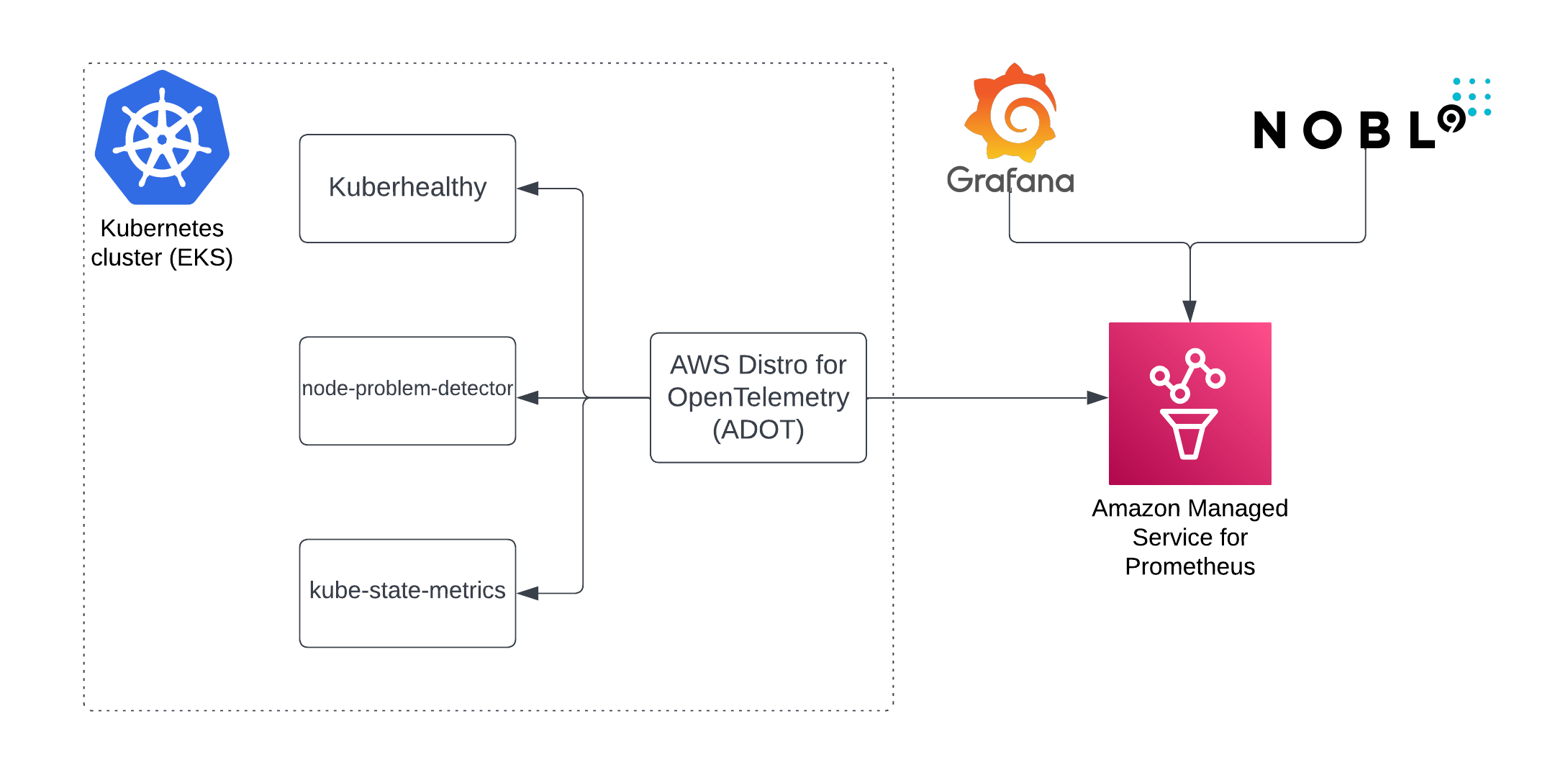

An Amazon Managed Service for Prometheus workspace is optionally created, and metrics are collected from the following electrodes:

- kube-state-metrics - metrics for the health of the various objects inside the cluster, such as deployments, nodes, and pods

- node-problem-detector - metrics for the health of each node, covering infrastructure daemon issues (e.g. NTP service down), hardware issues (e.g. bad CPU, memory, or disk), kernel issues (e.g. kernel deadlock, corrupted file system), and container runtime issues (e.g. unresponsive runtime daemon)

- Kuberhealthy - metrics for the health of the cluster; it performs synthetic tests that ensure daemonsets and deployments can be deployed, DNS resolves names, etc.

Metrics are plumbed into Amazon Managed Service for Prometheus using AWS Distro for OpenTelemetry (ADOT). ADOT also collects metrics exposed by other services, so it can be the only collector you need for all of the platform and application metrics from a cluster.

The repository gives fine-grained control over what is deployed or reused. For instance, you can install only chosen electrodes or all of them, reuse an existing Amazon Managed Service for Prometheus workspace, or install only ADOT. For details, check the documentation of the specific modules. Electrodes can be used with any Kubernetes cluster: EKS, GKE, on-premises, etc.

To learn how to contribute please read the contribution guidelines.

- Prerequisites. You will need:

  - A Nobl9 Organization. If you don't already have a Nobl9 org, you can sign up for Nobl9 free edition at https://app.nobl9.com/signup/
  - Terraform. If you need to install it: https://developer.hashicorp.com/terraform/downloads
  - An AWS account, with configuration and credentials connecting it to Terraform, for example by installing the AWS CLI
  - An EKS cluster. We assume you have a bunch of these, but if you want to spin up a fresh test cluster to try out EKG in isolation, how about following the steps in this tutorial? The tutorial defaults to Terraform Cloud (which is quite nice), but for this exercise we recommend you click on the Terraform OSS tabs as you proceed.
  - An IAM OIDC provider configured for the EKS cluster. The tutorial linked above does it for you, but if you are using an existing cluster, you may need to do it manually.

- Create a `terraform.tfvars` file. A starting point can be found in `terraform.tfvars.example`:

  ```shell
  cp terraform.tfvars.example terraform.tfvars
  # edit that file with an editor of your choice
  # provide values for your AWS region, cluster name, and Nobl9 organization
  ```

- Provide required secrets to the Nobl9 Terraform Provider. In the Nobl9 web UI, go to Settings > Access Keys, create an access key (save it somewhere), and then set the values as env vars:

  ```shell
  export TF_VAR_nobl9_client_id="<your Nobl9 Client ID>"
  export TF_VAR_nobl9_client_secret="<your Nobl9 Client Secret>"
  ```

- Use Terraform to install the EKG components. In the root of this repository, run:

  ```shell
  terraform init
  terraform apply
  ```

  The output of the above is:

  ```
  Apply complete! Resources: 36 added, 0 changed, 0 destroyed.

  Outputs:

  amp_ws_endpoint = "https://aps-workspaces.us-east-2.amazonaws.com/workspaces/ws-abcdef12-3456-7890-abcd-ef1234567890/"
  ```

Congratulations! EKG is up and running. Go back to the Nobl9 web UI and explore your newly created SLOs. For more information about these SLOs, how to use them, and how to tune them to your clusters' conditions and workloads, see the SLO docs.
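Before running `terraform apply`, a quick pre-flight check can catch a missing tool or an unexported credential. The following is a minimal sketch, not part of EKG: `require_tools` and `missing_env` are hypothetical helper names, and checking for the `aws` command assumes you chose the AWS CLI as the way to supply AWS credentials to Terraform.

```shell
#!/bin/sh
# Hypothetical pre-flight helpers; neither is part of EKG or Terraform.

# Report each named command that is not on PATH.
# Returns non-zero if at least one command is missing.
require_tools() {
  rc=0
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "missing tool: $tool" >&2
      rc=1
    fi
  done
  return "$rc"
}

# Print the name of each variable that is not exported or is empty.
# Terraform only sees exported variables, so printenv is used here.
missing_env() {
  for name in "$@"; do
    if [ -z "$(printenv "$name")" ]; then
      echo "$name"
    fi
  done
}

# Assumption: Terraform and the AWS CLI are the tools you rely on.
if require_tools terraform aws; then
  echo "tools: ok"
fi

# These two names come from the export commands in the steps above.
unset_vars="$(missing_env TF_VAR_nobl9_client_id TF_VAR_nobl9_client_secret)"
if [ -n "$unset_vars" ]; then
  echo "not exported: $unset_vars" >&2
else
  echo "Nobl9 credentials: ok"
fi
```

The script only reports problems and does not stop on its own; if you want it to gate `terraform apply`, you could exit with a non-zero status when either check fails.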

The installation creates the following components in the EKS cluster:

- kube-state-metrics

- Kuberhealthy

- node-problem-detector

- AWS Distro for OpenTelemetry (ADOT)

- A Nobl9 Agent compatible with and connecting to Amazon Managed Service for Prometheus

In Nobl9:

- A Nobl9 Project and a Service to hold the SLOs

- The EKG SLOs (several prefab SLOs for Kubernetes)

- An agent-based Data Source configured to receive data from the Nobl9 Agent running in the EKS cluster

In AWS:

- An IAM User with access keys (configured in the Nobl9 Agent) and an inline policy allowing it to access the Amazon Managed Service for Prometheus workspace

- An Amazon Managed Service for Prometheus workspace, available at the URL output as `amp_ws_endpoint`

The `amp_ws_endpoint` is a URL for Amazon Managed Service for Prometheus that can be used directly, for instance in Grafana or Nobl9. Deploying Grafana or other visualization tools is not in the scope of this project, but if you are looking for a quick and clean Grafana to play with as you explore the metrics in your newly created Prometheus, how about running it locally from a Docker image, including the additional env vars required to allow it to connect to Amazon's managed Prometheus?

Or in short:

```shell
docker run -d -p 3000:3000 --name="grafana" -e "AWS_SDK_LOAD_CONFIG=true" -e "GF_AUTH_SIGV4_AUTH_ENABLED=true" grafana/grafana-oss
```

Then, when you configure a Prometheus data source, it will offer AWS-specific settings to connect it to that `amp_ws_endpoint`.
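If you later want to reuse this workspace through the `managed_prometheus_workspace_id` input, you need its ID rather than the full URL. As a small sketch, assuming the workspace ID is the last path segment of the endpoint (as in the sample output shown earlier), it can be extracted with plain shell parameter expansion. The variable names here are illustrative only.

```shell
#!/bin/sh
# Sample value copied from the example Terraform output in this README.
# In practice it could come from: terraform output -raw amp_ws_endpoint
amp_ws_endpoint="https://aps-workspaces.us-east-2.amazonaws.com/workspaces/ws-abcdef12-3456-7890-abcd-ef1234567890/"

# Drop the trailing slash, then keep only the last path segment.
trimmed="${amp_ws_endpoint%/}"
workspace_id="${trimmed##*/}"

echo "$workspace_id"
```

For the sample endpoint this prints `ws-abcdef12-3456-7890-abcd-ef1234567890`.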

- Nobl9 Documentation

- Nobl9 SLOcademy, a starting point to explore SLOs and Nobl9's feature set

- SLOconf, a conference dedicated to SLOs, rich with online content about SLOs from across the industry

Requirements:

| Name | Version |
|---|---|
| terraform | >= 1.1.0 |
| aws | >= 3.72 |
| helm | >= 2.4.1 |
| kubernetes | >= 2.10 |
| nobl9 | 0.26.0 |

Providers:

| Name | Version |
|---|---|
| aws | 5.53.0 |
| kubernetes | 2.30.0 |

Modules:

| Name | Source | Version |
|---|---|---|
| adot_amp | ./modules/adot-amp | n/a |
| electrodes | ./modules/electrodes | n/a |
| nobl9 | ./modules/nobl9 | n/a |

Resources:

| Name | Type |
|---|---|
| kubernetes_namespace.this | resource |
| aws_eks_cluster.this | data source |
| aws_eks_cluster_auth.this | data source |

Inputs:

| Name | Description | Type | Default | Required |
|---|---|---|---|---|
| api_error_rate_target | SLO reliability target for apiserver error rate | `number` | n/a | yes |
| api_latency_target | SLO reliability target for apiserver latency | `number` | n/a | yes |
| aws_region | AWS Region | `string` | n/a | yes |
| create_namespace | Create the namespace specified by the `namespace` variable if it does not exist | `bool` | `true` | no |
| eks_cluster_id | Name of the EKS cluster | `string` | n/a | yes |
| enable_kube_state_metrics_slos | Would you like to include SLOs that rely on kube-state-metrics? | `bool` | n/a | yes |
| enable_kuberhealthy_slos | Would you like to include SLOs that rely on kuberhealthy metrics? | `bool` | n/a | yes |
| enable_node_problem_detector_slos | Would you like to include SLOs that rely on node-problem-detector? | `bool` | n/a | yes |
| kuberhealthy_pod_start_latency | Allowable duration of kuberhealthy check | `number` | n/a | yes |
| kuberhealthy_pod_start_latency_target | SLO reliability target for kuberhealthy pod start latency | `number` | n/a | yes |
| kuberhealthy_pod_start_success_target | SLO reliability target for kuberhealthy pod start success | `number` | n/a | yes |
| managed_prometheus_workspace_id | Amazon Managed Service for Prometheus workspace ID (if nothing is passed, a new workspace is created) | `string` | `""` | no |
| memory_headroom_target | SLO reliability target for memory headroom | `number` | n/a | yes |
| memory_headroom_threshold | Maximum allowable memory consumption (fraction of 1.0) | `number` | n/a | yes |
| memory_utilization_target | SLO reliability target for memory utilization | `number` | n/a | yes |
| memory_utilization_threshold | Minimum allowable memory consumption (fraction of 1.0) | `number` | n/a | yes |
| namespace | Namespace where electrodes will be installed | `string` | `"ekg-monitoring"` | no |
| nobl9_client_id | Nobl9 Client ID (create in Nobl9 web app using Settings > Access Keys) | `string` | n/a | yes |
| nobl9_client_secret | Nobl9 Client Secret (create in Nobl9 web app using Settings > Access Keys) | `string` | n/a | yes |
| nobl9_organization_id | Nobl9 Organization ID (visible in Nobl9 web app under Settings > Account) | `string` | n/a | yes |
| nobl9_project_name | Nobl9 Project name (create one in the Nobl9 web app using Catalog > Projects, or use 'default') | `string` | `"default"` | no |
| node_not_ready_target | SLO reliability target for node not ready | `number` | n/a | yes |
| node_not_ready_threshold | Maximum allowable number of nodes in a non-ready state | `number` | n/a | yes |
| node_problem_target | SLO reliability target for node problems | `number` | n/a | yes |
| node_problem_threshold | Number of node problems that is considered a problem for the cluster | `number` | n/a | yes |
| phase_failed_unknown_target | SLO reliability target for not having pods in a bad state (failed or unknown) | `number` | n/a | yes |
| phase_failed_unknown_threshold | This many pods in a bad state (failed or unknown) is considered a failing workload | `number` | n/a | yes |
| phase_pending_target | SLO reliability target for not having pods in a pending state | `number` | n/a | yes |
| phase_pending_threshold | Maximum allowable number of pods in a pending state | `number` | n/a | yes |
| phase_running_target | SLO reliability target for having enough things running in the cluster | `number` | n/a | yes |
| phase_running_threshold | Minimum allowable ratio of pods that should be running | `number` | n/a | yes |
| rolling_window_days | Evaluation time period for EKG SLOs | `number` | n/a | yes |
| tags | Additional tags (e.g. `map('BusinessUnit', 'XYZ')`) | `map(string)` | `{}` | no |

Outputs:

| Name | Description |
|---|---|
| amp_ws_endpoint | Amazon Managed Prometheus endpoint |