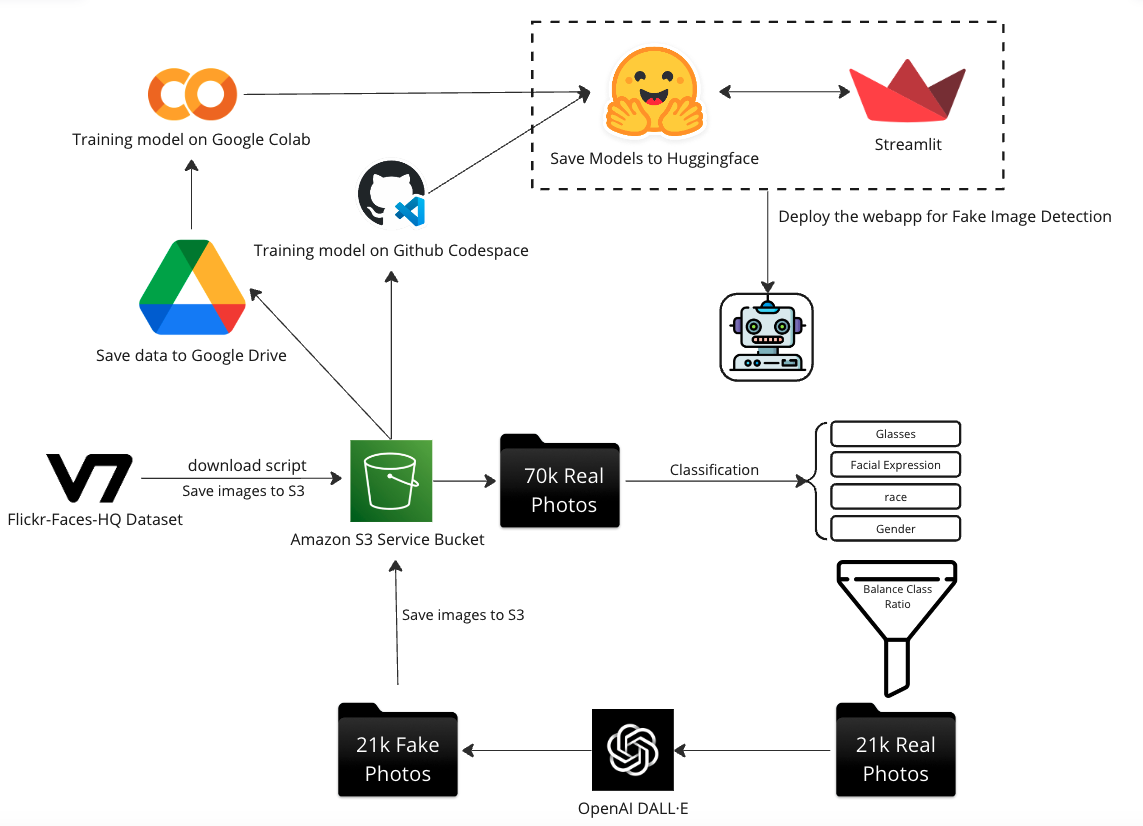

This project generates AI images using the DALL-E 2 API from OpenAI and uses the generated pictures to build an AI-generated image detector.

Try the final webapp 🤖🤖🤖: AI Human Face Generator & Detector Webapp

*Note: You will need an OpenAI API key to get access. Read more about how to get an OpenAI API key.

Watch the project presentation video below to get more information:

We have witnessed significant advancements in image-generation AI models in recent years, including the impressive text-to-image model DALL-E 2 developed by OpenAI. While these models have various applications, including entertainment, advertising, and architecture design, their use raises important ethical questions because AI-generated images can be misused. We therefore aim to build a machine learning model that can differentiate between AI-generated images and real human face images, to promote ethical use of these models and contribute to this significant area of study.

- dalle2_code.py: Generates AI images and saves them to Google Drive.

- localDalle2.py: Reads images from the input folder, generates AI images, and saves them to the local output folder.

- downloaded-json: Gets real human pictures from V7.

- aws_upload.ipynb: Uploads images to an Amazon S3 bucket.

- final_output_mobielnet.ipynb: Trains the MobileNetV2 model using S3 and Codespaces.

- MobileNetV2_model.py: Trains the MobileNetV2 model using S3 on an Apple M2 GPU.

- Resnet.ipynb: Trains ResNet50 on Google Colab.

- cnn_model.ipynb: Trains and tests a model locally using a Hugging Face model.

- mobileNetV2_visual.ipynb: Visualizes the MobileNetV2 model.

- resNet_visualization.ipynb & restNet_visual.ipynb: Visualize ResNet50 using UMAP.

- app.py: Deploys the AI generator and detector using Hugging Face and Streamlit.

To install the required packages, run the following command:

make install

This will install the required packages specified in the requirements.txt file.

To use the DALL-E 2 API, you will need to obtain an API key from OpenAI. Once you have an API key, create a .env file in the root directory of the project with the command below, then add the line that follows it to the file:

touch .env

OPENAI_API_KEY = "YOUR_OPENAI_API_KEY"
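As a rough illustration of how a script might pick up this key, here is a minimal sketch that uses only the Python standard library. The helper names `load_env_file` and `get_openai_key` are hypothetical and not taken from this repository; the project's own scripts may read the file differently (for example with a dotenv package).

```python
import os
from pathlib import Path


def load_env_file(path):
    """Parse KEY = "VALUE" lines from a .env file into a dict (hypothetical helper)."""
    values = {}
    for raw_line in Path(path).read_text(encoding="utf-8").splitlines():
        line = raw_line.strip()
        # Skip blank lines, comments, and anything without an assignment.
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        value = value.strip()
        # Drop one layer of matching surrounding quotes, if present.
        if len(value) >= 2 and value[0] == value[-1] and value[0] in "\"'":
            value = value[1:-1]
        values[key.strip()] = value
    return values


def get_openai_key(env_path=".env"):
    """Prefer the process environment, then fall back to the .env file."""
    key = os.environ.get("OPENAI_API_KEY")
    if not key and Path(env_path).exists():
        key = load_env_file(env_path).get("OPENAI_API_KEY")
    if not key:
        raise RuntimeError("OPENAI_API_KEY is not set")
    return key
```

Keeping the key in .env (and out of version control) means the same code can run locally and in a hosted deployment where the key is supplied as an environment variable.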

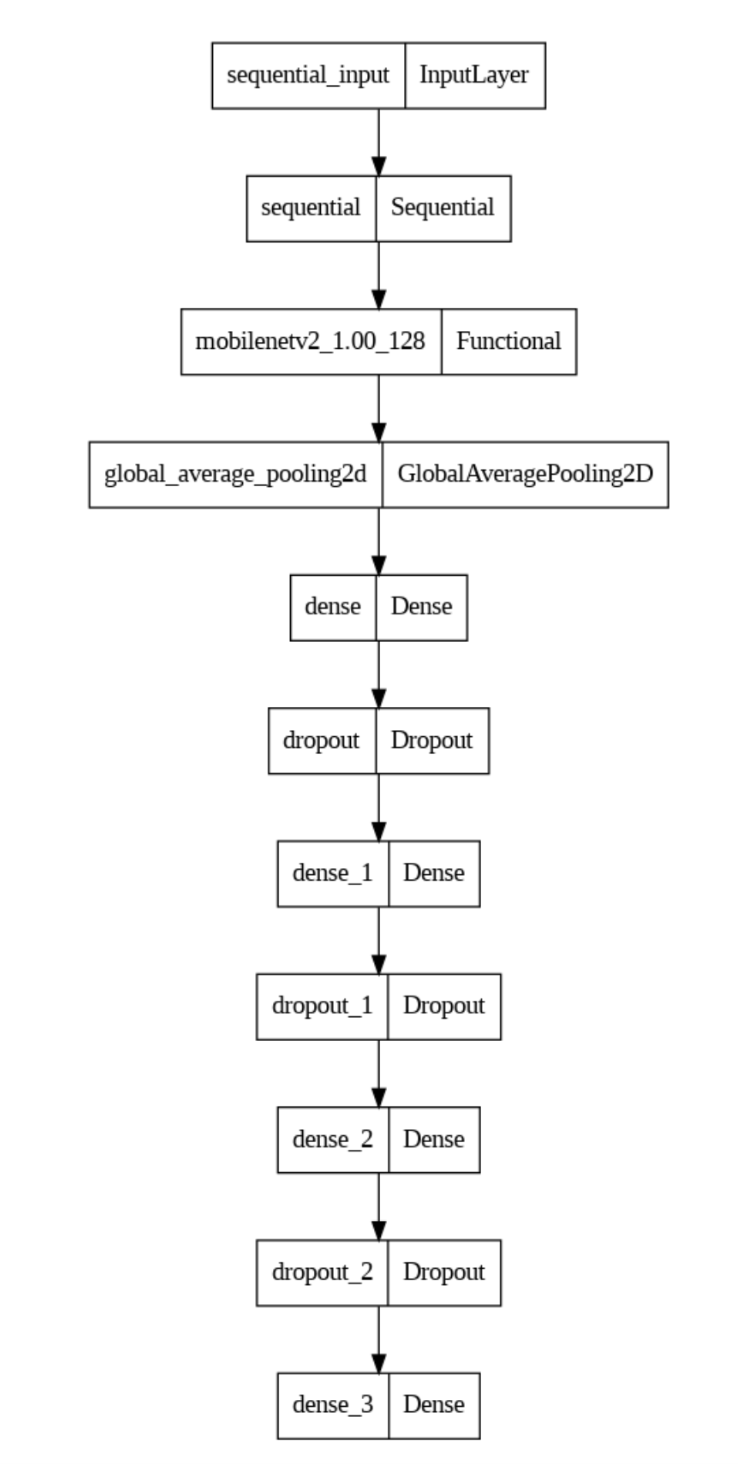

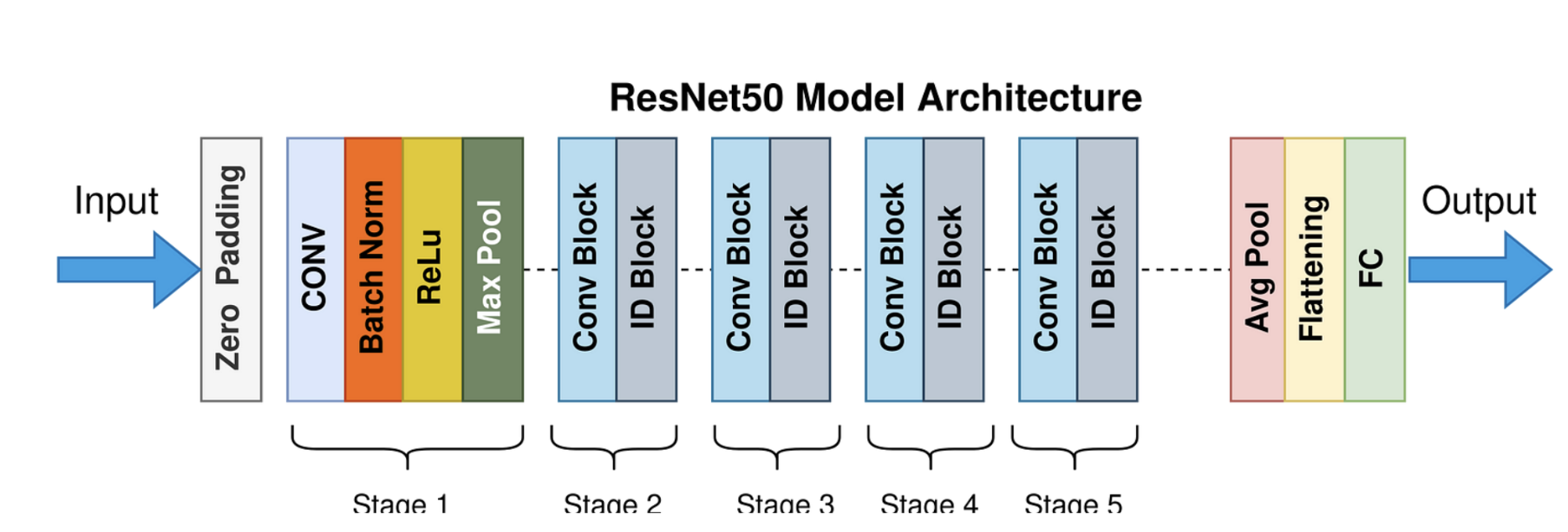

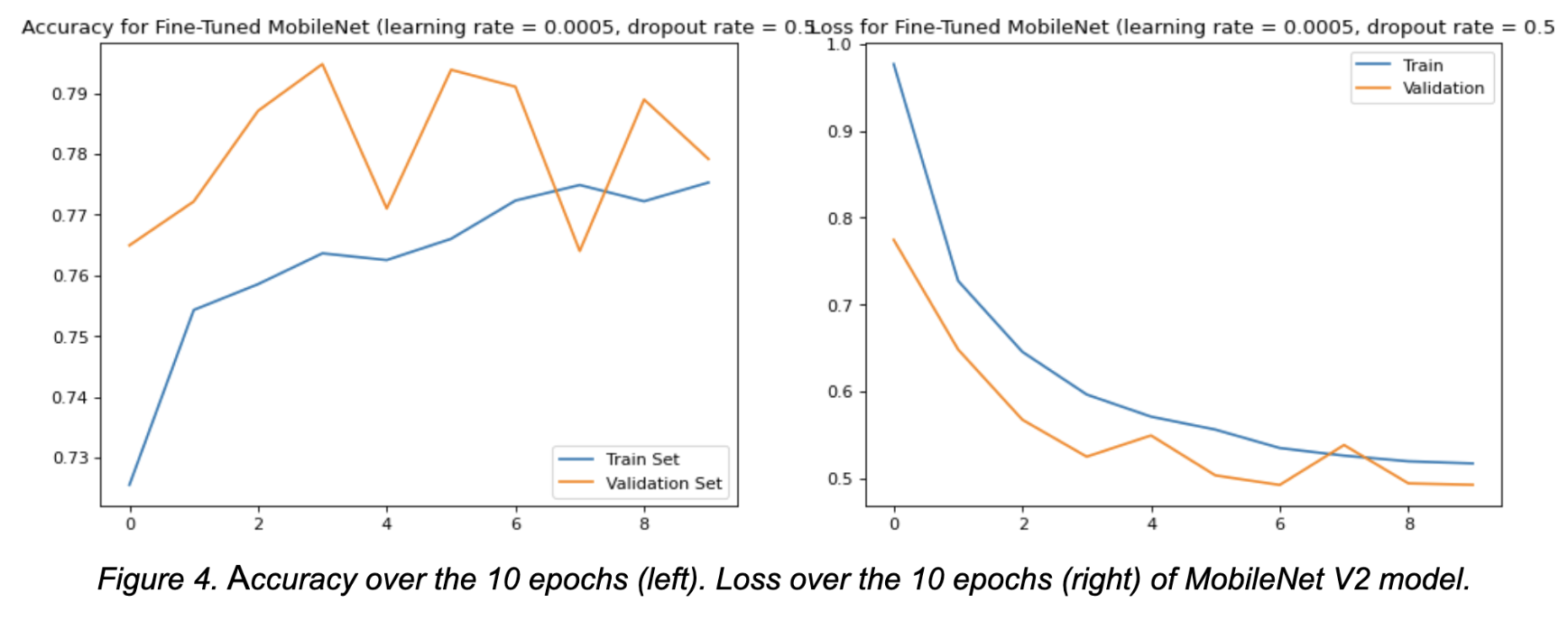

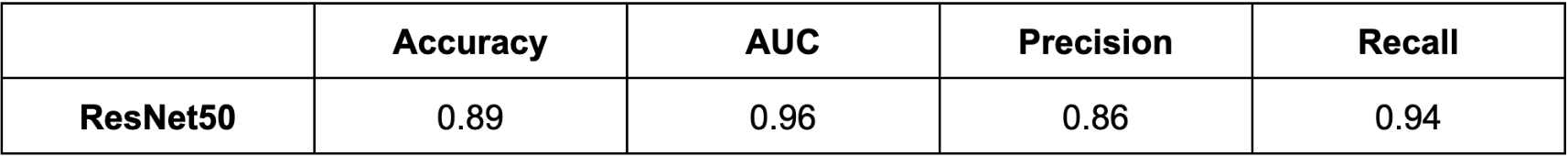

We aim to develop a neural network model for detecting fake AI-generated images, drawing inspiration from previous works by Sabithaa et al. (2020), Zhang et al. (2020), and He et al. (2016). To fine-tune the pre-trained ResNet model, we will use the FFHQ dataset, which Sabithaa et al. (2020) also used as an evaluation dataset. Additionally, we will evaluate MobileNetV2, a lightweight alternative to ResNet50, to see how its performance compares. Our goal is to achieve high accuracy while gaining insights into the factors influencing detection performance through model visualization.

The code is in the AI_generate folder, which contains three different ways to generate AI images with DALL-E 2: local-to-local, S3-to-S3, and local-to-Google Drive.
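To give a sense of what a generation call involves, the sketch below builds (but does not send) an HTTP request for OpenAI's image generation endpoint using only the standard library. The endpoint URL, the `dall-e-2` model name, the default size, and the function name `build_generation_request` are assumptions for illustration; the scripts in this folder may use the OpenAI Python client or an image-based endpoint instead.

```python
import json
import urllib.request

# Assumed endpoint for OpenAI's image generation API.
IMAGES_URL = "https://api.openai.com/v1/images/generations"


def build_generation_request(prompt, api_key, n=1, size="256x256"):
    """Build a POST request asking for `n` images for a text prompt."""
    payload = {"model": "dall-e-2", "prompt": prompt, "n": n, "size": size}
    return urllib.request.Request(
        IMAGES_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Hypothetical prompt and placeholder key; nothing is sent over the network here.
request = build_generation_request("a portrait photo of a human face", "YOUR_OPENAI_API_KEY")
```

The three workflows would differ mainly in where the inputs are read from and where the returned images are stored, not in the request itself.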

The model code is in the Model_processing and Model_train folders:

- MobileNetV2

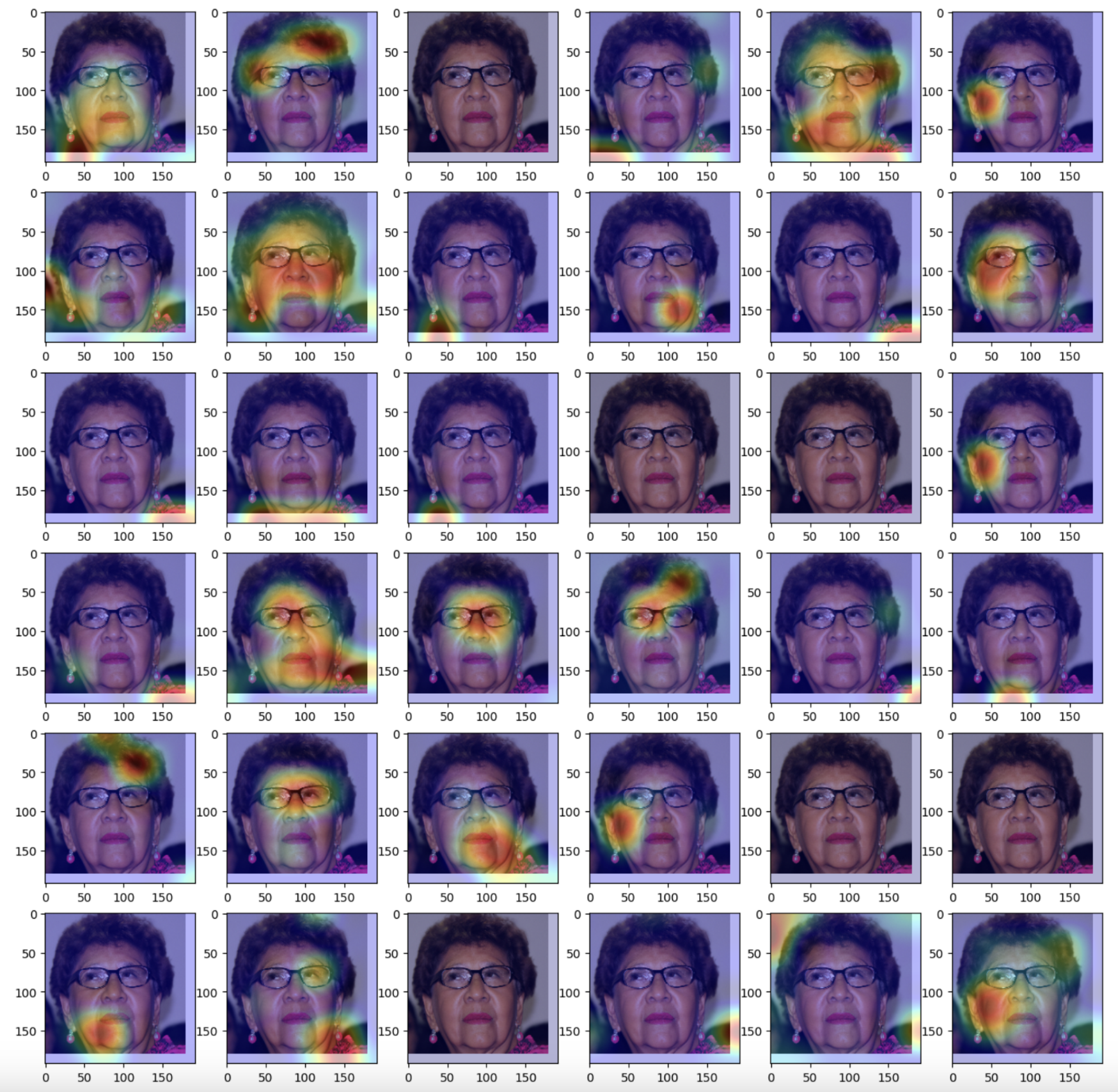

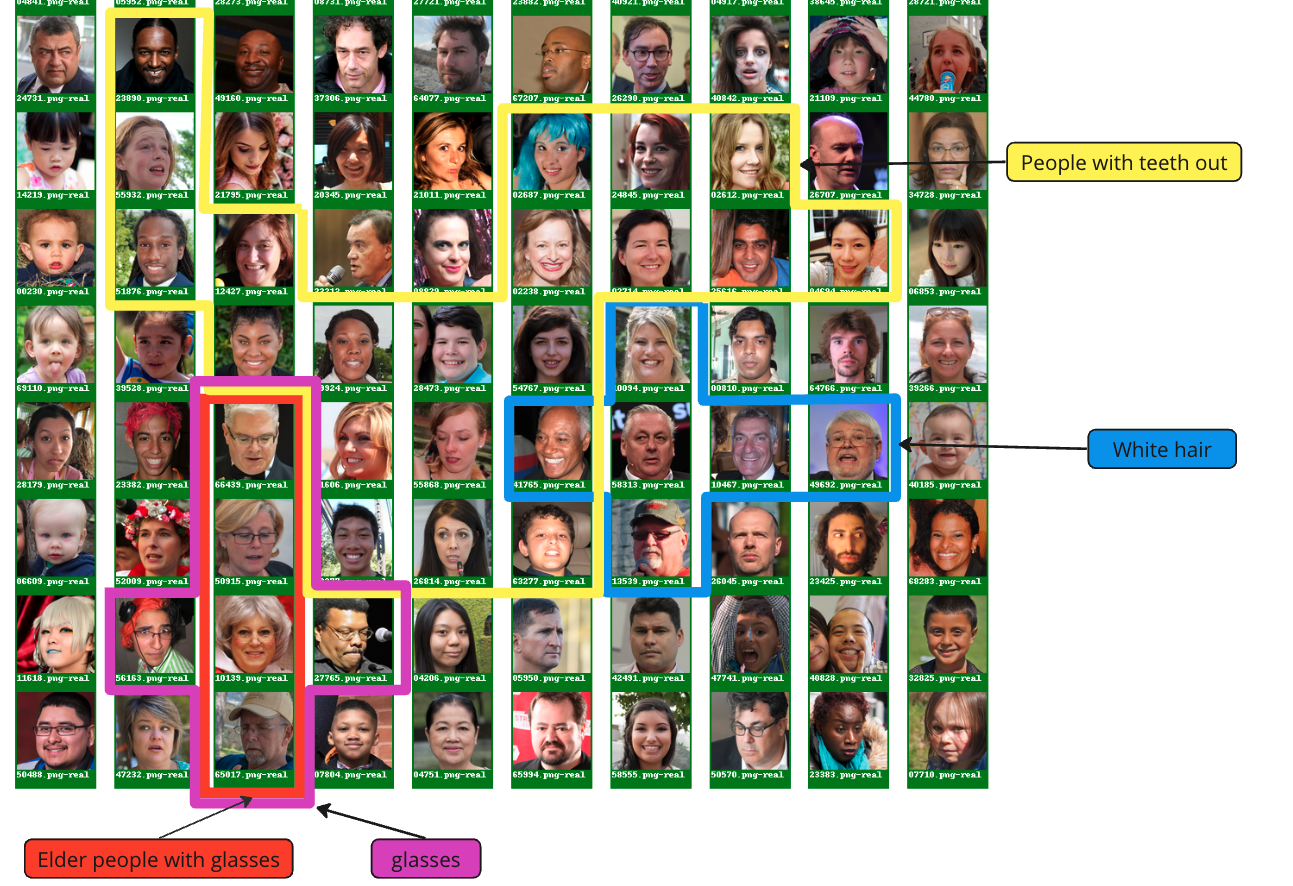

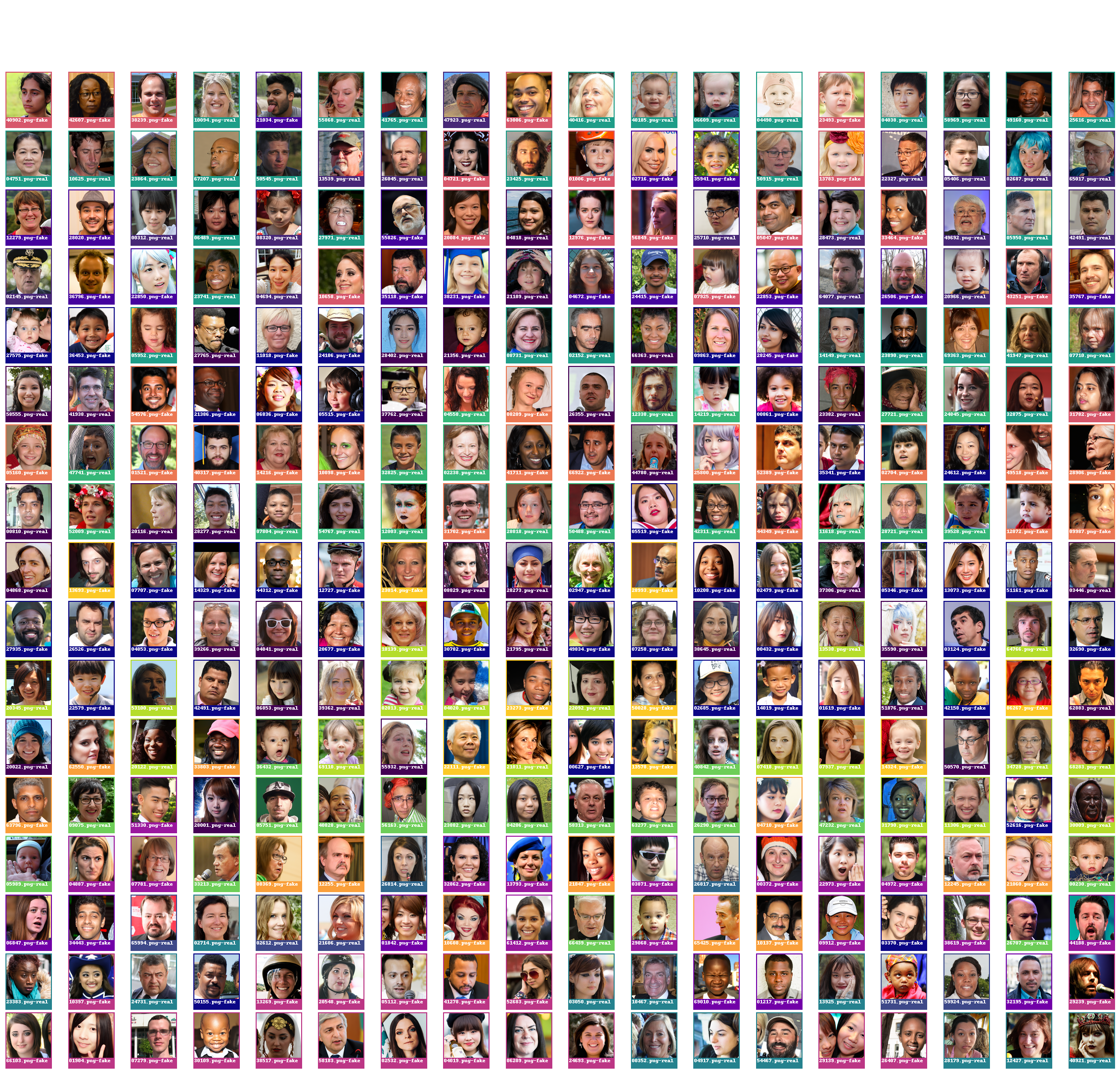

After training ResNet50, we deploy the fine-tuned model on Hugging Face and use it for visualization by clustering and plotting the feature maps alongside their corresponding images. In the resulting plot, similar images and predicted labels are grouped together, which suggests that the ResNet model learns both facial attributes and whether an image is real or fake. This information is encoded in the feature map of the final model layer.

We also projected the image features into a lower-dimensional (2D) space using UMAP, which helps in identifying patterns and relations between the images.
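One simple way to put a number on "similar images and predicted labels are grouped together" is to check how often an image's nearest neighbour in feature space carries the same label. The sketch below does this with cosine similarity in plain Python. It is not the clustering or UMAP code used in the notebooks; the function names and the toy vectors are made up for illustration.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def neighbour_label_agreement(features, labels):
    """Fraction of items whose most similar other item shares their label."""
    if len(features) < 2:
        raise ValueError("need at least two feature vectors")
    matches = 0
    for i, feat in enumerate(features):
        # Index of the most similar vector, excluding the item itself.
        nearest = max(
            (j for j in range(len(features)) if j != i),
            key=lambda j: cosine_similarity(feat, features[j]),
        )
        matches += labels[i] == labels[nearest]
    return matches / len(features)


# Toy 3-D vectors standing in for final-layer features (made up for illustration).
toy_features = [[1.0, 0.1, 0.0], [0.9, 0.2, 0.0], [0.0, 0.1, 1.0], [0.1, 0.0, 0.9]]
toy_labels = ["real", "real", "fake", "fake"]
agreement = neighbour_label_agreement(toy_features, toy_labels)
```

A value close to 1 would be consistent with well-separated real and fake groups, while a value near the majority-class rate would suggest the features do not separate the two classes.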

We upload the models to Hugging Face and deploy them using Hugging Face and Streamlit:

- Fake Image Detection: try the MobileNetV2 model.

- AI image generator & Fake image detector: try the AI image generator and the ResNet fake image detector.

Pull requests are welcome! If you would like to contribute to this project, please fork the repository and create a new branch for your changes. Submit a pull request when you are ready to merge your changes back into the main branch.

This project is licensed under the MIT License - see the LICENSE file for details.

- Sabithaa, R., Aruna, A., Karthik, S., & Shanthini, J. (2020). Enhanced model for fake image detection (EMFID) using convolutional neural networks with histogram and wavelet based feature extractions. Journal of Ambient Intelligence and Humanized Computing, 11(7), 3005-3018.

- He, K., Zhang, X., Ren, S., & Sun, J. (2016). Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 770-778).

- Zeyang S., Zheng L., Ning Y., and Yang Z. (2022). DE-FAKE: Detection and Attribution of Fake Images Generated by Text-to-Image Generation Models. arXiv preprint arXiv:2210.06998.

- Riccardo C., Davide C., Giada Z., Giovanni P., Koki N., and Luisa V. (2022). On the Detection of Synthetic Images Generated by Diffusion Models. arXiv preprint arXiv:2211.00680v1.

- Utkarsh O., Yuheng L., and Yong Jae L. (2023). Towards Universal Fake Image Detectors that Generalize Across Generative Models. arXiv preprint arXiv:2302.10174v1.

- Rezende, E. R. S. D., Ruppert, G. C. S., & Carvalho, T. (2017). Detecting Computer Generated Images with Deep Convolutional Neural Networks. In Proceedings of the 30th SIBGRAPI Conference on Graphics, Patterns and Images, Niteroi, Brazil, 17–20 October 2017, pp. 71–78.

- Ye Y., Weitong H., Wei Z., Ting W., and Yun-Qing S. (2018). Distinguishing Computer-generated Graphics from Natural Images Based on Sensor Pattern Noise and Deep Learning. arXiv preprint arXiv:1803.09403.

- Bekhet S. & Alahmer H. (2021). A Robust Deep Learning Approach for Glass Detection in Non-standard Facial Images. IET Biom., 10, 74-86.

- Falko M., Christian R., and Marc S. (2019). Exploiting visual artifacts to expose deepfakes and face manipulations. In 2019 IEEE Winter Applications of Computer Vision Workshops (WACVW), pages 83–92. IEEE.

- Andreas R., Davide C., Luisa V., Christian R., Justus T., and Matthias N. (2019). Faceforensics++: Learning to detect manipulated facial images. In Proceedings of the IEEE International Conference on Computer Vision, pages 1–11.

- Lingzhi L., Jianmin B., Ting Z., Hao Y., Dong C., Fang W., and Baining G. (2020). Face x-ray for more general face forgery detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 5001–5010.

- Yuyang Q., Guojun Y., Lu S., Zixuan C., and Jing S. (2020). Thinking in frequency: Face forgery detection by mining frequency-aware clues. In the European Conference on Computer Vision, pages 86–103. Springer.

- Xi W., Zhen X., YuTao G., and Yu X. (2020). Sstnet: Detecting manipulated faces through spatial, steganalysis and temporal features. In ICASSP 2020-2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pages 2952–2956. IEEE.

- Xin Y., Yuezun L., and Siwei L. (2019). Exposing deep fakes using inconsistent head poses. In IEEE International Conference on Acoustics, Speech and Signal Processing, ICASSP 2019, Brighton, United Kingdom, May 12-17, 2019, pages 8261–8265. IEEE.

- Mingxing T. and Quoc V. Le. (2019). EfficientNet: Rethinking model scaling for convolutional neural networks. In Kamalika Chaudhuri and Ruslan Salakhutdinov, editors, Proceedings of the 36th International Conference on Machine Learning, ICML 2019, 9-15 June 2019, Long Beach, California, USA, volume 97 of Proceedings of Machine Learning Research, pages 6105–6114. PMLR.

- Francois Chollet. (2017). Xception: Deep Learning with Depthwise Separable Convolutions. In IEEE Conference on Computer Vision and Pattern Recognition.

- Trpakov, D. (2022). Vit Face Expression Model. Hugging Face. https://huggingface.co/trpakov/vit-face-expression

- Siddharth Mandgi (2021). Glasses Detection - OpenCV, DLIB & Edge Detection. MLearning.ai. https://medium.com/mlearning-ai/glasses-detection-opencv-dlib-bf4cd50856da

- O. M. Parkhi, A. Vedaldi, A. Zisserman. (2015). Deep Face Recognition. British Machine Vision Conference.