MapReader is an end-to-end computer vision (CV) pipeline for exploring and analyzing images at scale.

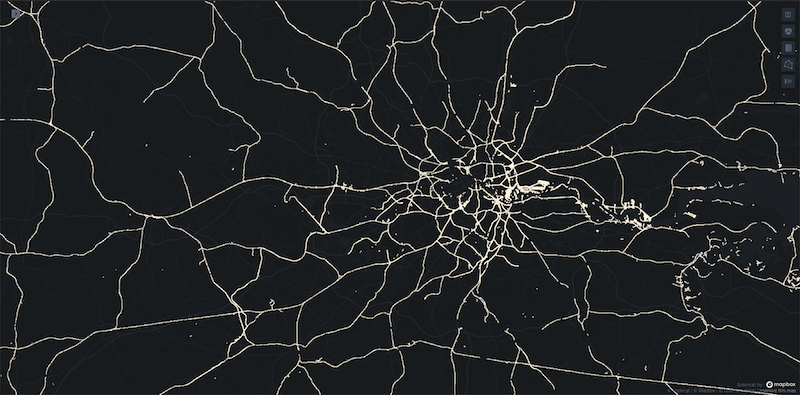

MapReader was developed in the Living with Machines project to analyze large collections of historical maps, but it is a generalizable computer vision pipeline that can be applied to images in a wide variety of domains. See Gallery for some examples.

Refer to the tutorials/examples in the Use cases section for more details on MapReader's functionalities for non-geospatial and geospatial images.

- Gallery
- What is MapReader?
- Overview
- Installation and setup
- Use cases
- How to contribute
- How to cite MapReader
- Credits and re-use terms
  - Digitized maps: MapReader can retrieve maps from NLS via tileserver. Read the re-use terms in this section.
  - Metadata: the metadata files are stored at `mapreader/persistent_data`. Read the re-use terms in this section.
- Acknowledgements

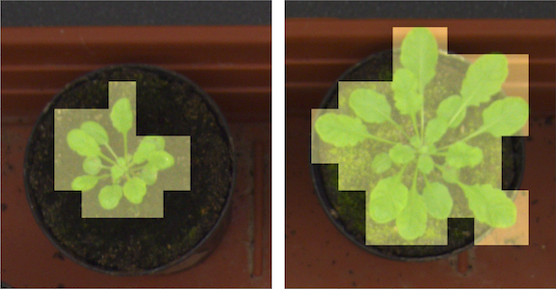

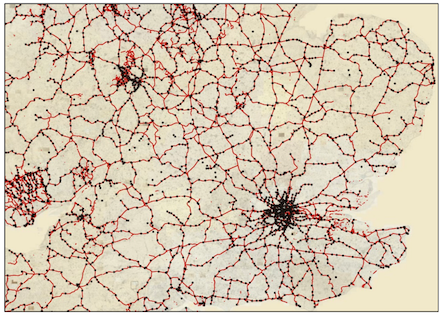

MapReader is a groundbreaking interdisciplinary tool that emerged from a specific set of geospatial historical research questions. It was inspired by methods in biomedical imaging and geographic information science, which were adapted for annotation and use by historians (see, for example, the JVC and MapReader papers). The success of the tool subsequently generated interest from plant phenotype researchers working with large image datasets, so MapReader is an example of cross-pollination between the humanities and the sciences made possible by reproducible data science.

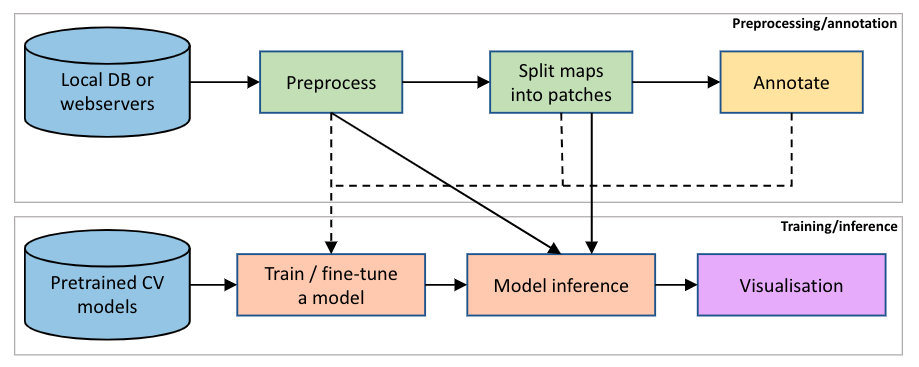

MapReader has two main components, preprocessing/annotation and training/inference, as shown in this figure.

It provides a set of tools to:

- load images or maps stored locally, or retrieve maps via web servers (e.g., tileservers, which can be used to retrieve maps from OpenStreetMap (OSM), the National Library of Scotland (NLS), or elsewhere).

  ⚠️ Refer to the credits and re-use terms section if you are using digitized maps or metadata provided by NLS.

- preprocess images or maps (e.g., dividing them into patches, resampling the images, removing borders outside the neatline, or reprojecting the map).

- annotate images or maps or their patches (i.e., slices of an image or map) using an interactive annotation tool.

- train, fine-tune, and evaluate various CV models.

- predict labels (i.e., model inference) on large sets of images or maps.

Other functionalities include:

- various plotting tools using, e.g., matplotlib, cartopy, Google Earth, and kepler.gl.

- computation of the mean/standard deviation of pixel intensities of image patches.
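To make the patch idea concrete, here is a minimal, library-independent sketch of dividing an image into square patches and computing the mean/standard deviation of each patch's pixel intensities. This is not MapReader's API: the function names `slice_into_patches` and `patch_stats` are hypothetical, and plain Python lists of grayscale values stand in for the image arrays a real pipeline would load.

```python
from statistics import mean, pstdev


def slice_into_patches(image, patch_size):
    """Split a 2D grayscale image (a list of pixel rows) into square patches.

    Patches are keyed by the (row, column) offset of their top-left pixel.
    Patches along the right/bottom edges are smaller than patch_size when the
    image dimensions are not multiples of patch_size.
    """
    if patch_size <= 0:
        raise ValueError("patch_size must be positive")
    height, width = len(image), len(image[0])
    patches = {}
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            patches[(top, left)] = [
                row[left:left + patch_size] for row in image[top:top + patch_size]
            ]
    return patches


def patch_stats(patch):
    """Return the mean and (population) standard deviation of a patch's pixels."""
    pixels = [value for row in patch for value in row]
    return mean(pixels), pstdev(pixels)


if __name__ == "__main__":
    # A toy 4x4 grayscale "image" standing in for a real map sheet or photo.
    image = [
        [0, 2, 10, 10],
        [2, 0, 10, 10],
        [5, 5, 20, 30],
        [5, 5, 30, 20],
    ]
    for offset, patch in slice_into_patches(image, 2).items():
        print(offset, patch_stats(patch))
```

Keying patches by their pixel offset keeps each patch traceable back to its position in the parent image, which is what later allows patch-level labels or predictions to be placed back onto the original image or map.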

We recommend installation via Anaconda (refer to the Anaconda website and follow the instructions).

- Create a new environment for `mapreader` called `mr_py38`: `conda create -n mr_py38 python=3.8`

- Activate the environment: `conda activate mr_py38`

- Install `mapreader`: `pip install mapreader`

  To work with geospatial images (e.g., maps): `pip install "mapreader[geo]"`

- We have provided some Jupyter Notebooks to showcase MapReader's functionalities. To allow the newly created `mr_py38` environment to show up in the notebooks: `python -m ipykernel install --user --name mr_py38 --display-name "Python (mr_py38)"`

- Continue with the examples in Use cases!

⚠️ On Windows and for geospatial images (e.g., maps), you might need to do the following:

- Activate the environment: `conda activate mr_py38`

- Install rasterio and fiona manually: `conda install -c conda-forge rasterio=1.2.10` and `conda install -c conda-forge fiona=1.8.20`

- Install git: `conda install git`

- Install MapReader: `pip install git+https://github.com/Living-with-machines/MapReader.git`

- Open Jupyter Notebook (if you want to test/work with the notebooks in the "examples" directory): `cd /path/to/MapReader`, then `jupyter notebook`

Alternatively, install MapReader from source:

- Clone the `mapreader` source code: `git clone https://github.com/Living-with-machines/MapReader.git`

- Install: `cd /path/to/MapReader`, then `pip install -v -e .`

  To work with geospatial images (e.g., maps): `cd /path/to/MapReader`, then `pip install -e ."[geo]"`

- We have provided some Jupyter Notebooks to showcase MapReader's functionalities. To allow the newly created `mr_py38` environment to show up in the notebooks: `python -m ipykernel install --user --name mr_py38 --display-name "Python (mr_py38)"`

- Continue with the examples in Use cases!
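Before opening the notebooks, it can help to confirm that the active environment actually sees the installed packages. The following is a small, standard-library-only sketch: `check_environment` is a hypothetical helper, not part of MapReader, and the Python 3.8 minimum simply mirrors the `python=3.8` used when creating `mr_py38` above rather than a documented requirement.

```python
import importlib.util
import sys


def check_environment(packages=("mapreader",), min_python=(3, 8)):
    """Report whether the interpreter and the given top-level packages look usable.

    Returns a dict with a "python_ok" flag plus one True/False entry per package.
    A package counts as present if it can be found on the import path; this does
    not guarantee that importing it succeeds (e.g. missing compiled dependencies).
    """
    report = {"python_ok": sys.version_info[:2] >= tuple(min_python)}
    for name in packages:
        report[name] = importlib.util.find_spec(name) is not None
    return report


if __name__ == "__main__":
    # "rasterio" and "fiona" are only expected if the [geo] extras were installed.
    for key, ok in check_environment(("mapreader", "rasterio", "fiona")).items():
        print(f"{key}: {'OK' if ok else 'missing'}")
```

Run it with the `mr_py38` environment activated; a "missing" entry for `rasterio` or `fiona` on Windows is a hint to try the manual conda installation steps above.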

Tutorials are organized in Jupyter Notebooks. Follow the hyperlinks on input type names ("Non-Geospatial" or "Geospatial") to read guidance specific to those image types.

- Non-Geospatial:
  - classification_plant_phenotype
    - Goal: train/fine-tune PyTorch CV classifiers on plant patches in images (plant phenotyping example).
    - Dataset: example images taken from the openly accessible `CVPPP2014_LSV_training_data` dataset, available from https://www.plant-phenotyping.org/datasets-download.
    - Data access: locally stored
    - Annotations are done on plant patches (i.e., slices of each plant image).
    - Classifier: trained/fine-tuned PyTorch CV models.
  - classification_mnist
    - Goal: train/fine-tune PyTorch CV classifiers on MNIST.
    - Dataset: example images taken from http://yann.lecun.com/exdb/mnist/.
    - Data access: locally stored
    - Annotations are done on whole MNIST images, not on patches/slices of those images.
    - Classifier: trained/fine-tuned PyTorch CV models.
- Geospatial:
  - Maps:
    - classification_one_inch_maps_001
      - Goal: train/fine-tune PyTorch CV classifiers on historical maps.
      - Dataset: from the National Library of Scotland: OS one-inch, 2nd edition layer.
      - Data access: tileserver
      - Annotations are done on map patches (i.e., slices of each map).
      - Classifier: trained/fine-tuned PyTorch CV models.
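For the geospatial example, data access is via a tileserver. Many tileservers, including OpenStreetMap's, use the common XYZ ("slippy map") scheme, where a map at zoom level `z` is cut into a 2^z × 2^z grid of Web Mercator tiles addressed by `{z}/{x}/{y}`. The sketch below shows that standard tile arithmetic only; it is not MapReader's download code, the function names are hypothetical, and the URL template is a placeholder, since individual providers (NLS included) may use different URL layouts and have their own re-use terms.

```python
import math

# Web Mercator cannot represent the poles; tiles stop at roughly +/-85.0511 degrees.
MAX_LATITUDE = 85.05112878


def lonlat_to_tile(lon, lat, zoom):
    """Return the (x, y) XYZ tile indices containing a WGS84 point at a zoom level.

    x grows eastward from longitude -180; y grows southward from the top
    (northern) edge of the map, as in the XYZ convention (TMS flips y).
    """
    lat = max(-MAX_LATITUDE, min(MAX_LATITUDE, lat))
    n = 2 ** zoom  # number of tiles along each axis at this zoom level
    x = math.floor((lon + 180.0) / 360.0 * n)
    lat_rad = math.radians(lat)
    y = math.floor((1.0 - math.asinh(math.tan(lat_rad)) / math.pi) / 2.0 * n)
    # Clamp so that points on the far east/south edge stay on the last tile.
    return min(max(x, 0), n - 1), min(max(y, 0), n - 1)


def tile_url(template, x, y, zoom):
    """Fill an XYZ URL template such as 'https://tiles.example.org/{z}/{x}/{y}.png'."""
    return template.format(x=x, y=y, z=zoom)


if __name__ == "__main__":
    # Placeholder template; substitute the real tileserver URL and respect its terms.
    x, y = lonlat_to_tile(-3.19, 55.95, 10)
    print(tile_url("https://tiles.example.org/{z}/{x}/{y}.png", x, y, 10))
```

Downloading every `(x, y)` in the range spanned by a bounding box's corners, then stitching the tiles, is one straightforward way to assemble a map sheet that can afterwards be divided into patches for annotation.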

We welcome contributions related to new applications, both with geospatial images (other maps, remote sensing data, aerial photography) and non-geospatial images (for example, other scientific image datasets).

Please consider acknowledging MapReader if it helps you to obtain results and figures for publications or presentations, by citing:

Link: https://arxiv.org/abs/2111.15592

Kasra Hosseini, Daniel C. S. Wilson, Kaspar Beelen and Katherine McDonough (2021), MapReader: A Computer Vision Pipeline for the Semantic Exploration of Maps at Scale, arXiv:2111.15592.

and in BibTeX:

    @misc{hosseini2021mapreader,
      title={MapReader: A Computer Vision Pipeline for the Semantic Exploration of Maps at Scale},
      author={Kasra Hosseini and Daniel C. S. Wilson and Kaspar Beelen and Katherine McDonough},
      year={2021},
      eprint={2111.15592},
      archivePrefix={arXiv},
      primaryClass={cs.CV}
    }

MapReader can retrieve maps from NLS (National Library of Scotland) via webservers. For all the digitized maps (retrieved or locally stored), please note the re-use terms:

We have provided some metadata files in `mapreader/persistent_data`. For all these files, please note the re-use terms:

This work was supported by Living with Machines (AHRC grant AH/S01179X/1) and The Alan Turing Institute (EPSRC grant EP/N510129/1). Living with Machines, funded by the UK Research and Innovation (UKRI) Strategic Priority Fund, is a multidisciplinary collaboration delivered by the Arts and Humanities Research Council (AHRC), with The Alan Turing Institute, the British Library and the Universities of Cambridge, East Anglia, Exeter, and Queen Mary University of London.