Warning: this work is ongoing. Contact me for more information on current experiments not yet included here.

Train syntactic word embeddings from Ancient Greek treebanks (PROIEL or AGDT annotation schemes).

The setup relies on the integration of pyenv and poetry. Make sure you first install pyenv following these instructions and poetry following these instructions.

git clone https://github.com/npedrazzini/ancientgreek-syntactic-embeddings.git

Install Python 3.10.1. This is the version on which this project has been tested (on macOS and Linux/Ubuntu), so there is no guarantee that everything will run smoothly on other Python versions. To install it, run:

pyenv install 3.10.1

And set it as the global version:

pyenv global 3.10.1

Restart the terminal for the changes to take effect:

exec bash -l

Change directory to the current project:

cd ancientgreek-syntactic-embeddings

Create a virtual environment and install the project dependencies:

poetry install

Then, to start using the project scripts, activate the virtual environment:

poetry shell

You can now run a .py script as usual, for example:

python scripts/training/train_diach_emb.py

Ancient Greek treebanks in the PROIEL or AGDT formats can be downloaded from the following sources:

- PROIEL:
  - PROIEL AG treebank:
- AGDT:
  - Gorman treebank:
  - Papyri treebank:
  - Pedalion treebank:
  - Perseus treebank:

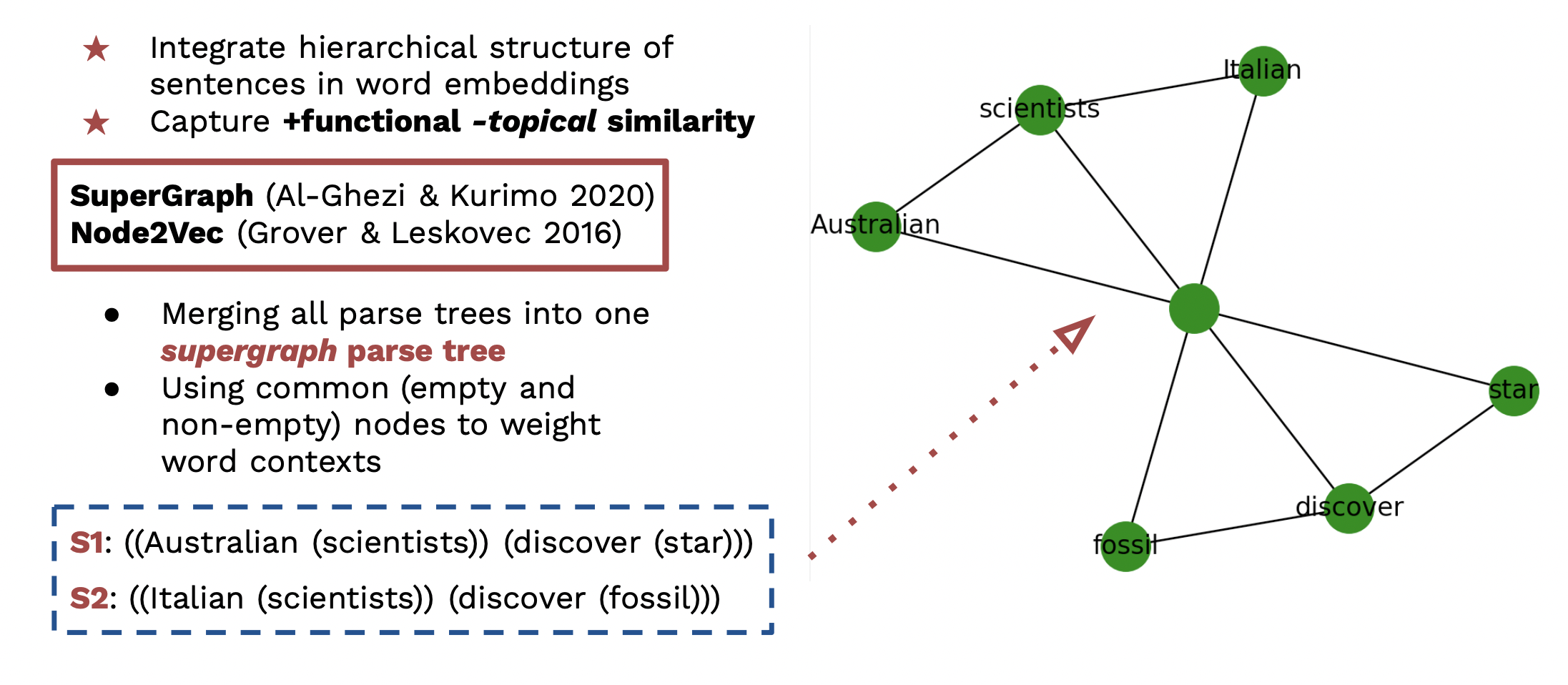

Fig. 1 - From Evaluating Language Models for Ancient Greek: Design, Challenges, and Future Directions (paper presented by Barbara McGillivray, Silvia Stopponi, Nilo Pedrazzini, Malvina Nissim & Saskia Peels-Matthey at International Conference of Historical Linguistics, ICHL 2022, Oxford, United Kingdom).

This is a modified version of the implementation of Al-Ghezi and Kurimo's (2020) [2] framework to learn syntactic embeddings based on multiple constituency parse trees merged into one 'supergraph'. The embeddings are then trained using node2vec [3].
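The supergraph idea can be illustrated with a minimal sketch. It is not the repository's implementation: the function names (`tree_edges`, `build_supergraph`, `random_walk`) are hypothetical, the bracket layout `(head (child) (child))` is assumed, and the walks are uniform, which corresponds to node2vec with p = q = 1. Unlabelled nodes are skipped here, so their children attach to the nearest labelled ancestor; that is also an assumption.

```python
import random
import re
from collections import defaultdict


def tree_edges(tree):
    """Return (parent, child) label pairs from one bracketed tree string."""
    edges, stack = [], []
    for tok in re.findall(r"\(|\)|[^\s()]+", tree):
        if tok == "(":
            stack.append(None)          # node opened, label not seen yet
        elif tok == ")":
            stack.pop()
        else:
            stack[-1] = tok             # label of the node just opened
            # nearest labelled ancestor (assumption: empty nodes are skipped)
            parent = next((s for s in reversed(stack[:-1]) if s is not None), None)
            if parent is not None:
                edges.append((parent, tok))
    return edges


def build_supergraph(trees):
    """Merge the edges of all trees into one undirected graph keyed by label."""
    graph = defaultdict(set)
    for tree in trees:
        for parent, child in tree_edges(tree):
            graph[parent].add(child)
            graph[child].add(parent)
    return graph


def random_walk(graph, start, length, rng):
    """Uniform random walk over the supergraph (node2vec with p = q = 1)."""
    walk = [start]
    while len(walk) < length:
        neighbours = sorted(graph.get(walk[-1], ()))
        if not neighbours:
            break
        walk.append(rng.choice(neighbours))
    return walk


# Two toy trees that share the node "man"; the labels are illustrative only.
trees = ["(say (man) (word))", "(see (man) (city))"]
graph = build_supergraph(trees)
print(sorted(graph["man"]))  # ['say', 'see']
print(random_walk(graph, "man", 5, random.Random(0)))
```

Because nodes are keyed by label, a lemma that occurs in several trees becomes a single node linking them. Walks like these are what a skip-gram model is then trained on to produce the embeddings.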

You can generate parse trees from Ancient Greek treebanks in either the PROIEL or the AGDT annotation scheme. Follow the steps below to process either.

First, convert the Ancient Greek treebanks in the PROIEL or AGDT schemes (in their .xml formats) to parenthetical (parse tree) format, without the dependency tags. This is the input needed for node2vec.

Note: we use lemmas, not token forms, and we remove stopwords. Multiple empty parentheses are kept because they indicate an empty node.
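As a rough illustration of this conversion, the sketch below turns one dependency-annotated sentence into a bracketed tree of lemmas. It makes several assumptions that are not confirmed by the scripts: tokens are given as hypothetical `(token_id, head_id, lemma)` tuples rather than read from .xml, the stoplist is a placeholder, a stopword is dropped with its dependents promoted to its head, and a token whose lemma is `None` stands for an empty node rendered as bare parentheses.

```python
from collections import defaultdict

# Placeholder stoplist for illustration only; not the project's actual list.
STOPWORDS = {"ὁ", "καί", "δέ"}


def to_parenthetical(tokens, stopwords=STOPWORDS):
    """Render (token_id, head_id, lemma) tuples as a bracketed lemma tree.

    head_id is None for the root; lemma is None for an empty node.
    Dependency relation labels are deliberately not part of the input.
    """
    children = defaultdict(list)
    lemma = {}
    root = None
    for token_id, head_id, lem in tokens:
        lemma[token_id] = lem
        if head_id is None:
            root = token_id
        else:
            children[head_id].append(token_id)

    def render(token_id):
        parts = [p for child in children[token_id] for p in render(child)]
        label = lemma[token_id]
        if label in stopwords:
            return parts  # drop the stopword, promote its dependents
        inner = " ".join(([label] if label else []) + parts)
        return ["(" + inner + ")"]

    return " ".join(render(root))


# Lemmas for a toy sentence: article, noun, verb (root), object.
sentence = [
    (1, 2, "ὁ"),
    (2, 3, "ἄνθρωπος"),
    (3, None, "λέγω"),
    (4, 3, "λόγος"),
]
print(to_parenthetical(sentence))  # (λέγω (ἄνθρωπος) (λόγος))
```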

There are separate scripts for the PROIEL and the AGDT schemes:

For PROIEL-formatted treebanks:

python ./scripts/preprocess/xml-to-parenth-proiel.py

For AGDT-formatted treebanks:

python ./scripts/preprocess/xml-to-parenth-agdt.py

Note: the scripts above assume that all .xml files are under a single ./PROIEL_treebanks/ or ./AGDT_treebanks/ folder, respectively. If your files are organised differently, make sure you adjust the variables allproiel and allagdt, respectively, before running the scripts.
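How `allproiel` and `allagdt` are defined inside the scripts is not shown here. As a sketch only, assuming they hold lists of .xml paths, a helper like the hypothetical `collect_xml` below could gather files from a flat folder, or from nested subfolders when `recursive=True`.

```python
from pathlib import Path


def collect_xml(folder, recursive=False):
    """Return the .xml files under `folder`, sorted by path.

    With recursive=True, subfolders are searched as well.
    """
    base = Path(folder)
    matches = base.rglob("*.xml") if recursive else base.glob("*.xml")
    return sorted(matches)


# Assumed default layout from the note above; both lists are empty
# if the folders do not exist.
allproiel = collect_xml("./PROIEL_treebanks")
allagdt = collect_xml("./AGDT_treebanks")
print(len(allproiel), "PROIEL files,", len(allagdt), "AGDT files")
```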

After running either or both of the above, make sure you run:

python ./scripts/preprocess/mergetrees.py

This will generate a tree.txt file, containing all parenthetical trees you wish to use to train the node2vec model.
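Conceptually, this merging step amounts to concatenating the converted trees into one file. The sketch below is an assumption about that behaviour, not the contents of mergetrees.py: the function name `merge_trees` is hypothetical, and it assumes one tree per line in each input file.

```python
from pathlib import Path


def merge_trees(inputs, output):
    """Write every non-empty line of the input files to `output`.

    Returns the number of trees written. Assumes one tree per line.
    """
    out_path = Path(output)
    out_path.parent.mkdir(parents=True, exist_ok=True)
    count = 0
    with out_path.open("w", encoding="utf-8") as out:
        for path in inputs:
            text = Path(path).read_text(encoding="utf-8")
            for line in text.splitlines():
                tree = line.strip()
                if tree:
                    out.write(tree + "\n")
                    count += 1
    return count
```

A call such as `merge_trees(["proiel_trees.txt", "agdt_trees.txt"], "outputs/mymodel/tree.txt")` would then produce the merged file; the two input file names and `mymodel` are placeholders.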

After generating parse trees using the preprocessing scripts provided, you should have a tree.txt file under outputs/<modelname>/. This is the input to the training script. You do not need to change anything in the script: when you run it, you will be asked to enter the <modelname> directly in the terminal, and the script will find the right file for you.

Run:

train.py

This will train a node2vec model and save the output vectors in a .txt file, in non-binary format, which can then be explored using gensim's KeyedVectors as usual. You can find a minimal example of an exploration script in scripts/exploration/most_similar.py.
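To show what such an exploration involves, here is a dependency-free sketch. It assumes the .txt file uses the plain-text word2vec layout (a header line with vocabulary size and dimensionality, then one lemma and its vector per line), which is the layout that gensim's `KeyedVectors.load_word2vec_format(path, binary=False)` reads. The helper names are hypothetical and this is not the code in most_similar.py.

```python
import math


def load_vectors(path):
    """Read a word2vec-style text file into a {word: [floats]} dict."""
    vectors = {}
    with open(path, encoding="utf-8") as handle:
        _vocab_size, dim = map(int, handle.readline().split())
        for line in handle:
            parts = line.rstrip().split(" ")
            if len(parts) != dim + 1:
                continue  # skip blank or malformed lines
            vectors[parts[0]] = [float(x) for x in parts[1:]]
    return vectors


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def most_similar(vectors, word, topn=5):
    """Return the topn (word, similarity) pairs closest to `word`."""
    target = vectors[word]
    scored = [
        (other, cosine(target, vec))
        for other, vec in vectors.items()
        if other != word
    ]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:topn]
```

With gensim installed, the equivalent query would be `KeyedVectors.load_word2vec_format(path, binary=False).most_similar(lemma)`.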

[1] Omer Levy and Yoav Goldberg. 2014. Dependency-Based Word Embeddings. In Proceedings of the 52nd Annual Meeting of the Association for Computational Linguistics (Volume 2: Short Papers), pages 302–308, Baltimore, Maryland. Association for Computational Linguistics.

[2] Ragheb Al-Ghezi and Mikko Kurimo. 2020. Graph-based Syntactic Word Embeddings. In Proceedings of the Graph-based Methods for Natural Language Processing (TextGraphs), pages 72–78, Barcelona, Spain (Online). Association for Computational Linguistics. https://aclanthology.org/2020.textgraphs-1.8.pdf

[3] Aditya Grover and Jure Leskovec. 2016. node2vec: Scalable Feature Learning for Networks. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining.