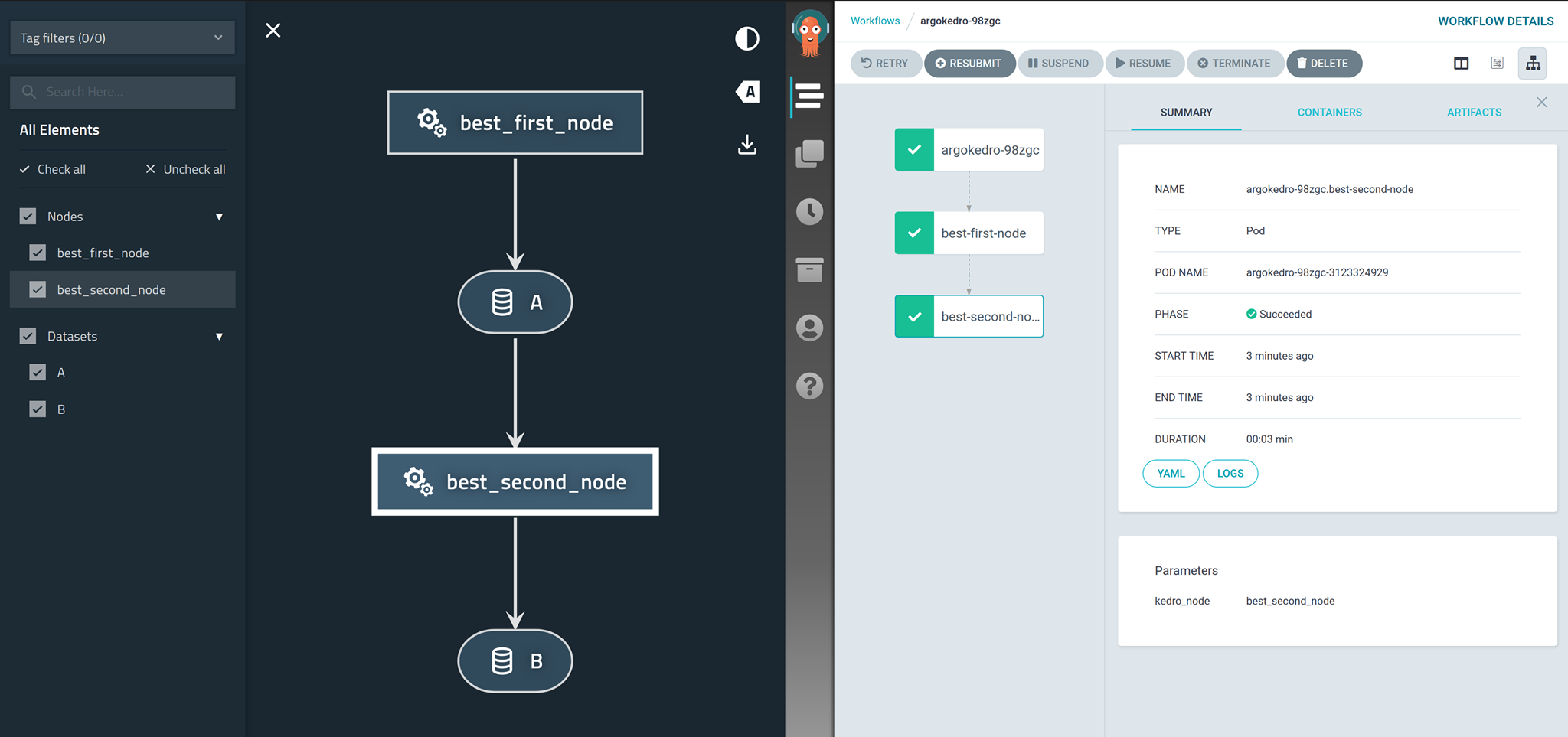

Converting kedro pipelines to argo pipelines.

- Free software: BSD 3-Clause License

pip install kedro-argo

You can also install the in-development version with:

pip install https://github.com/nraw/kedro-argo/archive/master.zip

- This package is meant to be used inside an existing Kedro project.

- The Argo CLI is needed for the deployment step. It's also assumed that Argo is already installed on your Kubernetes instance.

- You must specify an image name as a parameter. You can generate the image using Kedro-docker.

- The workflow runs in Argo, which means every step runs in its own container. All datasets must therefore be passed between containers, or the pipeline will fail. In practice, every dataset should be saved either externally (S3, Azure, etc.) or in a shared folder that your deployment has access to.
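The last requirement can be checked roughly before deploying. The sketch below is only an illustration, not part of kedro-argo: `find_unshared_datasets`, the scheme list, the `/mnt/shared` root and the sample catalog are all hypothetical, and the check only looks at a `filepath` key, so datasets that are external in other ways (for example SQL tables) would be flagged incorrectly.

```python
from urllib.parse import urlparse

# Assumption: URL schemes treated as "external storage" for this sketch.
REMOTE_SCHEMES = {"s3", "s3a", "gs", "gcs", "abfs", "abfss", "wasbs", "http", "https"}


def find_unshared_datasets(catalog, shared_roots=("/mnt/shared",)):
    """Return names of catalog entries that are neither remote nor under a shared root.

    `catalog` is a plain dict shaped like a parsed catalog.yml (hypothetical input).
    """
    unshared = []
    for name, entry in catalog.items():
        filepath = entry.get("filepath")
        if filepath is None:
            # No filepath (e.g. an in-memory dataset): nothing survives the container.
            unshared.append(name)
            continue
        if urlparse(filepath).scheme in REMOTE_SCHEMES:
            continue  # stored externally
        if any(filepath.startswith(root.rstrip("/") + "/") for root in shared_roots):
            continue  # stored in a folder assumed to be mounted in every container
        unshared.append(name)
    return unshared


# Illustrative catalog; dataset names, types and paths are made up.
catalog = {
    "raw": {"type": "pandas.CSVDataSet", "filepath": "s3://my-bucket/raw.csv"},
    "features": {"type": "pandas.CSVDataSet", "filepath": "/mnt/shared/features.csv"},
    "model_input": {"type": "pandas.CSVDataSet", "filepath": "data/05_model_input/x.csv"},
    "scratch": {"type": "MemoryDataSet"},
}
print(find_unshared_datasets(catalog))  # ['model_input', 'scratch']
```

Any name this reports would need to be moved to external storage or a shared volume before the workflow can pass it from one step to the next.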

When installed, argo should be visible under your kedro commands if you're in a kedro project:

kedro

You then have two options for obtaining the YAML file: via Helm or via ytt. For the Helm route, first run:

kedro argo IMAGE_NAME

Add this repository to your helm charts:

helm repo add kedro-argo https://nraw.github.io/kedro-argo-helm/

Then either install it directly, passing kedro.yaml as the input values:

helm install -f templates/kedro.yaml kedro-argo kedro-argo/kedro-argo

Or pull it into your repository and change whatever you still need:

helm pull kedro-argo/kedro-argo --untar

For the ytt route, get the kedro.yaml file by running:

kedro argo --ytt IMAGE_NAME

You can now run:

ytt -f templates > argo.yaml

or, if you prefer, in Docker:

docker run --rm -it --name ytt -v $(pwd)/templates:/templates gerritk/ytt:latest -f /templates > argo.yaml

and finally

argo submit --watch argo.yaml
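The three ytt steps above can also be chained from a small script. This is a minimal sketch rather than anything shipped with kedro-argo: the function names and the example image name are hypothetical, and it only reuses the commands already shown. By default it prints the commands instead of running them.

```python
import shlex
import subprocess


def ytt_route_commands(image_name, templates_dir="templates", output="argo.yaml"):
    """Return the steps as (argv, stdout_file) pairs; stdout_file is None when not redirected."""
    return [
        (["kedro", "argo", "--ytt", image_name], None),   # writes kedro.yaml into templates
        (["ytt", "-f", templates_dir], output),            # renders the workflow to argo.yaml
        (["argo", "submit", "--watch", output], None),     # submits the workflow to Argo
    ]


def run_all(steps, dry_run=True):
    """Print each step (dry run) or execute it, stopping at the first failure."""
    for argv, stdout_file in steps:
        if dry_run:
            suffix = f" > {stdout_file}" if stdout_file else ""
            print(shlex.join(argv) + suffix)
        elif stdout_file:
            with open(stdout_file, "w") as out:
                subprocess.run(argv, stdout=out, check=True)
        else:
            subprocess.run(argv, check=True)


# Hypothetical image name; replace it with the image built for your project.
run_all(ytt_route_commands("my-registry/my-kedro-image:latest"))
```

Passing `dry_run=False` would execute the steps for real, which requires kedro, ytt and the Argo CLI to be on the PATH.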

Documentation: https://kedro-argo.readthedocs.io/

To run all the tests, run:

tox

Note: to combine the coverage data from all the tox environments, run:

| Platform | Command |
|---|---|
| Windows | `set PYTEST_ADDOPTS=--cov-append`, then `tox` |
| Other | `PYTEST_ADDOPTS=--cov-append tox` |
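If a single invocation that behaves the same on every platform is preferred, the environment variable can be set from Python instead of the shell. The helper names below are hypothetical and this is only a sketch; it assumes tox is installed and on the PATH.

```python
import os
import subprocess


def tox_env_with_cov_append(base_env=None):
    """Return a copy of the environment with PYTEST_ADDOPTS set to --cov-append."""
    env = dict(os.environ if base_env is None else base_env)
    env["PYTEST_ADDOPTS"] = "--cov-append"
    return env


def run_tox_with_cov_append():
    """Run tox so that each environment appends to the existing coverage data."""
    return subprocess.run(["tox"], env=tox_env_with_cov_append(), check=True)
```

Calling `run_tox_with_cov_append()` from the project root would then have the same effect as the platform-specific commands above.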