To test the performance of simple-data-analysis, we calculated the average temperature per decade and city using the daily temperatures from the Adjusted and Homogenized Canadian Climate Data (AHCCD).

We ran the same calculations with simple-data-analysis@1.8.1 (both NodeJS and Bun), simple-data-analysis@2.0.1 (NodeJS), simple-data-analysis@2.7.3 (NodeJS), Pandas (Python), and the tidyverse (R).

In each script, we:

- Load a CSV file (Importing)

- Select four columns, remove rows with missing temperatures, and convert date strings to dates and temperature strings to floats (Cleaning)

- Add a new column, decade, computed from each row's date (Modifying)

- Calculate the average temperature per decade and city (Summarizing)

- Write the cleaned-up data used to compute the averages to a new CSV file (Writing)
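To make the Cleaning, Modifying, and Summarizing steps concrete, here is a minimal sketch in plain TypeScript over rows already held in memory. It does not use simple-data-analysis, and it skips the Importing and Writing steps. The column names `city`, `date`, and `temp` are hypothetical stand-ins, not the actual AHCCD headers, and the decade is assumed to be the year rounded down to the nearest multiple of ten.

```typescript
// Hypothetical raw row shape: every value is a string, as read from a CSV.
type RawRow = { city: string; date: string; temp: string };

type CleanRow = { city: string; date: Date; temp: number; decade: number };

type DecadeAverage = { city: string; decade: number; meanTemp: number };

// Cleaning + Modifying: drop rows with missing temperatures,
// convert strings to Date / number, and add a decade column.
function clean(rows: RawRow[]): CleanRow[] {
  const out: CleanRow[] = [];
  for (const row of rows) {
    if (row.temp.trim() === "") continue; // missing temperature
    const date = new Date(row.date); // ISO "YYYY-MM-DD" strings parse as UTC
    const temp = Number.parseFloat(row.temp);
    if (Number.isNaN(temp) || Number.isNaN(date.getTime())) continue;
    // Assumed decade definition: 1987 -> 1980
    const decade = Math.floor(date.getUTCFullYear() / 10) * 10;
    out.push({ city: row.city, date, temp, decade });
  }
  return out;
}

// Summarizing: average temperature per decade and city.
function averagePerDecadeAndCity(rows: CleanRow[]): DecadeAverage[] {
  const groups = new Map<string, { city: string; decade: number; sum: number; count: number }>();
  for (const r of rows) {
    const key = `${r.city}|${r.decade}`;
    const g = groups.get(key) ?? { city: r.city, decade: r.decade, sum: 0, count: 0 };
    g.sum += r.temp;
    g.count += 1;
    groups.set(key, g);
  }
  return Array.from(groups.values()).map(({ city, decade, sum, count }) => ({
    city,
    decade,
    meanTemp: sum / count,
  }));
}
```

A single pass with a `Map` keyed on city and decade keeps the grouping linear in the number of rows, which matters at the 22-million-row scale of the full file.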

Each script was run ten times on a MacBook Pro (Apple M1 Pro, 16 GB of RAM). We report the average duration of each step along with its standard deviation.
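The averaging of the ten runs can be sketched as follows. This is an illustration only: the source does not say whether the population or the sample standard deviation was used, so this sketch assumes the sample version (dividing by n − 1), and the function name `summarizeDurations` is hypothetical.

```typescript
// Mean and sample standard deviation (n - 1 denominator) of repeated timings.
function summarizeDurations(durations: number[]): { mean: number; sd: number } {
  const n = durations.length;
  if (n < 2) {
    throw new Error("Need at least two runs for a sample standard deviation");
  }
  const mean = durations.reduce((acc, d) => acc + d, 0) / n;
  // Sum of squared deviations from the mean, divided by n - 1 (Bessel's correction).
  const variance = durations.reduce((acc, d) => acc + (d - mean) ** 2, 0) / (n - 1);
  return { mean, sd: Math.sqrt(variance) };
}
```

With only ten runs per script, the choice between n and n − 1 changes the reported deviation by about 5% (a factor of √(10/9)), so it is worth stating alongside the results.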

The charts displayed below come from this Observable notebook.

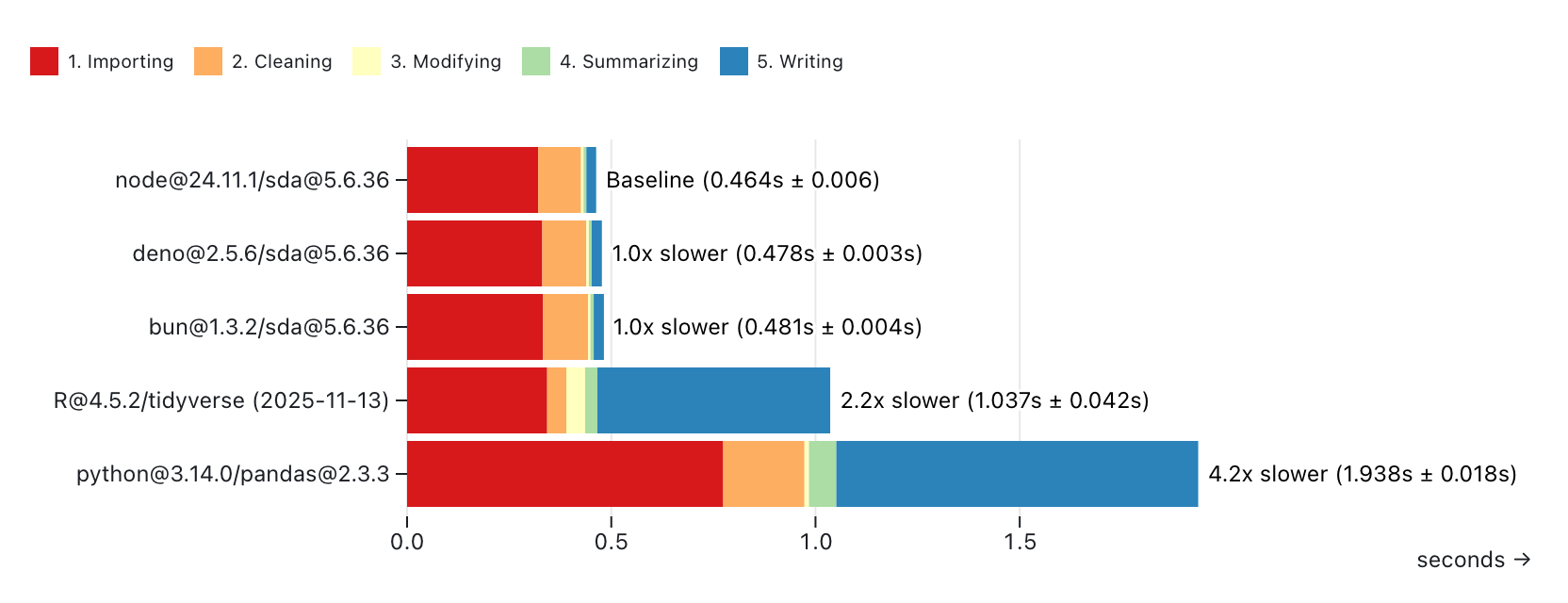

With ahccd-samples.csv:

- 74.7 MB

- 19 cities

- 20 columns

- 971,804 rows

- 19,436,080 data points

simple-data-analysis@1.8.1 was the slowest, but the simple-data-analysis@2.x.x versions are now the fastest of all the tools tested.

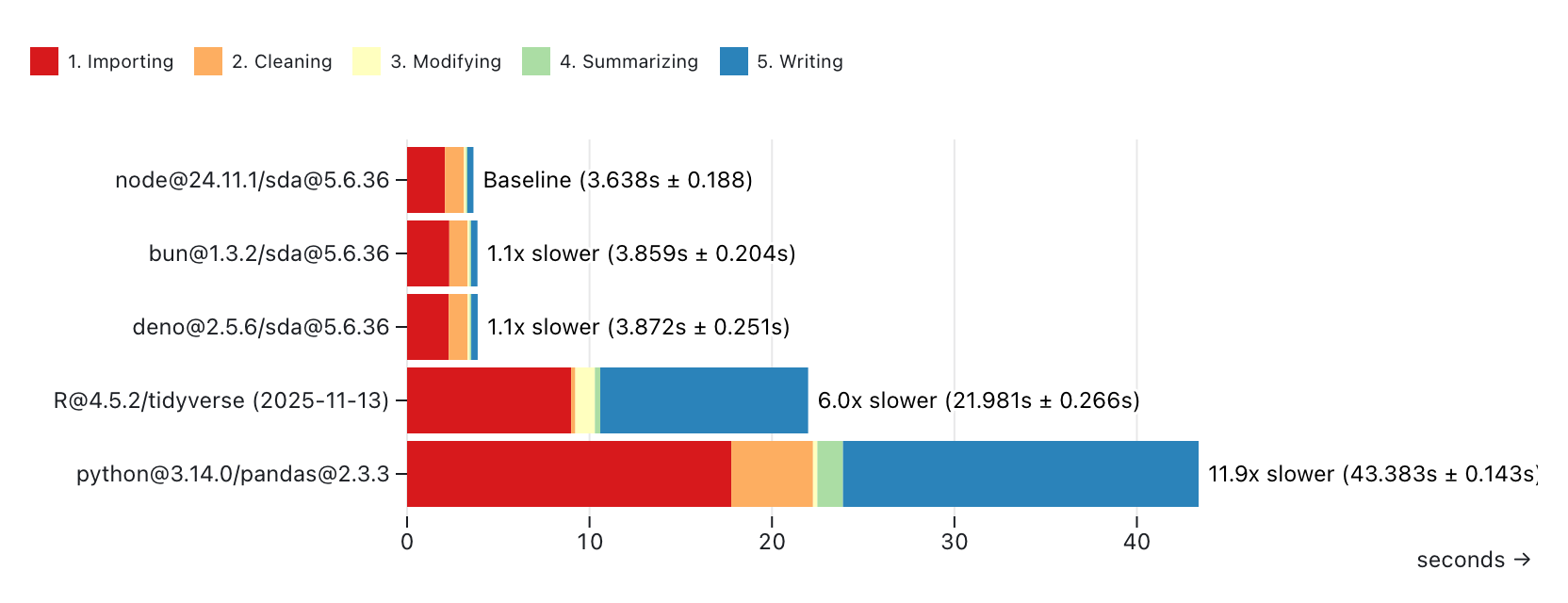

With ahccd.csv:

- 1.7 GB

- 773 cities

- 20 columns

- 22,051,025 rows

- 441,020,500 data points

The file was too big for simple-data-analysis@1.8.1, so that version is not included in this chart.

simple-data-analysis@2.0.1 was already fast, but simple-data-analysis@2.7.3 performs even better with large files.