While the adoption of machine learning and deep learning techniques continues to grow, many organizations find it difficult to deploy these sophisticated models into production. It is common to see data scientists build powerful models that are never deployed, either because of the complexity of the technology involved or because the process of pushing models into production is poorly understood.

As part of this talk, I will review several deployment design patterns for both real-time and batch use cases. I’ll show how these models can be deployed as scalable, distributed deployments within the cloud, scaled across Hadoop clusters, exposed as APIs, and embedded within streaming analytics pipelines. I will also touch on topics related to security, end-to-end governance, pitfalls, challenges, and useful tools across a variety of platforms. This presentation will involve demos and sample code for the deployment design patterns.

This repo contains a few of my ideas, strategies, and code for deploying your models in production and at scale.

• Deploy as a Batch Process

• Deploy as a Web Service (REST API)

• Deploy within a Web App

• Deploy online as part of a real-time data stream

• Deploy/Embed within Devices
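
To make the first two patterns more concrete, here is a minimal sketch in Python using only the standard library. It is not code from this repo: `predict` is a hypothetical stand-in for a trained model (in practice it would be loaded from a serialized artifact), and the names `score_batch`, `handle_request`, `ScoreHandler`, and `serve`, along with the JSON payload shape, are assumptions made for illustration. The point it tries to show is that the same model function can sit behind either a batch job or a web service.

```python
import csv
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def predict(features):
    """Hypothetical stand-in for a trained model: a fixed linear score."""
    weights = [0.5, -0.25]  # assumed coefficients, for illustration only
    return sum(w * x for w, x in zip(weights, features))


def score_batch(in_file, out_file):
    """Batch pattern: read every CSV row, score it, append the score."""
    reader = csv.reader(in_file)
    writer = csv.writer(out_file)
    for row in reader:
        if not row:  # skip blank lines
            continue
        features = [float(v) for v in row]
        writer.writerow(row + [predict(features)])


def handle_request(body):
    """Web-service pattern: score one JSON request body.

    Returns a (status_code, response_bytes) pair so the logic can be
    exercised without starting a server.
    """
    try:
        features = [float(v) for v in json.loads(body)["features"]]
    except (ValueError, KeyError, TypeError):
        error = {"error": 'expected {"features": [numbers]}'}
        return 400, json.dumps(error).encode()
    return 200, json.dumps({"score": predict(features)}).encode()


class ScoreHandler(BaseHTTPRequestHandler):
    """Thin HTTP wrapper around handle_request for POST requests."""

    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        status, payload = handle_request(self.rfile.read(length))
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)


def serve(port=8000):
    """Start the blocking HTTP server; not called automatically."""
    HTTPServer(("", port), ScoreHandler).serve_forever()
```

In this sketch, a scheduled job would call `score_batch` on an input and output file, while calling `serve()` would expose the same model to POST requests with a body such as `{"features": [2, 4]}`. A production service would more likely use a dedicated web framework and add authentication, logging, and input validation.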

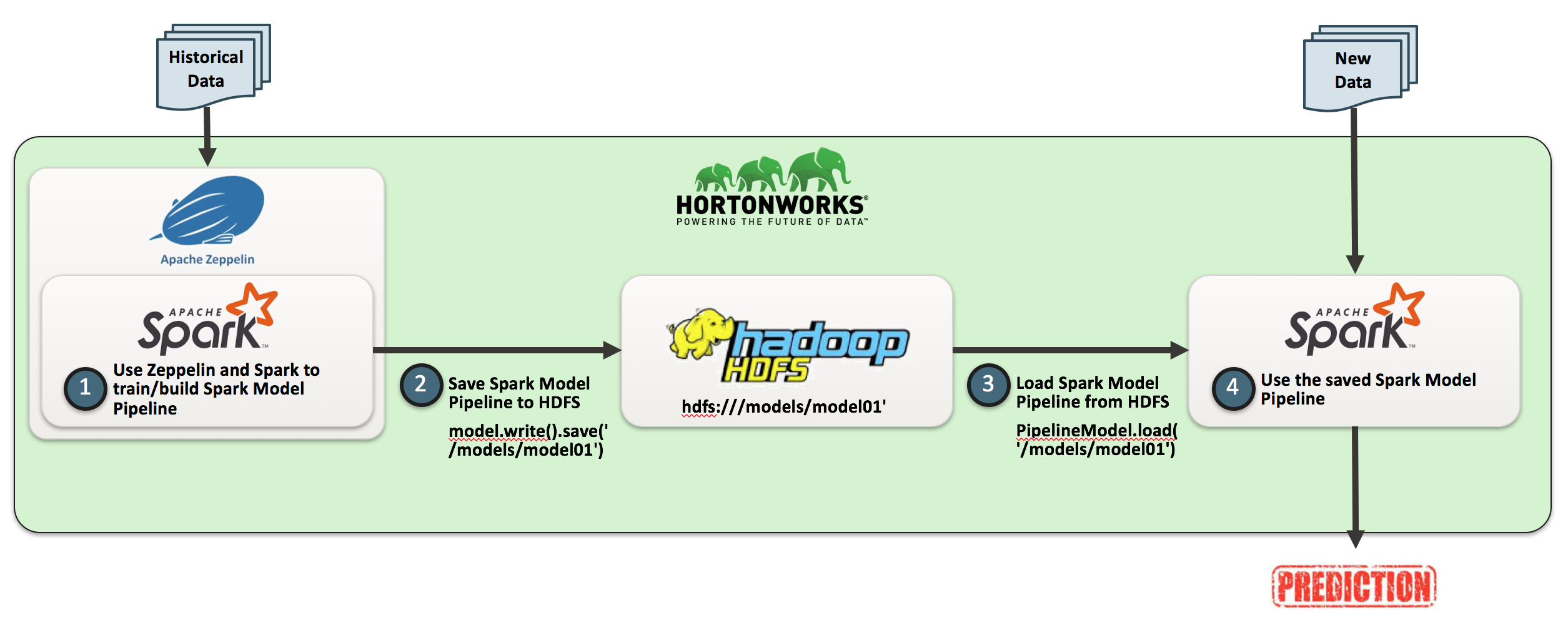

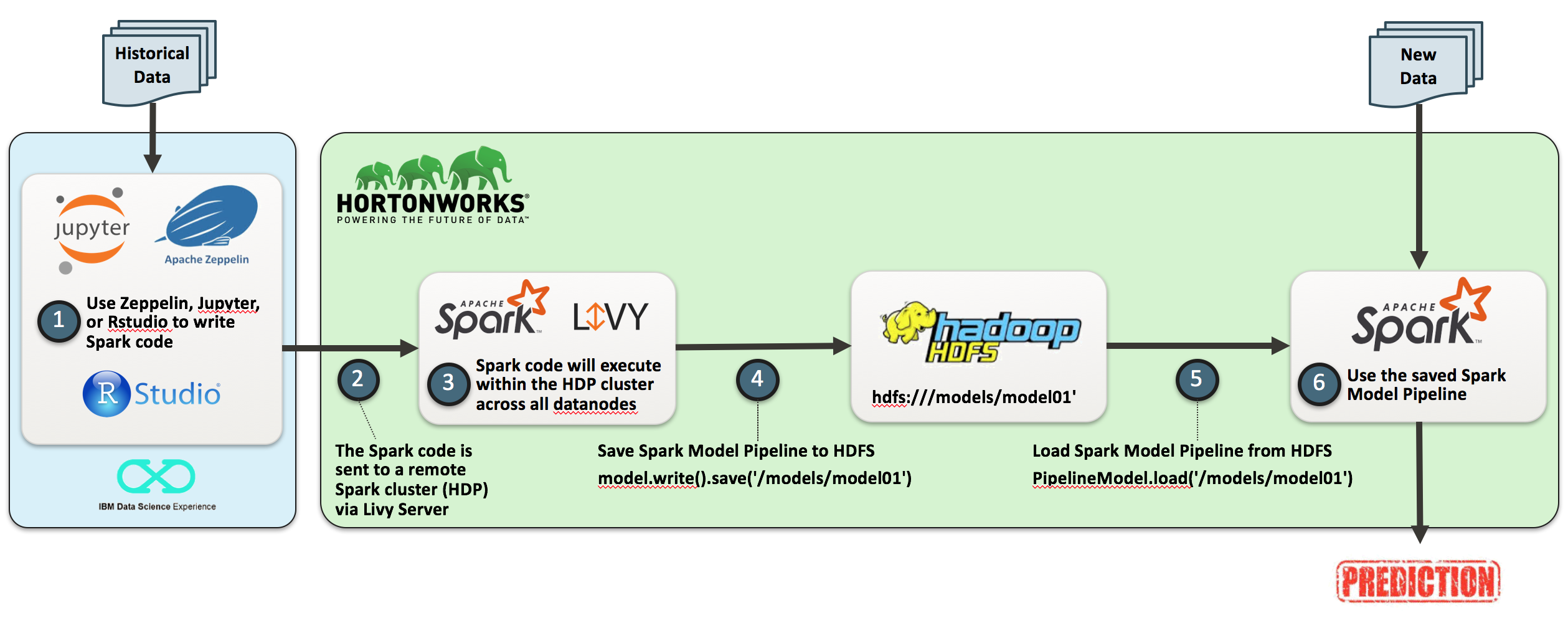

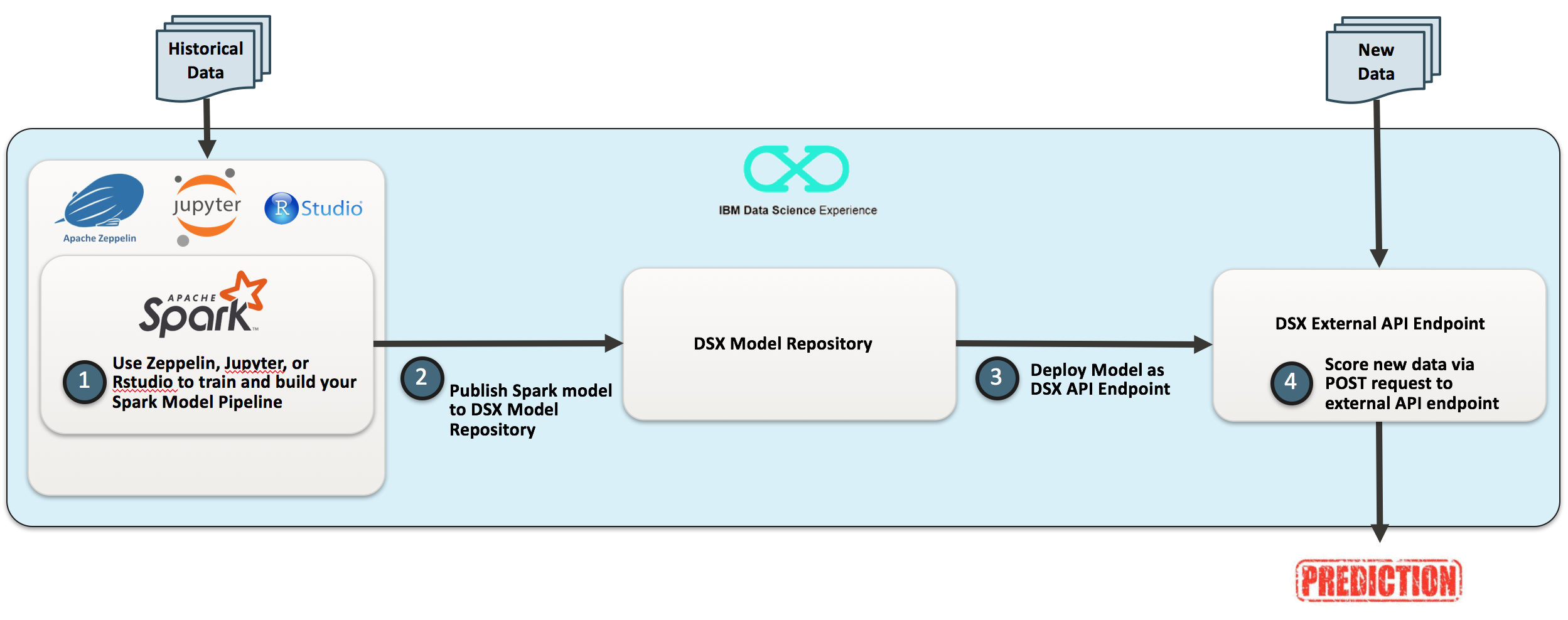

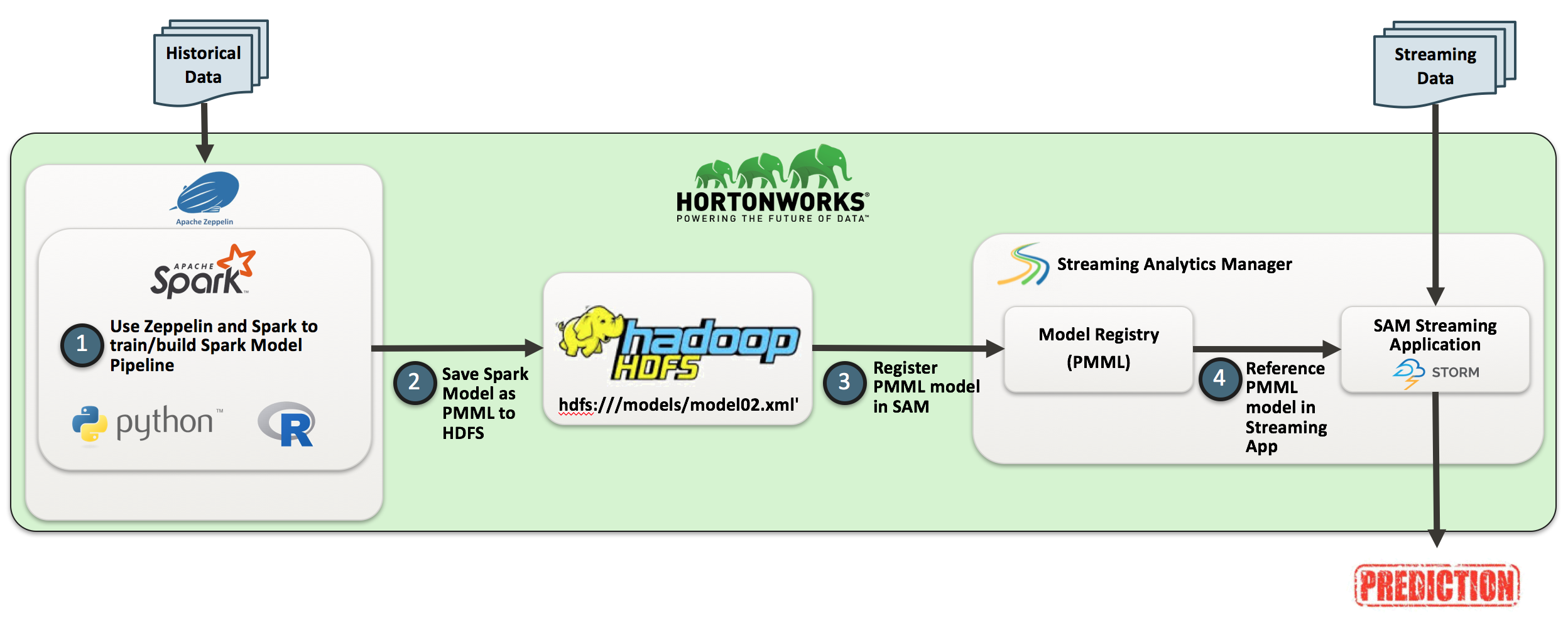

Based on these deployment options, here are high-level flows for several deployment designs, including the open source technologies used:

ML Deployment Design - Batch

ML Deployment Design - API Endpoint

ML Deployment Design - Real-time, Streaming Analytics
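
For the real-time, streaming design, a rough sketch of per-event scoring might look like the following. It assumes events arrive as an iterable of dictionaries, which stands in for whatever message source the pipeline actually uses. The `predict` function, the `score_stream` name, the event fields, and the rolling-window alert rule are all hypothetical and chosen only to illustrate scoring records one at a time while keeping a small amount of state.

```python
from collections import deque


def predict(features):
    """Hypothetical stand-in for a trained model: mean of the features."""
    return sum(features) / len(features)


def score_stream(events, window=3, threshold=0.8):
    """Score each event as it arrives.

    An event is flagged when the rolling mean of the last `window`
    scores exceeds `threshold` (an assumed alerting rule).
    """
    recent = deque(maxlen=window)  # keeps only the most recent scores
    for event in events:
        score = predict(event["features"])
        recent.append(score)
        rolling = sum(recent) / len(recent)
        yield {"id": event["id"], "score": score, "alert": rolling > threshold}
```

Because `score_stream` is a generator, it processes one event at a time and never needs the whole stream in memory, which is the main difference from the batch design.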

References:

• Presented these concepts at the AllThingsOpen Conference