This work is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License.

Working with data can be challenging: it often doesn’t come in the best format for analysis, and understanding it well enough to extract insights requires both time and the skills to filter, aggregate, reshape, and visualize it. This session will equip you with the knowledge you need to effectively use pandas – a powerful library for data analysis in Python – to make this process easier.

Pandas makes it possible to work with tabular data and perform every part of the analysis, from collection and manipulation through aggregation and visualization. While most of this session focuses on pandas, our discussion of visualization will also give a high-level introduction to Matplotlib (the library pandas uses for its plotting features; using it directly makes it possible to create custom layouts, add annotations, etc.) and Seaborn (another plotting library, which offers additional plot types and the ability to visualize long-format data).

This is an introductory workshop on pandas, first delivered at ODSC Europe 2021 and subsequently at the 5th Annual Toronto Machine Learning Summit in 2021, PyCon US 2022, ODSC Europe 2023, and PyCon CZ 2023, along with abbreviated versions at PyCon UK 2022, PyCon Portugal 2022, EuroSciPy 2023, PyCon UK 2023, PyCon MEA 2023, and PyCon Poland 2024. It's divided into four sections: getting started with pandas, data wrangling, data visualization, and a hands-on data analysis lab.

We will begin by introducing the Series, DataFrame, and Index classes, which are the basic building blocks of the pandas library, and showing how to work with them. By the end of this section, you will be able to create DataFrames and perform operations on them to inspect and filter the data.
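To make these building blocks concrete, here is a minimal sketch (not taken from the workshop notebooks) that builds a small DataFrame from made-up inventory data, pulls out one column as a Series, looks at the Index, and filters rows with a Boolean mask. The column names, index labels, and values are hypothetical.

```python
import pandas as pd

# Made-up inventory data, used only for illustration
df = pd.DataFrame(
    {
        "fruit": ["apple", "banana", "cherry", "date"],
        "quantity": [5, 12, 30, 7],
    },
    index=pd.Index(["a", "b", "c", "d"], name="item_id"),
)

# Selecting a single column returns a Series that shares the DataFrame's Index
quantity = df["quantity"]
print(type(quantity).__name__)  # Series
print(df.index)

# Inspect the data: dimensions, column data types, and summary statistics
print(df.shape)  # (4, 2)
print(df.dtypes)
print(df.describe())

# Filter rows with a Boolean mask built by comparing a Series to a value
plentiful = df[df["quantity"] > 6]
print(plentiful)
```

The filter keeps the original index labels of the matching rows, which is what makes it easy to trace filtered results back to the source data.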

To prepare our data for analysis, we need to perform data wrangling. In this section, we will learn how to clean and reformat data (e.g., renaming columns and fixing data type mismatches), restructure/reshape it, and enrich it (e.g., discretizing columns, calculating aggregations, and combining data sources).
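As a rough sketch of each of these wrangling steps on a tiny, made-up sales table (the column names, bins, and the second "regions" data source are all hypothetical), the snippet below renames columns, fixes a string-versus-number type mismatch, reshapes from long to wide format, discretizes a column, aggregates, and merges in another table.

```python
import pandas as pd

# Made-up raw data with awkward column names and numbers stored as strings
raw = pd.DataFrame(
    {
        "Store ID": ["s1", "s1", "s2", "s2"],
        "Month": ["Jan", "Feb", "Jan", "Feb"],
        "Sales ($)": ["120", "95", "310", "280"],
    }
)

# Clean: rename the columns, then convert the sales strings to numbers
sales = raw.rename(
    columns={"Store ID": "store", "Month": "month", "Sales ($)": "sales"}
).assign(sales=lambda x: pd.to_numeric(x["sales"]))

# Reshape: long format -> wide format with one column per month
wide = sales.pivot(index="store", columns="month", values="sales")

# Enrich: discretize sales into the labeled bins (0, 100], (100, 200], (200, 400]
sales["size"] = pd.cut(
    sales["sales"], bins=[0, 100, 200, 400], labels=["small", "medium", "large"]
)

# Enrich: aggregate total sales per store
totals = sales.groupby("store")["sales"].sum()

# Enrich: combine with a second (also made-up) data source
regions = pd.DataFrame({"store": ["s1", "s2"], "region": ["north", "south"]})
combined = sales.merge(regions, on="store", how="left")

print(wide)
print(totals)
print(combined)
```

Each step returns a new object rather than modifying `raw`, so the original data stays available for comparison while you work.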

The human brain excels at finding patterns in visual representations of data, so in this section, we will learn how to visualize data using pandas, along with the Matplotlib and Seaborn libraries for additional features. We will create a variety of visualizations that will help us better understand our data.
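Here is a minimal sketch of how pandas plotting and Matplotlib fit together, assuming Matplotlib is installed; the data and labels are made up. Pandas draws the lines, and the returned Matplotlib `Axes` is then used directly to add an annotation and axis labels, the kind of customization mentioned above.

```python
import matplotlib.pyplot as plt
import pandas as pd

# Made-up values for two series, used only for illustration
df = pd.DataFrame({"a": [1, 3, 2, 5, 4], "b": [2, 2, 3, 3, 6]})

# pandas plotting is built on Matplotlib: draw onto an existing Axes object
fig, ax = plt.subplots(figsize=(6, 3))
df.plot(ax=ax, title="Made-up values")

# Using the Matplotlib Axes directly allows customizations such as annotations
peak = df["a"].idxmax()  # index label of the largest value in column "a"
ax.annotate(
    "peak of a",
    xy=(peak, df.loc[peak, "a"]),
    xytext=(peak - 1.5, df.loc[peak, "a"]),
    arrowprops={"arrowstyle": "->"},
)
ax.set_xlabel("x")
ax.set_ylabel("value")
```

In JupyterLab the figure renders inline; in a standalone script, call `plt.show()` or `fig.savefig(...)` to see or save it.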

You will practice everything you’ve learned in a hands-on lab. This section features a set of analysis tasks that provide opportunities to apply the material from the previous sections.

You should have basic knowledge of Python and be comfortable working in Jupyter Notebooks. Check out this notebook for a crash course in Python, or work through the official Python tutorial for a more formal introduction. The environment we will use for this workshop comes with JupyterLab, which is fairly intuitive, but be sure to familiarize yourself with using notebooks in JupyterLab and with its additional functionality.

Pick the installation option that makes sense for you: a local installation (using conda or venv), a Docker installation, GitHub Codespaces, or Binder.

Warning: It is highly recommended that you use your personal laptop for the installation.


For a local installation, install the following, if not already installed:


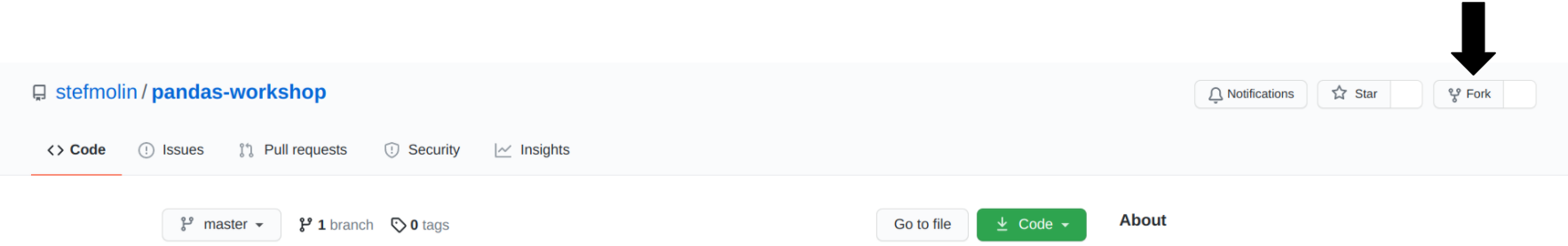

Fork this repository:


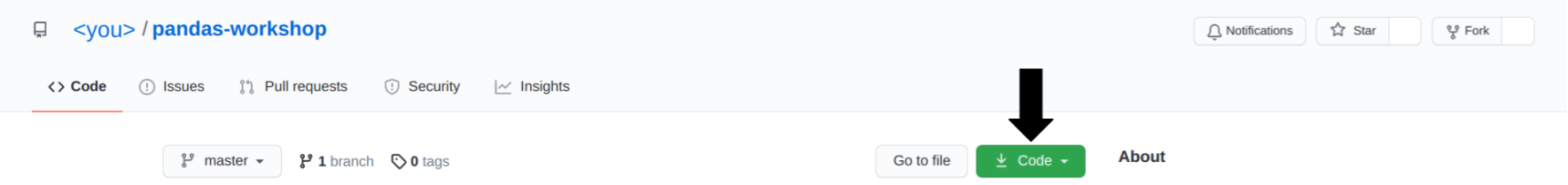

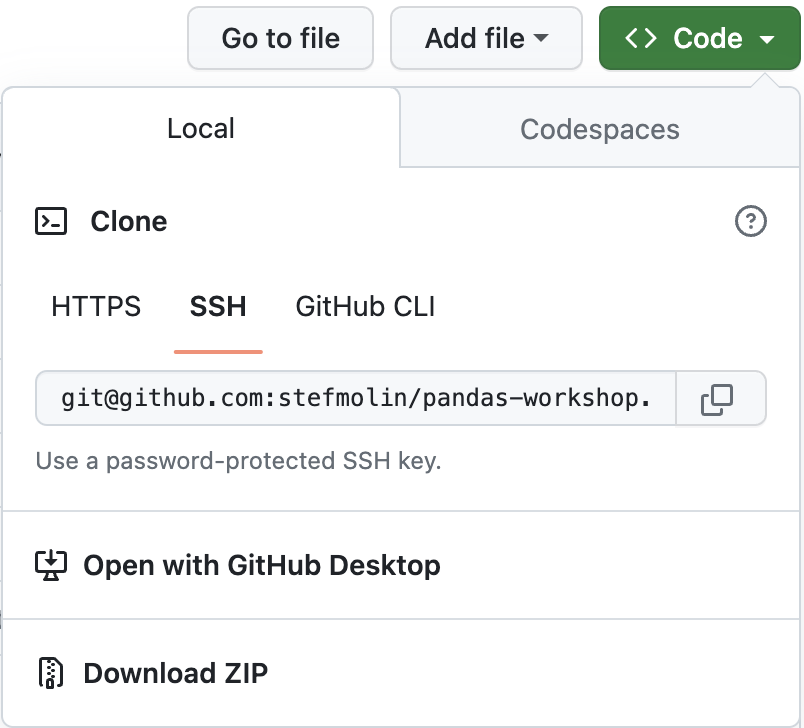

Navigate to your fork, and click the Code button:


Clone your forked repository using the desired method from the Local tab:


Create and activate a Python virtual environment:

If you installed Anaconda/Miniconda, use `conda` (on Windows, these commands should be run in Anaconda Prompt):

```shell
$ cd pandas-workshop
~/pandas-workshop$ conda env create --file environment.yml
~/pandas-workshop$ conda activate pandas_workshop
(pandas_workshop) ~/pandas-workshop$
```

Otherwise, use `venv`:

```shell
$ cd pandas-workshop
~/pandas-workshop$ python3 -m venv pandas_workshop
~/pandas-workshop$ source pandas_workshop/bin/activate
(pandas_workshop) ~/pandas-workshop$ pip3 install -r requirements.txt
```

Launch JupyterLab:

```shell
(pandas_workshop) ~/pandas-workshop$ jupyter lab
```

Navigate to the

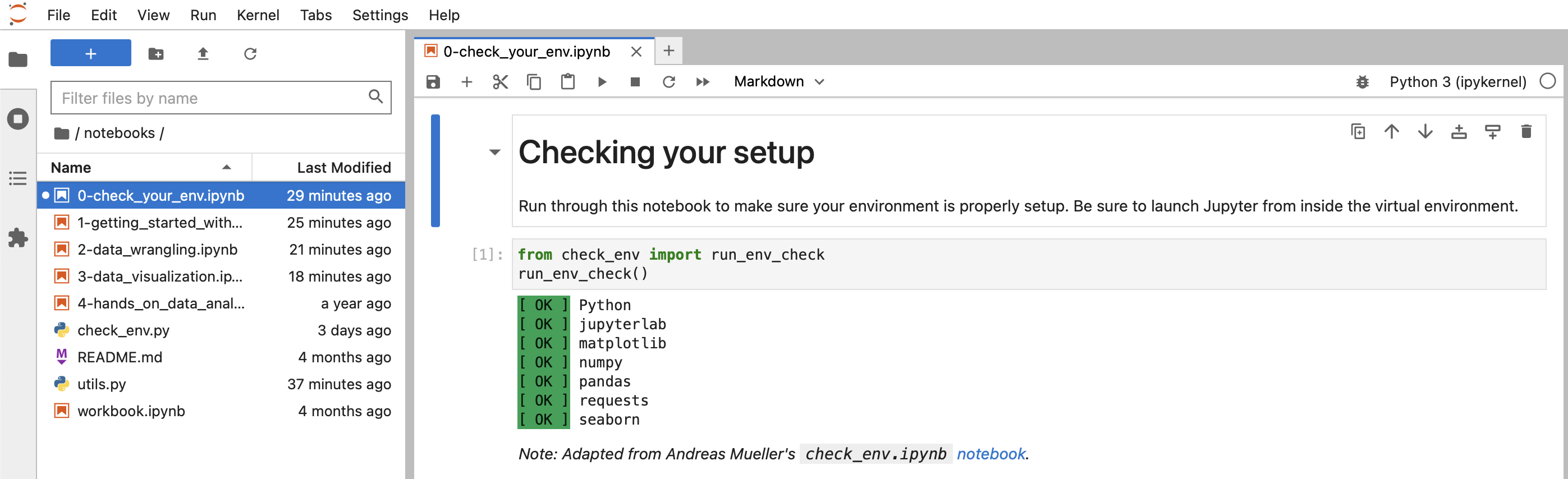

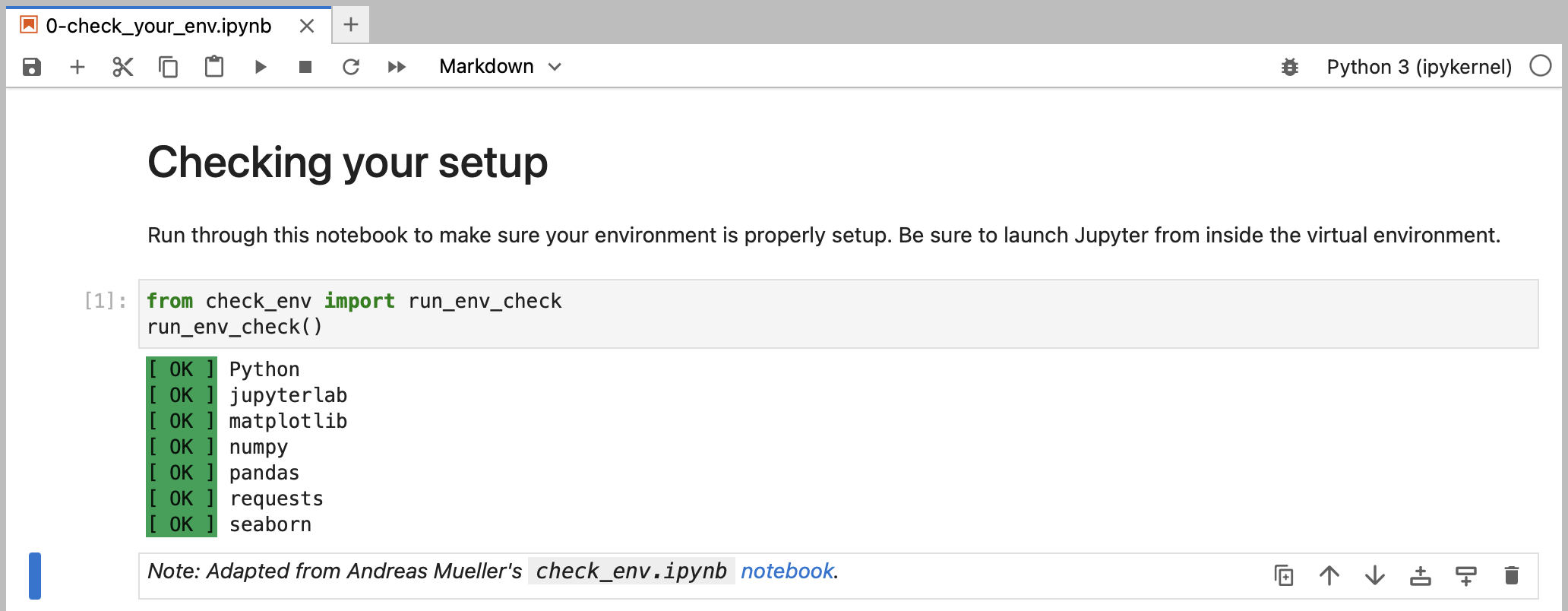

0-check_your_env.ipynbnotebook in thenotebooks/folder: -

Run the notebook to confirm everything is set up properly:


For a Docker installation, install the following, if not already installed:


Fork this repository:


Navigate to your fork, and click the Code button:


Clone your forked repository using the desired method from the Local tab:


Build the Docker image needed to run the Jupyter environment:

```shell
$ cd pandas-workshop
~/pandas-workshop$ docker compose build
```


Launch JupyterLab from within a Docker container:

```shell
~/pandas-workshop$ docker compose up
```

You should be able to access the environment at http://localhost:8888.


Navigate to the `0-check_your_env.ipynb` notebook in the `notebooks/` folder:

Run the notebook to confirm everything is set up properly:

Note: Once you're done, press Ctrl+C to stop the Docker container.

The GitHub Codespaces setup provides a preconfigured machine accessible via your browser. You will need a GitHub account and available quota (all users get more than enough free monthly quota to run this workshop). Note that the codespace will take a while to build, so it's recommended that you build it in advance of the workshop and then stop it until the workshop starts, at which point you can simply resume it and pick up where you left off.

If you want to save your changes, you will need to fork the repository before creating the codespace; you will then be able to commit your changes directly from the codespace.


Fork this repository:


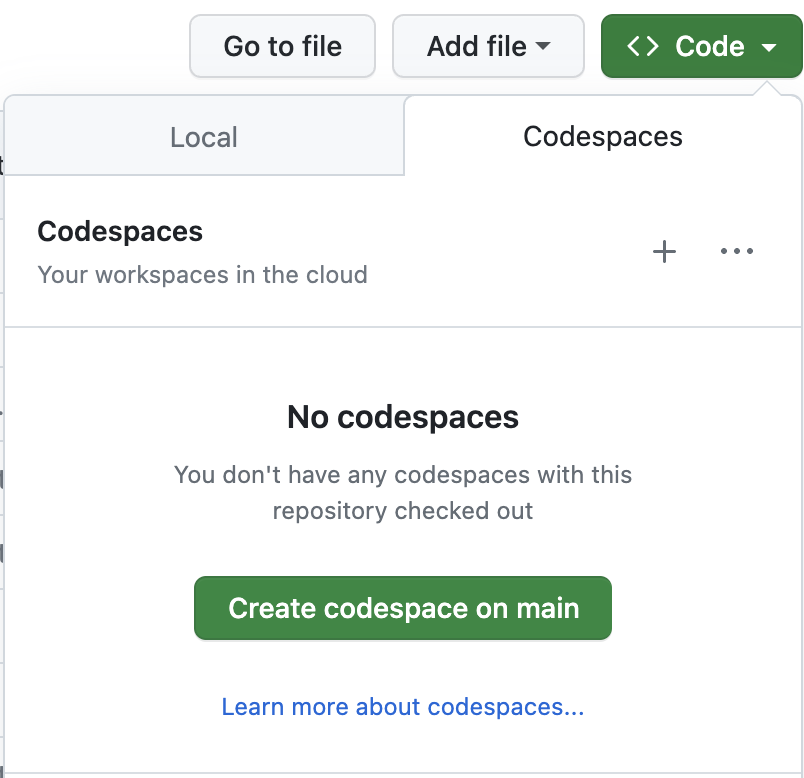

Navigate to your fork, and click the Code button:


Launch the codespace from your fork by clicking on the + or Create codespace on main button in the Codespaces tab:


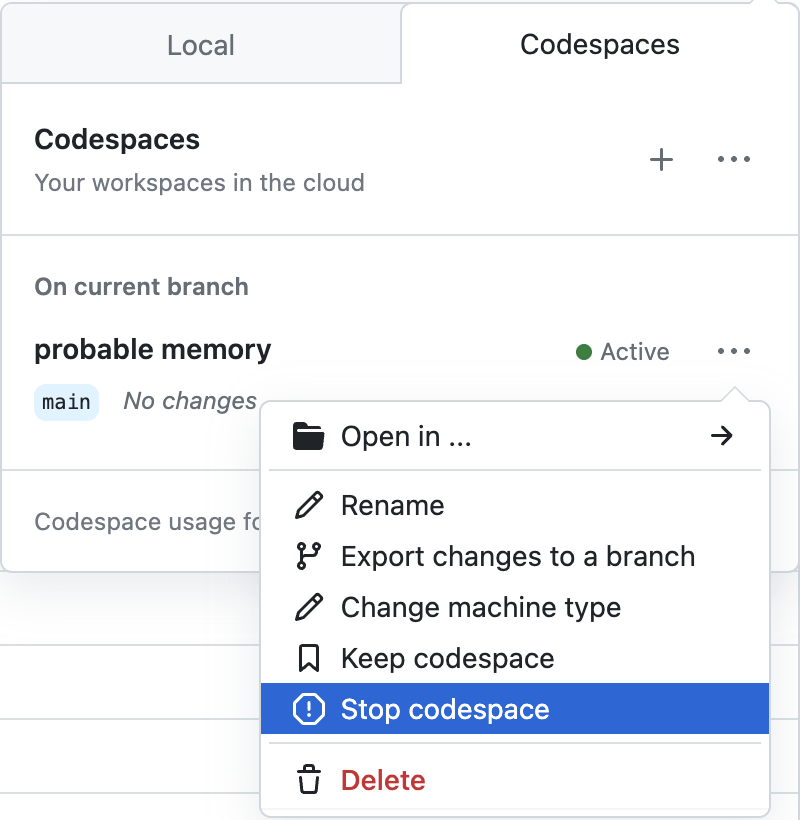

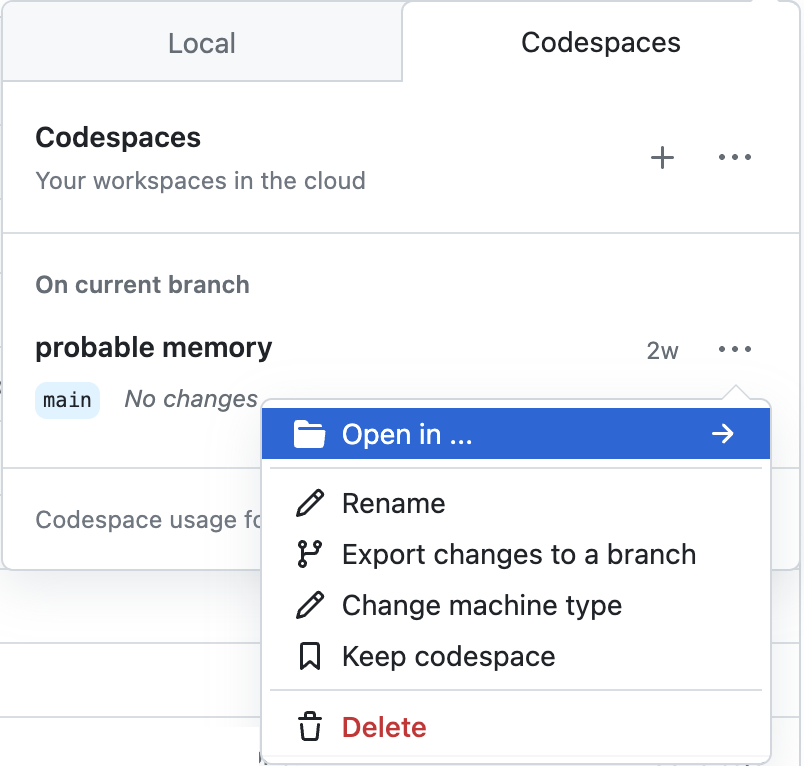

Stop the codespace until the session starts by selecting Stop codespace from the ... menu.


To resume the codespace, click Open in ... and then select your preferred method. If you aren't sure, select JupyterLab.

Depending on server availability, you can use this Binder environment, which does not require the creation of a GitHub account. There is no guarantee that you will be able to access this during the workshop.

Stefanie Molin (@stefmolin) is a software engineer and data scientist at Bloomberg in New York City, where she tackles tough problems in information security, particularly those revolving around data wrangling/visualization, building tools for gathering data, and knowledge sharing. She is also the author of Hands-On Data Analysis with Pandas, which is currently in its second edition and has been translated into Korean. She holds a bachelor of science degree in operations research from Columbia University's Fu Foundation School of Engineering and Applied Science, as well as a master’s degree in computer science, with a specialization in machine learning, from Georgia Tech. In her free time, she enjoys traveling the world, inventing new recipes, and learning new languages spoken among both people and computers.

All examples herein were developed exclusively for this workshop. Hands-On Data Analysis with Pandas contains additional examples and exercises, as does this article. For a deeper dive into data visualization in Python, check out my Beyond the Basics: Data Visualization in Python workshop.

Introduction to Data Analysis Using Pandas by Stefanie Molin is licensed under CC BY-NC-SA 4.0