This package implements this paper, in which the author addresses the interpretability and actionability of tree-based models. The paper presents a framework for post-processing any tree-based classifier to extract an optimal actionable plan that changes a given input to a desired class at minimum cost. Currently the package supports only scikit-learn's implementation of Random Forest.

pip install oae

import numpy as np

import pandas as pd

from oae.core import *

from oae.tree import *

from oae.optimizer import *

from sklearn.ensemble import RandomForestClassifier

from sklearn.model_selection import train_test_split as tts

from sklearn.metrics import accuracy_score, roc_auc_score

SEED = 41

np.random.seed(SEED)

data = get_external_dataset(URLS['BREAST_CANCER'])

data.target.value_counts(normalize=True)

2 0.655222

4 0.344778

Name: target, dtype: float64

Convert the labels so that benign (originally 2) becomes 0 and malignant (originally 4) becomes 1.
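As a small illustration of this relabeling, here is a plain-Python stand-in for what `pd.factorize` does by default: codes are assigned in order of first appearance. The toy label list and the `factorize` helper below are hypothetical and only mimic the behaviour; the mapping 2 to 0 and 4 to 1 holds here because a benign label appears first.

```python
def factorize(values):
    """Encode values as integer codes in order of first appearance."""
    uniques = []   # distinct values, in the order they were first seen
    index = {}     # value -> integer code
    codes = []
    for v in values:
        if v not in index:
            index[v] = len(uniques)
            uniques.append(v)
        codes.append(index[v])
    return codes, uniques


raw_targets = [2, 2, 4, 2, 4]  # toy labels: 2 = benign, 4 = malignant
codes, uniques = factorize(raw_targets)
print(codes)    # [0, 0, 1, 0, 1]
print(uniques)  # [2, 4]
```

If the first row happened to be malignant, the codes would be swapped, so an explicit mapping such as `{2: 0, 4: 1}` is the more defensive choice when the row order is not known.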

# convert benign (2) to 0 and malignant (4) to 1

lbls, lbl_map = pd.factorize(data['target'])

Let's look at the data types of the features.

data.dtypes

code_number int64

clump_thickness int64

cell_size_uniformity int64

cell_shape_uniformity int64

marginal_adhesion int64

single_epithelial_cell_size int64

bare_nuclei object

bland_chromatin int64

normal_nucleoli int64

mitoses int64

target int64

dtype: object

data.bare_nuclei.unique()

array(['1', '10', '2', '4', '3', '9', '7', '?', '5', '8', '6'],

dtype=object)

The bare_nuclei column is stored as strings because it contains ? for missing values. Let's replace ? with -1 and convert the column to int64 like the others.
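On a toy list, the cleaning step described above can be sketched in plain Python. The helper name `to_int_with_sentinel` and the sample values are made up for illustration; the package itself works on the pandas column.

```python
raw = ['1', '10', '2', '?', '5']  # toy values; '?' marks a missing entry


def to_int_with_sentinel(values, missing='?', sentinel=-1):
    """Map the missing marker to a sentinel and cast everything else to int."""
    return [sentinel if v == missing else int(v) for v in values]


cleaned = to_int_with_sentinel(raw)
print(cleaned)  # [1, 10, 2, -1, 5]
```

Using -1 keeps the column integer-typed, at the price of adding one extra category to that feature.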

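# regex=False treats '?' as a literal character; np.int was removed in NumPy 1.24, so use np.int64

data = data.assign(bare_nuclei=data.bare_nuclei.str.replace('?', '-1', regex=False).astype(np.int64))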

data = data.assign(target=lbls); data.head()

| | code_number | clump_thickness | cell_size_uniformity | cell_shape_uniformity | marginal_adhesion | single_epithelial_cell_size | bare_nuclei | bland_chromatin | normal_nucleoli | mitoses | target |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 1000025 | 5 | 1 | 1 | 1 | 2 | 1 | 3 | 1 | 1 | 0 |
| 1 | 1002945 | 5 | 4 | 4 | 5 | 7 | 10 | 3 | 2 | 1 | 0 |
| 2 | 1015425 | 3 | 1 | 1 | 1 | 2 | 2 | 3 | 1 | 1 | 0 |
| 3 | 1016277 | 6 | 8 | 8 | 1 | 3 | 4 | 3 | 7 | 1 | 0 |
| 4 | 1017023 | 4 | 1 | 1 | 3 | 2 | 1 | 3 | 1 | 1 | 0 |

data.iloc[:, 1:-1].nunique()

clump_thickness 10

cell_size_uniformity 10

cell_shape_uniformity 10

marginal_adhesion 10

single_epithelial_cell_size 10

bare_nuclei 11

bland_chromatin 10

normal_nucleoli 10

mitoses 9

dtype: int64

All of the features of interest (excluding code_number and target) are categorical variables. Let's create a holdout set and train a Random Forest classifier.

SEED = 41

np.random.seed(SEED)

features = data.columns[1:-1]

Xtr, Xte, ytr, yte = tts(data.loc[:, features], data.target, test_size=.2, random_state=SEED)

clf = RandomForestClassifier(n_estimators=10, n_jobs=-1, random_state=SEED)

clf.fit(Xtr, ytr)

print(f'train accuracy: {accuracy_score(ytr, clf.predict(Xtr))}')

print(f'holdout accuracy: {accuracy_score(yte, clf.predict(Xte))}')

train accuracy: 0.998211091234347

holdout accuracy: 0.9714285714285714

Let's select an instance from the holdout set and look at its ground-truth label and the classifier's prediction. The classifier marks it as malignant, and we want to know which features could be changed so that the classifier would mark it as benign.

instanceidx = 4

print(yte.iloc[instanceidx], ' ', clf.predict_proba(Xte.iloc[instanceidx:instanceidx+1]))

1 [[0. 1.]]

Now we will try to extract an optimal action plan by posing the task as an Integer Linear Programming problem.
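To make the optimization problem concrete, here is a minimal sketch on a toy ensemble. It deliberately swaps the integer linear program for plain exhaustive search, which is only feasible for tiny feature domains, and it does not use the package's API. The three hand-written "trees", the feature domains, and all function names are hypothetical.

```python
from itertools import product


# Hypothetical toy ensemble: each "tree" maps a feature tuple to P(class 0).
def tree_a(x):
    return 1.0 if x[0] <= 4 else 0.0


def tree_b(x):
    return 1.0 if x[1] <= 3 else 0.0


def tree_c(x):
    return 1.0 if x[0] <= 6 and x[1] <= 5 else 0.0


TREES = [tree_a, tree_b, tree_c]


def ensemble_score(x):
    """Average P(class 0) over all trees."""
    return sum(tree(x) for tree in TREES) / len(TREES)


def n_changed(x, candidate):
    """Number of features whose value differs from the original input."""
    return sum(a != b for a, b in zip(x, candidate))


def cheapest_action(x, domains, z):
    """Try every feature combination; keep the cheapest one scoring at least z."""
    best = None
    for candidate in product(*domains):
        if ensemble_score(candidate) >= z:
            cost = n_changed(x, candidate)
            if best is None or cost < best[0]:
                best = (cost, candidate)
    return best


x = (8, 2)                                # original input, scored 1/3 for class 0
domains = [range(1, 11), range(1, 11)]    # each feature takes values 1..10
cost, plan = cheapest_action(x, domains, z=0.5)
print(cost, plan)  # 1 (1, 2)
```

The search space grows exponentially with the number of features, which is why an integer programming formulation is attractive for real forests.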

atm = ATMSKLEARN(clf, data.loc[:, features].values)

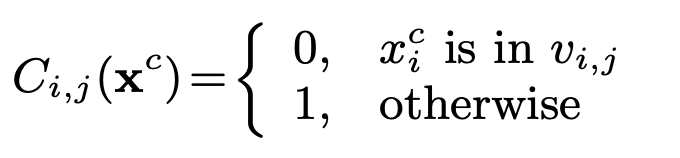

instance = Instance(Xte.iloc[instanceidx], ['categorical'] * len(features))

We use a cost function under which the OAE problem minimizes the number of changed features, i.e. the Hamming distance between the original and the modified input.

But we don't need to restrict ourselves to this particular cost function: you can design your own cost function and pass it to the solver.
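As a rough illustration of the two ideas above, the sketch below computes a Hamming cost and a weighted variant in plain Python. It is not the signature the package expects for `cost_matrix`; the function names and the weights are assumptions. The two vectors are the holdout instance used in this walkthrough before and after the suggested changes.

```python
def hamming_cost(original, proposed):
    """Count the features whose value changes."""
    return sum(o != p for o, p in zip(original, proposed))


def weighted_cost(original, proposed, weights):
    """Like Hamming distance, but each changed feature pays its own weight."""
    return sum(w for o, p, w in zip(original, proposed, weights) if o != p)


original = [5, 3, 5, 1, 8, 10, 5, 3, 1]
proposed = [5, 3, 5, 1, 8, -1, 3, 3, 1]
weights = [1, 1, 1, 1, 1, 3, 2, 1, 1]  # made-up per-feature change costs

print(hamming_cost(original, proposed))            # 2
print(weighted_cost(original, proposed, weights))  # 5
```

A weighted cost is one way to express that some features are harder or more expensive to change than others.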

In this example the input has ground-truth label 1, and we want the minimum-cost feature changes that make the classifier assign label 0, with z being the target probability threshold for that class.

opt = Optimizer(cost_matrix, combine, z=0.45, class_=0)

v_i_j_sol, phi_t_k_sol = opt.solve(atm, instance)

The package can then suggest which feature values should change to move the instance from being classified as malignant to benign.

atm.suggest_changes(v_i_j_sol, instance)

['no change, current value: 5',

'no change, current value: 3',

'no change, current value: 5',

'no change, current value: 1',

'no change, current value: 8',

'current value: 10, proposed change: [-1, 1]',

'current value: 5, proposed change: [3, 4]',

'no change, current value: 3',

'no change, current value: 1']
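The suggestions are easier to read when paired with feature names. The snippet below is a small helper sketch, assuming the list is ordered like the feature columns and that unchanged features start with the text 'no change' as in the output above; it is not part of the package.

```python
features = [
    'clump_thickness', 'cell_size_uniformity', 'cell_shape_uniformity',
    'marginal_adhesion', 'single_epithelial_cell_size', 'bare_nuclei',
    'bland_chromatin', 'normal_nucleoli', 'mitoses',
]

# Output of atm.suggest_changes(...) copied from above.
suggestions = [
    'no change, current value: 5',
    'no change, current value: 3',
    'no change, current value: 5',
    'no change, current value: 1',
    'no change, current value: 8',
    'current value: 10, proposed change: [-1, 1]',
    'current value: 5, proposed change: [3, 4]',
    'no change, current value: 3',
    'no change, current value: 1',
]

# Keep only the features for which a change is proposed.
changed = [
    (name, text)
    for name, text in zip(features, suggestions)
    if not text.startswith('no change')
]

for name, text in changed:
    print(f'{name}: {text}')
```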

The extracted action plan says to change bare_nuclei (zero-based feature index 5, current value 10) to -1 and bland_chromatin (index 6, current value 5) to 3, after which the classifier should assign label 0. Let's verify.

X_transformed = atm.transform(v_i_j_sol, instance); X_transformed

| | clump_thickness | cell_size_uniformity | cell_shape_uniformity | marginal_adhesion | single_epithelial_cell_size | bare_nuclei | bland_chromatin | normal_nucleoli | mitoses |
|---|---|---|---|---|---|---|---|---|---|
| 0 | 5 | 3 | 5 | 1 | 8 | -1 | 3 | 3 | 1 |

clf.predict_proba(X_transformed)

array([[0.6, 0.4]])

Indeed, the classifier now assigns label 0, and the predicted probability of 0.6 for that class exceeds z=0.45, so the threshold constraint is satisfied as well.
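The threshold check can be sketched as follows. scikit-learn's random forest reports the mean of the per-tree class probabilities, so with 10 trees a value of 0.6 is what you would see if, for example, six trees put the instance in a pure class-0 leaf. The per-tree values below are hypothetical and chosen only to reproduce that number.

```python
def mean_probability(per_tree_probs):
    """Average the per-tree probabilities, as a soft-voting forest does."""
    return sum(per_tree_probs) / len(per_tree_probs)


# Hypothetical per-tree P(class 0) values for a 10-tree forest.
per_tree_class0 = [1.0] * 6 + [0.0] * 4

z = 0.45
p0 = mean_probability(per_tree_class0)
print(p0, p0 >= z)  # 0.6 True
```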

- One example could be targeted marketing: we can use the action plan generated for each customer to understand which levers we can pull to get the desired result.