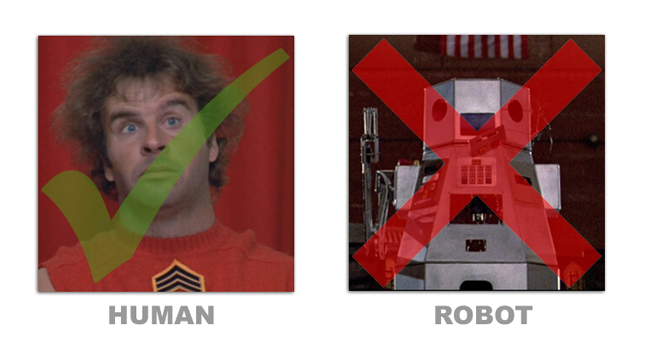

AWS CloudFront Lambda / TensorFlow face detection via Human

- Facial biometrics data that include age, gender, and emotion.

- Serverless function, scales on a tight budget.

- Can be set up easily (in minutes).

```shell
$ ./deploy --profile <AWS credentials profile>
```

The following operations are orchestrated by AWS CloudFormation during execution:

- A Docker container image is built and uploaded to Amazon Elastic Container Registry (ECR).

- An AWS Lambda function is created with a configured Function URL endpoint.

- An AWS CloudFront distribution is created with the new function as its origin.

- Network routing is configured, exposing your Lambda function URL.
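Requests send the image as a base64-encoded string in the `file` field of a JSON body. A minimal Node.js sketch of preparing that body, assuming the API expects plain base64 without a `data:image/...;base64,` prefix; `buildRequestBody` and `buildRequestBodyFromFile` are hypothetical helpers, not part of this package:

```javascript
const fs = require('fs');

// Hypothetical helper: wrap raw image bytes in the {"file": "<base64-image>"} body shape.
function buildRequestBody(imageBytes) {
  // Assumption: plain base64, no data URI prefix.
  return JSON.stringify({file: Buffer.from(imageBytes).toString('base64')});
}

// Hypothetical convenience wrapper that reads the image from disk first.
function buildRequestBodyFromFile(path) {
  return buildRequestBody(fs.readFileSync(path));
}
```

The resulting string can be written to a file and passed to cURL with `-d @body.json`, or used directly as the `Payload` of a Lambda invocation.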

Invoke the function URL with cURL:

```shell
curl -X 'POST' \
  'https://<url-id>.lambda-url.<region>.on.aws/' \
  -H 'Accept: application/json' \
  -H 'Content-Type: application/json' \
  -d '{"file": "<base64-image>"}'
```

Invoke the function directly using the AWS SDK for JavaScript v3:

```javascript
const {LambdaClient, InvokeCommand} = require('@aws-sdk/client-lambda');

const client = new LambdaClient({region: '<region>'});

const params = {
  FunctionName: 'HumanFaceApi',
  InvocationType: 'RequestResponse',
  LogType: 'Tail',
  // A Buffer is a Uint8Array, which all v3 releases accept as the payload type.
  Payload: Buffer.from(JSON.stringify({file: '<base64-image>'}))
};

const command = new InvokeCommand(params);

// CommonJS modules do not support top-level await, so wrap the call in an async function.
(async () => {
  try {
    const {Payload} = await client.send(command);
    // In v3 the response Payload is a Uint8Array; decode it before parsing.
    const data = JSON.parse(Buffer.from(Payload).toString('utf8'));
    console.log(data.statusCode === 200 && data.body?.faces);
  } catch (err) {
    console.warn(err.message);
    throw err;
  }
})();
```

Or using the AWS SDK for JavaScript v2:

```javascript
const AWS = require('aws-sdk');

const client = new AWS.Lambda({region: '<region>'});

const params = {
  FunctionName: 'HumanFaceApi',
  InvocationType: 'RequestResponse',
  LogType: 'Tail',
  Payload: JSON.stringify({file: '<base64-image>'})
};

client.invoke(params).promise()
  .then(function({Payload}) {
    // toString() handles both string and Buffer payloads.
    const data = JSON.parse(Payload.toString());
    console.log(data.statusCode === 200 && data.body?.faces);
  })
  .catch(function(err) {
    console.warn(err.message);
    throw err;
  });
```

The following function environment variables can be overridden in the SAM template file:

| Variable name | Description | Default value |
|---|---|---|
| `HUMAN_DEBUG` | Enable verbose logging to the CloudWatch log group | `false` |
| `HUMAN_MODELS_DIR` | Relative path to your custom models directory | `node_modules/@vladmandic/human/models` |
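A handler typically reads these overrides from `process.env` at start-up. The sketch below shows one way to parse them with the documented defaults; the function name `readConfig` and the rule that only the string `"true"` enables debug logging are assumptions, not this package's actual code:

```javascript
// Defaults mirror the documented values for each variable.
const DEFAULTS = {
  debug: false,
  modelsDir: 'node_modules/@vladmandic/human/models',
};

// Hypothetical parser for the HUMAN_* environment overrides.
function readConfig(env = process.env) {
  return {
    // Assumption: only "true" (case-insensitive) enables verbose logging.
    debug: String(env.HUMAN_DEBUG ?? DEFAULTS.debug).toLowerCase() === 'true',
    // Falls back to the models bundled with @vladmandic/human when unset or empty.
    modelsDir: env.HUMAN_MODELS_DIR || DEFAULTS.modelsDir,
  };
}
```

Calling `readConfig()` with no arguments reads the live environment; passing an object makes the parsing easy to unit test.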

To deploy the application successfully, you must have set up your AWS configuration (the credentials profile passed to `--profile`) and created an IAM user with the following policies:

- IAMFullAccess

- CloudFrontFullAccess

- AWSCloudFormationFullAccess

- AWSLambda_FullAccess

- AmazonEC2ContainerRegistryFullAccess

WARNING: The policies above are provided to ensure a successful application deployment. It is recommended that you adjust these policies to meet the security requirements of your Lambda application. They should NOT be used in a Production environment.
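The managed policies above can be attached from the command line with `aws iam attach-user-policy`. The sketch below only prints the commands rather than running them; `human-deployer` is a hypothetical user name (the user must already exist), and the same warning about these broad policies applies:

```javascript
// AWS managed policies required for deployment, by name.
const POLICIES = [
  'IAMFullAccess',
  'CloudFrontFullAccess',
  'AWSCloudFormationFullAccess',
  'AWSLambda_FullAccess',
  'AmazonEC2ContainerRegistryFullAccess',
];

// Build one attach-user-policy command per managed policy ARN.
function attachPolicyCommands(userName) {
  return POLICIES.map((name) =>
    `aws iam attach-user-policy --user-name ${userName} --policy-arn arn:aws:iam::aws:policy/${name}`);
}

// "human-deployer" is a hypothetical IAM user name; replace it with your own.
attachPolicyCommands('human-deployer').forEach((cmd) => console.log(cmd));
```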

Start a local instance using the AWS SAM CLI:

```shell
$ sam local start-api
```

Run ESLint on the project sources:

```shell
$ npm run lint
```

Generate Swagger (OpenAPI) definitions:

```shell
$ npm run genapi
```

Run the Mocha unit tests:

```shell
$ npm run test
```

When running in Lambda, there is overhead (a cold start) when the execution environment is first launched. This overhead does not include TensorFlow initialization (model loading), which adds its own latency. As a result, expect a longer response time for the first request. As long as an execution environment remains active (a hot start), subsequent requests handled by it do not incur this overhead.
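A common way to keep model loading out of hot requests is to start it once at module scope and reuse the result for every invocation served by the same execution environment. A minimal sketch of that pattern, where `loadModels` is a hypothetical stand-in for Human's model loading (it only simulates a delay) and `handler` is not this package's actual handler:

```javascript
// Hypothetical stand-in for TensorFlow/Human model loading; counts calls
// so the caching behaviour is observable.
let loadCount = 0;
async function loadModels() {
  loadCount += 1;
  await new Promise((resolve) => setTimeout(resolve, 50)); // simulated latency
  return {ready: true};
}

// Module-scope cache: persists between invocations in an active environment.
let modelsPromise = null;
function getModels() {
  if (!modelsPromise) {
    modelsPromise = loadModels().catch((err) => {
      modelsPromise = null; // allow a retry on the next invocation if loading failed
      throw err;
    });
  }
  return modelsPromise;
}

// Hypothetical handler: only the first (cold) call waits for model loading.
async function handler(event) {
  const started = Date.now();
  const models = await getModels();
  return {statusCode: 200, body: JSON.stringify({ready: models.ready, ms: Date.now() - started})};
}
```

Caching the promise rather than the loaded result also means concurrent requests arriving during a cold start share a single load instead of each starting their own.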

- Setting IAM Permissions and Roles

- Scaling and concurrency in Lambda

- Lambda quotas

- AWS SDK for JavaScript

This package is maintained under the Semantic Versioning guidelines.

This package is distributed in the hope that it will be useful, but without any warranty; without even the implied warranty of merchantability or fitness for a particular purpose.

human-face-detection is provided under the terms of the MIT license.

AWS is a registered trademark of Amazon Web Services, Inc.