GENIE is built using PyTorch 1.11.0 and CUDA 11.3. Please use the following command to install the requirements:

pip install -r requirements.txt

Optionally, you may also install NVIDIA Apex. The Adam optimizer from this library is faster than PyTorch's native Adam.

We provide pre-trained checkpoints for all models presented in the paper. Note that the CIFAR-10 base diffusion model is taken from the ScoreSDE repo.

After placing the provided checkpoints at the paths outlined above, you can sample from the base model via:

python main.py --mode eval --config <dataset>.eval --workdir <new_directory> --sampler ttm2

Here, <dataset> is one of cifar10, church, bedroom, imagenet, or cats. To turn off the GENIE model and sample from the plain diffusion model (via DDIM), simply remove the --sampler ttm2 flag. By default, the above generates 16 samples using a single GPU.
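To run the sampling command for several datasets, or to switch between GENIE and plain DDIM sampling, a small wrapper can assemble the argument list. The following is a minimal sketch: the helper name `build_eval_command` and the work directory are hypothetical, and only flags that appear in the command above are used.

```python
import shlex

# Dataset names listed in this README.
DATASETS = ("cifar10", "church", "bedroom", "imagenet", "cats")


def build_eval_command(dataset, workdir, use_genie=True):
    """Return the sampling command as an argument list (hypothetical helper)."""
    if dataset not in DATASETS:
        raise ValueError(f"unknown dataset: {dataset!r}")
    cmd = [
        "python", "main.py",
        "--mode", "eval",
        "--config", f"{dataset}.eval",
        "--workdir", workdir,
    ]
    if use_genie:
        # Omitting this flag falls back to plain DDIM sampling.
        cmd += ["--sampler", "ttm2"]
    return cmd


if __name__ == "__main__":
    # Print the command; pass the list to subprocess.run(...) to execute it.
    print(shlex.join(build_eval_command("cifar10", "output/cifar10_genie")))
```

Printing the command first makes it easy to check the arguments before launching a run.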

On the cats dataset, we also provide an upsampler, which can be run using the following command:

python main.py --mode eval --config cats.eval_upsampler --workdir <new_directory> --data_folder <folder_with_128x128_samples> --sampler ttm2

On ImageNet, we also provide a class-conditional checkpoint, which can be controlled via the --labels flag:

python main.py --mode eval --config imagenet.eval_conditional --workdir output/testing_sampling/imagenet_genie_conditional/v2/ --sampler ttm2 --labels 1000

To generate all samples from the same class, you can set --labels to a single integer between 0 and 999 (inclusive). Alternatively, you can provide a list of labels, for example, --labels 0,87,626,3; note, however, that the length of the list needs to be the same as the total number of generated samples. To sample using random labels, you may set the --labels flag to the number of classes; for ImageNet, that would be 1000.
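The three ways of setting --labels described above can be summarized in a short sketch. This is not the repository's parser: `resolve_labels` and its parameters are hypothetical names, chosen only to illustrate the rules as stated in this README.

```python
import random


def resolve_labels(labels_arg, n_samples, n_classes=1000, rng=None):
    """Map a --labels style string to one class label per sample (illustrative only)."""
    if rng is None:
        rng = random.Random()
    parts = [int(p) for p in labels_arg.split(",")]

    if len(parts) > 1:
        # Explicit list: one label per generated sample.
        if len(parts) != n_samples:
            raise ValueError("label list length must equal the number of samples")
        if any(not 0 <= p < n_classes for p in parts):
            raise ValueError("labels must lie in [0, n_classes - 1]")
        return parts

    value = parts[0]
    if value == n_classes:
        # Value equal to the number of classes: draw random labels.
        return [rng.randrange(n_classes) for _ in range(n_samples)]
    if 0 <= value < n_classes:
        # Single valid class index: every sample uses the same class.
        return [value] * n_samples
    raise ValueError("label must lie in [0, n_classes]")


if __name__ == "__main__":
    print(resolve_labels("0,87,626,3", n_samples=4))
    print(resolve_labels("7", n_samples=4))
```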

Furthermore, since we provide both class-conditional and unconditional checkpoints for ImageNet, you can generate samples using classifier-free guidance:

python main.py --mode eval --config imagenet.eval_with_guidance --workdir output/testing_sampling/imagenet_genie_guidance/v3 --sampler ttm2 --labels 1000 --guidance_scale 1.

The --guidance_scale flag should be set to a positive float.
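For readers unfamiliar with classifier-free guidance, the sketch below shows one common way to combine a conditional and an unconditional noise prediction. The formula is the widely used form (1 + w) * eps_cond - w * eps_uncond; whether this repository uses exactly this convention for --guidance_scale is an assumption, and the function name `guided_prediction` is hypothetical.

```python
def guided_prediction(eps_cond, eps_uncond, guidance_scale):
    """Combine conditional and unconditional predictions element-wise.

    Assumed convention: (1 + w) * eps_cond - w * eps_uncond, with w > 0.
    Inputs are plain lists of floats to keep the sketch dependency-free.
    """
    if guidance_scale <= 0:
        raise ValueError("guidance_scale should be a positive float")
    if len(eps_cond) != len(eps_uncond):
        raise ValueError("predictions must have the same length")
    w = float(guidance_scale)
    return [(1.0 + w) * c - w * u for c, u in zip(eps_cond, eps_uncond)]


if __name__ == "__main__":
    # Toy values, not model outputs.
    print(guided_prediction([1.0, 2.0], [0.0, 1.0], guidance_scale=1.0))
```

Under this convention, larger values of the scale push the combined prediction further away from the unconditional one.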

First, create the following two folders:

mkdir -p data/raw/

mkdir -p data/processed/

Afterwards, run the following commands to download and prepare the data used for training.

CIFAR-10

wget -P data/raw/ https://www.cs.toronto.edu/~kriz/cifar-10-python.tar.gz

python dataset_tool.py --source data/raw/cifar-10-python.tar.gz --dest data/processed/cifar10.zip

LSUN Church

python lsun/download.py -c church_outdoor

mv church_outdoor_train_lmdb.zip data/raw

mv church_outdoor_val_lmdb.zip data/raw

unzip data/raw/church_outdoor_train_lmdb.zip -d data/raw/

python dataset_tool.py --source=data/raw/church_outdoor_train_lmdb/ --dest=data/processed/church.zip --resolution=128x128

LSUN Bedroom

python lsun/download.py -c bedroom

mv bedroom_train_lmdb.zip data/raw

mv bedroom_val_lmdb.zip data/raw

unzip data/raw/bedroom_train_lmdb.zip -d data/raw/

python dataset_tool.py --source=data/raw/bedroom_train_lmdb/ --dest=data/processed/bedroom.zip --resolution=128x128

AFHQ-v2

wget -N https://www.dropbox.com/s/vkzjokiwof5h8w6/afhq_v2.zip?dl=0

mv 'afhq_v2.zip?dl=0' data/raw/afhq_v2.zip

unzip data/raw/afhq_v2.zip -d data/raw/afhq_v2

python dataset_tool.py --source=data/raw/afhq_v2/train/cat --dest data/processed/cats.zip

python dataset_tool.py --source=data/raw/afhq_v2/train/cat --dest data/processed/cats_128.zip --resolution=128x128

ImageNet

First, download the ImageNet Object Localization Challenge data, then run the following:

python dataset_tool.py --source=data/raw/imagenet/ILSVRC/Data/CLS-LOC/train --dest=data/processed/imagenet.zip --resolution=64x64 --transform=center-crop

Before training, you should compute FID stats.

python compute_fid_statistics.py --path data/processed/cifar10.zip --file cifar10.npz

python compute_fid_statistics.py --path data/processed/church.zip --file church_50k.npz --max_samples 50000

python compute_fid_statistics.py --path data/processed/bedroom.zip --file bedroom_50k.npz --max_samples 50000

python compute_fid_statistics.py --path data/processed/imagenet.zip --file imagenet.npz

python compute_fid_statistics.py --path data/processed/cats.zip --file cats.npz

We provide configurations to reproduce our models here. Feel free to use a different number of GPUs than we did; in that case, however, you should also change the (per GPU) batch size (config.train.batch_size) in the corresponding config file. To train the base diffusion models, use the following commands:

python main.py --mode train --config church.train_diffusion --workdir <new_directory> --n_gpus_per_node 8

python main.py --mode train --config bedroom.train_diffusion --workdir <new_directory> --n_gpus_per_node 8

python main.py --mode train --config imagenet.train_diffusion --workdir <new_directory> --n_gpus_per_node 8

python main.py --mode train --config imagenet.train_diffusion_conditional --workdir <new_directory> --n_gpus_per_node 8

python main.py --mode train --config cats.train_diffusion --workdir <new_directory> --n_gpus_per_node 8

python main.py --mode train --config cats.train_diffusion_upsampler --workdir <new_directory> --n_gpus_per_node 8 --n_nodes 2

To continue an interrupted training run, you may run the following command:

python main.py --mode continue --config <config_file> --workdir <existing_working_directory> --ckpt_path <path_to_checkpoint>

We recommend using the same number of GPUs (via --n_gpus_per_node) and nodes (via --n_nodes) as in the interrupted run.
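When training on a different number of GPUs than the provided configurations, the per-GPU batch size (config.train.batch_size) needs to be adjusted, as noted earlier. A minimal sketch of that arithmetic follows, assuming the goal is to keep the total batch size across all GPUs unchanged; that goal is an assumption, and the helper name and the numbers in the example are illustrative rather than values from the provided configs.

```python
def per_gpu_batch_size(ref_per_gpu_batch, ref_total_gpus, new_total_gpus):
    """Return the per-GPU batch size that preserves the total batch size.

    Total GPUs = n_gpus_per_node * n_nodes. Hypothetical helper.
    """
    if ref_total_gpus <= 0 or new_total_gpus <= 0:
        raise ValueError("GPU counts must be positive")
    total_batch = ref_per_gpu_batch * ref_total_gpus
    if total_batch % new_total_gpus != 0:
        raise ValueError("total batch size is not divisible by the new GPU count")
    return total_batch // new_total_gpus


if __name__ == "__main__":
    # Illustrative numbers: per-GPU batch 16 on 8 GPUs, moved to 4 GPUs.
    print(per_gpu_batch_size(16, ref_total_gpus=8, new_total_gpus=4))
```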

Our GENIE models can be trained using the following commands:

python main.py --mode train --config cifar10.train_genie --workdir <new_directory> --n_gpus_per_node 8

python main.py --mode train --config church.train_genie --workdir <new_directory> --n_gpus_per_node 8

python main.py --mode train --config bedroom.train_genie --workdir <new_directory> --n_gpus_per_node 8

python main.py --mode train --config imagenet.train_genie --workdir <new_directory> --n_gpus_per_node 8

python main.py --mode train --config imagenet.train_genie_conditional --workdir <new_directory> --n_gpus_per_node 8

python main.py --mode train --config cats.train_genie --workdir <new_directory> --n_gpus_per_node 8

python main.py --mode train --config cats.train_genie_upsampler --workdir <new_directory> --n_gpus_per_node 8 --n_nodes 2

To continue interrupted training runs, use the same syntax as above.

If you find the provided code or checkpoints useful for your research, please consider citing our NeurIPS paper:

@inproceedings{dockhorn2022genie,

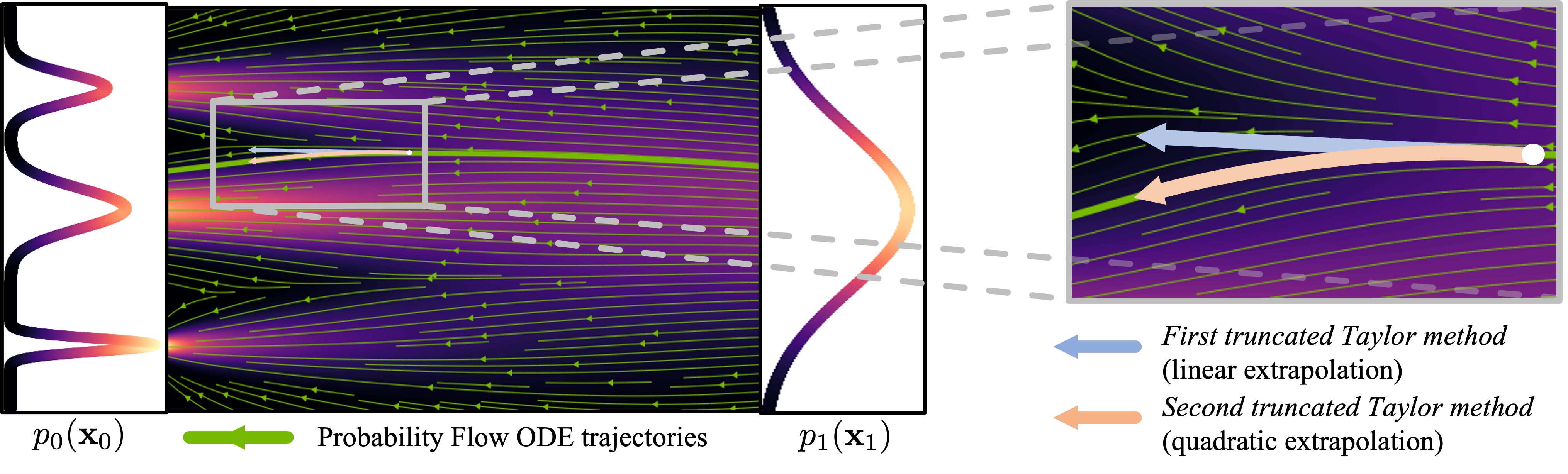

title={{{GENIE: Higher-Order Denoising Diffusion Solvers}}},

author={Dockhorn, Tim and Vahdat, Arash and Kreis, Karsten},

booktitle={Advances in Neural Information Processing Systems},

year={2022}

}

Copyright © 2023, NVIDIA Corporation. All rights reserved.

The code of this work is made available under the NVIDIA Source Code License. Please see our main LICENSE file.

All pre-trained checkpoints are licensed under the Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License.

For any code dependencies related to StyleGAN3 (stylegan3/, torch_utils/, and dnnlib/), the license is the NVIDIA Source Code License by NVIDIA Corporation; see the StyleGAN3 LICENSE.

The script to download LSUN data has the MIT License.

We use three diffusion model architectures; see below:

| Model | License |
|---|---|
| ScoreSDE | Apache License 2.0 |
| Guided Diffusion | MIT License |
| PyTorch Diffusion | MIT License |