
This project is a complete MLOps pipeline encapsulated within a Docker container. It demonstrates the machine learning lifecycle end to end: extracting data, transforming it, training a model, and deploying that model with Flask to serve predictions. The entire process is containerized to ensure consistency and reproducibility across different environments.

The project is built with the following frameworks and libraries:

- Amazon S3: For storing and retrieving the dataset.

- Pandas: For data manipulation and transformation.

- Scikit-learn: For training the machine learning model.

- Flask: For serving the trained model and making predictions.

- Docker: For containerizing the entire pipeline to ensure a consistent environment.

- Boto3: For interacting with Amazon S3 from Python scripts.

To get a local copy up and running, make sure the following prerequisites are in place:

- Docker: Make sure Docker is installed on your machine. You can get it by installing Docker Desktop.

- Python 3.10+: The project uses Python for data processing and model training.

- AWS Account and Console: To interact with Amazon S3, you need an AWS account. You can create one from the AWS website.

- Amazon S3 Bucket: Create a bucket in S3 to store the dataset (all objects in S3, such as datasets and files, are stored in buckets). The Amazon S3 documentation explains how to create a bucket.
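If you prefer to create the bucket from Python rather than the console, the following is a minimal sketch built on a boto3 S3 client. It assumes AWS credentials are already configured (for example with aws configure); the function name create_bucket and the bucket name and region in the example comment are hypothetical placeholders. The client is passed in as a parameter so the function can be exercised without contacting AWS.

```python
from typing import Any, Dict, Optional


def create_bucket(s3_client: Any, bucket_name: str, region: Optional[str] = None) -> Dict[str, Any]:
    """Create an S3 bucket using a boto3-style S3 client (hypothetical helper).

    S3 rejects a LocationConstraint of "us-east-1", so the bucket
    configuration is only sent for other regions. The region should match
    the region the client was created for.
    """
    kwargs: Dict[str, Any] = {"Bucket": bucket_name}
    if region and region != "us-east-1":
        kwargs["CreateBucketConfiguration"] = {"LocationConstraint": region}
    return s3_client.create_bucket(**kwargs)


# Example (hypothetical bucket name and region; needs AWS credentials):
# import boto3
# create_bucket(boto3.client("s3", region_name="eu-west-1"), "my-mlops-bucket", "eu-west-1")
```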

Clone the repository, then follow these steps to use the project:


Install the dependencies:

- Install the Python dependencies required by the project:

```sh
pip install -r requirements.txt
```


Extract Data from S3:

- Navigate to the extract_data_from_s3 folder.

- Run the extract_data_s3.py script to download the dataset files from your S3 bucket. Ensure that the dataset files are downloaded into the same folder.
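The download step might look roughly like the sketch below. It is not the project's extract_data_s3.py; it assumes a boto3 S3 client and downloads every object under an optional key prefix into a destination folder. The function name download_bucket and the bucket name in the example comment are hypothetical.

```python
import os
from typing import Any, List


def download_bucket(s3_client: Any, bucket: str, dest_dir: str, prefix: str = "") -> List[str]:
    """Download every object under `prefix` in `bucket` into `dest_dir`.

    Hypothetical helper; returns the local paths of the downloaded files.
    """
    os.makedirs(dest_dir, exist_ok=True)
    downloaded: List[str] = []
    # list_objects_v2 returns at most 1,000 keys per call, so page through the results.
    paginator = s3_client.get_paginator("list_objects_v2")
    for page in paginator.paginate(Bucket=bucket, Prefix=prefix):
        for obj in page.get("Contents", []):
            key = obj["Key"]
            if key.endswith("/"):
                continue  # skip zero-byte "folder" placeholder keys
            local_path = os.path.join(dest_dir, os.path.basename(key))
            s3_client.download_file(bucket, key, local_path)
            downloaded.append(local_path)
    return downloaded


# Example (hypothetical bucket name; needs AWS credentials):
# import boto3
# script_dir = os.path.dirname(os.path.abspath(__file__))
# download_bucket(boto3.client("s3"), "my-mlops-bucket", script_dir)
```

Paging through list_objects_v2 keeps the sketch correct for buckets with more than 1,000 objects; for a bucket holding just a couple of dataset files, a single call would also do.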


Train the model:

- Navigate to the model_training folder.

- Run the model_training.py script to preprocess the data, train the model using a RandomForest classifier, create a pickle file of the trained model, and perform evaluation.


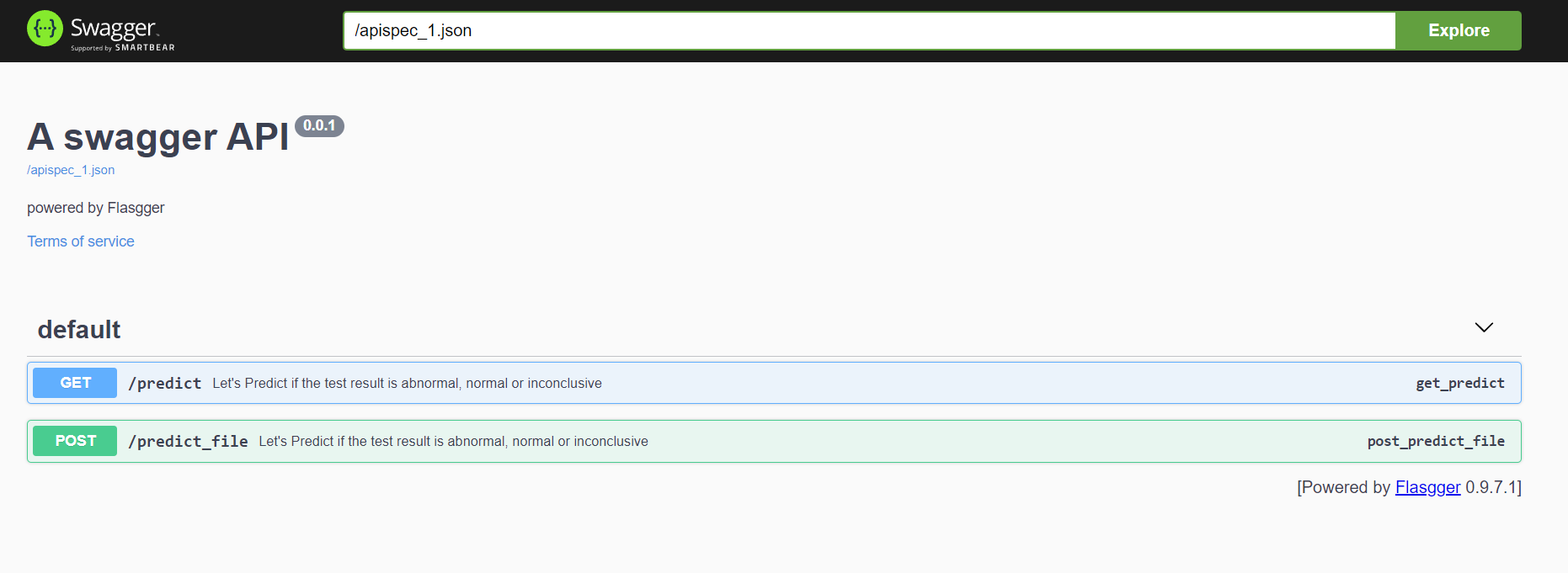

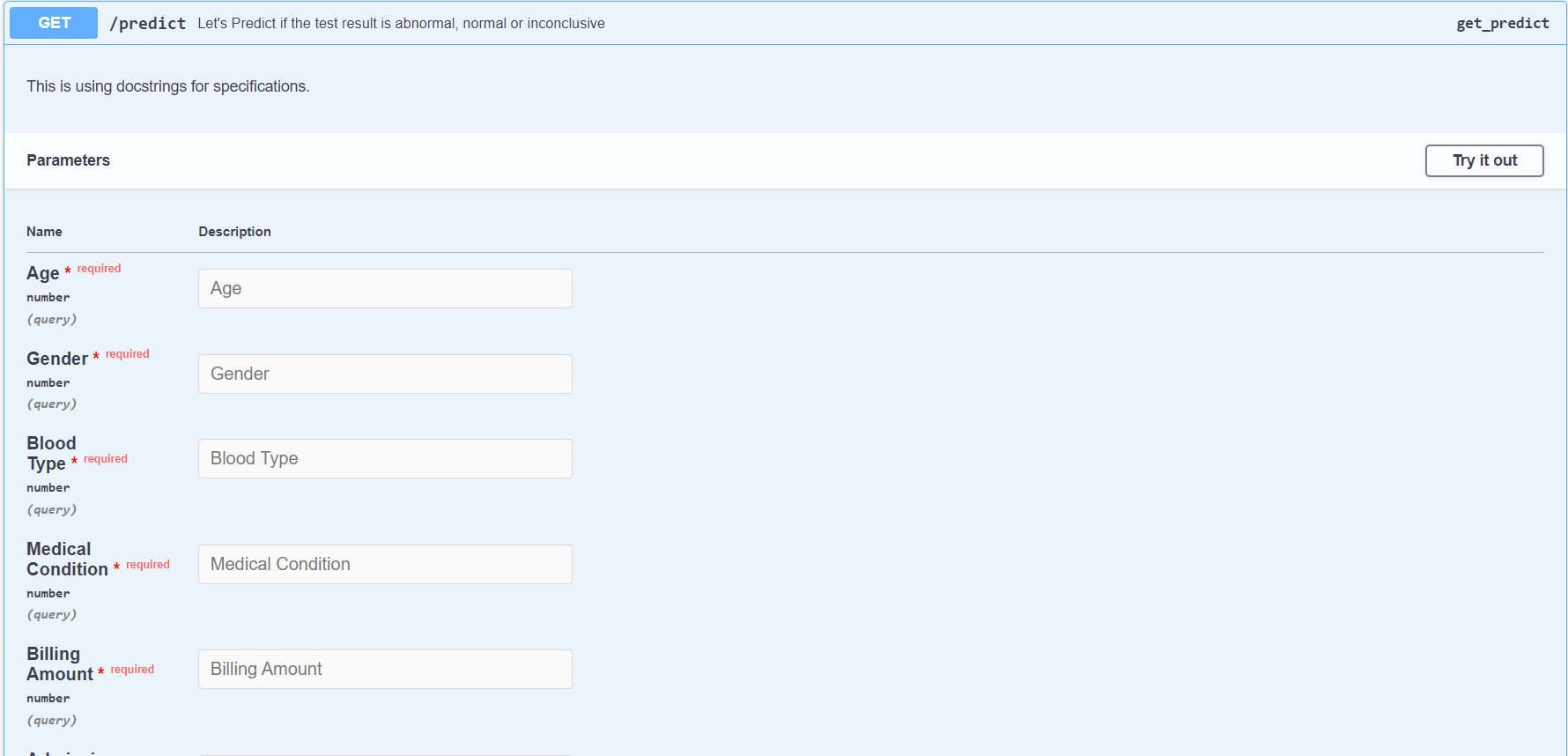
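As a rough illustration of the training step, here is a minimal sketch with pandas and scikit-learn. The preprocessing (dropping rows with missing values and one-hot encoding non-numeric columns), the 80/20 split, the hyperparameters, and the names train_and_save, target, and model.pkl are assumptions, not necessarily what model_training.py does.

```python
import pickle
from typing import Tuple

import pandas as pd
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split


def train_and_save(df: pd.DataFrame, target: str, model_path: str) -> Tuple[RandomForestClassifier, float]:
    """Preprocess `df`, fit a random forest, evaluate it, and pickle the model."""
    df = df.dropna()
    # One-hot encode any non-numeric feature columns (assumed preprocessing).
    X = pd.get_dummies(df.drop(columns=[target]))
    y = df[target]
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.2, random_state=42
    )
    model = RandomForestClassifier(n_estimators=100, random_state=42)
    model.fit(X_train, y_train)
    # Evaluate on the held-out split.
    accuracy = accuracy_score(y_test, model.predict(X_test))
    # Persist the fitted model so the Flask app can load it later.
    with open(model_path, "wb") as f:
        pickle.dump(model, f)
    return model, accuracy


# Example (hypothetical file and column names):
# model, acc = train_and_save(pd.read_csv("dataset.csv"), "target", "model.pkl")
```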

Deploy Model with Flask:

- Navigate to the model_deploy_to_flask folder.

- Run the flask_02.py script. This starts a Flask server and provides a Swagger UI for making predictions with the trained model's pickle file.

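The server listens on the host and port set in app.run():

```python
app.run(host='0.0.0.0', port=5000)
```

Binding to 0.0.0.0 makes the server listen on all network interfaces, which is what allows it to be reached from outside a Docker container. When running locally, the app is available at http://localhost:5000.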

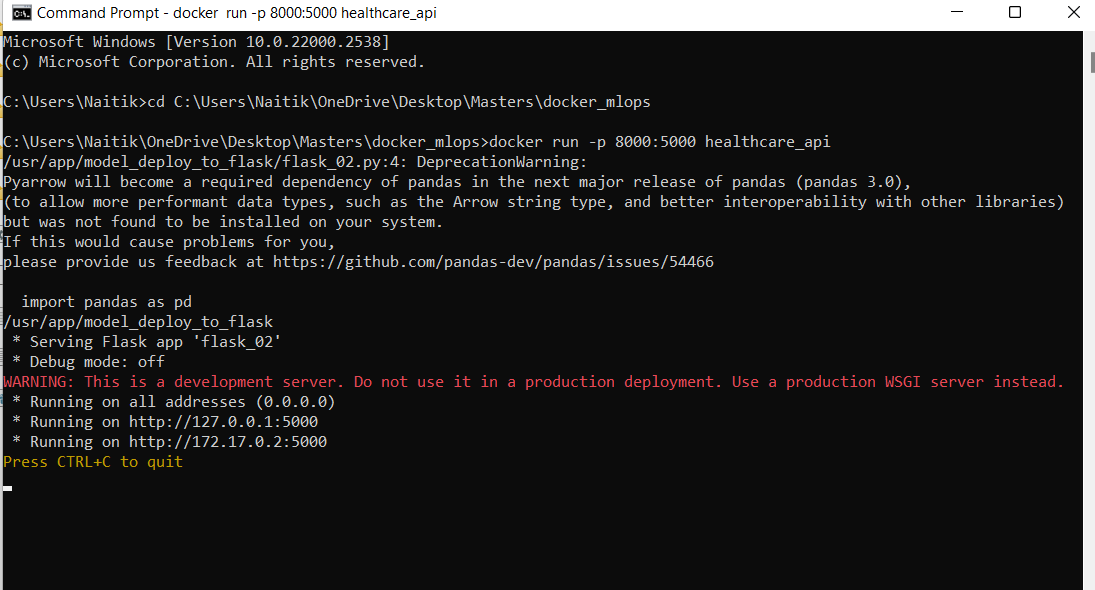
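A stripped-down version of the serving side could look like the following sketch. It is not flask_02.py: it leaves out the Swagger UI, and the /predict route, the {"features": [[...]]} request shape, and the model.pkl path are hypothetical. The app factory takes an already-loaded model, which keeps the route easy to test with Flask's test client.

```python
import pickle
from typing import Any

from flask import Flask, jsonify, request


def create_app(model: Any) -> Flask:
    """Build a Flask app that serves predictions from an already-loaded model."""
    app = Flask(__name__)

    @app.route("/predict", methods=["POST"])  # hypothetical route
    def predict():
        payload = request.get_json(silent=True) or {}
        features = payload.get("features")
        if not isinstance(features, list) or not features:
            return jsonify({"error": "expected JSON body {'features': [[...], ...]}"}), 400
        predictions = model.predict(features)
        # NumPy arrays are not JSON-serialisable; convert them to plain lists.
        if hasattr(predictions, "tolist"):
            predictions = predictions.tolist()
        return jsonify({"predictions": list(predictions)})

    return app


def main(model_path: str = "model.pkl") -> None:
    """Load the pickled model once at startup and run the server."""
    with open(model_path, "rb") as f:
        model = pickle.load(f)
    # 0.0.0.0 makes the server reachable from outside the container.
    create_app(model).run(host="0.0.0.0", port=5000)


# To run as a script, add:  if __name__ == "__main__": main()
```

Keeping model loading in main() rather than inside the route means the pickle is read once at startup instead of on every request.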

The entire MLOps pipeline is containerized using Docker to ensure a consistent environment. Follow the instructions below to build and run the Docker container.

In the repository, you will find a Dockerfile that describes the steps to create the Docker image. Here’s a breakdown of the commands used in the Dockerfile:

- FROM

- The FROM command sets the base image for subsequent instructions. In this case, we use the official Python 3.12.4 slim image.

```dockerfile
FROM python:3.12.4-slim
```

- COPY

- The COPY command copies files or directories from the host file system into the Docker image.

```dockerfile
COPY . /usr/app/
```

- EXPOSE

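- The EXPOSE command informs Docker that the container listens on the specified network ports at runtime. It documents the port but does not publish it; publishing is done with the -p flag of docker run.

```dockerfile
EXPOSE 5000
```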

- WORKDIR

- The WORKDIR command sets the working directory inside the Docker image. Instructions that follow it, such as RUN and CMD, are executed from this directory.

```dockerfile
WORKDIR /usr/app/
```

- RUN

- The RUN command executes any commands in a new layer on top of the current image and commits the results.

```dockerfile
RUN pip install -r requirements.txt
```

- CMD

- The CMD command provides defaults for an executing container. It sets the command to run when the container starts.

```dockerfile
CMD python model_deploy_to_flask/flask_02.py
```

- Build the Docker Image

- Navigate to the root directory of the project where the Dockerfile is located.

```sh
docker build -t mlops-container .
```

- Run the Docker Container

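- Run the container from the image built in the previous step. The -p 8000:5000 flag maps port 8000 on the host to port 5000 in the container, so the app is reachable at http://localhost:8000.

```sh
docker run -p 8000:5000 mlops-container
```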
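Besides the Swagger UI, the running container can be called programmatically. The sketch below sends a prediction request using only the Python standard library. It assumes a hypothetical /predict endpoint that accepts a JSON body of the form {"features": [[...]]}; the function names are also hypothetical, so adjust the path and payload to whatever flask_02.py actually exposes.

```python
import json
import urllib.request
from typing import List


def build_predict_request(base_url: str, features: List[List[float]]) -> urllib.request.Request:
    """Build a POST request for a hypothetical /predict endpoint."""
    body = json.dumps({"features": features}).encode("utf-8")
    return urllib.request.Request(
        base_url.rstrip("/") + "/predict",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def predict(base_url: str, features: List[List[float]]) -> dict:
    """Send the request and decode the JSON response."""
    with urllib.request.urlopen(build_predict_request(base_url, features), timeout=10) as resp:
        return json.loads(resp.read().decode("utf-8"))


# With the container running (host port 8000 -> container port 5000):
# predict("http://localhost:8000", [[...feature values for one row...]])
```

Splitting request construction from sending keeps the payload format checkable without a live server.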

Distributed under the MIT License. See LICENSE.txt for more information.

Project Link: https://github.com/your_username/repo_name