NYC City Directory - OCR Scripts

Takes a directory containing scans of New York City Directories, and runs OCRopus — a set of open source OCR and document analysis tools — to extract text data from those scans.

The script in this repository reads a directory of image files, runs OCRopus using a model trained on NYC city directories, and produces an hOCR file containing detected text segments and their pixel position in the image.
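The shell function below pulls the line boxes out of an hOCR file so you can see what that output contains. In hOCR, each recognized line is an element with class `ocr_line`, and its `title` attribute holds `bbox x0 y0 x1 y1` in image pixels. The function name `hocr_lines` is hypothetical. It assumes markup like ocropus-hocr writes: one `ocr_line` span per line of the file, with plain text inside. It is a quick sed sketch, not a real HTML parser.

```sh
# hocr_lines (hypothetical helper): print "x0 y0 x1 y1 text" for every
# ocr_line span in the given hOCR files (or stdin).
# Assumes one ocr_line span per input line with plain text inside it;
# use a proper HTML parser for anything less regular.
hocr_lines() {
  sed -n "s/.*class=['\"]ocr_line['\"][^>]*title=['\"]bbox \([0-9]*\) \([0-9]*\) \([0-9]*\) \([0-9]*\)[^>]*>\(.*\)<\/span>.*/\1 \2 \3 \4 \5/p" "$@"
}
```

For example, `hocr_lines /path/to/images/hocr.html | head` shows the first few detected lines with their positions.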

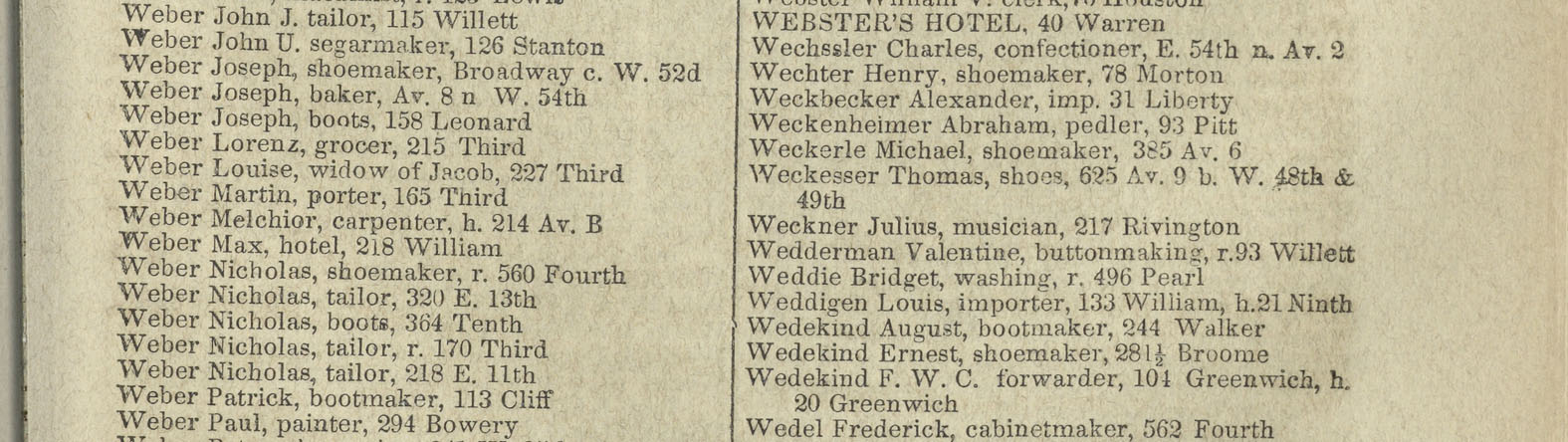

While City Directories can contain different types of pages (e.g. advertisements, tables of street names), this script focuses on extracting data from the two-column pages listing people with their professions and addresses (see the example above).

For more example pages, see the example-pages directory.

NYC Space/Time Directory

NYPL's NYC Space/Time Directory project aims to show the history of NYC's businesses and professions on a map. The workflow:

- Make high-res scans of all volumes of City Directories

- Use this script to extract text data from those scans

- Detect columns and indented text and create a file containing all relevant lines — see https://github.com/nypl-spacetime/hocr-detect-columns

- Use conditional random fields to extract the name, profession and address fields from those lines; see https://github.com/nypl-spacetime/crf-classify

OCRopus

This script uses OCRopus (or ocropy? There seems to be no agreement on the name...) for binarization, page segmentation and OCR. OCRopus does not seem to be under very active development, but once it is set up, it's easy to train and tweak. Maybe we should use Tesseract instead, one day.

Training data

The models directory contains models trained on manually transcribed text segments from our City Directory collections. See the "See also" section below for how to train OCRopus.
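Before training, it helps to check that every line image has a transcription. ocropy keeps the ground truth for a line image such as `0001/010001.bin.png` in a text file with the same base name, `0001/010001.gt.txt`, which is what its training tool (ocropus-rtrain) reads. The sketch below lists line images that still lack one; the function name `missing_gt` is hypothetical.

```sh
# missing_gt (hypothetical helper): print each line image (*.bin.png)
# that has no matching ground-truth file (*.gt.txt) next to it.
missing_gt() {
  for img in "$@"; do
    [ -f "$img" ] || continue          # skip glob patterns that matched nothing
    gt="${img%.bin.png}.gt.txt"        # 010001.bin.png -> 010001.gt.txt
    [ -f "$gt" ] || printf '%s\n' "$img"
  done
}
```

Usage, with a hypothetical `training` directory: `missing_gt training/????/*.bin.png`. Empty output means every line has a transcription.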

Installation

See https://github.com/tmbdev/ocropy#installing.

On my MacBook with Homebrew installed, this worked:

brew install hdf5
pip install --upgrade pip
pip install matplotlib
pip install lxml
git clone https://github.com/tmbdev/ocropy.git
cd ocropy
virtualenv ocropus_venv/
source ocropus_venv/bin/activate
pip install -r requirements_1.txt
pip install -r requirements_2.txt

To make the tools work with clang on OS X, follow the instructions in https://github.com/tmbdev/ocropy/blob/master/README_OSX.md

Afterwards, you can run the OCRopus tools (e.g. ocropus-nlbin, ocropus-gpageseg, ocropus-rpred, ocropus-hocr) from this virtual environment.
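One way to confirm the installation is to check that these four tools can be found. The sketch below assumes they are invoked by name, i.e. that they are on your PATH. If you run them as `./ocropus-nlbin` and so on from the ocropy checkout, check for the files there instead. `missing_tools` is a hypothetical name.

```sh
# missing_tools (hypothetical helper): print the name of each OCRopus
# tool used by this pipeline that cannot be found on PATH.
missing_tools() {
  for tool in ocropus-nlbin ocropus-gpageseg ocropus-rpred ocropus-hocr; do
    command -v "$tool" >/dev/null 2>&1 || printf '%s\n' "$tool"
  done
}
```

For example: `missing=$(missing_tools); [ -z "$missing" ] || echo "Not on PATH: $missing"`.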

Usage

cd ocropy
source ocropus_venv/bin/activate
/path/to/ocr-scripts-dir/run.sh /path/to/images/*.tif

run.sh writes hocr.html to /path/to/images; OCRopus' intermediate output files are kept in /path/to/images/temp.
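The following is not the actual run.sh. It is a rough sketch of how the OCRopus tools listed under Installation can be chained to get from page scans to hocr.html, following the invocation pattern in ocropy's README and the temp/hocr.html layout described above. `ocr_pipeline`, `run` and the `DRY_RUN` switch are hypothetical, and the model path is an assumption: point it at one of the models in the models directory.

```sh
#!/bin/sh
# Hypothetical sketch of an OCRopus pipeline like the one run.sh runs
# (NOT the actual run.sh). Set DRY_RUN=1 to print the commands instead
# of executing them.
set -e

# run: execute a command, or only print it when DRY_RUN is set
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# ocr_pipeline MODEL IMAGE...
# MODEL is assumed to be a trained .pyrnn.gz file; all images are assumed
# to live in the same directory.
ocr_pipeline() {
  if [ "$#" -lt 2 ]; then
    echo "usage: ocr_pipeline MODEL IMAGE..." >&2
    return 1
  fi
  model=$1
  shift
  images_dir=$(dirname "$1")
  temp="$images_dir/temp"

  # 1. Binarize each page: temp/0001.bin.png, temp/0002.bin.png, ...
  run ocropus-nlbin -o "$temp" "$@"
  # 2. Split each page into line images: temp/0001/010001.bin.png, ...
  #    (patterns stay quoted; the OCRopus tools expand them themselves)
  run ocropus-gpageseg "$temp/????.bin.png"
  # 3. Recognize every line with the model, writing a .txt per line image
  run ocropus-rpred -m "$model" "$temp/????/??????.bin.png"
  # 4. Merge pages, line boxes and text into a single hOCR file
  run ocropus-hocr "$temp/????.bin.png" -o "$images_dir/hocr.html"
}

# Example (model file name is hypothetical):
# ocr_pipeline /path/to/ocr-scripts-dir/models/MODEL.pyrnn.gz /path/to/images/*.tif
```

Keeping every step behind `run` makes it easy to inspect the exact commands with `DRY_RUN=1` before spending time on a full volume.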

See also

The blog of Dan Vanderkam (the creator of OldNYC) has a few posts dedicated to using and training OCRopus: