Face recognition system using MTCNN, FaceNet, SVM, and FastAPI to track the participants of Big Brother Brasil in real time.

Install the dependencies listed in `requirements.txt`:

    pip install -r requirements.txt

To use the project successfully, follow the steps below.

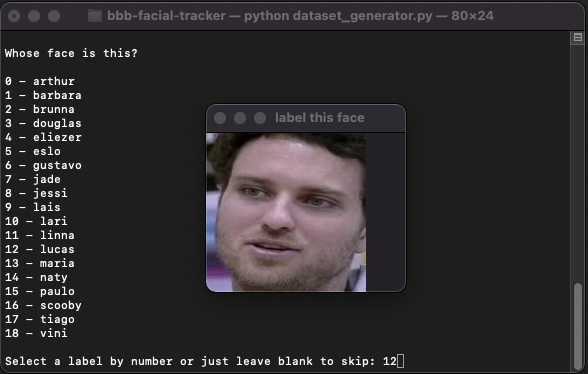

First, build a dataset with the `dataset_generator.py` script.

This script builds a dataset with train and validation directories from your own labeling of real-time cam frames captured from the reality show.

When executed, it creates a directory in the `src` folder with the following structure:

    dataset
    ├── train
    │   ├── label1
    │   ├── label2
    │   ├── label3
    │   └── ...
    └── val
        ├── label1
        ├── label2
        ├── label3
        └── ...

You can then populate the training dataset.

To populate the validation dataset instead, pass `-val` as the first command-line argument.

As shown in the screenshot below, enter the label number that matches the displayed face, and repeat this process until you have enough data.

For each label entered, the `.jpg` image is automatically stored in the respective dataset directory.

If you don't recognize the displayed face, leave the input blank to skip it.
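The labeling flow above can be sketched in Python as follows. This is a minimal sketch, not the code of `dataset_generator.py`: the `LABELS` list, the 1-based label numbering, the timestamp file names, and the helper names `select_split`, `resolve_label`, `destination_path`, and `save_jpeg` are all hypothetical. It shows how `-val` selects the split, how a blank input skips a face, and where each `.jpg` lands in the directory tree.

```python
from datetime import datetime
from pathlib import Path
from typing import Optional, Sequence

# Hypothetical labels; the real script uses whichever participants you label.
LABELS = ["arthur", "eliezer", "scooby"]


def select_split(argv: Sequence[str]) -> str:
    """Return "val" when -val is the first command-line argument, else "train"."""
    return "val" if len(argv) > 1 and argv[1] == "-val" else "train"


def resolve_label(user_input: str, labels: Sequence[str]) -> Optional[str]:
    """Map a typed label number (assumed 1-based) to a label name.

    A blank input means "skip this face" and returns None.
    """
    text = user_input.strip()
    if not text:
        return None
    index = int(text) - 1
    if not 0 <= index < len(labels):
        raise ValueError(f"label number must be between 1 and {len(labels)}")
    return labels[index]


def destination_path(src_dir: Path, split: str, label: str, now: datetime) -> Path:
    """Build src/dataset/<split>/<label>/<timestamp>.jpg (naming scheme assumed)."""
    return src_dir / "dataset" / split / label / f"{now:%Y%m%d_%H%M%S_%f}.jpg"


def save_jpeg(path: Path, jpeg_bytes: bytes) -> None:
    """Create the label folder on first use and write the encoded image."""
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_bytes(jpeg_bytes)
```

For example, with `split = select_split(sys.argv)`, typing `2` resolves to `"eliezer"` via `resolve_label("2", LABELS)`, while a blank input returns `None` and the face is skipped.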

Next, generate a model with the `model_generator.py` script.

Upon successful execution, the accuracy and confusion matrix for both the training and validation sets are displayed, and a directory is created in the `src` folder with the following structure:

    model_files
    ├── label_encoder.joblib
    ├── metrics.txt
    └── model.joblib

These `.joblib` files are loaded by `face_predictor.py` to apply the generated model.
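The training and prediction steps can be sketched with scikit-learn and joblib. This is a minimal sketch under stated assumptions, not the code of `model_generator.py` or `face_predictor.py`: it assumes the MTCNN face crops have already been converted into FaceNet embedding vectors, and the L2 normalization, the linear `SVC` kernel, the `metrics.txt` layout, and the helper names `train_and_save` and `predict_face` are illustrative choices. It fits the SVM, reports accuracy and confusion matrices for both splits, writes the three files of `model_files`, then reloads them to predict a label with a percentage probability, which appears to be the scale of the `probability` field in the API response.

```python
from pathlib import Path

import joblib
import numpy as np
from sklearn.metrics import accuracy_score, confusion_matrix
from sklearn.preprocessing import LabelEncoder, normalize
from sklearn.svm import SVC


def train_and_save(train_x, train_y, val_x, val_y, out_dir: Path) -> dict:
    """Fit an SVM on face embeddings and write the model_files artifacts."""
    # L2-normalize embeddings (a common choice for FaceNet vectors; assumed here).
    train_x = normalize(np.asarray(train_x), norm="l2")
    val_x = normalize(np.asarray(val_x), norm="l2")

    # Encode participant names as integer classes for the SVM.
    encoder = LabelEncoder().fit(train_y)
    y_train, y_val = encoder.transform(train_y), encoder.transform(val_y)

    # probability=True enables predict_proba for confidence scores.
    model = SVC(kernel="linear", probability=True, random_state=0)
    model.fit(train_x, y_train)

    metrics = {}
    for split, x, y in (("train", train_x, y_train), ("val", val_x, y_val)):
        pred = model.predict(x)
        metrics[split] = {
            "accuracy": accuracy_score(y, pred),
            "confusion_matrix": confusion_matrix(y, pred),
        }
        print(f"{split} accuracy: {metrics[split]['accuracy']:.4f}")
        print(metrics[split]["confusion_matrix"])

    # Persist the model, the label encoder, and a plain-text metrics report.
    out_dir.mkdir(parents=True, exist_ok=True)
    joblib.dump(model, out_dir / "model.joblib")
    joblib.dump(encoder, out_dir / "label_encoder.joblib")
    with open(out_dir / "metrics.txt", "w") as fh:
        for split, m in metrics.items():
            fh.write(f"{split} accuracy: {m['accuracy']:.4f}\n{m['confusion_matrix']}\n")
    return metrics


def predict_face(embedding, out_dir: Path) -> dict:
    """Reload the saved artifacts and return the best label with a % probability."""
    model = joblib.load(out_dir / "model.joblib")
    encoder = joblib.load(out_dir / "label_encoder.joblib")
    x = normalize(np.asarray(embedding, dtype=float).reshape(1, -1), norm="l2")
    probs = model.predict_proba(x)[0]  # columns follow model.classes_
    best = int(np.argmax(probs))
    label = encoder.inverse_transform([model.classes_[best]])[0]
    return {"label": str(label), "probability": float(probs[best]) * 100}
```

Setting `probability=True` makes `SVC` fit an internal Platt-scaling calibration with cross-validation, which slows training and means the `predict_proba` argmax can occasionally disagree with `predict`.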

Finally, start the API.

For development, run the live-reloading server with the commands below:

    cd src
    uvicorn api:app --reload

Upon a successful run, open http://127.0.0.1:8000/cams in your browser to get a JSON response listing the cams and the faces recognized in each one, like the example below:

    [
      {
        "name": "BBB 22 - Câmera 1",
        "location": "Acompanhe a Casa",
        "snapshot_link": "https://live-thumbs.video.globo.com/bbb01/snapshot/",
        "slug": "bbb-22-camera-1",
        "media_id": "244881",
        "stream_link": "https://globoplay.globo.com/bbb-22-camera-1/ao-vivo/244881/?category=bbb",
        "recognized_faces": [
          {
            "label": "arthur",
            "probability": 64.19885945991763,
            "coordinates": {
              "topLeft": [118, 45],
              "bottomRight": [240, 199]
            }
          },
          {
            "label": "eliezer",
            "probability": 39.81395352766756,
            "coordinates": {
              "topLeft": [380, 53],
              "bottomRight": [460, 152]
            }
          },
          {
            "label": "scooby",
            "probability": 37.971779438946054,
            "coordinates": {
              "topLeft": [195, 83],
              "bottomRight": [404, 358]
            }
          }
        ],
        "scrape_timestamp": "2022-03-01T22:24:41.989674",
        "frame_timestamp": "2022-03-01T22:24:42.307244"
      },
      ...
    ]

To see all available routes, open the documentation that FastAPI auto-generates with Swagger UI (by default at http://127.0.0.1:8000/docs).

For more details, see the FastAPI documentation.
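A client can consume the `/cams` response with nothing but the Python standard library. The sketch below is illustrative: the helper names `fetch_cams`, `cams_showing`, and `box_size` are hypothetical, and it relies only on the fields shown in the example response above (`name`, `recognized_faces`, `label`, `probability`, `coordinates`). It lists the cams where a given participant was recognized, best match first, and computes each face box size from its `topLeft`/`bottomRight` pixel corners.

```python
import json
from urllib.request import urlopen

# Local development server started with uvicorn as described above.
API_URL = "http://127.0.0.1:8000/cams"


def fetch_cams(url: str = API_URL) -> list:
    """GET /cams and decode the JSON list of cams."""
    with urlopen(url, timeout=30) as response:
        return json.load(response)


def cams_showing(cams: list, label: str, min_probability: float = 0.0) -> list:
    """Return (cam name, probability) pairs for one participant, best match first."""
    hits = []
    for cam in cams:
        for face in cam.get("recognized_faces", []):
            if face["label"] == label and face["probability"] >= min_probability:
                hits.append((cam["name"], face["probability"]))
    return sorted(hits, key=lambda hit: hit[1], reverse=True)


def box_size(face: dict) -> tuple:
    """Width and height in pixels, from the topLeft/bottomRight corners."""
    (x1, y1) = face["coordinates"]["topLeft"]
    (x2, y2) = face["coordinates"]["bottomRight"]
    return x2 - x1, y2 - y1
```

With the server running, `cams_showing(fetch_cams(), "arthur", min_probability=50)` returns the cams where `arthur` was recognized with at least 50% probability.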

To visualize the frames and face recognition in real time, set `VISUALIZATION_ENABLED` to `True` in the `api.py` file (for development only). Each cam frame will then be displayed like the first screenshot.

Planned improvements:

- `cam_scraper.py`: upgrade `scrape_cam_frame()` to get a high-definition cam frame.
- `api.py`: return the cam list by label, based on probability.
- `api.py`: use a database to store historical data.
- `face_predictor.py`: predict emotions.