If you’re using any data collected by this tool, consider switching to alternatives like BigQuery Information Schema or Google Cloud Asset Inventory for efficient BigQuery metadata management.

GAE python based app which regularly collects metadata about BigQuery tables and stores it in BigQuery.

GCP Census was created to answer the following questions:

- How much data we have in the whole GCP organisation?

- How many tables/partitions do we have?

- Which datasets/tables are the largest or most expensive?

- How often tables/partitions are updated over time?

- How our datasets/tables/partitions are growing over time?

- Which tables/datasets are stored in specific location?

Now every question above can be easily answered by querying metadata tables in BigQuery or looking at our dashboard created in Google Data Studio.

- Count all data to which GCP Census has access

SELECT sum(numBytes) FROM `YOUR-PROJECT-ID.bigquery_views.table_metadata_v1_0`- Count all tables and partitions

SELECT count(*) FROM `YOUR-PROJECT-ID.bigquery_views.table_metadata_v1_0`

SELECT count(*) FROM `YOUR-PROJECT-ID.bigquery_views.partition_metadata_v1_0`- Select top 100 largest datasets

SELECT projectId, datasetId, sum(numBytes) as totalNumBytes

FROM `YOUR-PROJECT-ID.bigquery_views.table_metadata_v1_0`

GROUP BY projectId, datasetId ORDER BY totalNumBytes DESC LIMIT 100- Select top 100 largest tables

SELECT projectId, datasetId, tableId, numBytes

FROM `YOUR-PROJECT-ID.bigquery_views.table_metadata_v1_0`

ORDER BY numBytes DESC LIMIT 100- Select top 100 largest partitions

SELECT projectId, datasetId, tableId, partitionId, numBytes

FROM `YOUR-PROJECT-ID.bigquery_views.partition_metadata_v1_0`

ORDER BY numBytes DESC LIMIT 100- Provide cost estimation for the top 100 largest datasets

SELECT * FROM `YOUR-PROJECT-ID.bigquery_views.datasets_cost_estimation_v1_0`

ORDER BY estimatedMonthlyCostInUSD DESC LIMIT 100Views with costs are only estimation because:

- monthly cost is estimated based on current data (i.e. if you created large table today, estimation will show it's costs for the whole month),

- snapshots are done daily and don't include temporary tables created and deleted between snapshots,

- deduplication views summarises all data from the last 2 days (i.e. it includes table deleted yesterday),

- estimation costs doesn't include free 10 GB per month as Census doesn't know how many billing accounts are used.

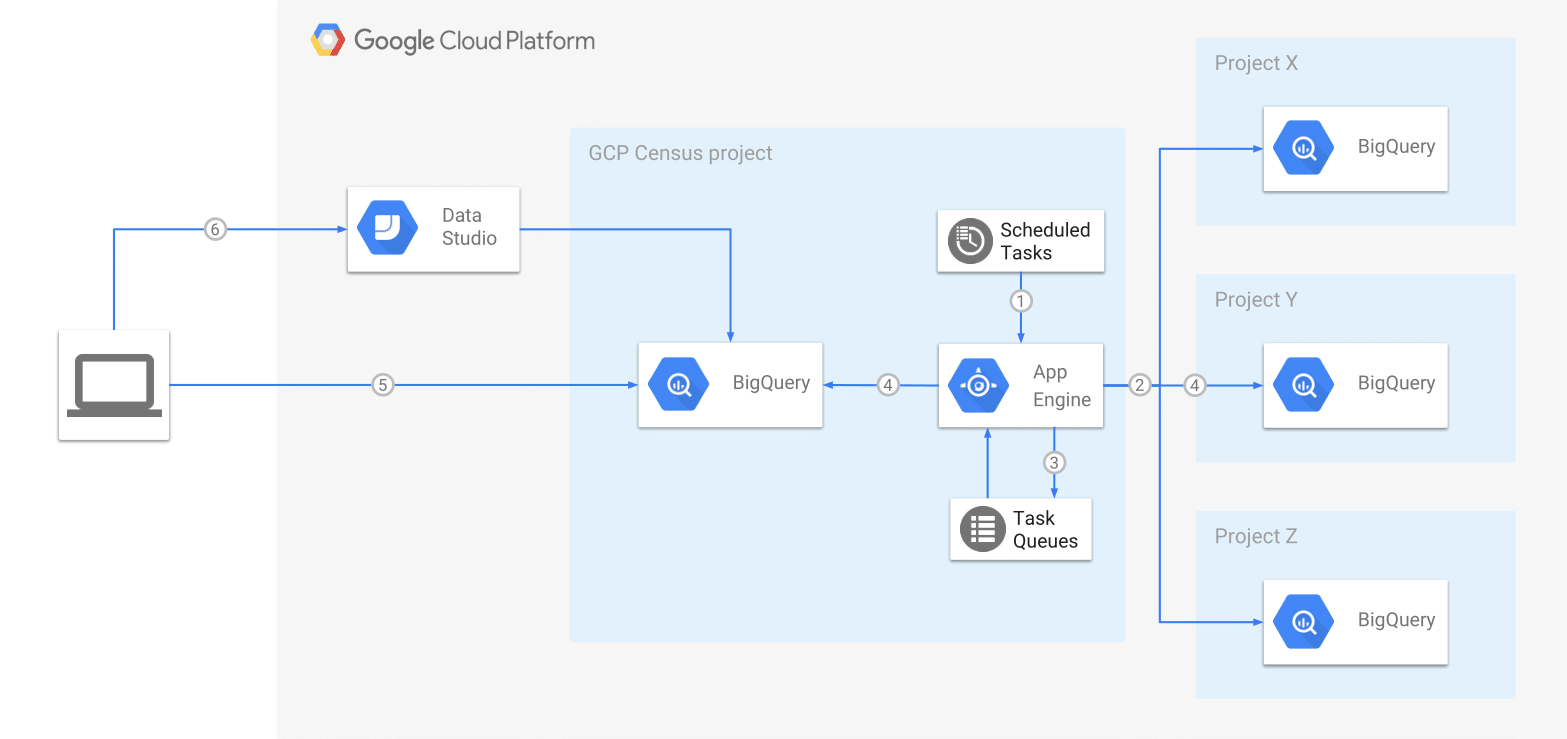

GCP Census retrieves BigQuery metadata using REST API:

- Daily run is triggered by GAE cron (see cron.yaml for exact details)

- GCP Census iterates over all projects/datasets/tables to which it has access

- A task is created for each table and queued for execution in GAE Task Queue

- Task worker retrieves Table metadata and streams it into bigquery.table_metadata table. In case of partitioned tables, GCP Census retrieves also partitions summary by querying the partitioned table and stores metadata in bigquery.partition_metadata table

- User can query metadata using BigQuery UI/API

- Optionally you can create a Data Studio dashboard based on metadata

GCP Census will retrieve metadata of tables it has read access to, which means the scope is derived from GCP IAM settings.

- Create GCP project and assign billing to it

- Clone GCP Census repository

- Specify metadata output BigQuery location in app.yaml (defaults to 'EU')

- Install dependencies (ideally using virtualenv):

pip install -r requirements.txt pip install -t lib -r requirements.txt - Deploy to App Engine using gcloud CLI tool:

gcloud app deploy --project YOUR-PROJECT-ID -v v1 app.yaml config/cron.yaml config/queue.yaml - Grant bigquery.dataViewer role to YOUR-PROJECT-ID@appspot.gserviceaccount.com service account at GCP organisation, folder or selected projects level.

- Enable BigQuery in the project. BigQuery is automatically enabled in new projects. To activate it in a pre-existing project, enable the BigQuery API.

- GCP Census job will be triggered daily by cron, see cron.yaml for exact details

- Optionally you can manually trigger cron jobs in the Cloud Console:

- run

/createModelsto create BigQuery dataset and table - run

/bigQueryto start collecting BigQuery metadata

- run

GCP Census endpoints are accessible only for GAE Administrators, i.e. all endpoints are secured with login: admin in app.yaml. Still, anyone may attempt to access your app and will be redirected to Google Account login page.

That's why we strongly recommend enabling GAE Firewall on your project. You can enable it with three simple gcloud commands:

gcloud app firewall-rules create 500 --action allow --source-range 0.1.0.1 --description "Allow GAE cron" --project YOUR-PROJECT-ID

gcloud app firewall-rules create 510 --action allow --source-range 0.1.0.2 --description "Allow GAE tasks" --project YOUR-PROJECT-ID

gcloud app firewall-rules update default --action deny --project YOUR-PROJECT-ID

You can find all development setup/steps in .travis.yml

Subscribe or post to gcp-census-discuss@googlegroups.com to follow along and ask questions about the GCP Census.

Feel free to submit feature requests and bug reports under Issues.

If you would like to contribute to our development efforts, please review our contributing guidelines and submit a pull request.