This repository contains the implementation of OctFormer. The code is released under the MIT license. The code has been awarded the Replicability Stamp by the Graphics Replicability Stamp Initiative.

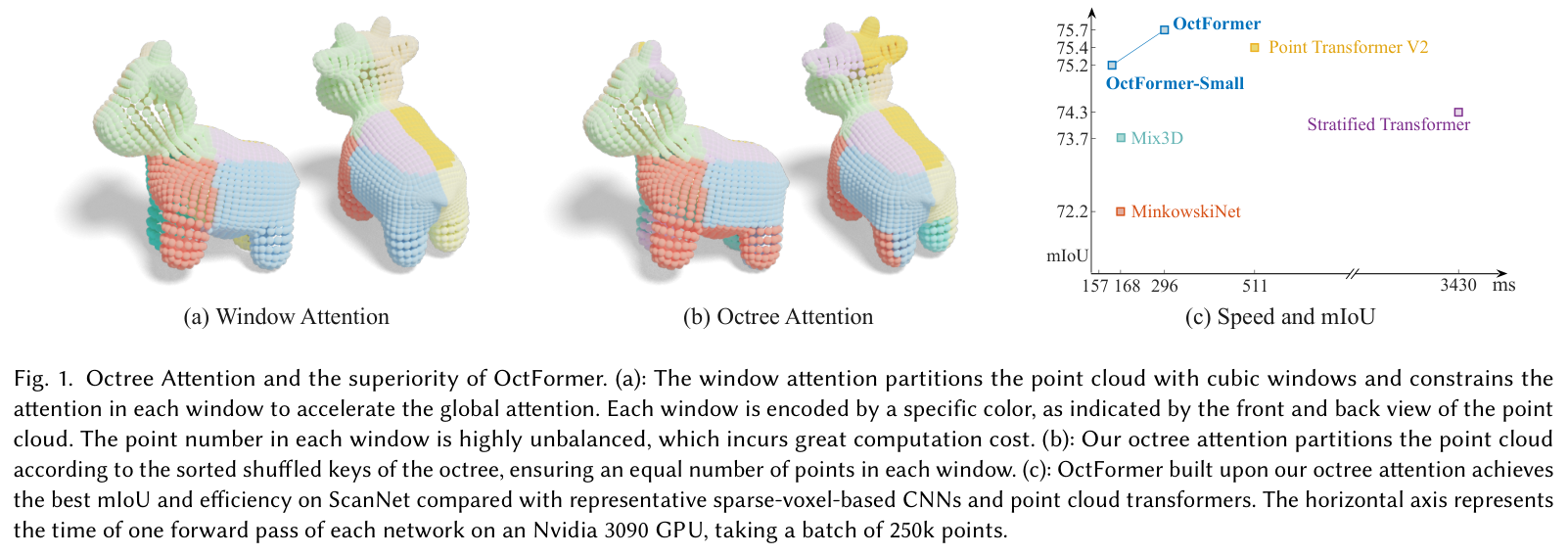

OctFormer: Octree-based Transformers for 3D Point Clouds

Peng-Shuai Wang

ACM Transactions on Graphics (SIGGRAPH), 42(4), 2023

The code has been tested on Ubuntu 20.04 with 4 Nvidia 3090 GPUs (24GB memory).

- Install Conda and create a Conda environment.

conda create --name octformer python=3.8
conda activate octformer

- Install PyTorch-1.12.1 with conda according to the official documentation.

conda install pytorch==1.12.1 torchvision torchaudio cudatoolkit=11.3 -c pytorch

- Clone this repository and install the requirements.

git clone https://github.com/octree-nn/octformer.git
cd octformer
pip install -r requirements.txt

- Install the library for octree-based depthwise convolution.

git clone https://github.com/octree-nn/dwconv.git
pip install ./dwconv

- To run the detection experiments, mmdetection3d is required. The code has been tested with mmdet3d==1.0.0rc5. Run the following commands to install it. For detailed instructions, please refer to the official documentation. Make sure the results of FCAF3D can be reproduced before running the experiments.

pip install openmim==0.3.3
mim install mmcv-full==1.6.2
mim install mmdet==2.26.0
mim install mmsegmentation==0.29.1
git clone https://github.com/open-mmlab/mmdetection3d.git
cd mmdetection3d
git checkout v1.0.0rc5
pip install -e .
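Because the steps above pin several exact versions, a quick check of what is actually installed can save debugging time later. The following is a minimal sketch, not part of the repository: the helper names `version_matches` and `check_pins` are hypothetical, and it assumes each package is visible to `importlib.metadata` under the distribution name shown (for example `torch` for a conda-installed PyTorch), which may not hold in every environment.

```python
from importlib import metadata

# Versions pinned by the installation steps above.
# The distribution names are assumptions about how each package registers itself.
PINNED = {
    "torch": "1.12.1",
    "openmim": "0.3.3",
    "mmcv-full": "1.6.2",
    "mmdet": "2.26.0",
    "mmsegmentation": "0.29.1",
    "mmdet3d": "1.0.0rc5",
}


def version_matches(installed: str, expected: str) -> bool:
    """Compare versions, ignoring a local build suffix such as '+cu113'."""
    return installed.split("+", 1)[0] == expected


def check_pins(pins=PINNED):
    """Return {package: (installed_or_None, expected, ok)} for each pinned package."""
    report = {}
    for name, expected in pins.items():
        try:
            installed = metadata.version(name)
        except metadata.PackageNotFoundError:
            installed = None  # package is not installed in this environment
        ok = installed is not None and version_matches(installed, expected)
        report[name] = (installed, expected, ok)
    return report


if __name__ == "__main__":
    for name, (installed, expected, ok) in check_pins().items():
        status = "ok" if ok else "MISMATCH"
        print(f"{name:16s} installed={installed} expected={expected} [{status}]")
```

Running the script inside the `octformer` environment prints one line per package; any `MISMATCH` line points at a step worth repeating.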

- Data: Download the data from the ScanNet benchmark. Unzip the data and place it in the folder <scannet_folder>. Run the following command to prepare the dataset.

python tools/seg_scannet.py --run process_scannet --path_in <scannet_folder>

- Train: Run the following command to train the network with 4 GPUs and port 10001. The mIoU on the validation set without voting is 74.8. Training takes less than 16 hours on 4 Nvidia 3090 GPUs. The training log and weights can be downloaded here.

python scripts/run_seg_scannet.py --gpu 0,1,2,3 --alias scannet --port 10001

- Evaluate: Run the following command to get the per-point predictions for the validation dataset with a voting strategy. After voting, the mIoU is 76.3 on the validation dataset.

python scripts/run_seg_scannet.py --gpu 0 --alias scannet --run validate
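The training command in this section passes `--port 10001` for multi-GPU training. If another job on the same machine already holds that port, the launch is likely to fail. The sketch below is not part of the repository: `port_is_free` and `first_free_port` are hypothetical helpers that use only the standard `socket` module to check the port beforehand, assuming the port is bound on the local machine.

```python
import socket


def port_is_free(port: int, host: str = "127.0.0.1") -> bool:
    """Return True if a TCP socket can be bound to (host, port) right now."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        try:
            sock.bind((host, port))
        except OSError:
            return False  # typically "address already in use"
    return True


def first_free_port(start: int = 10001, attempts: int = 100) -> int:
    """Return the first free port in [start, start + attempts)."""
    for port in range(start, start + attempts):
        if port_is_free(port):
            return port
    raise RuntimeError(f"no free port found in {start}..{start + attempts - 1}")


if __name__ == "__main__":
    print("suggested --port value:", first_free_port(10001))
```

The printed value can then be passed to the training script in place of `10001`. The check is only a snapshot: another process could still claim the port between the check and the launch.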

- Data: Download the data from the ScanNet benchmark. Unzip the data and place it in the folder <scannet_folder>. Run the following command to prepare the ScanNet200 dataset.

python tools/seg_scannet.py --run process_scannet --path_in <scannet_folder> \
    --path_out data/scanet200.npz --align_axis --scannet200

- Train: Run the following command to train the network with 4 GPUs. The mIoU on the validation set without voting is 31.7. The training log and weights can be downloaded here. With OctFormer-Large, the mIoU increases to 32.2.

python scripts/run_seg_scannet200.py --gpu 0,1,2,3 --alias scannet200

- Evaluate: Run the following command to get the per-point predictions for the validation dataset with a voting strategy. After voting, the mIoU is 32.6 on the validation dataset.

python scripts/run_seg_scannet200.py --gpu 0 --alias scannet200 --run validate
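The segmentation results above are reported as mIoU with and without voting. As a rough illustration of the two terms, the sketch below assumes that voting means averaging per-point class scores over several forward passes before taking the argmax; the repository's actual voting strategy may differ. The functions `vote` and `mean_iou` are hypothetical, written in plain Python for clarity rather than speed.

```python
def vote(score_runs):
    """Average per-point class scores over several runs, then take the argmax.

    score_runs[r][p][c] is the score of class c for point p in run r.
    """
    num_runs = len(score_runs)
    num_points = len(score_runs[0])
    predictions = []
    for p in range(num_points):
        num_classes = len(score_runs[0][p])
        mean = [sum(run[p][c] for run in score_runs) / num_runs
                for c in range(num_classes)]
        predictions.append(max(range(num_classes), key=mean.__getitem__))
    return predictions


def mean_iou(pred, target, num_classes, ignore_label=-1):
    """Mean intersection-over-union, averaged over classes that occur at all."""
    inter = [0] * num_classes
    union = [0] * num_classes
    for p, t in zip(pred, target):
        if t == ignore_label:
            continue  # unlabeled points do not count
        if p == t:
            inter[t] += 1
            union[t] += 1
        else:
            union[p] += 1  # point belongs to the predicted set of class p
            union[t] += 1  # and to the ground-truth set of class t
    ious = [i / u for i, u in zip(inter, union) if u > 0]
    return sum(ious) / len(ious) if ious else 0.0


if __name__ == "__main__":
    run1 = [[0.6, 0.4], [0.3, 0.7], [0.8, 0.2]]
    run2 = [[0.2, 0.8], [0.4, 0.6], [0.9, 0.1]]
    pred = vote([run1, run2])
    print(pred, mean_iou(pred, [1, 0, 0], num_classes=2))
```

In this toy example the first point is classified as class 0 by the first run alone, but the averaged scores favor class 1, which is the effect voting is meant to capture. The value returned by `mean_iou` is a fraction in [0, 1], whereas the numbers quoted above are percentages.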

- Data: Prepare the data according to the official documentation of mmdetection3d. Denote the path to the data as <sunrgbd_folder>. Run the following command to build a symbolic link to the data.

ln -s <sunrgbd_folder> data/sunrgbd

- Training: Run the following command to train the network with 4 GPUs. The maximum mAP@0.25 and mAP@0.5 on the validation set are 66.6 and 50.6, respectively. The training log and weights can be downloaded here.

CUDA_VISIBLE_DEVICES=0,1,2,3 python -m torch.distributed.launch --nproc_per_node=4 \
    --master_port=29000 detection.py configs/det_sunrgbd.py --launcher=pytorch \
    --work-dir=logs/sunrgbd/octformer
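The thresholds in mAP@0.25 and mAP@0.5 refer to the 3D IoU a predicted box must reach with a ground-truth box to count as a correct detection. The sketch below illustrates the idea under a simplifying assumption: boxes are axis-aligned and given as `(xmin, ymin, zmin, xmax, ymax, zmax)`. Evaluation in mmdetection3d uses its own routines, and SUN RGB-D boxes may carry a rotation, so `box_iou_3d` is a hypothetical helper for intuition only.

```python
def box_iou_3d(a, b):
    """IoU of two axis-aligned 3D boxes (xmin, ymin, zmin, xmax, ymax, zmax)."""
    inter = 1.0
    for axis in range(3):
        lo = max(a[axis], b[axis])
        hi = min(a[axis + 3], b[axis + 3])
        if hi <= lo:
            return 0.0  # no overlap along this axis
        inter *= hi - lo
    vol_a = (a[3] - a[0]) * (a[4] - a[1]) * (a[5] - a[2])
    vol_b = (b[3] - b[0]) * (b[4] - b[1]) * (b[5] - b[2])
    return inter / (vol_a + vol_b - inter)


if __name__ == "__main__":
    pred = (0.0, 0.0, 0.0, 2.0, 2.0, 2.0)
    gt = (1.0, 0.0, 0.0, 3.0, 2.0, 2.0)
    iou = box_iou_3d(pred, gt)
    print(f"IoU={iou:.3f}  hit@0.25={iou >= 0.25}  hit@0.5={iou >= 0.5}")
```

Here the two boxes overlap in half of their volume, giving an IoU of 1/3: the prediction counts as a match at the 0.25 threshold but not at 0.5, which is why mAP@0.5 is the stricter of the two numbers.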

- Data: Run the following command to prepare the dataset.

python tools/cls_modelnet.py

- Train: Run the following command to train the network with 1 GPU. The classification accuracy on the testing set without voting is 92.7%. The training log and weights can be downloaded here.

python classification.py --config configs/cls_m40.yaml SOLVER.gpu 0,
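The trailing comma in `SOLVER.gpu 0,` can look like a typo. One plausible reading, which the text above does not confirm, is that the configuration loader evaluates override values as Python literals, so that `0,` becomes a one-element tuple of GPU ids. The sketch below shows that behavior with the standard `ast` module; `parse_override` is a hypothetical helper, not the repository's parser.

```python
import ast


def parse_override(value: str):
    """Evaluate a command-line override as a Python literal, else keep the string.

    Hypothetical stand-in for a config loader; the real parser may differ.
    """
    try:
        return ast.literal_eval(value)
    except (ValueError, SyntaxError):
        return value


if __name__ == "__main__":
    for raw in ["0,", "0", "0,1,2,3", "scannet"]:
        print(repr(raw), "->", repr(parse_override(raw)))
```

Under this assumption, `0,` yields a tuple containing GPU 0, while a bare `0` would yield an integer, which a loader expecting a sequence of GPU ids might reject.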

@article{Wang2023OctFormer,
  title   = {OctFormer: Octree-based Transformers for {3D} Point Clouds},
  author  = {Wang, Peng-Shuai},
  journal = {ACM Transactions on Graphics (SIGGRAPH)},
  volume  = {42},
  number  = {4},
  year    = {2023},
}