A PyTorch implementation of TextSnake: A Flexible Representation for Detecting Text of Arbitrary Shapes (ECCV 2018) by Face++

- Paper link: arXiv:1807.01544

- Github: princewang1994/TextSnake.pytorch

- Blog: TextSnake: A Flexible Representation for Detecting Text of Arbitrary Shapes

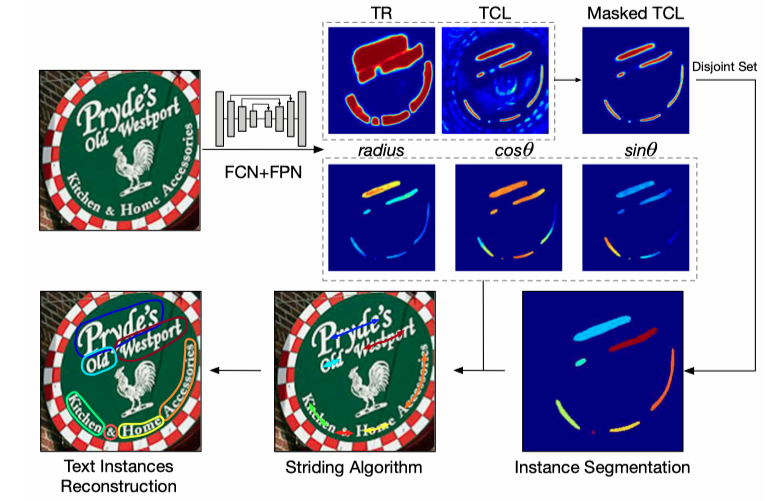

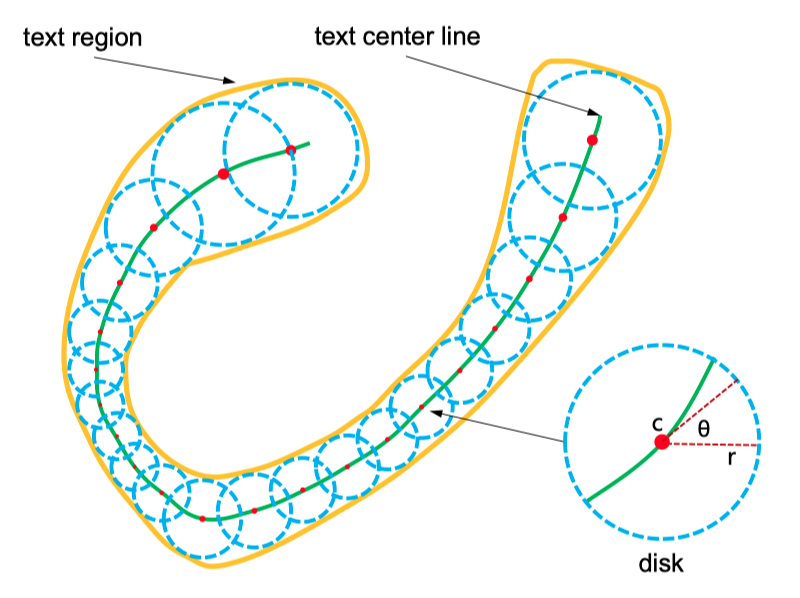

Comparison of different representations for text instances. (a) Axis-aligned rectangle. (b) Rotated rectangle. (c) Quadrangle. (d) TextSnake. The proposed TextSnake representation effectively and precisely describes the geometric properties (location, scale, and bending) of curved text with perspective distortion, while the other representations (axis-aligned rectangle, rotated rectangle, or quadrangle) struggle to give accurate predictions in such cases.

TextSnake elements:

- center point

- tangent line

- text region
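The three elements above can be made concrete with a small sketch. It follows the paper's description of a text instance as an ordered sequence of disks, each with a center, a radius, and an orientation. The `Disk` class and `disks_to_polygon` function are hypothetical names used only for illustration; they are not taken from this repository.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

Point = Tuple[float, float]


@dataclass
class Disk:
    """One element of the snake (hypothetical helper, not part of this repo)."""
    cx: float      # center point, x coordinate
    cy: float      # center point, y coordinate
    radius: float  # half of the local text height
    theta: float   # direction of the tangent line, in radians


def disks_to_polygon(disks: List[Disk]) -> List[Point]:
    """Trace the outline of the text region covered by an ordered disk sequence."""
    side_a, side_b = [], []
    for d in disks:
        # Unit normal to the tangent direction (cos(theta), sin(theta)).
        nx, ny = -math.sin(d.theta), math.cos(d.theta)
        side_a.append((d.cx + d.radius * nx, d.cy + d.radius * ny))
        side_b.append((d.cx - d.radius * nx, d.cy - d.radius * ny))
    # Walk along one side, then back along the other, to close the outline.
    return side_a + side_b[::-1]


if __name__ == "__main__":
    # A straight, horizontal "snake" made of three disks of radius 1.
    straight = [Disk(x, 0.0, 1.0, 0.0) for x in (0.0, 1.0, 2.0)]
    print(disks_to_polygon(straight))
```

For curved text, `theta` and `radius` change from disk to disk, so the same routine produces a bent outline that a rectangle or quadrangle cannot express.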

This code has the following features:

- includes complete training and inference code

- pure Python version, no extra compilation required

- compatible with the latest PyTorch version (written with PyTorch 0.4.0)

- support TotalText dataset

This repo includes the training code and an inference demo of TextSnake; training and inference can each be run with a few commands.

To run this repo successfully, the following setup is highly recommended:

- Linux (Ubuntu 16.04)

- Python 3.6

- Anaconda3

- NVIDIA GPU (with 8G or more GPU memory for training, 2G for inference)

(I haven't tested it on other Python versions.)
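A quick way to compare a machine against the recommendations above is a small check script. The following is a minimal sketch, not part of this repository: `check_environment` is a hypothetical helper, and it only reports what it finds rather than enforcing anything. PyTorch is imported lazily so the script still runs before the dependencies are installed.

```python
import sys


def check_environment(min_python=(3, 6)):
    """Summarise the local setup against the recommendations above."""
    report = {
        "python_ok": sys.version_info[:2] >= min_python,
        "torch_version": None,
        "cuda_available": False,
        "gpu_memory_gb": None,
    }
    try:
        import torch  # optional here: the report is still returned without it
    except ImportError:
        return report
    report["torch_version"] = torch.__version__
    report["cuda_available"] = torch.cuda.is_available()
    if report["cuda_available"]:
        # total_memory is reported in bytes for the first visible GPU.
        total_bytes = torch.cuda.get_device_properties(0).total_memory
        report["gpu_memory_gb"] = total_bytes / 1024 ** 3
    return report


if __name__ == "__main__":
    for key, value in check_environment().items():
        print(f"{key}: {value}")
```

Note that a card sold as "8G" usually reports slightly less than 8.0 here, so the number is best read as a rough guide.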

- clone this repository

$ git clone https://github.com/princewang1994/TextSnake.pytorch.git

- Python packages can be installed with pip

$ cd $TEXTSNAKE

$ pip install -r requirements.txt

- Total-Text dataset: follow the total_text/README.md

Train a model with a given experiment name $EXPNAME:

$ EXPNAME=example

$ CUDA_VISIBLE_DEVICES=$GPUID python train.py $EXPNAME --viz

options:

- exp_name: experiment name, used to identify different training processes

- --viz: visualization toggle; output pictures are saved to './vis' by default

Other options can be shown by running python train.py -h
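For readers unfamiliar with this command-line style, here is a minimal sketch of how a positional experiment name and a `--viz` toggle could be declared with `argparse`. It is an illustration only and not the repository's actual option code: `build_parser` and the `--vis_dir` flag are hypothetical, and the authoritative list of options is the output of `python train.py -h`.

```python
import argparse


def build_parser():
    # Hypothetical parser for illustration; run `python train.py -h` for the real options.
    parser = argparse.ArgumentParser(description="TextSnake training (sketch)")
    parser.add_argument(
        "exp_name", type=str,
        help="experiment name, used to identify different training processes",
    )
    parser.add_argument(
        "--viz", action="store_true",
        help="visualization toggle",
    )
    parser.add_argument(
        "--vis_dir", type=str, default="./vis",
        help="where pictures are saved (hypothetical flag name)",
    )
    return parser


if __name__ == "__main__":
    # Equivalent to: python train.py example --viz
    args = build_parser().parse_args(["example", "--viz"])
    print(args.exp_name, args.viz, args.vis_dir)
```

Using `action="store_true"` means visualization stays off unless `--viz` is passed explicitly, which matches the toggle behaviour described above.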

Running the following command generates a demo on the TotalText dataset (300 pictures); the results are saved to ./vis by default:

$ EXPNAME=example

$ CUDA_VISIBLE_DEVICES=$GPUID python demo.py --checkepoch 190

options:

- exp_name: experiment name, used to identify different training processes

Other options can be shown by running python train.py -h

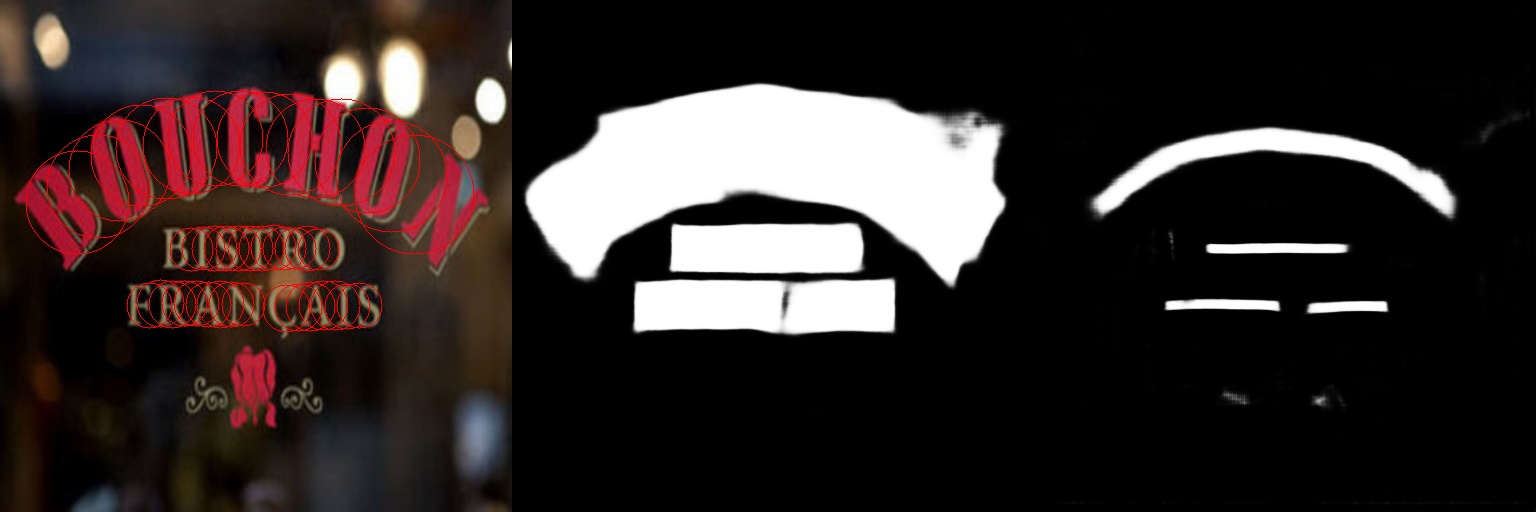

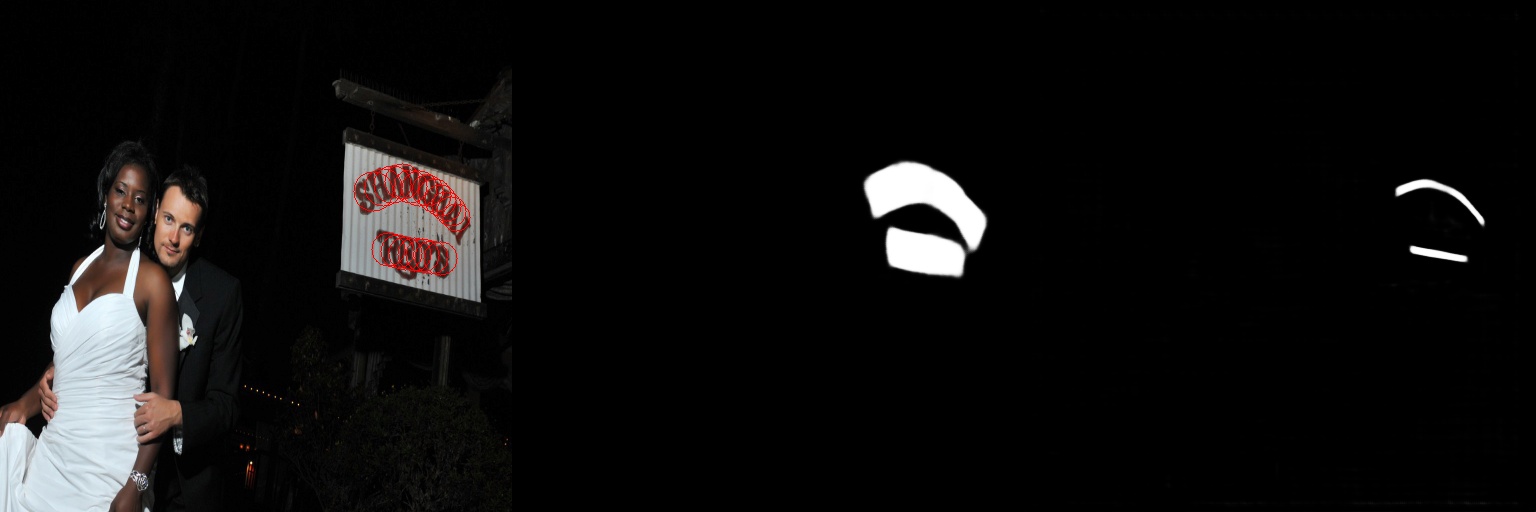

- left: prediction

- middle: text region (TR)

- right: text center line (TCL)
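The relationship between the TR and TCL maps shown above can be sketched in a few lines. As described in the paper, both probability maps are thresholded, and the TCL map is then masked by the TR map because the center line is by definition part of the text region. The function names and the threshold values below are placeholders chosen for illustration, not this repository's settings, and plain nested lists stand in for the arrays or tensors a real pipeline would use.

```python
def binarize(prob_map, threshold):
    """Turn a 2-D probability map (list of rows) into a boolean mask."""
    return [[p > threshold for p in row] for row in prob_map]


def mask_tcl_with_tr(tr_prob, tcl_prob, tr_thresh=0.5, tcl_thresh=0.5):
    """Threshold both maps, then keep TCL pixels only where TR is also set.

    The threshold defaults are placeholders (assumption), not tuned values.
    """
    tr_mask = binarize(tr_prob, tr_thresh)
    tcl_mask = binarize(tcl_prob, tcl_thresh)
    masked_tcl = [
        [tcl and tr for tcl, tr in zip(tcl_row, tr_row)]
        for tcl_row, tr_row in zip(tcl_mask, tr_mask)
    ]
    return tr_mask, masked_tcl


if __name__ == "__main__":
    tr_prob = [[0.9, 0.8, 0.1],
               [0.9, 0.9, 0.2]]
    tcl_prob = [[0.2, 0.7, 0.9],
                [0.1, 0.8, 0.1]]
    tr_mask, tcl_mask = mask_tcl_with_tr(tr_prob, tcl_prob)
    print(tr_mask)
    print(tcl_mask)
```

In the example, the top-right pixel has a high TCL score but lies outside the text region, so it is dropped from the masked TCL map.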

- Pretrained model upload (soon)

- More dataset support: [ICDAR15/SynthText]

- Metric computing

- Accelerate core functions with Cython/C++

This project is licensed under the MIT License - see the LICENSE.md file for details

This project is written by Prince Wang; parts of the code refer to songdejia/EAST