We received the Best Poster Prize at ICVSS 2019.

This is the official website of our work 3D Appearance Super-Resolution with Deep Learning (arxiv), published at CVPR 2019.

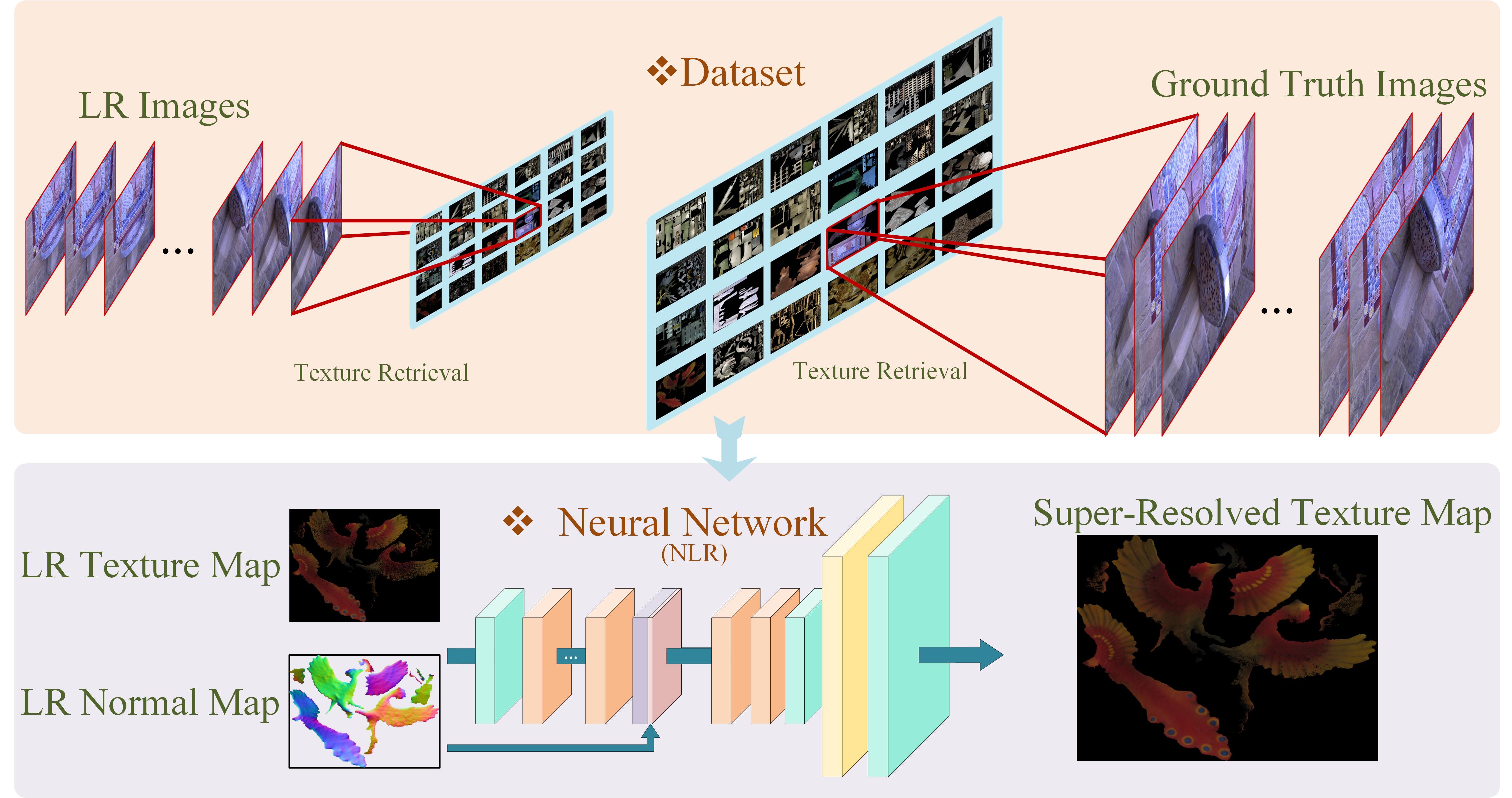

We provided 3DASR, a 3D appearance SR dataset that captures both synthetic and real scenes with a large variety of texture characteristics. The dataset contains ground truth HR texture maps and LR texture maps of scaling factors ×2, ×3, and ×4. The 3D mesh, multi-view images, projection matrices, and normal maps are also provided. We introduced a deep learning-based SR framework in the multi-view setting. We showed that 2D deep learning-based SR techniques can successfully be adapted to the new texture domain by introducing the geometric information via normal maps.
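As a rough illustration of how normal maps can carry geometric information into a 2D SR network, the sketch below stacks a normal map onto an LR texture patch along the channel axis. Channel concatenation is an assumption made for illustration, not necessarily the fusion used in the paper; the function name, patch size, and random data are hypothetical placeholders.

```python
import numpy as np


def stack_texture_and_normals(lr_texture, normal_map):
    """Concatenate an RGB texture patch and a normal map along the channel axis.

    Both inputs are H x W x 3 arrays; the result is H x W x 6.
    NOTE: concatenation is an assumed fusion strategy, used here only as a sketch.
    """
    if lr_texture.shape[:2] != normal_map.shape[:2]:
        raise ValueError("texture and normal map must share height and width")
    return np.concatenate([lr_texture, normal_map], axis=-1)


# Hypothetical 48x48 LR texture patch and matching normal map (random placeholders).
rng = np.random.default_rng(0)
lr_texture = rng.random((48, 48, 3)).astype(np.float32)
normals = rng.standard_normal((48, 48, 3)).astype(np.float32)
# Scale every normal vector to unit length, as is conventional for normal maps.
normals /= np.linalg.norm(normals, axis=-1, keepdims=True)

net_input = stack_texture_and_normals(lr_texture, normals)
print(net_input.shape)  # (48, 48, 6)
```

A network adapted this way would take six input channels instead of three, while the output remains a three-channel HR texture patch.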

We introduce 3DASR, a 3D appearance SR dataset, and a deep learning-based approach to super-resolve the appearance of 3D objects.

- Python 3.6

- PyTorch >= 1.0.0

- numpy

- skimage

- imageio

- matplotlib

- tqdm
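Before running anything, it can help to confirm that the packages listed above are importable. The following is a minimal sketch; the import names (for example `torch` for PyTorch and `skimage` for scikit-image) are the usual ones but are assumptions here, and the script does not check version numbers.

```python
import importlib.util

# Import names assumed for the dependencies listed above.
REQUIRED_MODULES = ["torch", "numpy", "skimage", "imageio", "matplotlib", "tqdm"]


def missing_modules(names):
    """Return the top-level module names that cannot be found in this environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


missing = missing_modules(REQUIRED_MODULES)
if missing:
    print("Missing packages:", ", ".join(missing))
else:
    print("All required packages were found.")
```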

- `git clone https://github.com/ofsoundof/3D_Appearance_SR.git`

- Download the pretrained model and the texture map dataset.

- Put the pretrained model in `./experiment/`.

- `cd ./code/script`, then run `CUDA_VISIBLE_DEVICES=xx python ../main.py --model FINETUNE --submodel NLR --save Test/NLR_first --scale 4 --n_resblocks 32 --n_feats 256 --res_scale 0.1 --pre_train ../../experiment/model/NLR/model_x2_split1.pt --data_train texture --data_test texture --model_one one --subset . --normal_lr lr --input_res lr --chop --reset --save_results --print_model --test_only`

- Use `--ext sep_reset` for the first run that uses a specific split of the two splits from cross-validation.

- Be sure to change the log directory `--dir` and the data directory `--dir_data`.
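The test command above is long, so one option is to assemble it in a small Python launcher. This is only a sketch: the flags and values are copied from the command in this README, while the helper names `build_test_command` and `run`, and the two `/path/to/...` directories, are hypothetical placeholders to be replaced with your own.

```python
import os
import shlex
import subprocess


def build_test_command(pre_train, log_dir, data_dir, first_run=False):
    """Build the argument list for the NLR test run (flags taken from this README)."""
    cmd = [
        "python", "../main.py",
        "--model", "FINETUNE", "--submodel", "NLR",
        "--save", "Test/NLR_first",
        "--scale", "4", "--n_resblocks", "32", "--n_feats", "256",
        "--res_scale", "0.1",
        "--pre_train", pre_train,
        "--data_train", "texture", "--data_test", "texture",
        "--model_one", "one", "--subset", ".",
        "--normal_lr", "lr", "--input_res", "lr",
        "--chop", "--reset", "--save_results", "--print_model", "--test_only",
        "--dir", log_dir, "--dir_data", data_dir,
    ]
    if first_run:
        # Only needed the first time a given cross-validation split is used.
        cmd += ["--ext", "sep_reset"]
    return cmd


def run(cmd, gpu_id, cwd="./code/script"):
    """Run the command from the script directory with one visible GPU."""
    env = dict(os.environ, CUDA_VISIBLE_DEVICES=str(gpu_id))
    return subprocess.run(cmd, cwd=cwd, env=env, check=True)


cmd = build_test_command(
    pre_train="../../experiment/model/NLR/model_x2_split1.pt",
    log_dir="/path/to/logs",      # placeholder: your log directory
    data_dir="/path/to/dataset",  # placeholder: your dataset directory
    first_run=True,
)
# Print the command for inspection; call run(cmd, gpu_id=0) to actually launch it.
print(" ".join(shlex.quote(arg) for arg in cmd))
```

Keeping the arguments in a list avoids shell quoting problems and makes it easy to switch the pretrained model or the directories between runs.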

- Download our pretrained model for 3D appearance SR from Google Drive or BaiduNetDisk (extraction code: nnnm). The pretrained NLR and NHR models from the paper are included.

- Download the pretrained EDSR model from the EDSR project page.

- Put the pretrained model at `./experiment`.

- Download the texture map of the 3D appearance dataset from Google Drive or BaiduNetDisk, extraction code: crnw.

- Please refer to `demo.sh` for the training and testing demo script. In a batch system, you can also use `qsub_NLR.sh`.

- Remember to change the log directory `--dir` and the data directory `--dir_data`. `--dir` is the directory where you can put your log information and the trained model. `--dir_data` is the directory where you put the dataset.

If you find our work useful in your research or publication, please cite our work:

Yawei Li, Vagia Tsiminaki, Radu Timofte, Marc Pollefeys, and Luc van Gool, "3D Appearance Super-Resolution with Deep Learning," in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2019.

@inproceedings{li2019_3dappearance,

title={3D Appearance Super-Resolution with Deep Learning},

author={Li, Yawei and Tsiminaki, Vagia and Timofte, Radu and Pollefeys, Marc and Van Gool, Luc},

booktitle={Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition},

year={2019}

}