This is a quick demo for:

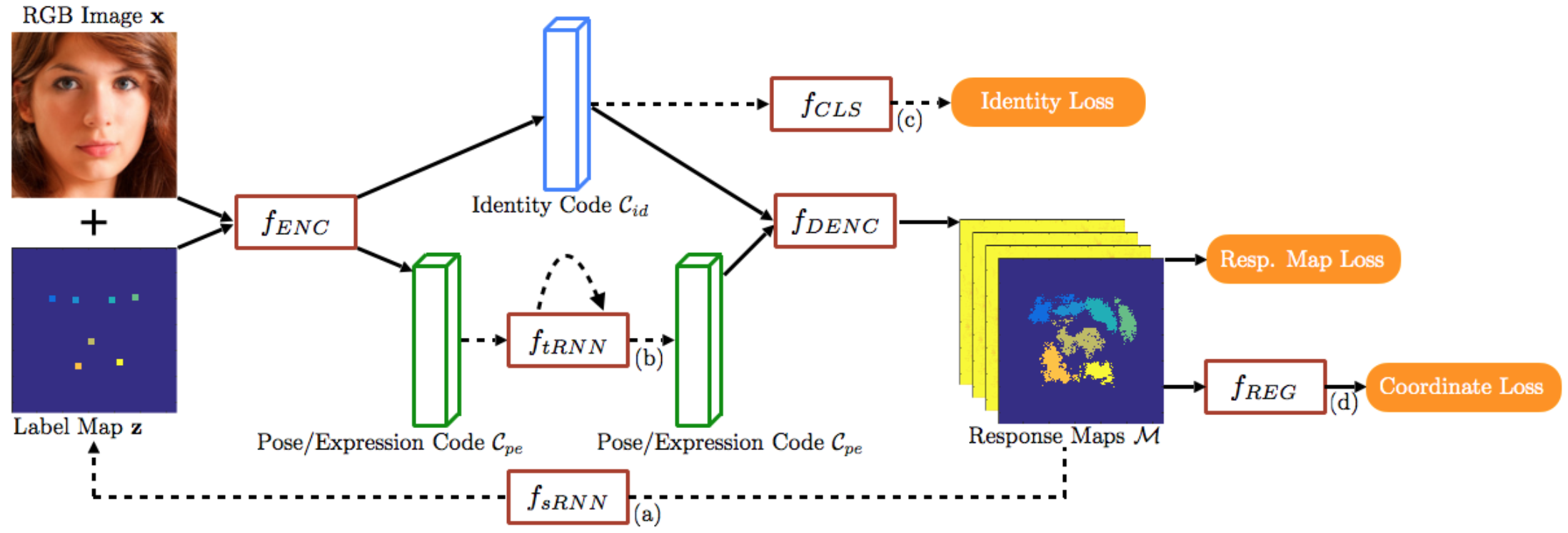

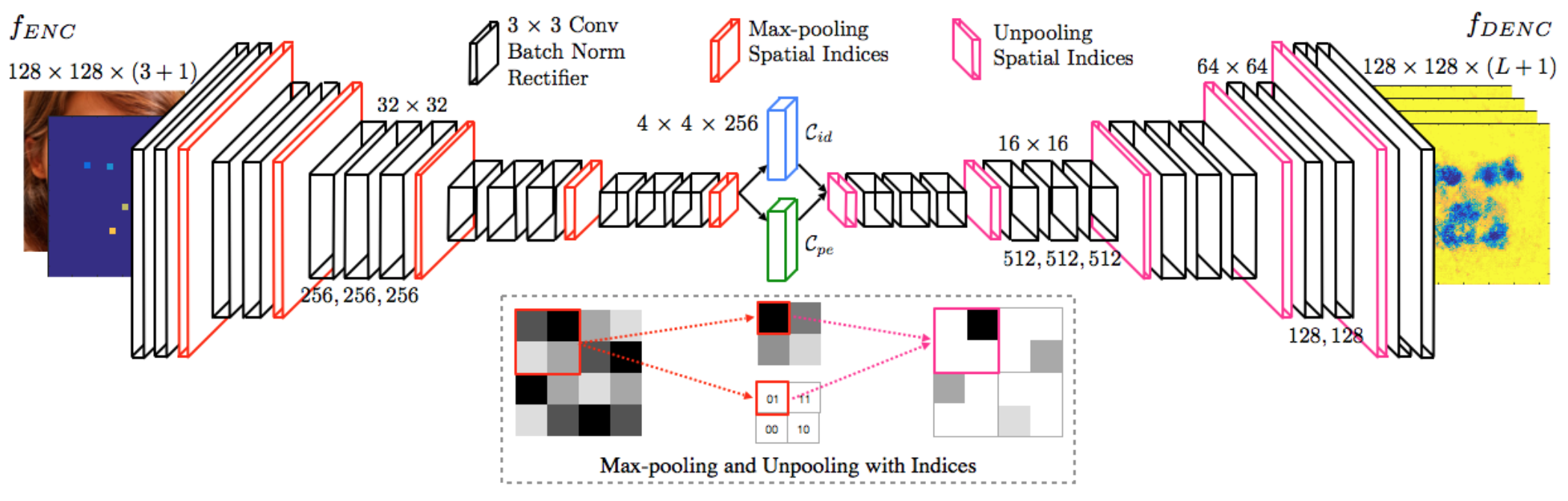

"A Recurrent Encoder-Decoder Network for Sequential Face Alignment"

Xi Peng, Rogerio S. Feris, Xiaoyu Wang, Dimitris N. Metaxas

European Conference on Computer Vision (ECCV), Amsterdam, 2016. (Oral)

To run the video tracking demo:

- Clone/Download the project to `recurrent-face-alignment/`
- Download folders `model/` and `data/` from Google Drive
- Copy folders `model/` and `data/` into `recurrent-face-alignment/`
- Edit `TrackVideoDemo.py` to set (a) `path/to/caffe/python/`, and (b) video names to be tracked
- `python TrackDemo.py`
- Check tracking results in `recurrent-face-alignment/result/`
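Setting the Caffe path in step (a) usually amounts to putting the pycaffe directory on `sys.path` before `import caffe`. The following is a minimal sketch, not the demo script's actual code: the helper `add_caffe_to_path` and the constant `CAFFE_PYTHON_DIR` are hypothetical names, and the path is a placeholder.

```python
import sys


def add_caffe_to_path(caffe_python_dir):
    """Put the pycaffe directory first on sys.path (hypothetical helper)."""
    # Remove an existing entry so repeated calls do not create duplicates.
    if caffe_python_dir in sys.path:
        sys.path.remove(caffe_python_dir)
    sys.path.insert(0, caffe_python_dir)
    return caffe_python_dir


# Placeholder: replace with the python/ folder of your own Caffe build.
CAFFE_PYTHON_DIR = "path/to/caffe/python/"

add_caffe_to_path(CAFFE_PYTHON_DIR)
# `import caffe` would follow here once the path points at a real build.
```

Inserting at position 0 makes this Caffe build take precedence over any other copy that may already be importable.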

To run the image detection demo:

- Edit `DetectImageDemo.py` to set (a) `path/to/caffe/python/`, and (b) image folder to be detected
- `python DetectImageDemo.py`

For research convenience, we split video into frames using ffmpeg.
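Frame splitting of this kind can be sketched as below. The helper names `build_ffmpeg_command` and `split_video`, the output pattern `%06d.jpg`, and the example paths are assumptions for illustration; the frame naming that the demo scripts expect is not specified here, so adjust the pattern to match the provided `data/` folder.

```python
import os
import subprocess


def build_ffmpeg_command(video_path, frame_dir, pattern="%06d.jpg"):
    """Return the ffmpeg argument list that writes one image per frame."""
    return ["ffmpeg", "-i", video_path, os.path.join(frame_dir, pattern)]


def split_video(video_path, frame_dir):
    """Create frame_dir if needed and run ffmpeg (must be on PATH)."""
    if not os.path.isdir(frame_dir):
        os.makedirs(frame_dir)
    subprocess.check_call(build_ffmpeg_command(video_path, frame_dir))


# Only build and show the command here; call split_video() to actually run it.
cmd = build_ffmpeg_command("video.mp4", "frames")
print(" ".join(cmd))
```

Keeping the command construction separate from the call to `subprocess` makes it easy to inspect the exact ffmpeg invocation before processing a whole set of videos.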

The tracker needs the bounding box (bbox) of the face in the first frame for initialization.

The detector needs `img_bbox.txt`, in which each line has 5 tokens: `path/to/image left top right bottom`.

`[left top right bottom]` is the bbox of the detected face.
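A small reader for this file format might look like the sketch below. The function name `read_img_bbox` is hypothetical, the sample entry is made up, and parsing coordinates as floats is an assumption, since the README does not say whether they are integers. Because tokens are split on whitespace, image paths containing spaces are not handled.

```python
import os
import tempfile


def read_img_bbox(list_path):
    """Parse lines of 'path/to/image left top right bottom'."""
    entries = []
    with open(list_path) as f:
        for line_no, line in enumerate(f, 1):
            tokens = line.split()
            if not tokens:
                continue  # skip blank lines
            if len(tokens) != 5:
                raise ValueError(
                    "line %d: expected 5 tokens, got %d" % (line_no, len(tokens)))
            image_path = tokens[0]
            left, top, right, bottom = [float(t) for t in tokens[1:]]
            entries.append((image_path, (left, top, right, bottom)))
    return entries


# Round-trip demo with a made-up entry written to a temporary file.
tmp_dir = tempfile.mkdtemp()
list_path = os.path.join(tmp_dir, "img_bbox.txt")
with open(list_path, "w") as f:
    f.write("path/to/image.jpg 10 20 110 140\n")

entries = read_img_bbox(list_path)
print(entries)
```

Validating the token count up front gives a clear error for malformed lines before any image is loaded.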

Caffe: any version that supports the batch normalization layer (such as SegNet). We will release our distributed Caffe version soon.

Python 2.7.

@InProceedings{PengECCV16,

author = "Peng, Xi and Feris, Rogerio S. and Wang, Xiaoyu and Metaxas, Dimitris N.",

title = "A Recurrent Encoder-Decoder Network for Sequential Face Alignment",

booktitle = "European Conference on Computer Vision (ECCV)",

year = "2016",

pages="38--56"}