Owen Jow, owen@eng.ucsd.edu

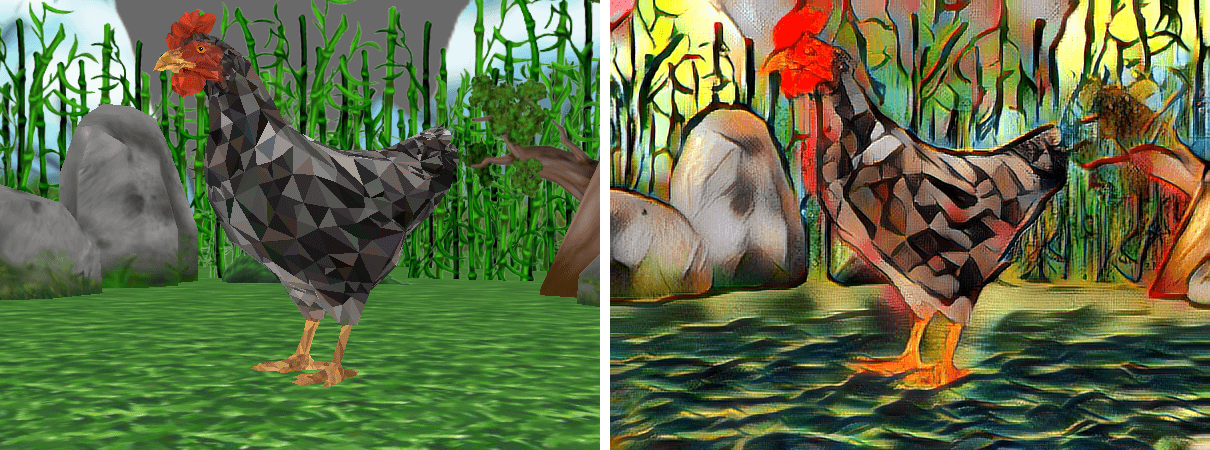

In this program, users can interactively walk around a stylized 3D scene. The idea is that some parts of the scene are intentionally stylized to be "more beautiful" than others, and a user can, if they choose, search for this beauty. To this end, I place a target object somewhere in the scene. The closer the camera is to the object, and the more directly it faces the object, the more "beautiful" the view becomes. In keeping with a theme from my previous projects, I make the target object a chicken because chickens are beautiful.
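As a rough illustration of how camera proximity and orientation could be turned into a single "beauty" weight, here is a minimal sketch. The function name `beauty_weight`, the `max_dist` cutoff, the linear falloff, and the yaw convention are all assumptions made for the example; the project's own formula may differ.

```python
import math

def beauty_weight(cam_pos, cam_yaw_deg, target_pos, max_dist=50.0):
    """Return a weight in [0, 1]; 1 means close to and facing the target.

    Hypothetical helper. Positions are (x, y) ground-plane coordinates.
    Assumed convention: yaw 0 looks down +y, positive yaw turns toward -x.
    """
    dx = target_pos[0] - cam_pos[0]
    dy = target_pos[1] - cam_pos[1]
    dist = math.hypot(dx, dy)

    # Proximity term: 1 at the target, falling linearly to 0 at max_dist.
    proximity = max(0.0, 1.0 - dist / max_dist)
    if dist == 0.0:
        return proximity  # direction to the target is undefined here

    # Alignment term: cosine between the view direction and the direction
    # to the target, remapped from [-1, 1] to [0, 1].
    yaw = math.radians(cam_yaw_deg)
    fx, fy = -math.sin(yaw), math.cos(yaw)
    cos_angle = (fx * dx + fy * dy) / dist
    alignment = min(1.0, max(0.0, 0.5 * (1.0 + cos_angle)))

    return proximity * alignment
```

Multiplying the two terms means the view is only "most beautiful" when the camera is both near the target and looking at it; a weighted sum would be a reasonable alternative.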

I use Panda3D for initial rendering, and pytorch-AdaIN for arbitrary style transfer. pytorch-AdaIN is an implementation of Arbitrary Style Transfer in Real-time with Adaptive Instance Normalization, which encodes style and content images using VGG, transfers statistics from style to content in feature space using adaptive instance normalization (AdaIN), and finally decodes the AdaIN feature output back to image space. Importantly, it allows for real-time stylization with multiple arbitrary style targets. In accordance with section 7.3 of the paper, I interpolate between AdaIN features corresponding to two different styles before decoding each frame. In my eyes, one of these styles always has a greater aesthetic value than the other. When the camera is closer to the chicken, the weight for the "more beautiful" of the styles is higher.
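The AdaIN step and the two-style interpolation can be sketched in a few lines. This is a NumPy illustration rather than the PyTorch code the project uses, and the random arrays stand in for VGG features; the names `adain` and `interpolate_styles` are hypothetical. The decoder that maps the blended features back to an image is omitted.

```python
import numpy as np

def adain(content_feat, style_feat, eps=1e-5):
    """Give content features the per-channel mean/std of the style features.

    Both inputs have shape (C, H, W); spatial sizes may differ.
    """
    c_mean = content_feat.mean(axis=(1, 2), keepdims=True)
    c_std = np.sqrt(content_feat.var(axis=(1, 2), keepdims=True) + eps)
    s_mean = style_feat.mean(axis=(1, 2), keepdims=True)
    s_std = np.sqrt(style_feat.var(axis=(1, 2), keepdims=True) + eps)
    # Normalize the content statistics away, then apply the style statistics.
    return s_std * (content_feat - c_mean) / c_std + s_mean

def interpolate_styles(content_feat, style_feat_a, style_feat_b, weight_a):
    """Blend the AdaIN outputs for two styles; weight_a is in [0, 1]."""
    target_a = adain(content_feat, style_feat_a)
    target_b = adain(content_feat, style_feat_b)
    return weight_a * target_a + (1.0 - weight_a) * target_b

# Stand-in features (assumed shapes, not real VGG activations).
rng = np.random.default_rng(0)
content = rng.normal(size=(8, 16, 16))
style_beautiful = rng.normal(loc=2.0, scale=3.0, size=(8, 12, 12))
style_plain = rng.normal(loc=-1.0, scale=0.5, size=(8, 12, 12))

# A weight near 1 (camera close to the chicken) favors the first style.
blended = interpolate_styles(content, style_beautiful, style_plain, weight_a=0.75)
```

Because the blend happens in feature space, a single decoder pass per frame is enough regardless of how the two styles are weighted.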

I intend for this project to characterize the relative and subjective nature of beauty. If I deliberately make some things less beautiful, other things might appear more beautiful by comparison. Furthermore, when I say that some stylized views are more beautiful than others, that is only my own preference; others may find other parts of the scene more or less beautiful and that's okay too. Finally, the project premise and stylization algorithm prompt the following question about beauty: how much of it is about style, and how much of it is about content?

| More "Beautiful" | Less "Beautiful" |
|---|---|
| Vibrant colors | Monochromatism |
| Artwork | Official documents |
| Oceans | Deserts |
| Chickens | People |
| Sunsets | Cimmerian gloom |
| Cathedrals | Asylums |
| Composition | Few frequencies |
| Warm lighting | Fluorescent lighting |

- Add music as well. Associate some relevant music with each stylization extreme (e.g. "most beautiful" aligns with beautiful-sounding music), and use MusicVAE to interpolate between the sounds as the user walks through the scene (MIDI is fine, synthesize using something simple). The main problem is doing all of this in real-time.

- Interactive drum-esque music with stylization. Use WebGL/Babylon alongside a phone and WebSockets to play music with motion and determine stylization. Could also control stylization with phone orientation in this project. As the phone aligns more and more with a certain direction, the image becomes more beautiful (or less chaotic). Symbolizes the chaos that results from loss of control.

- Temporal consistency. Have the network take multiple images. Following this paper, compute the motion field and disocclusion mask between each pair of consecutive frames, warp each stylized t-1 frame into the t frame, and enforce an MSE loss between the warped stylized t-1 frames and the stylized t frames in unmasked regions during training.
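The temporal consistency idea can be sketched as a masked MSE between the warped previous stylized frame and the current one. This is a NumPy illustration under stated assumptions: the flow is a backward flow (from frame t to frame t-1), sampling is nearest-neighbor for brevity, and the names `warp_backward` and `temporal_loss` are hypothetical. A training version would use a differentiable (e.g. bilinear) warp in PyTorch, and the flow and mask would come from the method in the referenced paper.

```python
import numpy as np

def warp_backward(prev_frame, flow):
    """Warp prev_frame (H, W, C) into the current frame.

    flow[y, x] = (dx, dy) points from pixel (x, y) in frame t to its
    source location in frame t-1. Nearest-neighbor sampling (assumption).
    """
    h, w = prev_frame.shape[:2]
    ys, xs = np.mgrid[0:h, 0:w]
    src_x = np.clip(np.rint(xs + flow[..., 0]).astype(int), 0, w - 1)
    src_y = np.clip(np.rint(ys + flow[..., 1]).astype(int), 0, h - 1)
    return prev_frame[src_y, src_x]

def temporal_loss(stylized_prev, stylized_cur, flow, valid_mask):
    """MSE between the warped t-1 frame and the t frame.

    valid_mask (H, W) is 1 where the pixel is visible in both frames
    and 0 in disoccluded regions, which are excluded from the loss.
    """
    warped = warp_backward(stylized_prev, flow)
    sq_diff = (warped - stylized_cur) ** 2
    mask = valid_mask[..., None].astype(sq_diff.dtype)
    num_terms = mask.sum() * sq_diff.shape[-1]
    return float((sq_diff * mask).sum() / max(num_terms, 1.0))
```

Excluding disoccluded pixels matters because they have no counterpart in the previous frame, so penalizing them would push the network toward smearing.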

- There are links to pre-trained arbitrary stylization models in the "Download models" section of pytorch-AdaIN. By default, `renderloop.py` assumes that these models are stored in the `pytorch-AdaIN/models` folder. If you save them elsewhere, specify their paths using the `--vgg_ckpt_path` and `--decoder_ckpt_path` command-line options.

- `renderloop.py`: The main file. Launches the rendering loop.
- `image_styler.py`: Arbitrary stylization class, adapted from the `pytorch-AdaIN` evaluation example.

Download `chicken_01.obj` and `chicken_01.tga` from TurboSquid (link) and place them in the `scene/` folder. Download the pre-trained models according to the Model section. Install requirements. Buy a GPU. Now you are ready to run:

python3 renderloop.py

- You can change the camera position with the arrow keys.

- You can change the camera yaw with the A and S keys.

- You can quit the program using Q or ESC.

- You can save a snapshot by hitting the spacebar.

The following video (click the image) is a usage demonstration. I describe the project in the accompanying voiceover.

With stylization, I get 11 FPS in regular mode (600x450) and 61 FPS in tiny mode (200x150).

- This project requires PyTorch, Panda3D, `lycon`, and `pynput`. It has been tested locally with Python 3.6 and Ubuntu 18.04. Ideally you will also have a GPU, because the app might not run in real-time otherwise.

- If you only have a CPU, you can try running the program with the `--tiny` flag (uses a smaller resolution).

- If you're running this on macOS, you should invoke `python3 renderloop.py` with `sudo`. Otherwise keyboard monitoring won't work due to security restrictions (see here).

- In-browser scene stylization using the Magenta.js port of this repo. Too slow for real-time, though.

- Other scenes, with random chicken locations. I was able to load the other scenes (this one, for example), but decided it wasn't worth the trouble to map out valid object locations.

- Downsizing, then stylizing, then upsizing. Faster, but with an unacceptable quality drop.

- Papers

- Repositories

- Other

- Panda3D Manual

- NumPy arrays from Panda3D textures - gist by Alex Lee

- The chicken model was made by SHULDYAKOV (link).