tensorflow-rl-pong

Code from the Google I/O 2018 session "Tensorflow and deep reinforcement learning, without a PhD". Other samples from the "Tensorflow without a PhD" series will soon be migrated to this repository.

A crash course in six episodes for software developers who want to learn machine learning, with examples, theoretical concepts, and engineering tips, tricks and best practices to build and train the neural networks that solve your problems.

The basics of building neural networks for software engineers. Neural weights and biases, activation functions, supervised learning and gradient descent. Tips and best practices for efficient training: learning rate decay, dropout regularisation and the intricacies of overfitting. Dense and convolutional neural networks. This session starts with low-level Tensorflow and also has a sample of high-level Tensorflow code using layers and Datasets. Code sample: MNIST handwritten digit recognition with 99% accuracy. Duration: 55 min

What batch normalisation is, how to use it appropriately and how to tell whether it is working. Code sample: MNIST handwritten digit recognition with 99.5% accuracy. Duration: 25 min

RNN basics: the RNN cell as a state machine, training and unrolling (backpropagation through time). More complex RNN cells: LSTM and GRU cells. Application to language modeling and generation. Tensorflow APIs for RNNs. Code sample: RNN-generated Shakespeare play. Duration: 55 min

|

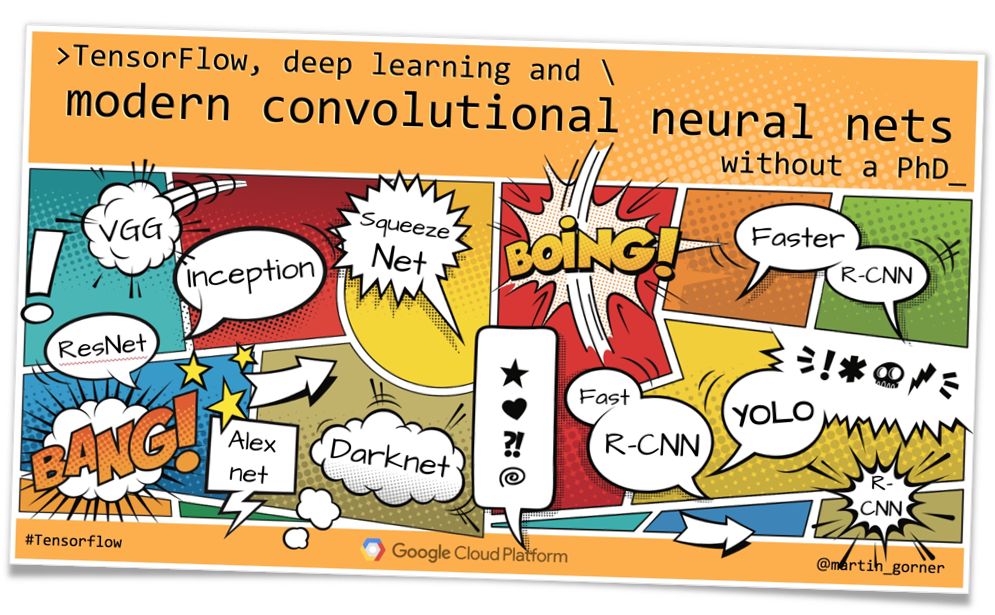

Convolutional neural network architectures for image processing. Convnet basics, convolution filters and how to stack them.

Learnings from the Inception model: modules with parallel convolutions, 1x1 convolutions. A simple modern convnet architecture: Squeezenet.

Convenets for detection: the YOLO (You Look Only Once) architecture. Full-scale model training and serving with Tensorflow's Estimator API on Google Cloud ML Engine.

Application: airplane detection in aerial imagery. Duration: 55 min |

Advanced RNN architectures for natural language processing. Word embeddings, text classification, bidirectional models, sequence-to-sequence models for translation. Attention mechanisms. This session also explores Tensorflow's seq2seq API. Applications: toxic comment detection and language translation. Co-author: Nithum Thain. Duration: 55 min

A neural network trained to play the game of Pong from just the pixels of the game. Uses reinforcement learning and policy gradients. The approach can be generalized to other problems involving a non-differentiable step that cannot be trained using traditional supervised learning techniques. A practical application: neural architecture search (neural networks designing neural networks). Co-author: Yu-Han Liu. Duration: 40 min
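To give a feel for the policy gradient idea used in the Pong session, here is a minimal sketch in plain Python. It is not the code from this repository: the function names (`discount_rewards`, `normalize`, `policy_gradient_loss`), the default `gamma=0.99`, and the choice to reset the running sum whenever a point is scored are all assumptions made for illustration. It shows how per-step rewards can be turned into discounted returns and then used to weight the log-probability of the actions that were actually sampled.

```python
import math


def discount_rewards(rewards, gamma=0.99):
    """Propagate each reward backwards in time with discount factor gamma.

    Assumption: a nonzero reward marks the end of a point (as in Pong),
    so the running sum is reset there and points do not leak into each other.
    """
    discounted = [0.0] * len(rewards)
    running = 0.0
    for t in reversed(range(len(rewards))):
        if rewards[t] != 0:
            running = 0.0  # a point was scored: start a fresh sum
        running = running * gamma + rewards[t]
        discounted[t] = running
    return discounted


def normalize(values):
    """Shift to zero mean and scale to unit variance (helps stabilise training)."""
    mean = sum(values) / len(values)
    std = (sum((v - mean) ** 2 for v in values) / len(values)) ** 0.5
    if std == 0:
        return [0.0 for _ in values]
    return [(v - mean) / std for v in values]


def policy_gradient_loss(action_probs, actions, advantages):
    """Mean of -advantage * log(probability of the sampled action).

    Minimising this makes actions followed by high returns more likely
    and actions followed by low returns less likely.
    """
    total = 0.0
    for probs, action, advantage in zip(action_probs, actions, advantages):
        total += -advantage * math.log(probs[action])
    return total / len(actions)


# Usage: three frames, the point is won on the last one.
returns = discount_rewards([0, 0, 1], gamma=0.5)  # [0.25, 0.5, 1.0]
```

In a real training loop the action probabilities would come from the neural network and the loss would be minimised with gradient descent; the sampling of an action is the non-differentiable step that this weighting works around.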

*Disclaimer: This is not an official Google product but sample code provided for an educational purpose*