- In this project, I built a simple ETL pipeline that extracts data from two CSV files, transforms it into the fact and dimension tables of a data warehouse, and loads the result into the data warehouse.

- Since my main objective is to learn and explore new technologies, I implemented the ETL pipeline and data warehouse using different methods.

- Using Python, Pandas, and PostgreSQL to create the ETL pipeline and data warehouse

- Using AWS services (S3, Glue, Athena, Redshift) to create the ETL pipeline and data warehouse
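The extract, transform, and load stages described above can be sketched in a few lines. This is a minimal illustration, not the notebook's implementation: it swaps in the standard-library `csv` and `sqlite3` modules for Pandas and PostgreSQL so that it runs without any setup, and the column names (`product_id`, `product_name`, `price_usd`) and sample rows are assumptions made up for the example.

```python
import csv
import io
import sqlite3

# Hypothetical sample standing in for product_info.csv; the real columns may differ.
SAMPLE_CSV = """product_id,product_name,price_usd
P1,Hydrating Cleanser,24.00
P2,Vitamin C Serum,
P3,Night Cream,58.50
"""


def extract(csv_text):
    """Extract: read CSV text into a list of dicts, one per row."""
    return list(csv.DictReader(io.StringIO(csv_text)))


def transform(rows):
    """Transform: drop rows with a missing price and cast the price to float."""
    cleaned = []
    for row in rows:
        if not row["price_usd"]:
            continue  # skip incomplete rows
        cleaned.append(
            (row["product_id"], row["product_name"].strip(), float(row["price_usd"]))
        )
    return cleaned


def load(conn, rows):
    """Load: create the target table if needed and insert the cleaned rows."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS products ("
        "product_id TEXT PRIMARY KEY, product_name TEXT, price_usd REAL)"
    )
    conn.executemany("INSERT INTO products VALUES (?, ?, ?)", rows)
    conn.commit()


# In-memory SQLite database used here as a stand-in for PostgreSQL.
conn = sqlite3.connect(":memory:")
load(conn, transform(extract(SAMPLE_CSV)))
loaded = conn.execute(
    "SELECT product_id, price_usd FROM products ORDER BY product_id"
).fetchall()
print(loaded)
```

Keeping the three stages as separate functions means the same `transform` step could, in principle, be reused when the source or the target changes.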

- To create a complete ETL pipeline and data warehouse

Sephora Products and Skincare Reviews

I have chosen the following two files from this dataset for my project:

- product_info.csv => Contains descriptions of individual products

- product_reviews.csv => Contains product reviews by different customers

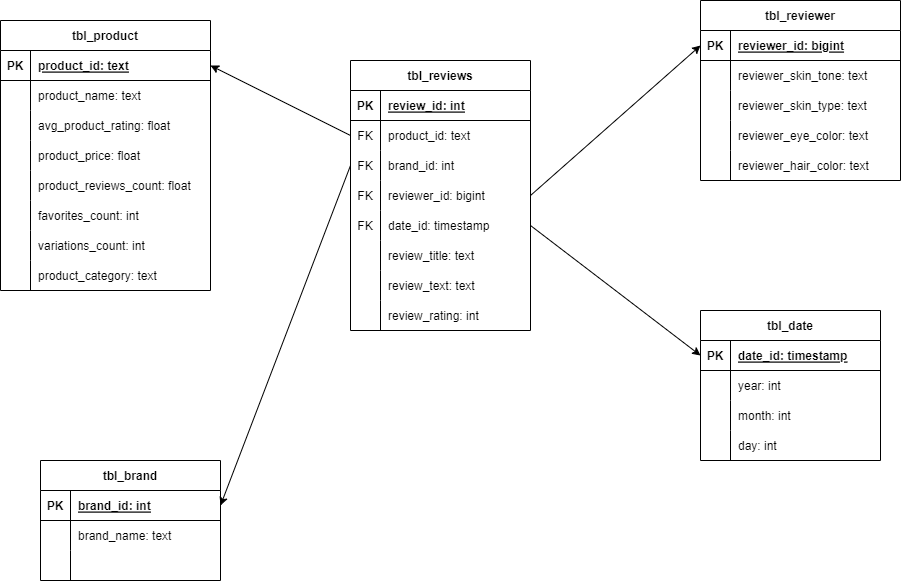

I implement the data warehouse as a star schema with one fact table and four dimension tables, as shown in the data model diagram.
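To make the star schema idea concrete, here is a small sketch of one fact table pointing at one dimension table through a surrogate key. The table and column names (`dim_product`, `fact_review`, `rating`) and the sample rows are hypothetical and are not taken from the project's data model; only one of the four dimensions is shown, and SQLite again stands in for the real warehouse.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")
conn.executescript("""
CREATE TABLE dim_product (
    product_key  INTEGER PRIMARY KEY,  -- surrogate key generated by the warehouse
    product_id   TEXT UNIQUE,          -- natural key from the source file
    product_name TEXT
);
CREATE TABLE fact_review (
    review_key  INTEGER PRIMARY KEY,
    product_key INTEGER NOT NULL REFERENCES dim_product(product_key),
    rating      INTEGER
);
""")

# Hypothetical denormalized review rows: (product_id, product_name, rating).
reviews = [
    ("P1", "Hydrating Cleanser", 5),
    ("P1", "Hydrating Cleanser", 3),
    ("P3", "Night Cream", 4),
]

for product_id, product_name, rating in reviews:
    # Insert the product into the dimension only once (UNIQUE on product_id).
    conn.execute(
        "INSERT OR IGNORE INTO dim_product (product_id, product_name) VALUES (?, ?)",
        (product_id, product_name),
    )
    # Look up the surrogate key and store it in the fact row.
    (product_key,) = conn.execute(
        "SELECT product_key FROM dim_product WHERE product_id = ?", (product_id,)
    ).fetchone()
    conn.execute(
        "INSERT INTO fact_review (product_key, rating) VALUES (?, ?)",
        (product_key, rating),
    )

# A typical star-schema query: aggregate the fact table, label it via the dimension.
avg_ratings = conn.execute("""
    SELECT d.product_name, AVG(f.rating)
    FROM fact_review AS f
    JOIN dim_product AS d ON d.product_key = f.product_key
    GROUP BY d.product_name
    ORDER BY d.product_name
""").fetchall()
print(avg_ratings)
```

The fact table stores only keys and measures, while descriptive attributes live once in the dimension, which is what keeps analytical queries down to simple joins.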

- etl_pipeline_data_warehouse_python_postgres.ipynb contains the implementation of the ETL pipeline and data warehouse using Python and PostgreSQL

- etl_pipeline_data_warehouse_aws.ipynb contains the implementation of the ETL pipeline and data warehouse using AWS services

- datasets contains all dataset files

- diagrams contains various diagrams including outputs.

- ETL pipeline and data warehouse using Python and PostgreSQL - DONE

- ETL pipeline and data warehouse using AWS services - DONE