[ISBI 2024] Leveraging AI Predicted and Expert Revised Annotations in Interactive Segmentation: Continual Tuning or Full Training?


Tiezheng Zhang<sup>1</sup>, Xiaoxi Chen<sup>2</sup>, Chongyu Qu<sup>1</sup>, Alan L. Yuille<sup>1</sup>, and Zongwei Zhou<sup>1,*</sup>

<sup>1</sup> Johns Hopkins University

<sup>2</sup> Shanghai Jiao Tong University

ISBI 2024

paper | code | poster (coming soon)

RSNA 2023 (Oral Presentation)

paper | code | slides

```bash
git clone https://github.com/ollie-ztz/Continue_Tuning
```

See installation instructions to create an environment and obtain requirements.

We offer pre-trained checkpoints of Swin UNETR and U-Net. The models were trained on a combination of 14 publicly available CT datasets consisting of 3,410 CT scans (see details in CLIP-Driven Universal Model).

Download the trained models and save them into ./pretrained_checkpoints/.

| Architecture | Param | Download |
|---|---|---|
| U-Net | 19.08M | link |
| Swin UNETR | 62.19M | link |

Our method can be applied to publicly available datasets (e.g., BTCV) or to your private datasets. For the public datasets, please refer to Datasets. Currently, only data in NIfTI format (.nii.gz) is supported. Using the BTCV dataset as a reference, organize your datasets following the structure outlined below. Create your own dataset list and store it in /dataset/dataset_list. The revised data should be arranged in the same format.

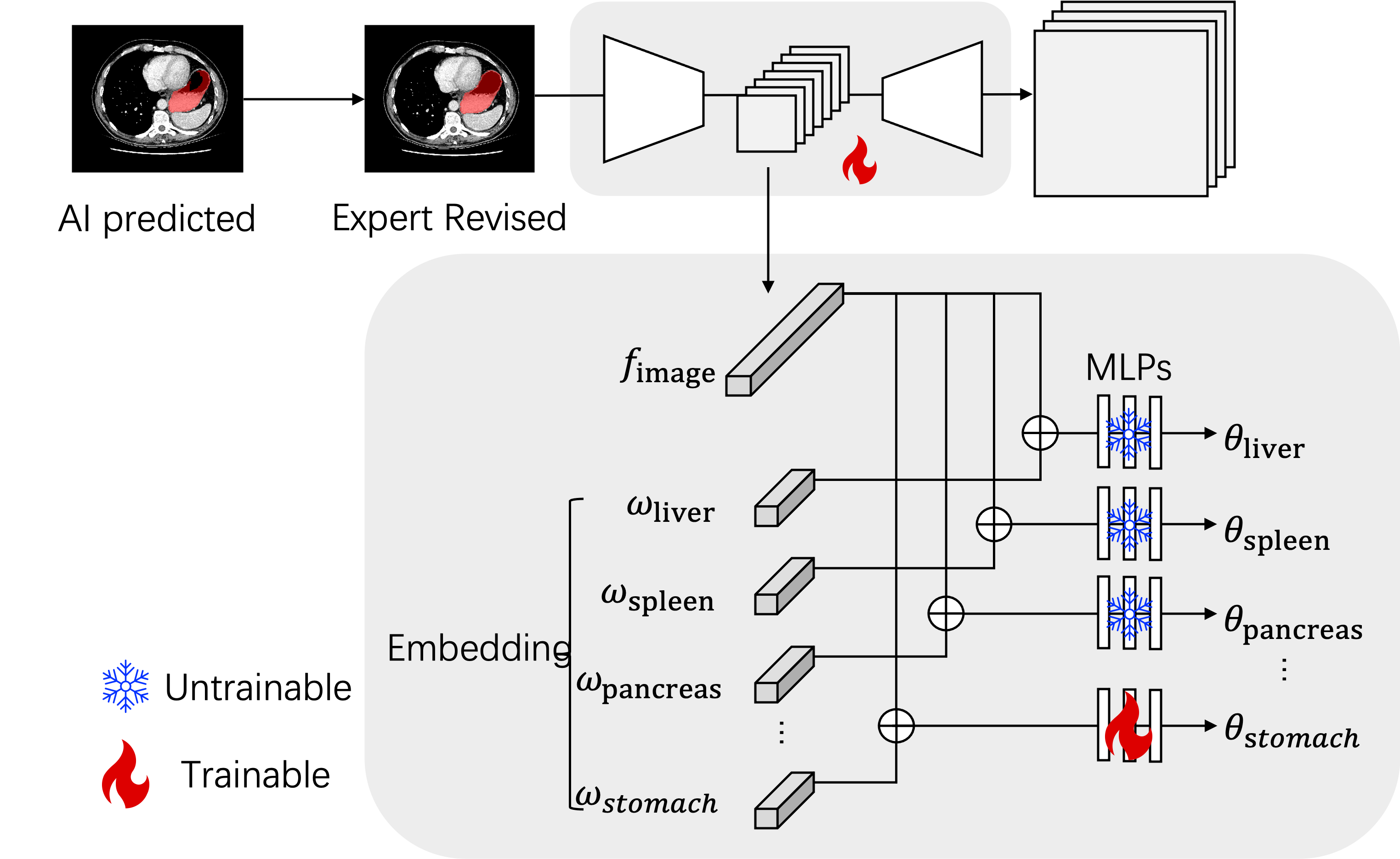
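If you have many cases, writing the dataset list by hand is error-prone. The following is a minimal sketch, not part of the repository, that assumes the BTCV-style layout used in this README (`img/imgXXXX.nii.gz` paired with `label/labelXXXX.nii.gz`) and one space-separated image/label pair per line with paths relative to DATA_PATH. The helper name `build_dataset_list` is hypothetical.

```python
from pathlib import Path


def build_dataset_list(data_root, dataset_dir, out_file):
    """Hypothetical helper: write one 'image_path label_path' line per case.

    Assumes images are <dataset_dir>/img/imgXXXX.nii.gz and labels are
    <dataset_dir>/label/labelXXXX.nii.gz, mirroring the BTCV example.
    Written paths are relative to data_root (i.e., DATA_PATH).
    """
    root = Path(data_root)
    img_dir = root / dataset_dir / "img"
    lines = []
    for img_path in sorted(img_dir.glob("img*.nii.gz")):
        # img0001.nii.gz -> label0001.nii.gz
        label_name = "label" + img_path.name[len("img"):]
        label_path = root / dataset_dir / "label" / label_name
        if not label_path.exists():
            # Skip unlabeled cases instead of writing a broken entry.
            continue
        lines.append(
            f"{img_path.relative_to(root).as_posix()} "
            f"{label_path.relative_to(root).as_posix()}"
        )
    out = Path(out_file)
    out.parent.mkdir(parents=True, exist_ok=True)
    out.write_text("\n".join(lines) + "\n")
    return lines
```

For example, `build_dataset_list("DATA_PATH", "01_Multi-Atlas_Labeling", out_file)` with `out_file` pointing into your dataset list directory. Skipping unlabeled cases, rather than failing, is a design choice of this sketch.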

An example dataset list entry (image path, then label path):

```
01_Multi-Atlas_Labeling/img/img0001.nii.gz 01_Multi-Atlas_Labeling/label/label0001.nii.gz
```

```bash
# Round 1, U-Net backbone
CUDA_VISIBLE_DEVICES=0,1,2,3,4,5,6,7 python -W ignore -m torch.distributed.launch --nproc_per_node=8 --master_port=$RANDOM train.py --dist True --backbone unet --data_root_path DATA_PATH --dataset_list DATA_LIST --batch_size 1 >>logs/DATASET.txt
# Round 1, Swin UNETR backbone
CUDA_VISIBLE_DEVICES=0,1,2,3,4,5,6,7 python -W ignore -m torch.distributed.launch --nproc_per_node=8 --master_port=$RANDOM train.py --dist True --backbone swinunetr --data_root_path DATA_PATH --dataset_list DATA_LIST --batch_size 1 >>logs/DATASET.txt
```

In our experiments, we select the data to be revised based on the attention map. In your own setting, you can ask your annotators to revise all the data predicted by your Round 1 models.
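How the attention map is turned into a selection is not spelled out here. Purely as an illustration, assuming each case has already been reduced to a single scalar score where higher means "more in need of revision", the cases to send to annotators could be picked as below. The function `select_for_revision`, the score dictionary, and the `fraction` parameter are hypothetical and are not the authors' criterion.

```python
import math


def select_for_revision(case_scores, fraction=0.1):
    """Return the IDs of the highest-scoring cases (hypothetical criterion).

    case_scores: dict mapping case ID -> scalar score (higher = revise first).
    fraction: share of cases to send for expert revision; at least one case
    is selected whenever any cases are given.
    """
    if not case_scores:
        return []
    k = max(1, math.ceil(len(case_scores) * fraction))
    # Sort by descending score, breaking ties by case ID for determinism.
    ranked = sorted(case_scores.items(), key=lambda kv: (-kv[1], kv[0]))
    return [case_id for case_id, _ in ranked[:k]]
```

Deterministic tie-breaking keeps the selected subset reproducible across runs, which matters when the same list is later used to assemble the Round 2 data.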

We suggest using our proposed Continual Tuning method to perform Round 2. In that case, your dataset_list only needs to contain the revised data. If you instead want to train from scratch, use the same amount of data as in the previous step.
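The two Round 2 options differ only in which entries go into the dataset list. A minimal sketch, assuming the space-separated list format shown in this README and a hypothetical mapping from each revised image path to its expert-revised label path. For full training it also assumes revised labels replace the Round 1 labels while the number of cases stays the same; that substitution is an assumption, not something stated here. `build_round2_list` is a hypothetical name.

```python
def build_round2_list(round1_lines, revised, continual_tuning=True):
    """Hypothetical helper for assembling the Round 2 dataset list.

    round1_lines: Round 1 entries, each 'image_path label_path'.
    revised: dict mapping image_path -> expert-revised label path.
    continual_tuning=True keeps only the revised cases; False keeps every
    Round 1 case (same amount of data) and swaps in the revised labels.
    """
    out = []
    for line in round1_lines:
        if not line.strip():
            continue  # ignore blank lines
        image_path, label_path = line.split()
        if image_path in revised:
            out.append(f"{image_path} {revised[image_path]}")
        elif not continual_tuning:
            out.append(f"{image_path} {label_path}")
    return out
```

With `continual_tuning=True` the resulting list is typically much smaller than the Round 1 list, which is what makes this option cheaper than training from scratch.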

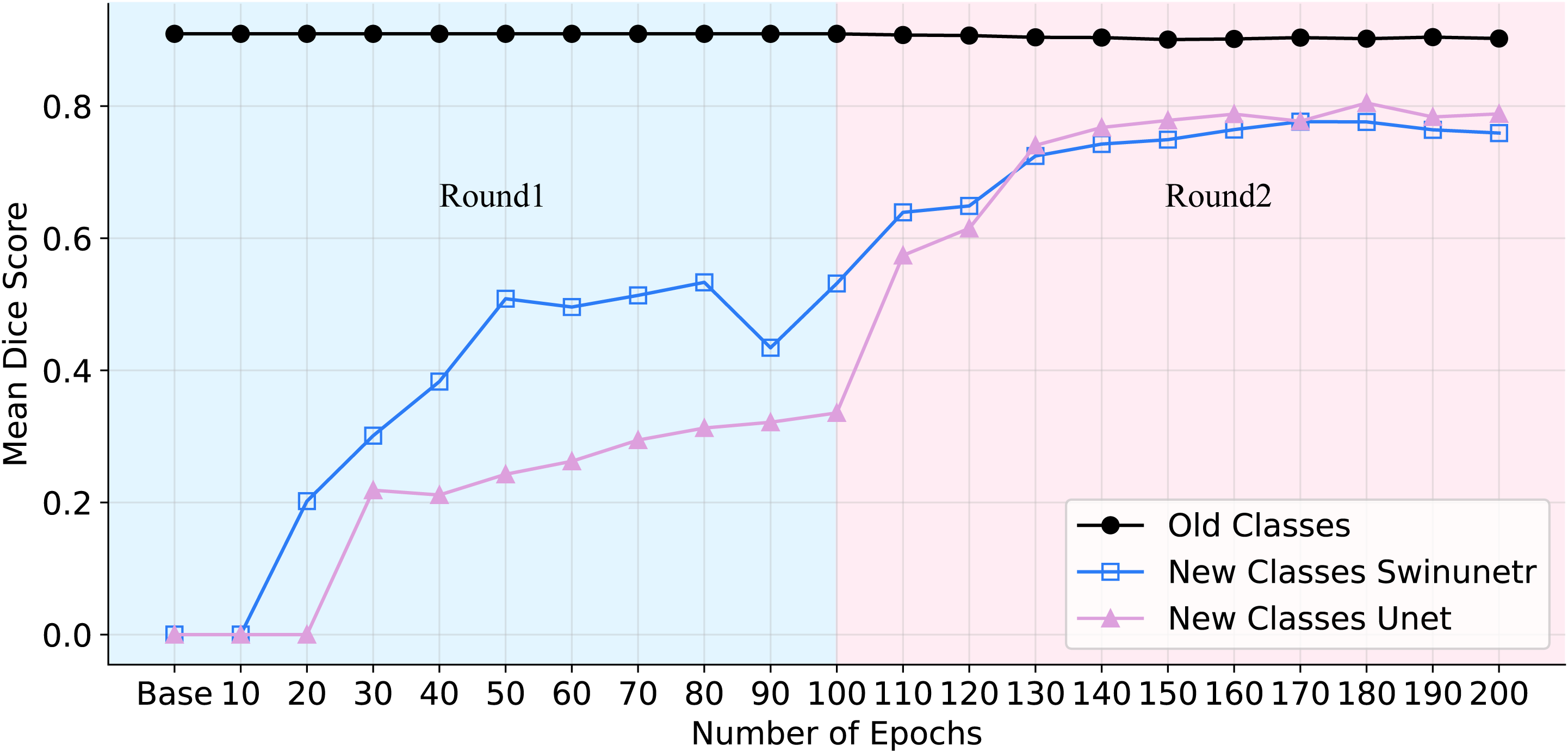

```bash
# Round 2, Continual Tuning, U-Net backbone
CUDA_VISIBLE_DEVICES=0,1,2,3,4,5,6,7 python -W ignore -m torch.distributed.launch --nproc_per_node=8 --master_port=$RANDOM train.py --dist True --backbone unet --data_root_path DATA_PATH --dataset_list DATA_LIST --continual_tuning >>logs/DATASET.txt
# Round 2, Continual Tuning, Swin UNETR backbone
CUDA_VISIBLE_DEVICES=0,1,2,3,4,5,6,7 python -W ignore -m torch.distributed.launch --nproc_per_node=8 --master_port=$RANDOM train.py --dist True --backbone swinunetr --data_root_path DATA_PATH --dataset_list DATA_LIST --continual_tuning >>logs/DATASET.txt
```

The expected results after two rounds are shown below.

To train from scratch (Full Training) instead, run the Round 1 commands with a dataset list containing the same amount of data as in the previous step:

```bash
# Round 2, Full Training, U-Net backbone
CUDA_VISIBLE_DEVICES=0,1,2,3,4,5,6,7 python -W ignore -m torch.distributed.launch --nproc_per_node=8 --master_port=$RANDOM train.py --dist True --backbone unet --data_root_path DATA_PATH --dataset_list DATA_LIST --batch_size 1 >>logs/DATASET.txt
# Round 2, Full Training, Swin UNETR backbone
CUDA_VISIBLE_DEVICES=0,1,2,3,4,5,6,7 python -W ignore -m torch.distributed.launch --nproc_per_node=8 --master_port=$RANDOM train.py --dist True --backbone swinunetr --data_root_path DATA_PATH --dataset_list DATA_LIST --batch_size 1 >>logs/DATASET.txt
```

To test a trained checkpoint:

```bash
# U-Net backbone
CUDA_VISIBLE_DEVICES=0 python -W ignore test.py --resume CHECKPOINT_PATH --backbone unet --save_dir SAVING_PATH --dataset_list DATA_LIST --data_root_path DATA_PATH --store_result >> logs/DATASET.unet.txt
# Swin UNETR backbone
CUDA_VISIBLE_DEVICES=0 python -W ignore test.py --resume CHECKPOINT_PATH --backbone swinunetr --save_dir SAVING_PATH --dataset_list DATA_LIST --data_root_path DATA_PATH --store_result >> logs/DATASET.swinunetr.txt
```

```bibtex
@article{zhang2024leveraging,
  title={Leveraging AI Predicted and Expert Revised Annotations in Interactive Segmentation: Continual Tuning or Full Training?},
  author={Zhang, Tiezheng and Chen, Xiaoxi and Qu, Chongyu and Yuille, Alan and Zhou, Zongwei},
  journal={arXiv preprint arXiv:2402.19423},
  year={2024}
}
```

This work was supported by the Lustgarten Foundation for Pancreatic Cancer Research and partially by the Patrick J. McGovern Foundation Award. We thank the MONAI team for providing open-source code to the community.